Local SEO rank tracking is essential for businesses that want to be found online. By tracking their rankings in Google Maps, businesses can identify opportunities to improve their visibility and attract more customers.

Traditionally, rank tracking has focused on organic search results, because organic search is the most common way people find businesses online. For local businesses, however, tracking Google Maps rankings can be even more important.

Google Maps is a powerful tool for local businesses. It is the go-to destination for people looking for businesses in their area. By tracking their Google Maps rankings, businesses can see how their business or location is displayed and ranked in Google Maps results, and use this information to improve their online presence and attract more customers.

Understanding Local Search Rankings

Your Google Maps ranking strongly affects how many local customers find you and how your reputation develops. Tracking it lets you:

- See how your business or location is displayed in local results.

- Identify opportunities to improve your ranking.

- Track your progress over time.

- Compare your ranking with your competitors.

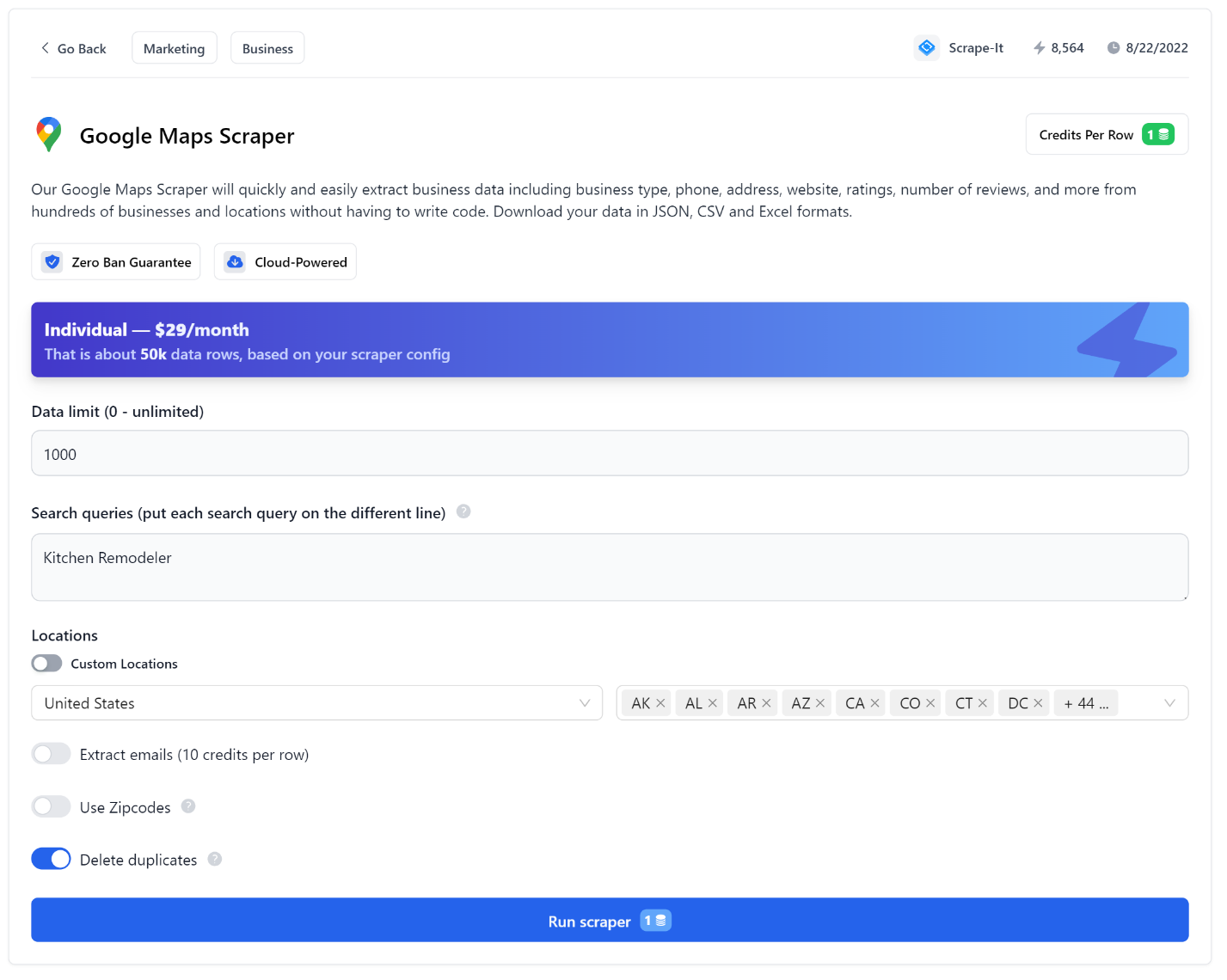

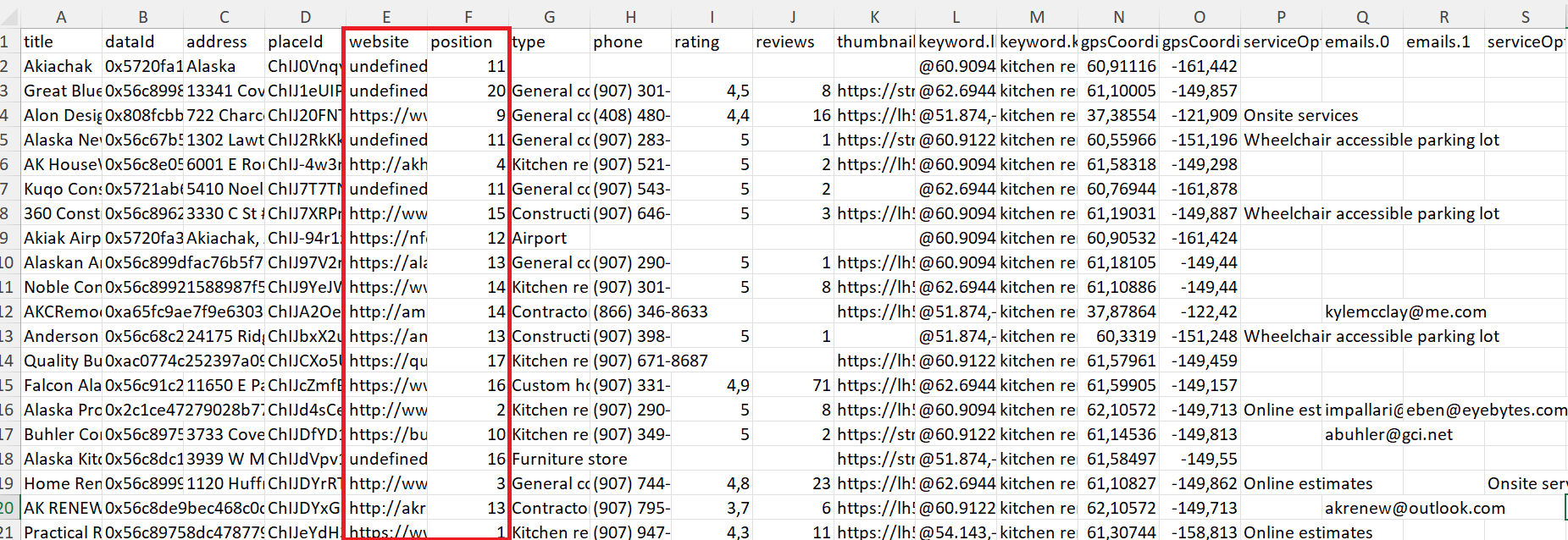


Factors Influencing Local Rankings

Several factors can affect your ranking in Google Maps. One of the most important is having a complete and optimized Google Business Profile (formerly Google My Business). This means accurate and up-to-date information about your business, photos, hours of operation, and contact information.

Positive reviews and high ratings build trust with potential customers and help you attract more of them. However, the exact impact of reviews on local rankings is still unclear: some experts believe the average rating matters more, while others believe the number of reviews matters more.

In addition to reviews and ratings, many other factors can influence a business's ranking on Google Maps. These factors include:

- Relevance: The more relevant your business is to the search query, the higher your ranking will likely be. For example, if a user searches for "pizza near me," your business is more likely to rank higher if you are a pizza restaurant.

- Distance: Google Maps considers the distance between your business and the user's location. Businesses that are closer to the user are more likely to rank higher.

- Accuracy and completeness: Google Maps uses your Google My Business profile information to rank your business. It is important to make sure that your profile is accurate and complete.

- Photos: Photos can help your business stand out from the competition. Upload high-quality photos of your business and its products or services.

- Social media: Social media activity may help signal how popular your business is. Share updates about your business on social media and encourage your customers to follow you.

- Backlinks and mentions: High-quality local backlinks from relevant and authoritative sources can improve your position. These can be links to your website or mentions of your business in local directories.

By understanding the factors that influence local rankings, you can improve your position in Google Maps and attract more potential customers to your business.

Google Maps API as a Basis for a Local Rank Tracker

To track your Google Maps ranking, you need to choose or build a suitable tool. The choice should depend on your skills, capabilities, and goals. The most flexible solution is to build your own tool on top of a Google Maps API. However, if you don't have the time or skills, you can use this ready-made script that runs directly in Google Colab.

Choose Google Maps API

To make local data scraping easier, we will use an API. First, let's decide what data we will collect and where it will come from. We have two options:

- Google Search Locals;

- Google Maps.

Both sources work for collecting local business positions, but they return different amounts of data. The Google SERP API also offers a broader range of customizable parameters, from geographic location and localization to pagination settings. You can find the full list of parameters in the Google SERP Locals API documentation.

The Google Maps Search API is easier to use and returns a larger data set, although it has fewer customizable parameters. For a local rank tracker, it is the more convenient choice: besides the larger data set, it lets you set the search location with latitude and longitude.

Following the above, we will use the Google Maps Search API as an example to build a simple script to track the positions of local businesses. To get started, you'll need to register for a Scrape-It.Cloud account and copy your API key, which can be found on the dashboard. We'll need this later.


Set Up Libraries and Parameters

Let’s write a Python script to perform the necessary actions. Python is a good choice for this task because it is easy to learn and well-suited for scraping and data manipulation. We have also uploaded the final script to Google Colab so you can use it without installing any packages or libraries on your PC.

First, import the libraries we'll need to make requests, work with files, and save data.

```python
import requests
import csv
import os
from datetime import datetime
```

Next, define variables to store the configurable parameters. The only required parameters are the API key, the keyword, and the URL of the site whose positions will be tracked. Instead of the URL, you can also use the name or ID of the place to identify it. One of the advantages of this API is that it accepts coordinates, which makes it convenient for tracking positions for keywords like "near me".

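```python
api_key = 'YOUR-API-KEY'                  # your Scrape-It.Cloud API key
keyword = "Pizza"                         # search query to track
url_to_track = 'littleitalypizza.com'     # website whose position you want to find
country = 'US'                            # country of the search
domain = 'com'                            # Google domain zone
location = '@40.7455096,-74.0083012,14z'  # latitude, longitude, and zoom level
```

Then, set the request body and headers and make the API call using the parameters above.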

```python
url = "https://api.scrape-it.cloud/scrape/google/locals"

# Request body built from the parameters defined above
payload = {
    "country": country,
    "keyword": keyword,
    "domain": domain,
    "ll": location
}

# Authenticate with the API key and send the body as JSON
headers = {'x-api-key': api_key, 'Content-Type': 'application/json'}

response = requests.post(url, headers=headers, json=payload)
```

At this stage, you can optionally view the JSON response and decide which fields you want to save along with the position number.
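Because the location is passed as coordinates, you can repeat the same request from several points around your business to see how your position changes across a neighborhood. The sketch below generates a square grid of `ll` strings in the same `@latitude,longitude,zoomz` format as the `location` value above. The helper names `build_ll` and `grid_points`, the 1 km spacing, and the flat-earth approximation of about 111.32 km per degree of latitude are assumptions for illustration, not part of the API.

```python
import math


def build_ll(lat, lng, zoom=14):
    """Format coordinates like the example's location value: '@lat,lng,zoomz'."""
    return f"@{lat:.7f},{lng:.7f},{zoom}z"


def grid_points(lat, lng, size=3, spacing_km=1.0, zoom=14):
    """Return size x size ll strings centered on (lat, lng), spacing_km apart.

    Uses a flat-earth approximation (about 111.32 km per degree of latitude),
    which is adequate for grids a few kilometers wide.
    """
    km_per_deg_lat = 111.32
    km_per_deg_lng = 111.32 * math.cos(math.radians(lat))
    half = (size - 1) / 2
    points = []
    for row in range(size):          # south to north
        for col in range(size):      # west to east
            dlat = (row - half) * spacing_km / km_per_deg_lat
            dlng = (col - half) * spacing_km / km_per_deg_lng
            points.append(build_ll(lat + dlat, lng + dlng, zoom))
    return points
```

To use it, loop over `grid_points(40.7455096, -74.0083012)`, set `payload["ll"]` to each value, send the request, and record the position found for each point. Whether the API ranks results for a given `ll` exactly as Google Maps would for a user standing there is an assumption worth checking against a few manual searches.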

Find the Position of the Tracked Site

Before proceeding, check that the API call was successful and that data was returned. Then parse the JSON response, verify that it contains the `locals` attribute, and iterate over the results looking for the website you want. When you find it, print its position to the console, define the data you want to save along with the keys that identify it, and exit the loop after the first match.

```python
# Continue only if the API call succeeded
if response.status_code == 200:
    # Parse the JSON response and store it for later use
    result = response.json()

    # Verify that the "locals" attribute was returned
    if "scrapingResult" in result and "locals" in result["scrapingResult"]:
        # Iterate over the local results, looking for the tracked website
        for local in result["scrapingResult"]["locals"]:
            # Some listings have no website, so fall back to an empty string
            if url_to_track in (local.get("website") or ""):
                # Get the position and print it to the console
                position = local.get("position")
                print(f"The position of the site {url_to_track} in Google Maps: {position}")

                # Define the data to save, along with the keys that identify it
                selected_fields = {
                    "keyword": keyword,
                    "position": position,
                    "website": local.get("website"),
                    "date": datetime.now().strftime('%Y-%m-%d %H:%M:%S'),
                    "title": local.get("title"),
                    "placeId": local.get("placeId"),
                    "dataId": local.get("dataId"),
                    "thumbnail": local.get("thumbnail"),
                    "phone": local.get("phone"),
                    "address": local.get("address")
                }

                # Exit the loop after the first match
                break
else:
    print(f"Request failed with status code {response.status_code}")
```

As an optional extra, you can print the data to the console, add other attributes to the data you save, or remove attributes you don't need.
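The `url_to_track in website` check is a substring match, so a tracked domain like `littleitalypizza.com` would also match a competitor at `notlittleitalypizza.com`. A stricter alternative, sketched below, compares host names instead. `normalize_domain` and `find_listing` are hypothetical helpers; stripping only a leading `www.` is a design choice, so a listing that links to another subdomain (for example an ordering subdomain) would not match.

```python
from urllib.parse import urlparse


def normalize_domain(website):
    """Return the bare host name of a URL (lowercase, no 'www.'), or '' if missing."""
    if not website:
        return ""
    # urlparse only recognizes the host when a scheme is present
    if "://" not in website:
        website = "http://" + website
    host = urlparse(website).hostname or ""
    return host[4:] if host.startswith("www.") else host


def find_listing(locals_list, url_to_track):
    """Return the first local result whose website is on the tracked domain, or None."""
    target = normalize_domain(url_to_track)
    for local in locals_list:
        if normalize_domain(local.get("website")) == target:
            return local
    return None
```

In the script above, you could call `find_listing(result["scrapingResult"]["locals"], url_to_track)` instead of the loop and read `position` from the returned dictionary when it is not `None`.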

Storing Local Ranking Data

Let's save the data to a CSV file. First, check whether a file with previously saved positions already exists. If it doesn't, we will create a new file and write the header row. Then open the file in append mode, create a writer object, and write the data.

```python
csv_filename = 'google_maps_positions.csv'

# Check whether the file already exists, so the header is written only once
file_exists = os.path.exists(csv_filename)

# Append mode ('a') adds a new row on every run.
# Use 'w' instead to overwrite the file each time (and then always write the header).
with open(csv_filename, mode='a', newline='', encoding='utf-8') as file:
    # Create a writer object that maps dictionary keys to CSV columns
    writer = csv.DictWriter(file, fieldnames=selected_fields.keys())

    # If the file did not exist previously, write the header row
    if not file_exists:
        writer.writeheader()

    # Write the collected data as a new row
    writer.writerow(selected_fields)
```

Note that the script ends with a `NameError` if the site is not found or the request fails, because `selected_fields` is never defined. To avoid this, place the data-saving code in the same place where you process the match, inside the loop.
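Because each run appends a row, the CSV gradually becomes a ranking history, which is what lets you track your progress over time. The sketch below reads it back, assuming the column names written by the script above (`keyword`, `date`, `position`). `parse_history`, `load_history`, and `position_change` are illustrative helper names, not part of any library.

```python
import csv


def parse_history(rows, keyword):
    """Return (date, position) pairs for one keyword, oldest first."""
    history = []
    for row in rows:
        # Skip other keywords and rows where no position was recorded
        if row.get("keyword") == keyword and row.get("position"):
            history.append((row["date"], int(row["position"])))
    # Dates are saved as '%Y-%m-%d %H:%M:%S', so sorting the strings is chronological
    history.sort()
    return history


def load_history(csv_filename, keyword):
    """Read the tracking CSV and return the history for one keyword."""
    with open(csv_filename, newline="", encoding="utf-8") as file:
        return parse_history(csv.DictReader(file), keyword)


def position_change(history):
    """Positive result means the listing moved up (a smaller number is better)."""
    if len(history) < 2:
        return 0
    return history[0][1] - history[-1][1]
```

For example, `position_change(load_history('google_maps_positions.csv', 'Pizza'))` returns how many places the listing gained between the first and the latest saved run.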


Continuous Monitoring Mechanism

To get the results once, simply run the script. To track positions over time, you need to decide how often it should run and which tool will launch it. For example, we previously showed how to add a task to Cron to track your Google SERP rankings in PHP.

If you are using Windows, you can use the Task Scheduler to configure the script to run at a specific time or under certain conditions (such as when the computer starts). The Task Scheduler is easy to use and requires no additional skills.
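If you would rather keep scheduling inside Python on a machine that stays on, a fixed-interval loop is enough for a simple tracker. This is a minimal standard-library sketch, not a replacement for Cron or Task Scheduler: the loop stops whenever the process or the computer does. `run_periodically` is a hypothetical helper, and `track_position` in the usage note stands for a function wrapping the script above.

```python
import time


def run_periodically(task, interval_seconds, runs=None):
    """Call task() every interval_seconds; stop after `runs` calls if given."""
    completed = 0
    while runs is None or completed < runs:
        started = time.monotonic()
        try:
            task()
        except Exception as error:  # keep the loop alive if one run fails
            print(f"Run failed: {error}")
        completed += 1
        if runs is not None and completed >= runs:
            break
        # Subtract the time the run took so runs start at a steady interval
        elapsed = time.monotonic() - started
        time.sleep(max(0.0, interval_seconds - elapsed))
    return completed
```

For a daily check, you would call `run_periodically(track_position, 24 * 60 * 60)`.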

Alternative Methods for Google Maps Rank Tracking

There are other ways to create a local rank checker besides the Google Maps API. These methods do not require programming skills. You can choose the best method for your needs, depending on whether you need to check the data once or periodically.

Get Position with No-Code Scrapers

The simplest and fastest way to get the current position of a local business on Google Maps is to use a ready-made no-code Google Maps scraper. To use it, specify the location and keywords and launch the scraper.

After the process is complete, you can download the scraping results in CSV, JSON, or XLSX format. The collected data includes each listing's current position and website address, so you can find a specific website's position with a simple filter.

This method is one of the fastest and simplest, and it doesn't require any additional tools or skills.
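If you later want to look up the position in an exported CSV automatically instead of filtering it in a spreadsheet, a few lines of Python are enough. This sketch assumes the export has columns named `website` and `position`; check the header of your actual file and pass the real column names if they differ. `position_in_export` is a hypothetical helper.

```python
import csv


def position_in_export(lines, domain, website_column="website", position_column="position"):
    """Return the position of the first row whose website contains `domain`, or None.

    `lines` can be an open CSV file or any iterable of CSV lines.
    """
    for row in csv.DictReader(lines):
        if domain in (row.get(website_column) or ""):
            return row.get(position_column)
    return None
```

Open the downloaded file with `open(path, newline="", encoding="utf-8")` and pass the file object together with your domain, for example `littleitalypizza.com`. The position comes back as a string, as it is stored in the CSV.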

Use Integration Services

Integration services let you implement the same algorithm as the API-based script, but without strong programming skills: instead of writing code, you build the workflow visually from ready-made blocks.

The most popular integration services are Zapier and Make.com (formerly Integromat). We have already provided a comprehensive guide on Zapier methods. In addition, on our website, you can find articles on how to use Zapier to work with Google Maps API.

Explore Google Sheets

The last option for obtaining the current position of a website in Google Maps is to use Google Sheets. You can create your own add-ons or extensions or use existing ones. For example, you can use the APIs already discussed to create a Google Maps Rank Checker.

Alternatively, you can use the ready-made template on rank tracking we discussed in the article. This template allows you to get data not only from Google Maps but also from Google SERP Locals.

Challenges and Solutions

You will encounter several challenges while developing and using a Google Maps rank checker. Some are technical, others are more general. We discuss them below and explain how to overcome them.


Overcoming Technical Hurdles

Creating a Google Maps Rank Tracker can be challenging, as there are some technical hurdles to overcome.

One of the biggest challenges is scraping Google Maps to collect the necessary data. Google has a policy against scraping, so avoiding blocks is essential. To overcome this, you can use our Google Maps Search API, which returns Google Maps search results in a structured format, making it easy to collect the data you need without getting blocked.

Another challenge is that the page structure can change frequently. This is done for security reasons, to combat bots, and to improve the displayed data. As a result, if you maintain your own scraper, you will need to update it regularly to keep it working properly.

To avoid this, you can use an API that collects all the necessary data and returns it in a ready-to-use format. Your position tracking tool then keeps working regardless of changes to the page structure, because adapting to those changes is the API provider's job. The API provides a powerful and flexible way to track your rankings quickly and easily.
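Even with an API, an individual request can occasionally fail, for example because of rate limiting or a temporary server error. Below is a minimal retry sketch with exponential backoff. Which status codes count as temporary (429 and 5xx here) is an assumption, and `send_with_retries` is a hypothetical helper, not part of the `requests` library.

```python
import time

# Status codes treated as temporary (assumption: rate limiting and server errors)
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def send_with_retries(send, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call send() until it returns a non-retryable response or attempts run out.

    `send` is any zero-argument callable returning an object with a
    status_code attribute; the last response is returned either way.
    """
    response = None
    for attempt in range(attempts):
        response = send()
        if response.status_code not in RETRYABLE_STATUS:
            return response
        if attempt < attempts - 1:
            # Exponential backoff: base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * 2 ** attempt)
    return response
```

In the tracker, you would wrap the existing call as `send_with_retries(lambda: requests.post(url, headers=headers, json=payload, timeout=60))`. Note that `requests` raises exceptions such as `requests.exceptions.Timeout` instead of returning a response, and this sketch does not catch them.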

Addressing Common Implementation Challenges

Beyond the technical challenges, there are other things to consider when developing a Google Maps Rank Tracker. For example, it is important to plan in advance which regions and keywords your queries will cover. You also need to decide how often to collect the data and which data you need beyond the website URL and position, such as reviews, prices, and ratings.
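One way to make that plan explicit is to keep keywords and locations in two lists and generate one request per combination. The sketch below builds request bodies in the same shape as the `payload` used earlier. The `KEYWORDS` and `LOCATIONS` values are hypothetical placeholders (only the coordinates come from the earlier example), and `build_jobs` is an illustrative helper.

```python
from itertools import product

# Hypothetical tracking plan: the keywords and named locations you decided to monitor
KEYWORDS = ["Pizza", "pizza delivery"]
LOCATIONS = {
    "example-location": "@40.7455096,-74.0083012,14z",  # coordinates from the earlier example
}


def build_jobs(keywords, locations, country="US", domain="com"):
    """Return one (label, payload) pair per keyword/location combination."""
    jobs = []
    for keyword, (label, ll) in product(keywords, locations.items()):
        payload = {"country": country, "keyword": keyword, "domain": domain, "ll": ll}
        jobs.append((f"{keyword} @ {label}", payload))
    return jobs
```

Each payload can then be sent exactly like the single request in the script, and the label can be saved alongside the position so that results for different regions stay distinguishable in the CSV.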

Another consideration is the data source. As mentioned, you can get data about local businesses from Google Maps or Google SERP Locals. However, the amount of data provided and additional information available varies.

Conclusion

In this guide, we've explored how to create and use a Google Maps Rank Tracker. From the fundamental factors that influence local search rankings to the technical details of the Google Maps Search API, it provides a simple Python script for continuous monitoring that lets you track your Google Maps positions with little effort.

Recognizing the varying skill levels across businesses, we've explored alternative methods, including no-code scrapers, integration services, and Google Sheets integration, ensuring that businesses of all sizes can adopt an approach that aligns with their needs and expertise.

In conclusion, whether you choose a custom solution using the Google Maps API or code-free alternatives, continuous monitoring and strategic adaptation are key.