Scraping is a very useful tool in almost any field. If you need data to compare your and your competitors' prices, or if you want to collect leads, you'll get the job done faster if you use scraping tools.

However, there are usually two ways to get a scraper: use an off-the-shelf universal tool, such as a Chrome extension, or write your own. In the first case, you will be very limited in what you can do, and in the second, you will need programming skills.

But there is a way to build a web scraper tailored to your needs, even without programming knowledge. To do this, you need Zapier, an application integration system. Today, we will show you in detail how to solve your tasks using its capabilities.

Understanding Zapier: Simplifying Task Automation

As we said before, Zapier is an online no-code automation platform that allows you to connect different apps and services so that they can interact with each other and perform specific actions without having to write any code.

With Zapier, you can integrate over 2,000 applications and services, including email, social media, project management tools, CRM systems, cloud file storage, and more. This allows you to automate routine tasks and workflows, save time, and improve efficiency by moving data and tasks between different tools and platforms.

Creating Your First Zapier Web Scraper

Zapier provides the ability to create automated "zaps" consisting of triggers and actions.

Triggers are events or conditions that start a zap, for example, a new email, a link click, a new row in Google Sheets, or a new order from an online store.

Actions are tasks or operations performed in response to a trigger, for example, sending a notification to a cell phone or adding data to a table or a CRM system.
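To make the trigger and action relationship concrete, here is a minimal Python sketch. It is only a toy model for illustration, not how Zapier is implemented: the `Zap` class, the event dictionaries, and the helper functions are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


@dataclass
class Zap:
    """A toy model of a zap: one trigger condition plus ordered actions."""
    trigger: Callable[[Event], bool]
    actions: List[Callable[[Event], Event]] = field(default_factory=list)

    def handle(self, event: Event) -> bool:
        """Run every action in order, but only if the trigger matches."""
        if not self.trigger(event):
            return False
        for action in self.actions:
            event = action(event)
        return True


log: List[str] = []


def is_new_order(event: Event) -> bool:
    # Trigger: fires only for events of type "new_order"
    return event.get("type") == "new_order"


def notify(event: Event) -> Event:
    # Action 1: pretend to send a notification
    log.append(f"Notify: order {event['id']}")
    return event


def add_to_table(event: Event) -> Event:
    # Action 2: pretend to add a row to a table
    log.append(f"Row added for order {event['id']}")
    return event


zap = Zap(trigger=is_new_order, actions=[notify, add_to_table])
zap.handle({"type": "new_order", "id": 17})  # trigger matches, both actions run
zap.handle({"type": "new_email", "id": 3})   # trigger does not match, nothing runs
print(log)
```

The point of the sketch is the ordering: nothing runs until the trigger matches, and each action receives the data produced by the previous step.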

So, let's create our first zap. To do this, go to the Zapier website and sign up. After that, the site will prompt you to create your first zap. Accept and get started.

Add a trigger

The first thing we need to do is add a trigger. A trigger can be any event, such as a new email or a new row in a table, or we can use a webhook.

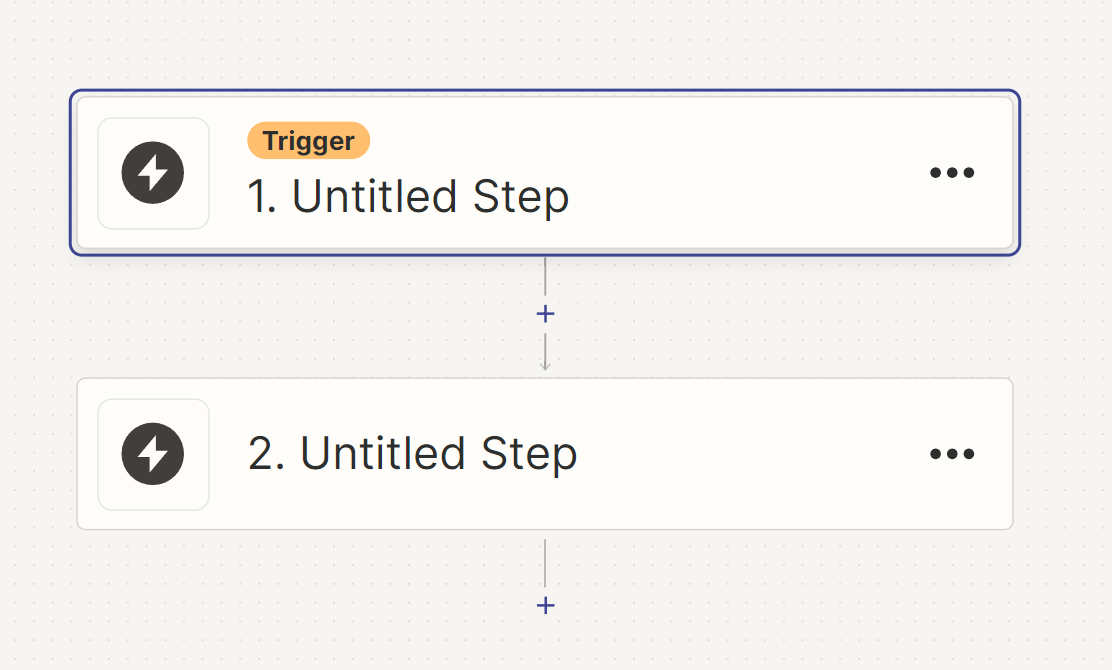

It all starts with a trigger. Look at the template that Zapier automatically generates when you create a zap. Here you see 2 steps, and the first is marked as a trigger.

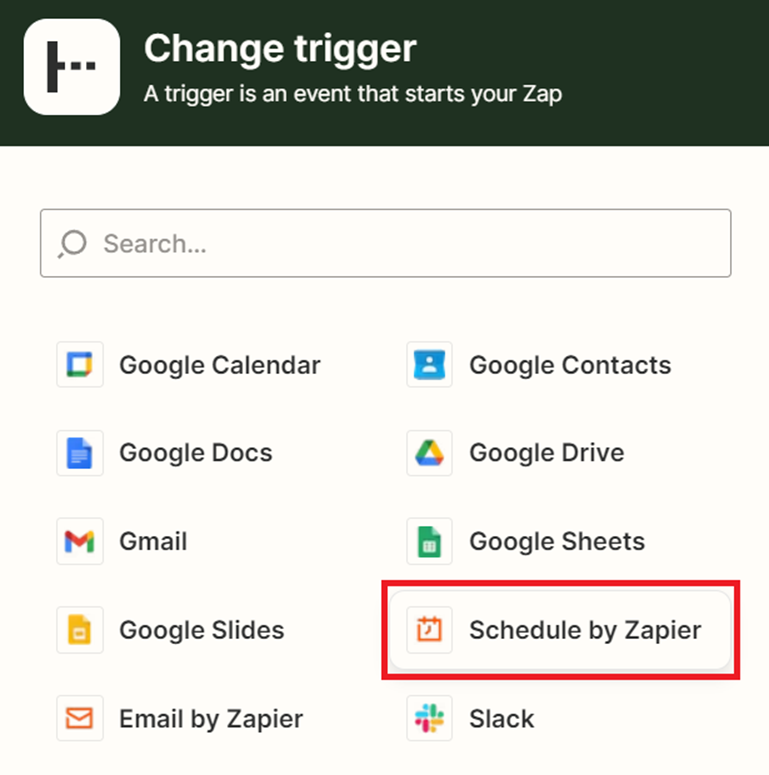

Click on it to change the trigger. We will choose schedule because it is very convenient for simple zaps. When you use a schedule, you can be sure that your zap will run at the frequency you want.

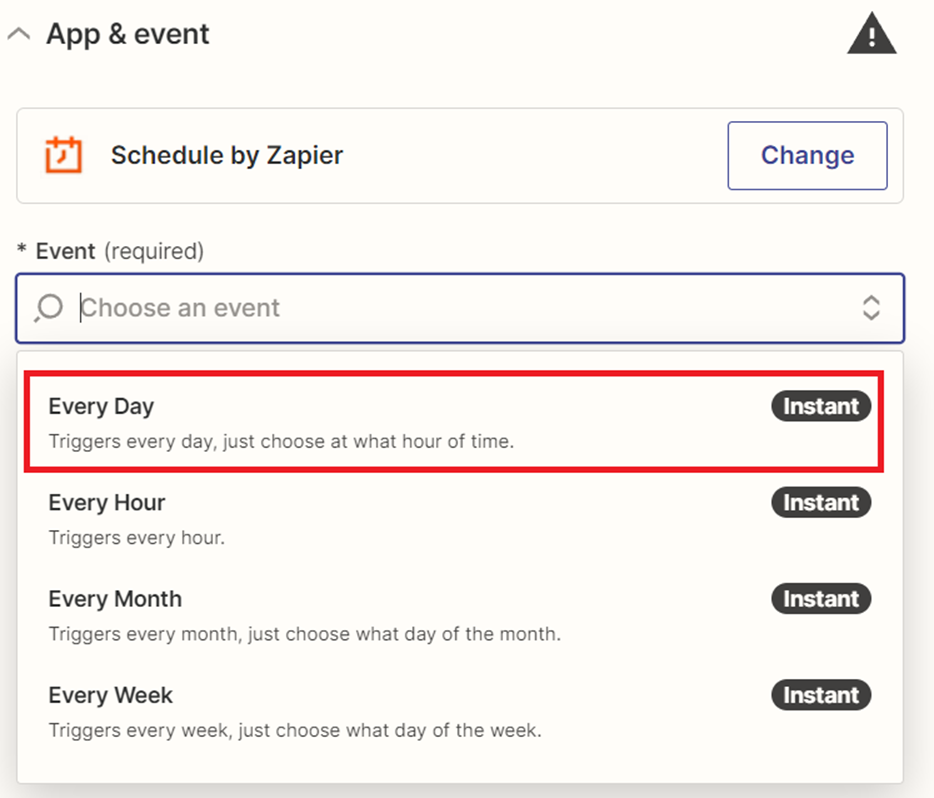

After selecting a schedule as the trigger for your zap, you will need to decide on the frequency at which you want it to run. We will choose once a day as an example.

Zapier also allows you to choose whether you want your zap triggered on weekends or not. If you select no, it will only run Monday through Friday. Then select the time at which the Zap will be performed.
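The schedule logic described above (once a day, at a fixed time, optionally skipping weekends) can be sketched in a few lines of Python. This is an illustration of the rule only, not Zapier's scheduler; the function name `next_run` is hypothetical, and time zones are ignored for simplicity.

```python
from datetime import datetime, time, timedelta


def next_run(now: datetime, run_at: time, include_weekends: bool) -> datetime:
    """Return the first moment strictly after `now` when a daily schedule fires."""
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:
        # Today's slot has already passed, so start from tomorrow
        candidate += timedelta(days=1)
    # weekday(): Monday is 0, Saturday is 5, Sunday is 6
    while not include_weekends and candidate.weekday() >= 5:
        candidate += timedelta(days=1)
    return candidate


friday_evening = datetime(2024, 3, 1, 18, 0)  # 1 March 2024 was a Friday
print(next_run(friday_evening, time(9, 0), include_weekends=False))
print(next_run(friday_evening, time(9, 0), include_weekends=True))
```

With weekends disabled, a zap checked on Friday evening next runs on Monday morning; with weekends enabled, it runs on Saturday morning.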

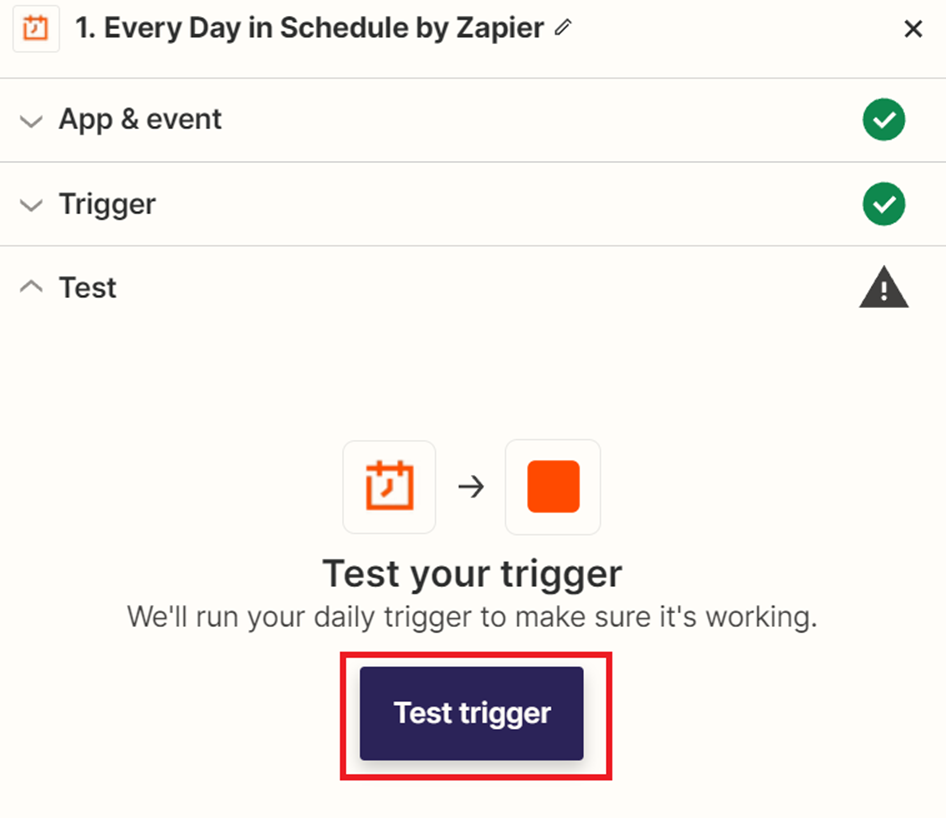

This completes the trigger configuration, and you can now test whether it works correctly.

If the result is correct, you can go on to the next step of creating your zap. If not, test the trigger again, and if that doesn't help, repeat all the steps from the beginning.

Now let's see how to build a web scraper using Zapier and HasData. To do this, let's finish the trigger and create the action.

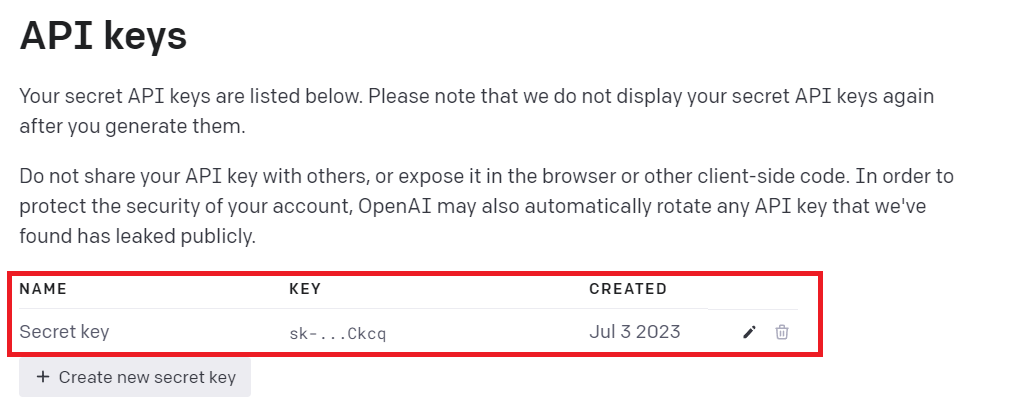

Get HasData API key

Before we add the action, let's get the data we'll need when using it. You will need your unique API key to work with our web scraping API. Let us show you where to find it.

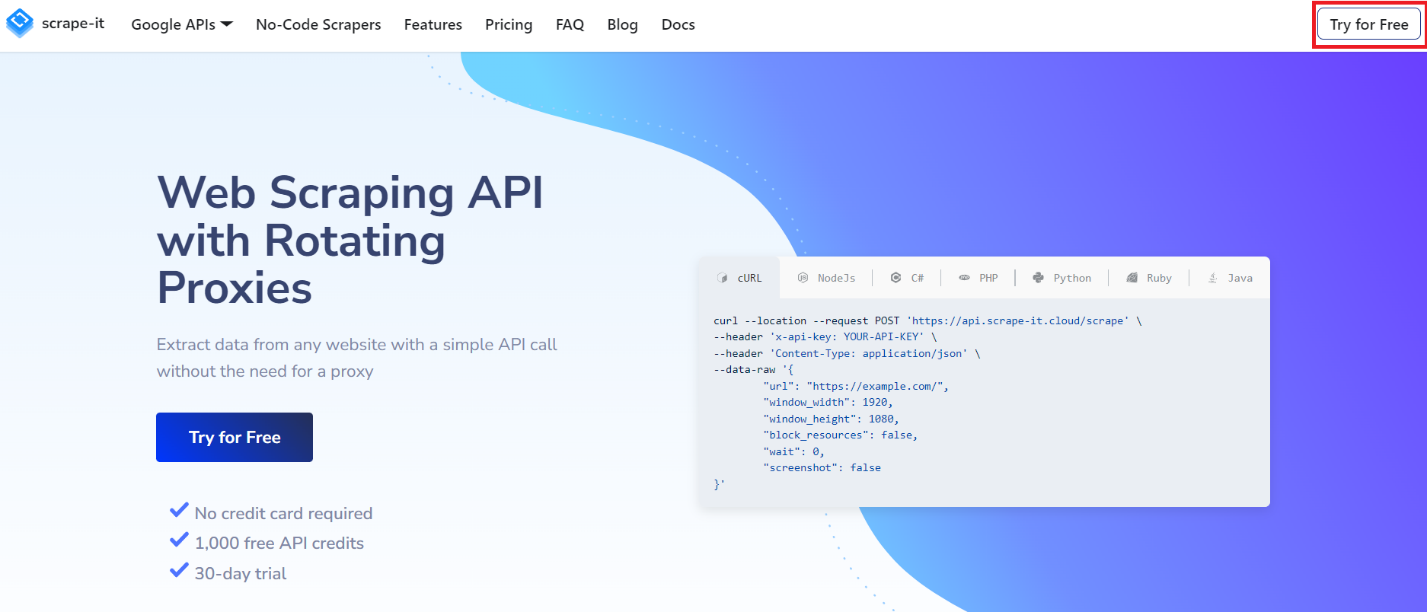

Go to our website and sign up by clicking the "Try for free" button at the top right corner of the screen. We provide a free trial period without requiring personal data during signing up. This allows you to experience the convenience of our service firsthand.

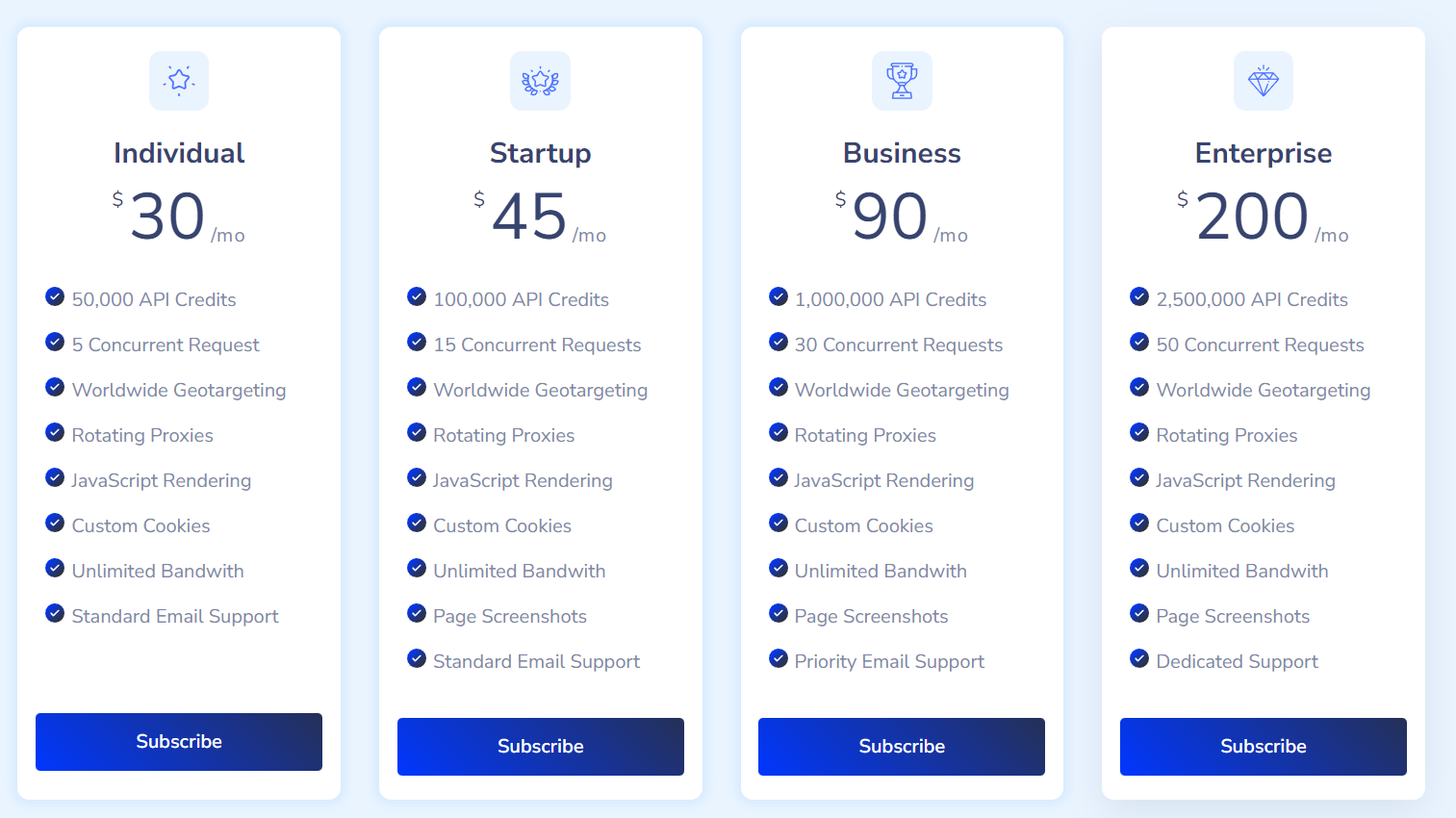

If you want to learn about our pricing plans, scroll down to the bottom of the page for all the details.

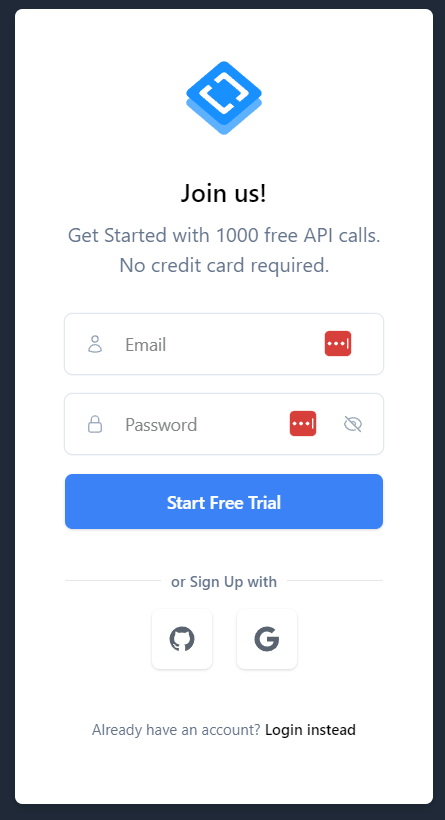

So, let's get back to signing up. All you need to do is enter your email and create a password. After that, open your inbox and confirm your registration. That's all, and now you can use our service.

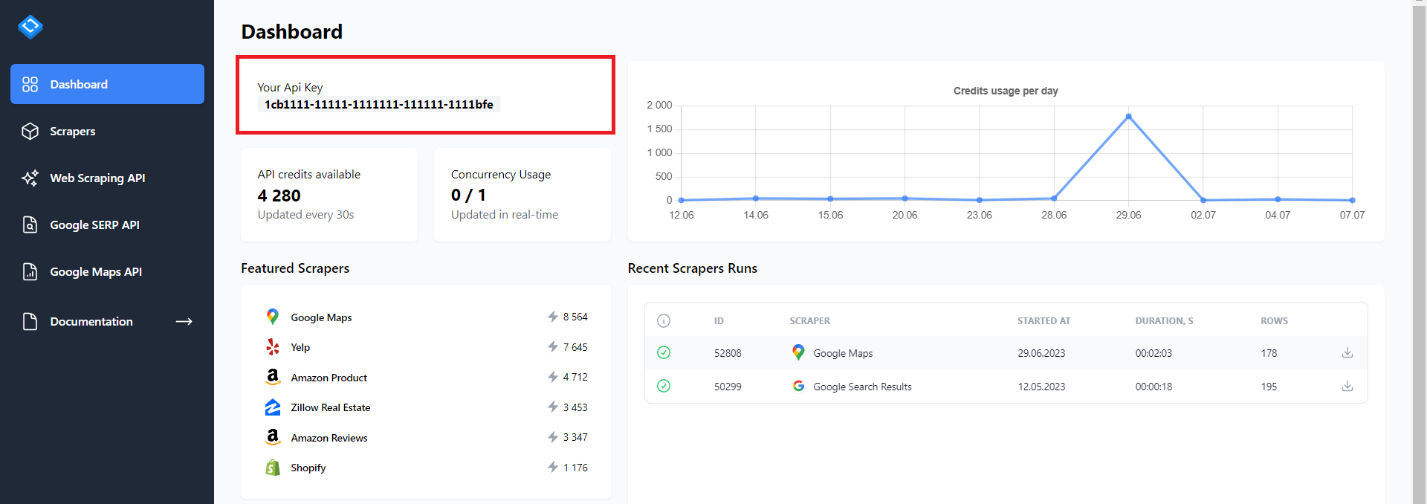

After signing up, you will be taken to the Dashboard tab of your account, where you can find your API key and view your credit balance and usage statistics. You can also browse through the other tabs, including the Scrapers tab, where you can find our no-code scrapers for various popular resources.

So, copy your API key and return to creating our Zap.

Connecting HasData API to Zapier

Using our API allows you to automate retrieving data from web pages and Google Maps and collect information from Google search pages. This can be a helpful tool for various tasks, including data parsing, lead generation, web page monitoring, and gathering information for analysis.

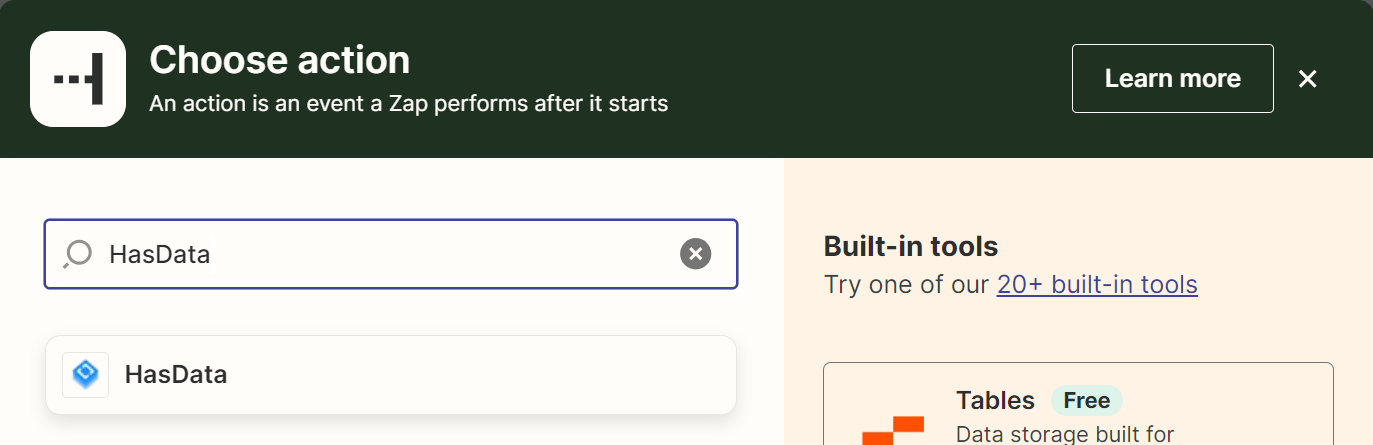

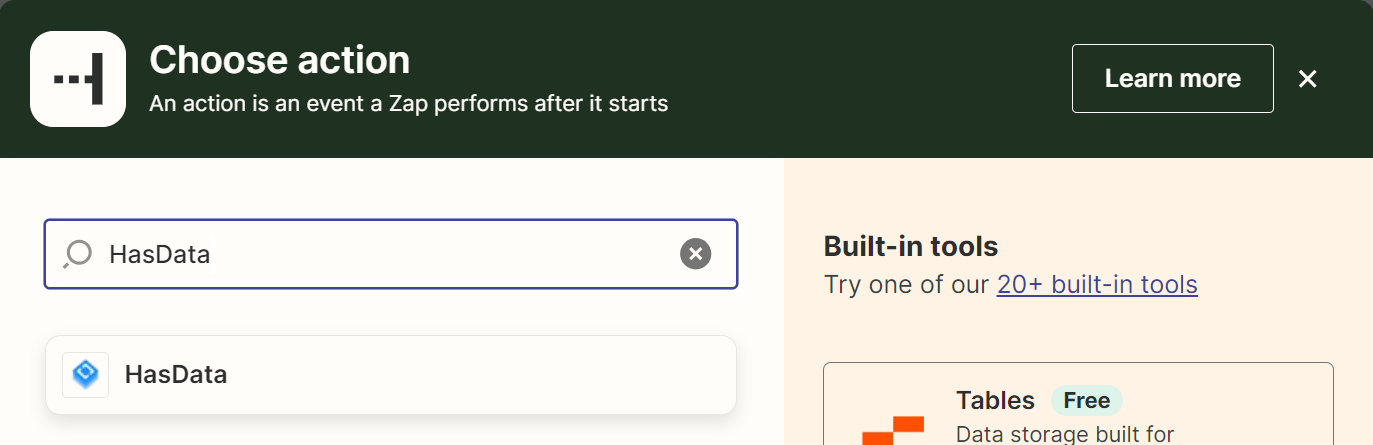

So, similar to the trigger, let's change the action. Use the search to find HasData.

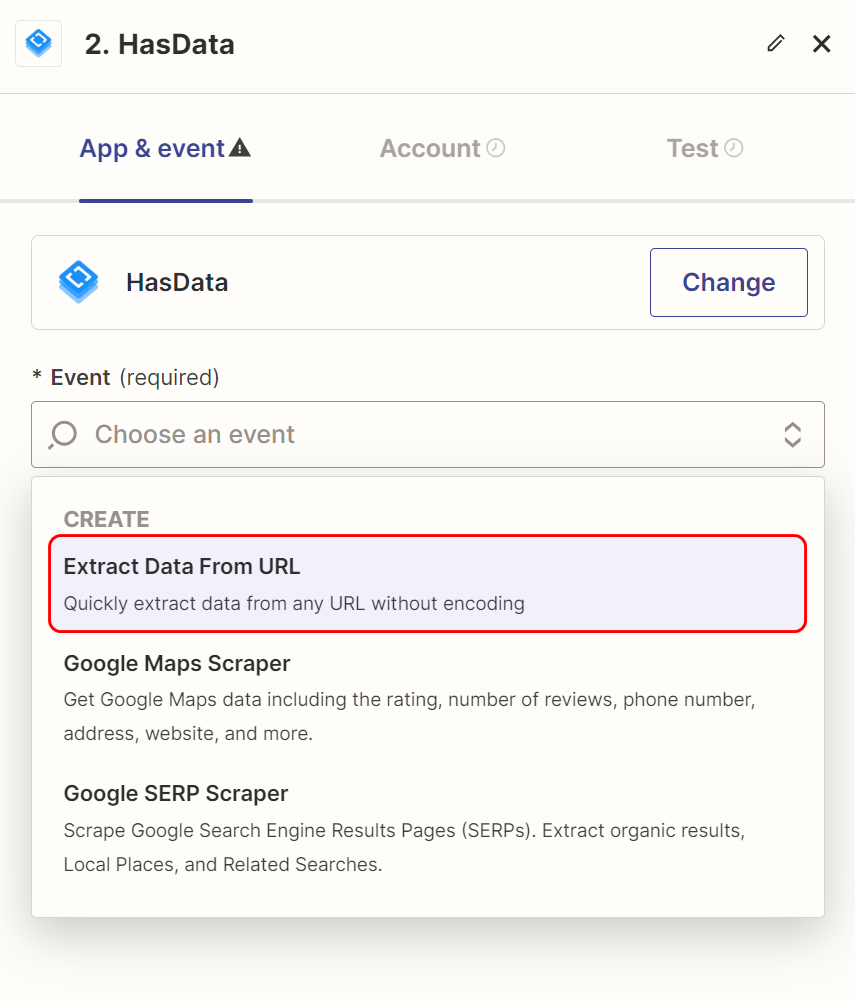

HasData provides options for extracting different information. Here is a brief description of each of the three types of information that can be extracted:

- Extract Data From URL. This feature lets you quickly extract data from any URL without coding. It allows you to extract structured data from web pages such as headers, text, tables, images, and other elements. You can specify HTML selectors to show which page elements you need to extract.

- Google Maps Scraper. This feature lets you retrieve data from Google Maps, including rating, number of reviews, phone number, address, website, and other information. You can specify a specific address or keywords to search and retrieve data about relevant items on the map. If you want to know more about it, you can read our article on the topic we wrote earlier.

- Google SERP Scraper. With this feature, you can extract search results from Google's search engine results pages (SERPs). You can get information about organic search results, local locations, and related searches. This is useful if you need to automate collecting data from Google search results for analysis or other purposes.

Let's focus on the universal option: extracting data from any website by its URL.

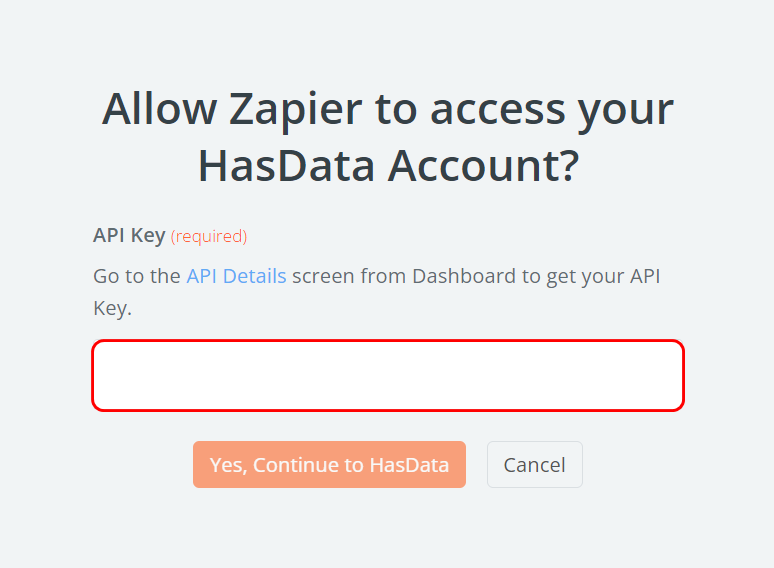

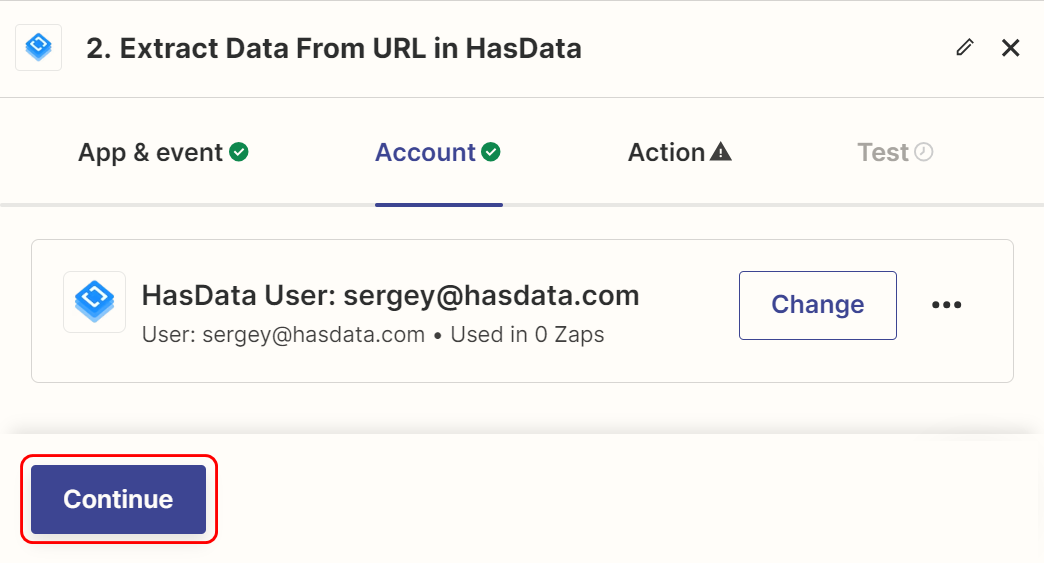

Now you need to connect your HasData account to Zapier. To do this, we only need the API key, which we showed earlier in the Dashboard tab of the account.

Copy your API key and paste it into the field that appears. Don't worry, you can always disconnect your account from Zapier or connect a different one.

Now you will see the name of your account instead of the connection field, which means that you have successfully connected your HasData account to Zapier.

Once your account is connected, you can move on to the next step to learn how to scrape websites.

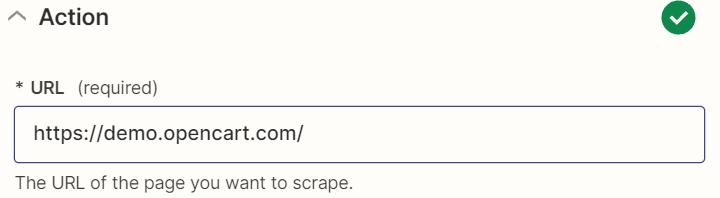

Add Link

We won't go into detail about all the fields, but we will focus on the essentials. Each field has a description, so you can fill in any of them yourself if needed. We will cover only the required fields and the most commonly used ones. You can leave the optional fields empty.

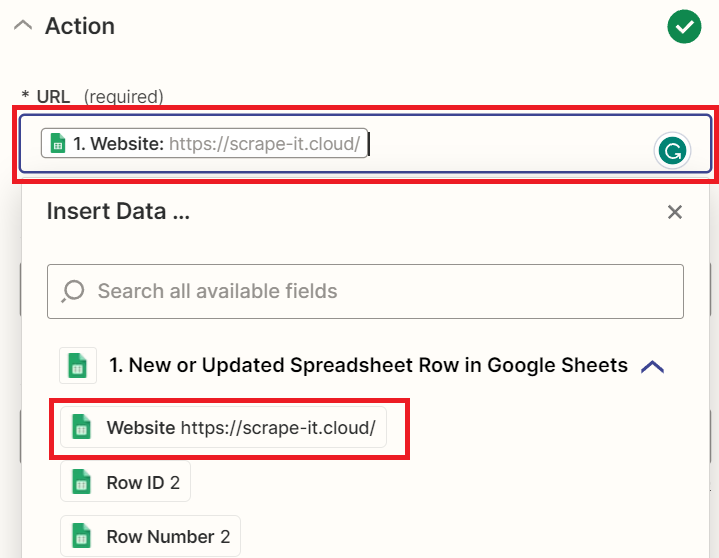

And the first field you need to fill out is a link to the target site you want to scrape data from.

You cannot leave this field empty, as it is a required parameter.

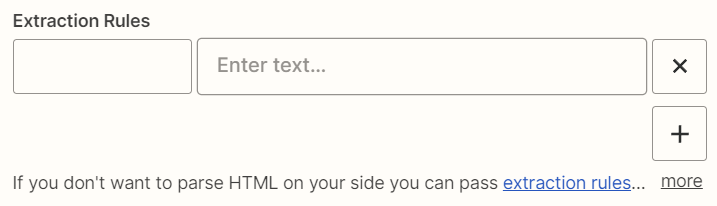

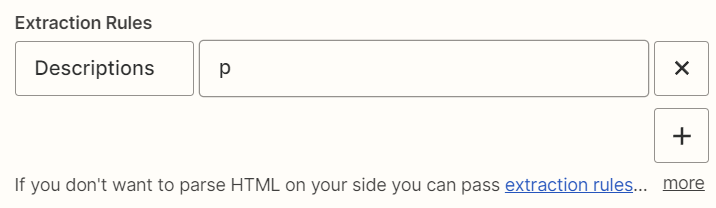

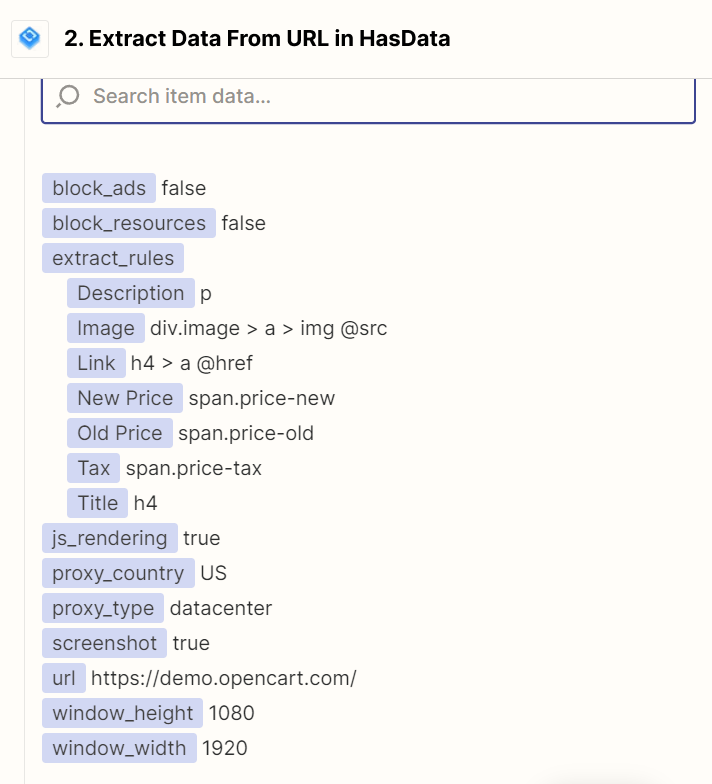

Specify Extraction Rules

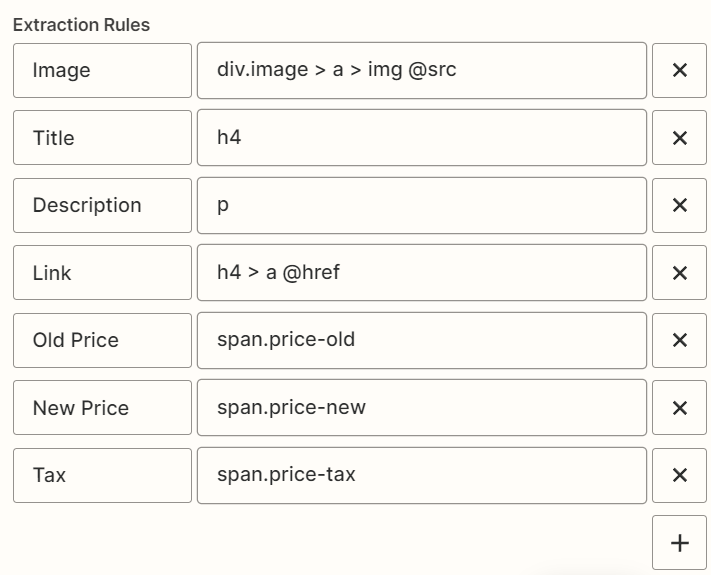

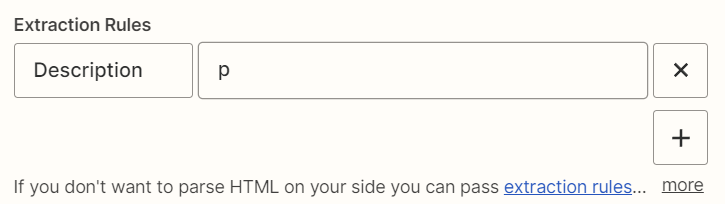

The following field is Extraction Rules, which allows you to extract specific data from a page rather than all of its HTML code.

In the first field, you should specify an optional name for the data group; in the second field, you should specify their CSS selector. For example, if we want to get the descriptions that are stored in the <p> tag, we can name them "Descriptions" in the first field and just write "p" in the second one.
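As a rough illustration of what a rule such as "Descriptions" with the selector "p" returns, here is a small Python sketch built on the standard library's `html.parser`. It is not the HasData implementation and supports only bare tag names, not full CSS selectors; the class `TagTextCollector`, the function `apply_rules`, and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser
from typing import Dict, List


class TagTextCollector(HTMLParser):
    """Collect the text of every element with a given tag name."""

    def __init__(self, tag: str) -> None:
        super().__init__()
        self.tag = tag
        self.depth = 0  # greater than 0 while inside a matching element
        self.current: List[str] = []
        self.results: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == self.tag:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag == self.tag and self.depth > 0:
            self.depth -= 1
            if self.depth == 0:
                # The element is closed: store its accumulated text
                self.results.append("".join(self.current).strip())
                self.current = []

    def handle_data(self, data):
        if self.depth > 0:
            self.current.append(data)


def apply_rules(html: str, rules: Dict[str, str]) -> Dict[str, List[str]]:
    """Apply rules of the form {name: tag}. Only bare tag selectors are supported."""
    output = {}
    for name, tag in rules.items():
        collector = TagTextCollector(tag)
        collector.feed(html)
        output[name] = collector.results
    return output


page = "<h4>Laptop</h4><p>Thin and light.</p><p>Long <b>battery</b> life.</p>"
print(apply_rules(page, {"Descriptions": "p"}))
```

The result is a dictionary keyed by the rule name, with one list entry per matching element. The sketch assumes every `<p>` has a closing tag, which real pages do not always guarantee.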

Extraction Rules may seem complicated, but they are not. To show this, let's make Extraction Rules for the data from this demo site.

Find CSS Selectors

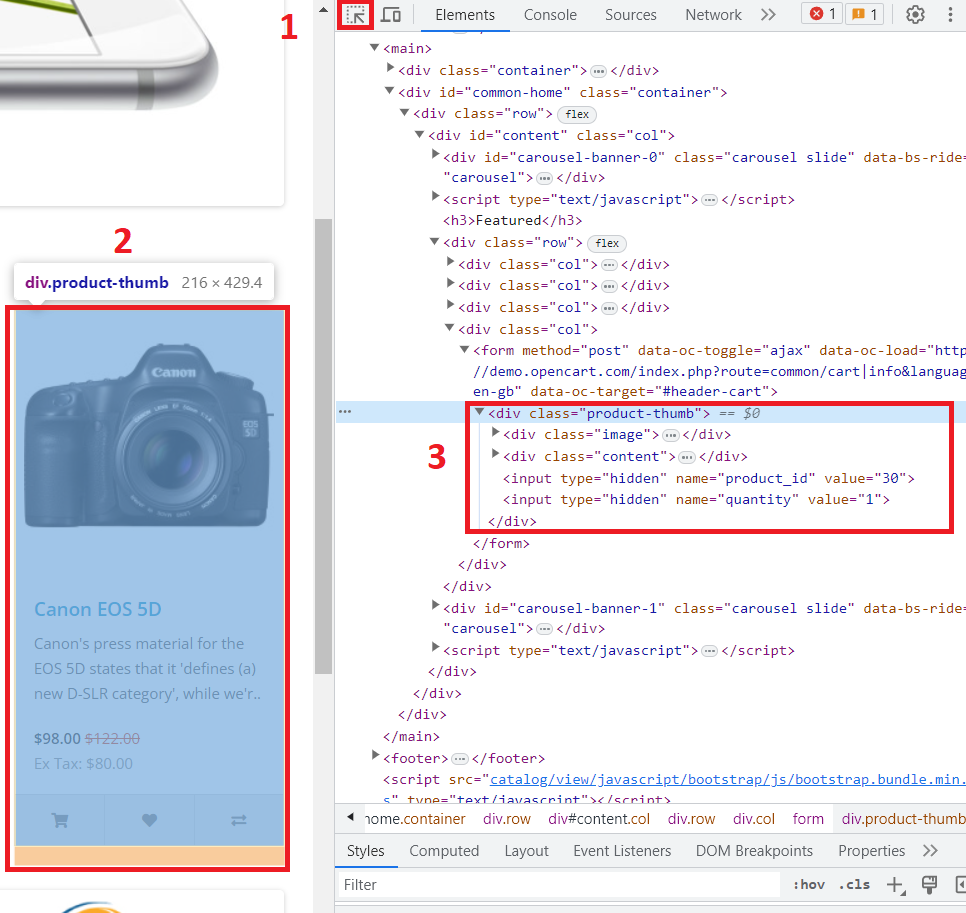

First, we need to find the CSS selectors for the elements we want to get. To do this, go to the page and open DevTools (press F12, or right-click anywhere on the page and select Inspect). The page's full HTML code is displayed here. To find the CSS selector of each element, we need to inspect the elements one by one.

To do this, let's use the built-in features of DevTools. Turn on the element picker and click the element whose CSS selector you want to find.

Let's select each element and remember the tags where they are.

Here, we can see that all the necessary data is stored in the parent tag "div" with the class name "col," which contains all the products on the page. It includes the following information:

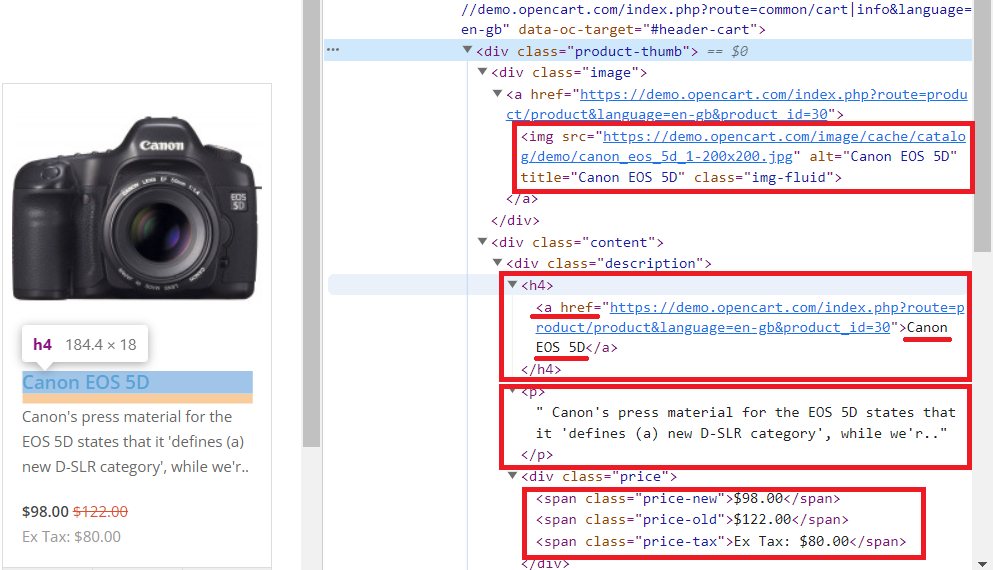

- The "div.image > a > img @src" CSS selector holds the link to the product image. If we used too generic a CSS selector, such as "img @src", we would get every image on the page.

- The "a" tag contains the product link in the "href" attribute.

- The "h4" tag contains the product title.

- The "p" tag contains the product description.

- Prices are stored in the "span" tag with various classes:

  - "price-old" for the original price.

  - "price-new" for the discounted price.

  - "price-tax" for the tax.
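The selectors found above can be written down as a simple name-to-selector mapping before they are entered into the form. The Python sketch below is only a way to organize them: the rule names on the left are our own labels, the class names are written in lowercase on the assumption that the page uses lowercase classes (copy them exactly as DevTools shows them), and `split_rule` is a hypothetical helper that separates the selector from the "@attribute" part used in this article's notation.

```python
import json
from typing import Dict, Optional, Tuple

# Labels are arbitrary; selectors follow the elements listed above.
extraction_rules: Dict[str, str] = {
    "image": "div.image > a > img @src",
    "link": "a @href",
    "title": "h4",
    "description": "p",
    "price_old": "span.price-old",
    "price_new": "span.price-new",
    "price_tax": "span.price-tax",
}


def split_rule(rule: str) -> Tuple[str, Optional[str]]:
    """Split 'selector @attr' into (selector, attr); attr is None when absent."""
    selector, sep, attr = rule.rpartition("@")
    if not sep:
        return rule.strip(), None
    return selector.strip(), attr.strip()


for name, rule in extraction_rules.items():
    print(name, split_rule(rule))

# A JSON view of the same rules, handy for keeping a copy outside Zapier
print(json.dumps(extraction_rules, indent=2))
```

Keeping the rules in one place like this makes it easier to spot selectors that are too generic, such as a bare "a" or "p", before running the zap.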

Now that we know where the information we need is stored, we can return to Zapier and Extraction Rules.

Put CSS Selectors to Extraction Rules

Choose descriptive names for each element and provide their CSS selectors. If you're unfamiliar with CSS selectors or unsure how to construct them, you can read our article on CSS selectors to understand them better.

Now we will only get the information we need.

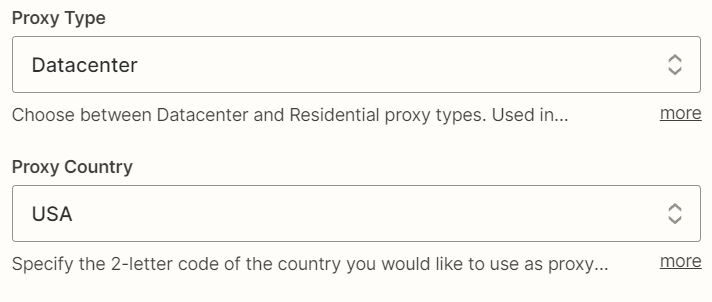

Change Proxy

This is an optional step, so you can skip it. However, if the website shows different information in different countries, you can choose a proxy server located in the country whose results you want to see.

If you skip this step, a US datacenter proxy is used by default.
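The "defaults unless overridden" behavior can be sketched as follows. The field names `proxy_type` and `proxy_country` are hypothetical labels chosen for this example and may not match the names used in the Zapier form or the API; only the default values (datacenter, USA) come from the text above.

```python
from typing import Dict, Optional

# Hypothetical field names; the defaults mirror the ones described above.
DEFAULT_PROXY: Dict[str, str] = {"proxy_type": "datacenter", "proxy_country": "US"}


def resolve_proxy(overrides: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Return the proxy settings, falling back to defaults for empty fields."""
    settings = dict(DEFAULT_PROXY)
    for key, value in (overrides or {}).items():
        if key not in DEFAULT_PROXY:
            raise KeyError(f"Unknown proxy setting: {key}")
        if value:  # an empty form field keeps the default
            settings[key] = value
    return settings


print(resolve_proxy())                         # step skipped: defaults apply
print(resolve_proxy({"proxy_country": "DE"}))  # only the country is changed
```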

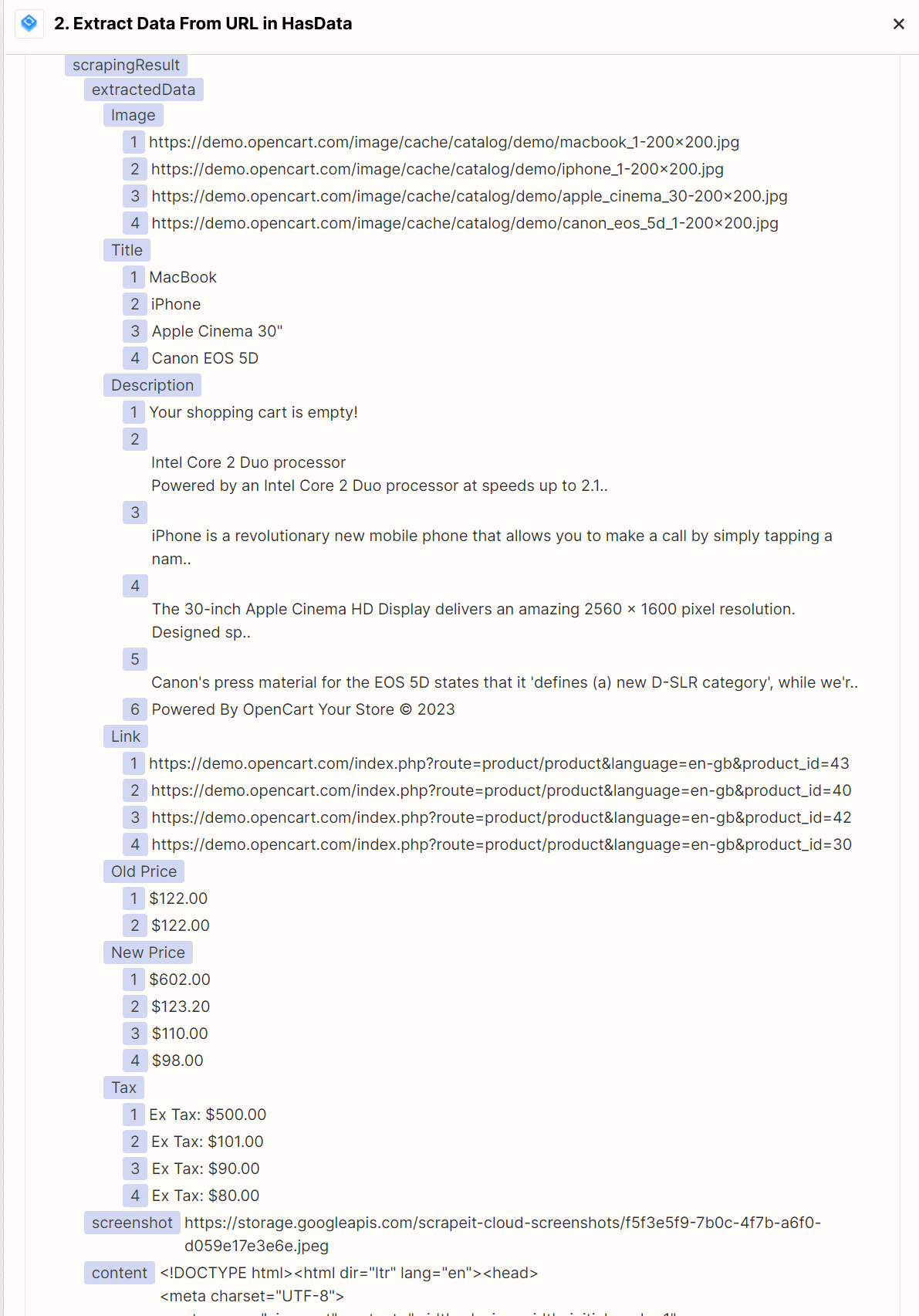

Test the result

You can also fill in the rest of the fields as you see fit, and we'll move on to the next part - checking the results.

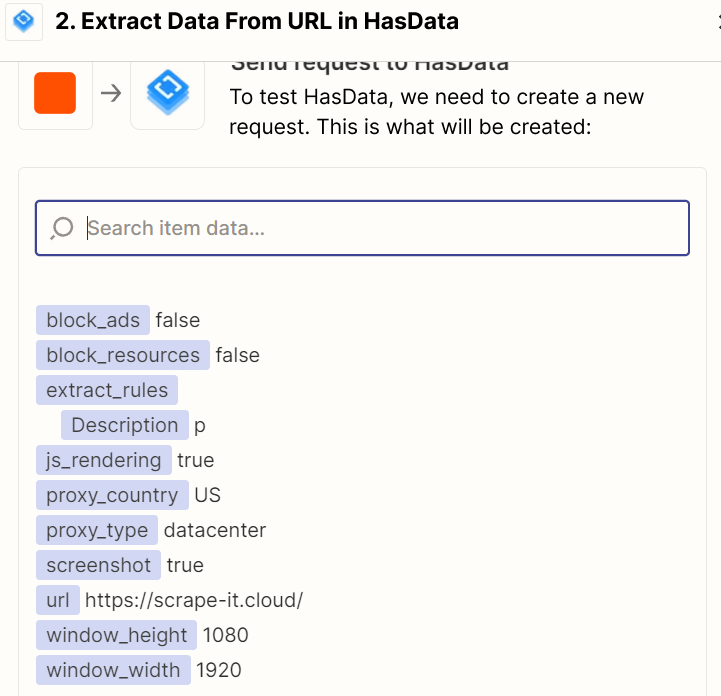

When you're satisfied that everything is correct, run the test. The result is returned as a JSON response.

Remember to be careful when composing CSS selectors: each one should match exactly the elements you need.
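One quick way to sanity-check selectors is to count how many items each rule returned: on a product listing, every rule should usually match the same number of elements. The sketch below assumes the response is a JSON object that maps each rule name to a list of values. That shape and the sample data are invented for illustration, so compare them with what your own test run returns.

```python
import json
from collections import Counter
from typing import Dict, List

# Invented sample in an assumed shape: {rule name: [matched values]}
sample_response = """
{
  "title": ["Product A", "Product B"],
  "description": ["First description", "Second description"],
  "image": ["a.jpg", "b.jpg", "logo.png", "banner.png", "icon.png"]
}
"""


def count_items(raw: str) -> Dict[str, int]:
    """Return how many values each extraction rule produced."""
    data = json.loads(raw)
    return {name: len(values) for name, values in data.items()}


def suspicious_fields(counts: Dict[str, int]) -> List[str]:
    """List fields whose item count differs from the most common count."""
    if not counts:
        return []
    expected, _ = Counter(counts.values()).most_common(1)[0]
    return sorted(name for name, n in counts.items() if n != expected)


counts = count_items(sample_response)
print(counts)
print(suspicious_fields(counts))
```

Here the "image" rule returns more items than the others, which is the typical symptom of a selector that is too generic.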

Advanced Use Cases

Now that we have covered the basics, we can move on to more advanced examples. Based on our experience, the most popular and frequent integrations with our API are Google Sheets and ChatGPT. Therefore, we will now detail how these can be used together.

HasData with ChatGPT

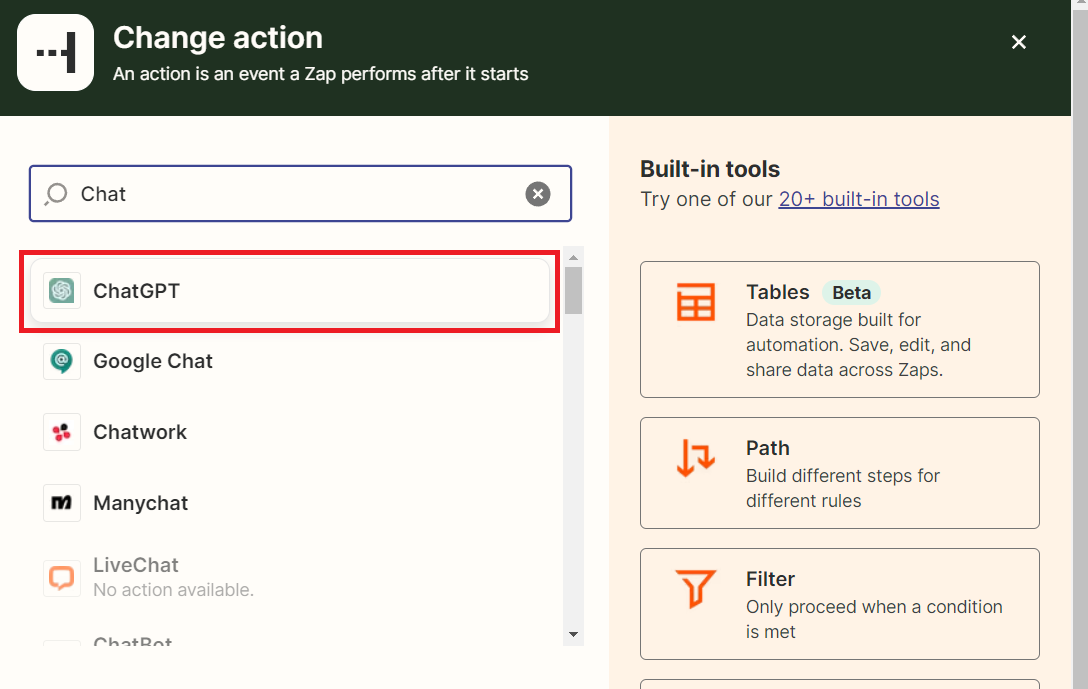

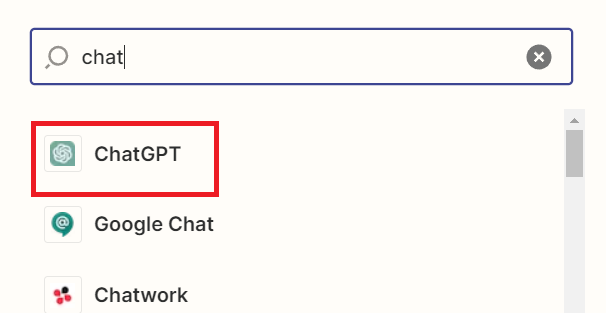

And we'll start with ChatGPT. To avoid repeating the steps already discussed, we will take the zap from the previous example and add the following ChatGPT action.

As with HasData, you'll need an API key to connect ChatGPT to your Zap. To get it, sign up on the OpenAI platform and go to the API keys section. If you don't have an API key yet, you can create one in the same section.

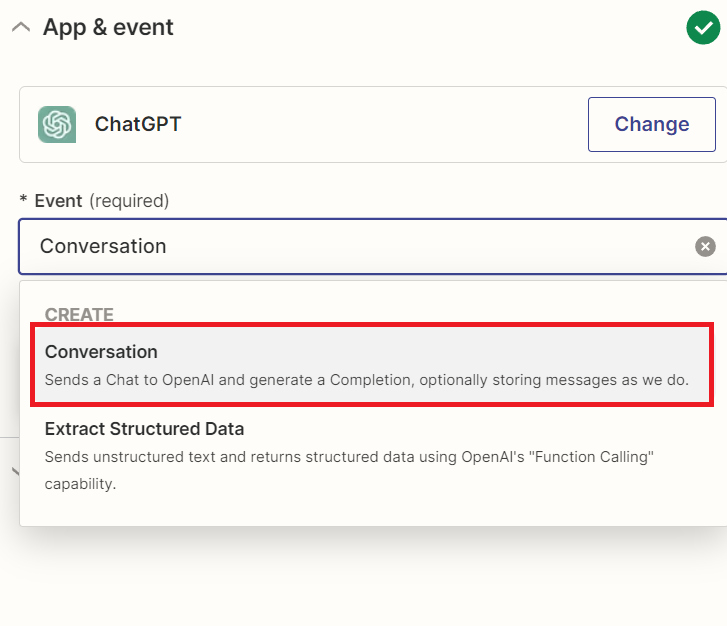

Now let's go back to Zapier and select the ChatGPT interaction type. When using ChatGPT in your zap, you can use the following options to extract information:

- Conversation. Here, you can send a sequence of messages (a conversation) to ChatGPT, which will be used as context to generate a response. Your messages and model responses can be saved and used in further processing. This allows you to create dialog systems or interact with a user in a chat format where the model answers questions and provides information based on user input. You can also use this option to analyze the input and draw conclusions.

- Extract Structured Data. This interaction option is suitable for analyzing and extracting structured data from incoming unstructured information. The model will look for information about specific entities, such as names, dates, addresses, phone numbers, etc., and return structured data you can use in further processing or integration with other systems.

Thus, with ChatGPT in your zap, you can create chat systems, process conversations with users, and retrieve structured data from unstructured text, giving you more flexibility in processing and analyzing information. We will use the Conversation case as an example to analyze the information we collected earlier.

Now let's connect ChatGPT. To do this, click the Sign in button and wait for the pop-up window. Here you will need your API key.

Put your API key into the highlighted field. The second field can be left blank if you signed up with OpenAI directly rather than through your company's setup.

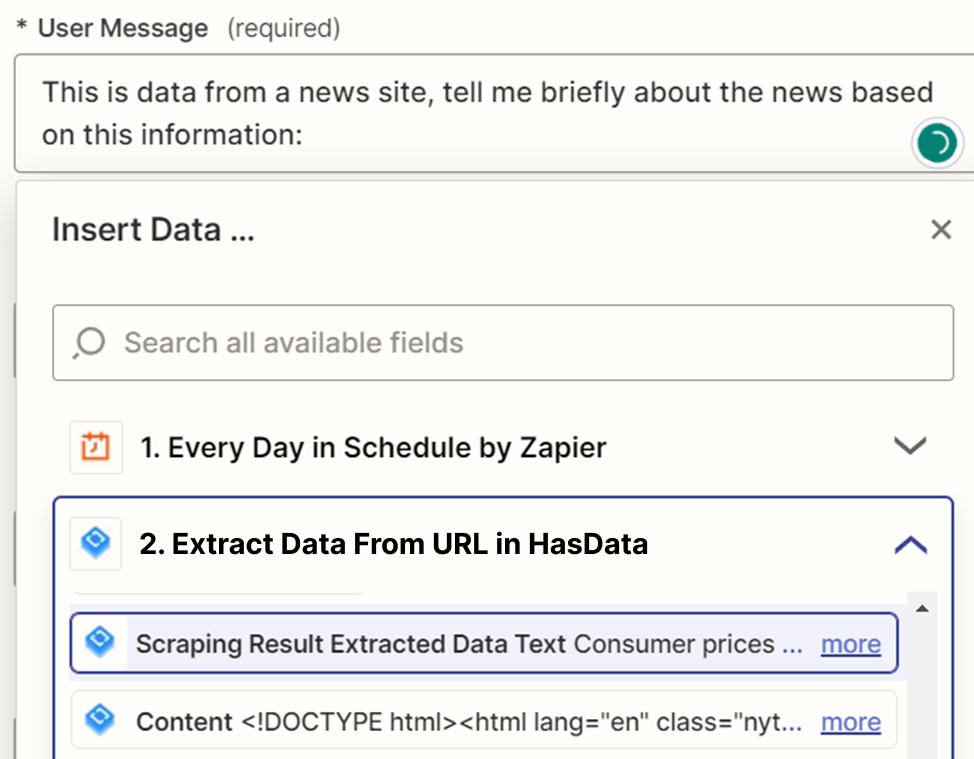

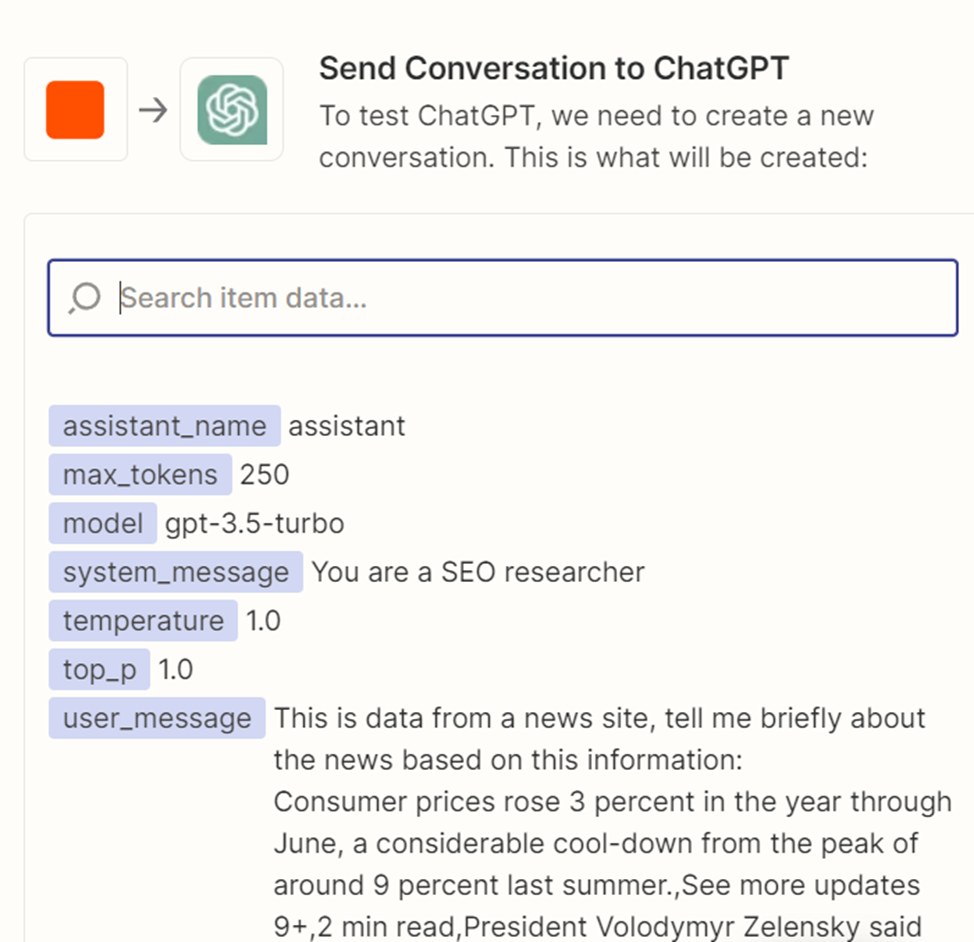

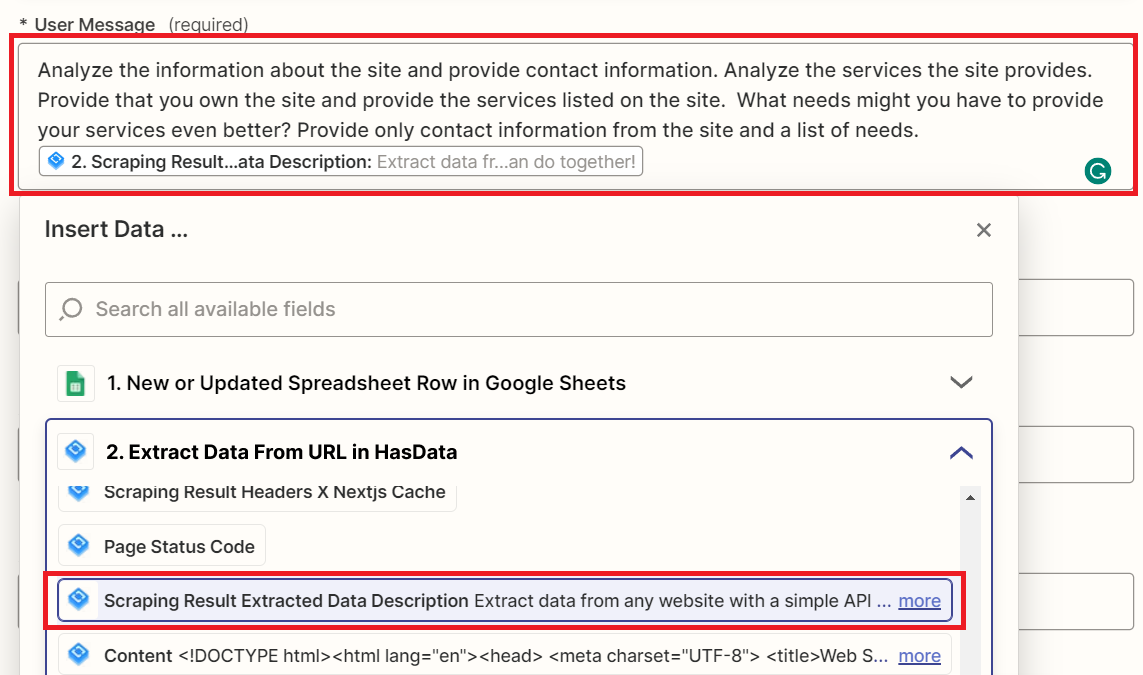

Now you need to compose a request. It can combine a fixed text part, which does not change, with the scraped data, which may differ from run to run. To make the example more useful, let's take any news site and replace the link in the previous step with its URL. We will also change the extraction rules to get only the text in <p> tags, and then ask ChatGPT for a brief summary of the news.
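The idea of a request made of a fixed instruction plus variable scraped text can be sketched in Python. The function `build_prompt` and the `max_chars` limit are hypothetical and not part of Zapier or the OpenAI API. The limit is included only because very long pages may exceed what a model accepts in one request.

```python
from typing import List


def build_prompt(instruction: str, paragraphs: List[str], max_chars: int = 4000) -> str:
    """Join a fixed instruction with scraped paragraphs.

    Empty paragraphs are dropped, and the scraped part is cut to `max_chars`.
    """
    cleaned = [p.strip() for p in paragraphs if p.strip()]
    body = "\n".join(cleaned)[:max_chars]
    return f"{instruction}\n\n{body}"


scraped = ["  First paragraph of the article. ", "", "Second paragraph."]
print(build_prompt("Give a brief summary of the following news:", scraped))
```

In Zapier, the same thing happens when you type the fixed text into the message field and insert the HasData output next to it.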

As a result, we get a query like this:

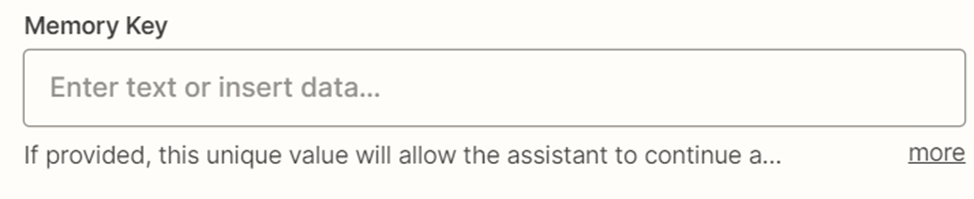

If you want to create a conversation that remembers past messages, specify a key for the conversation so that it can be found again on later runs.
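Conceptually, a conversation key works like a label under which the message history is stored. The sketch below is a simplified model of that idea, not a description of how Zapier or OpenAI store conversations; the `ConversationStore` class and the key names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

Message = Dict[str, str]


class ConversationStore:
    """Keep a separate message history for every conversation key."""

    def __init__(self) -> None:
        self._history: Dict[str, List[Message]] = defaultdict(list)

    def add(self, key: str, role: str, content: str) -> None:
        self._history[key].append({"role": role, "content": content})

    def context(self, key: str) -> List[Message]:
        # Return a copy so callers cannot modify the stored history
        return list(self._history.get(key, []))


store = ConversationStore()
store.add("news-digest", "user", "Summarize article A")
store.add("news-digest", "assistant", "Article A is about ...")
store.add("lead-research", "user", "Describe this company")

print(len(store.context("news-digest")))    # messages remembered under this key
print(len(store.context("lead-research")))  # a different key has its own history
```

Using the same key on every run continues one conversation, while a new key starts from an empty history.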

The rest of the fields are intuitive; you can fill them in at your discretion. So, let's move on to the testing stage. Before you run the test, ensure you have entered all the data correctly.

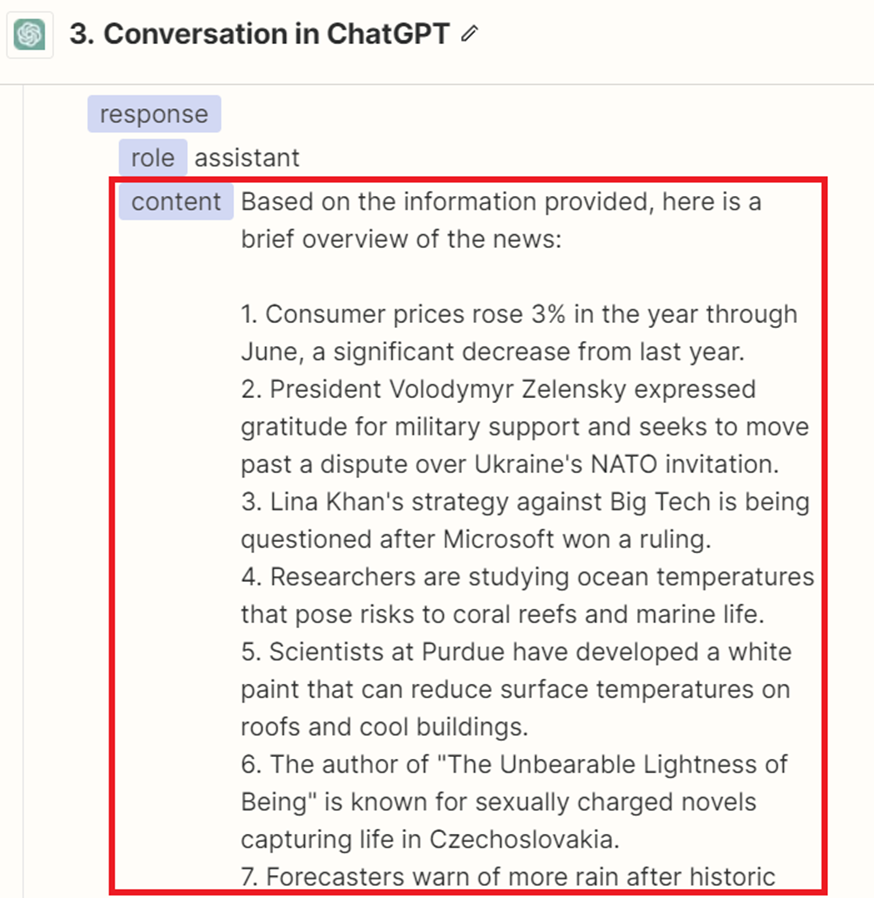

As a result, we will get this (or a similar) ChatGPT response:

As you can see, connecting to ChatGPT is easy enough, but with it, you get many options for how to use Zapier.

HasData with Google Sheets

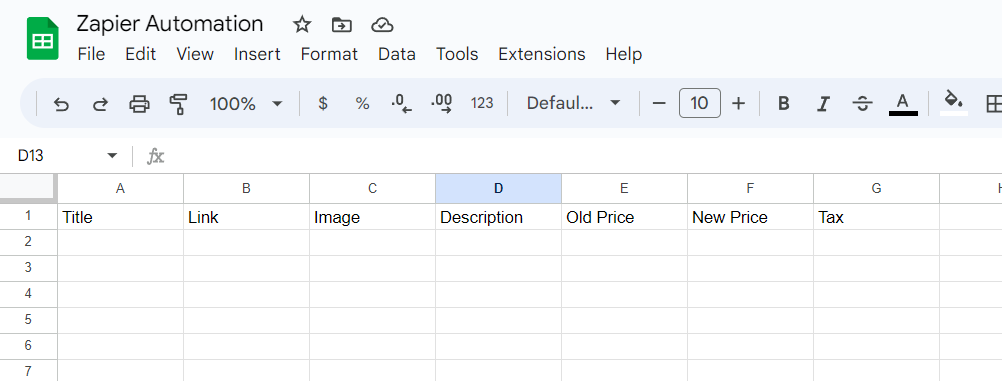

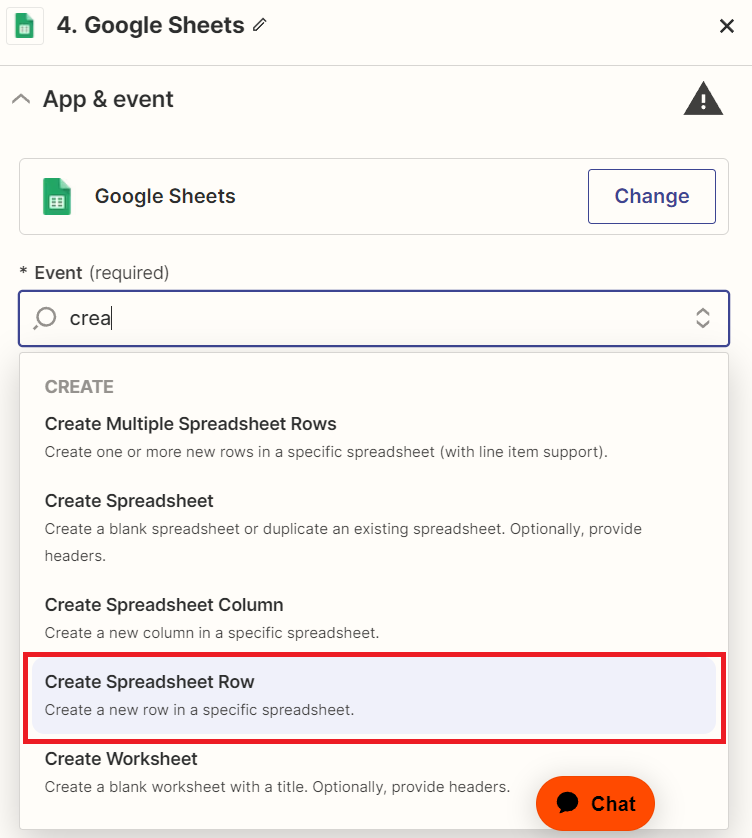

Now, let's see how to get all the data from HasData into Google Sheets. To do this, first go to Google Sheets and create a new spreadsheet. Add the column headers for the information we will write, and remember the spreadsheet's name.

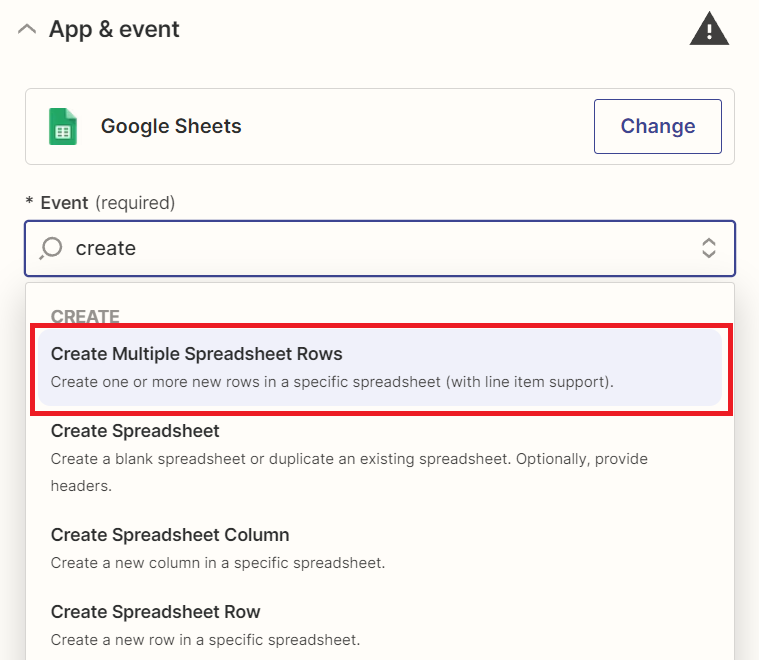

Now go to Zapier and create a new action - Google Sheets. Here we need to select the event option. We will not examine each option in detail but only briefly consider the options:

- CREATE. Allows you to create an empty document, column, row, or worksheet.

- COPY WORKSHEET. Creates a new worksheet by copying an existing worksheet.

- CREATE MULTIPLE SPREADSHEET ROWS. Creates one or more new rows in the specified spreadsheet. Can support line items.

- DELETE SPREADSHEET ROW. Deletes the contents of a row in the specified table. The deleted rows will appear as empty rows in the table.

- FORMAT SPREADSHEET ROW. Applies formatting to the specified spreadsheet row.

- UPDATE SPREADSHEET ROW. Updates the contents of the row in the specified table.

- UPDATE SPREADSHEET ROW(S). Updates one or more rows in the specified table. Can support line items.

- SEARCH. Finds a worksheet, row, or line item.

- REQUEST API (Beta). This advanced action performs an HTTP request using this integration's authentication. This is useful if the application has an API entry point that Zapier has not yet implemented.

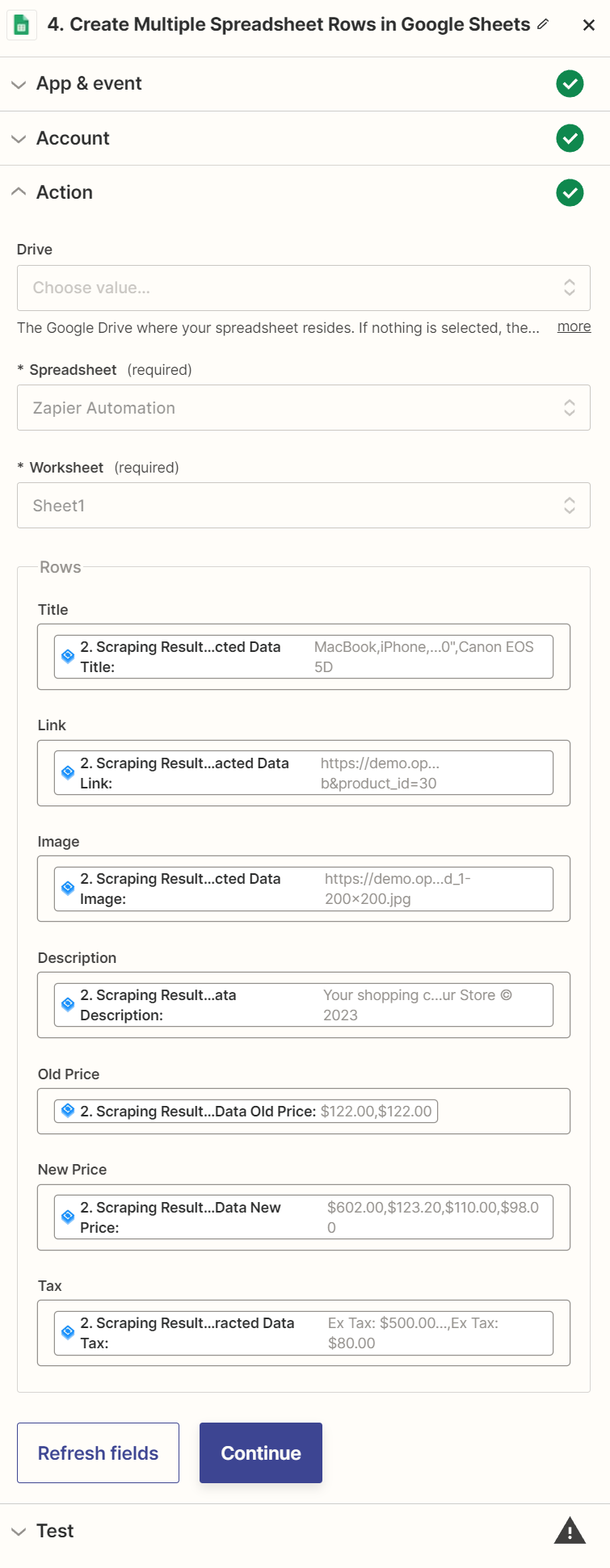

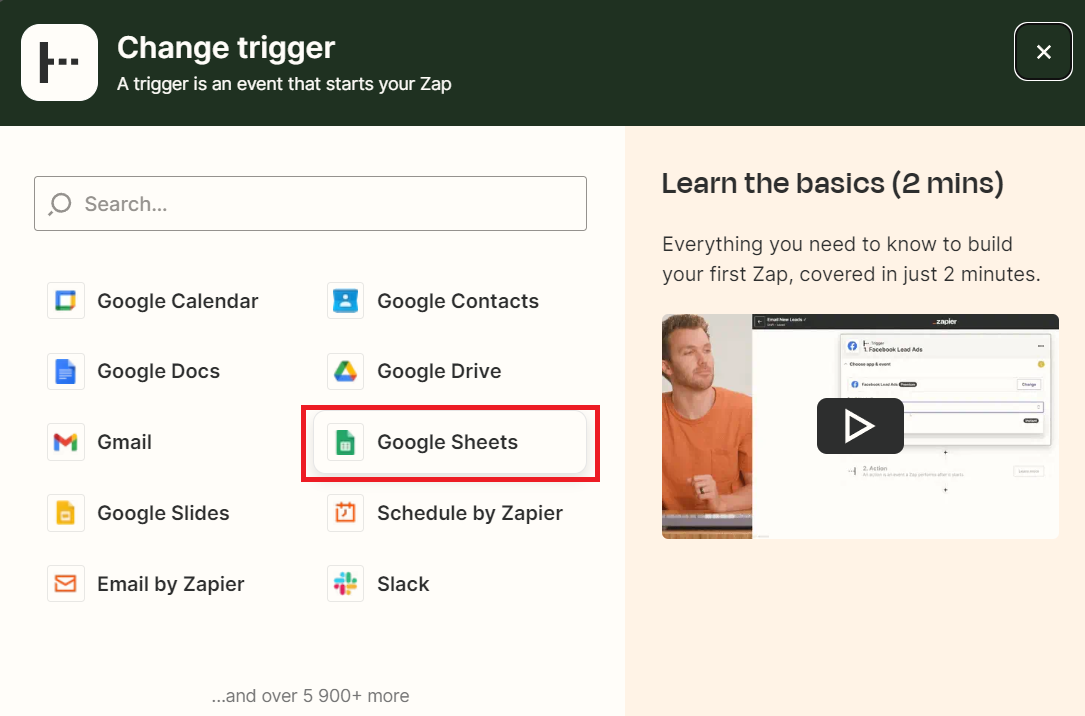

You can use these actions in your zaps with Google Sheets to create, update, format, search, and delete data in tables and worksheets. In our example, we get multiple rows of product data using the HasData API. Therefore, we will use the action that adds multiple rows to a Google Sheets document.

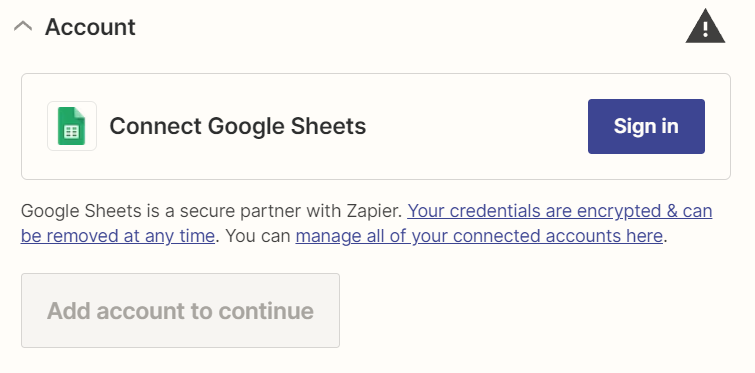

Now you need to log in to your Google account and grant access to your documents so that Zapier can change, add and manage your documents.

Select the account for which you want to share documents.

You can read the terms and conditions and what you provide access to. As we said, you can remove the document management permission from your account anytime.

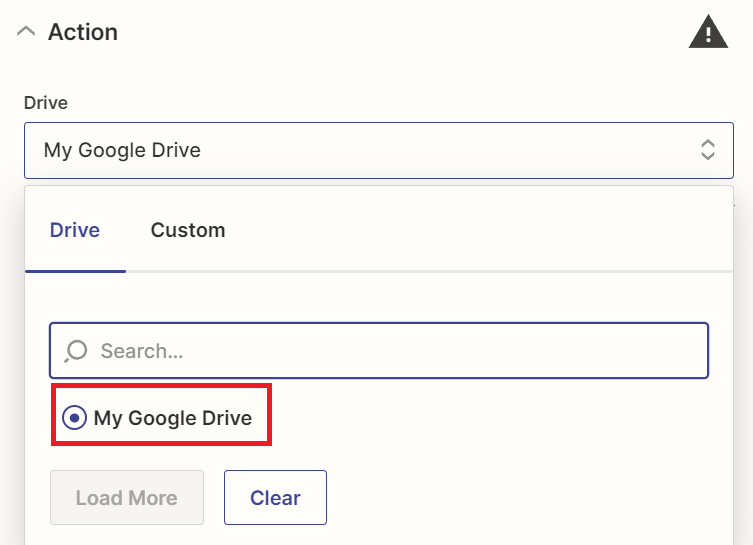

Now we need to select the spreadsheet we created earlier. To do this, first specify the Google Drive where it is located. If you have only one account connected, you will have only one option in this field.

Now select the table you created beforehand.

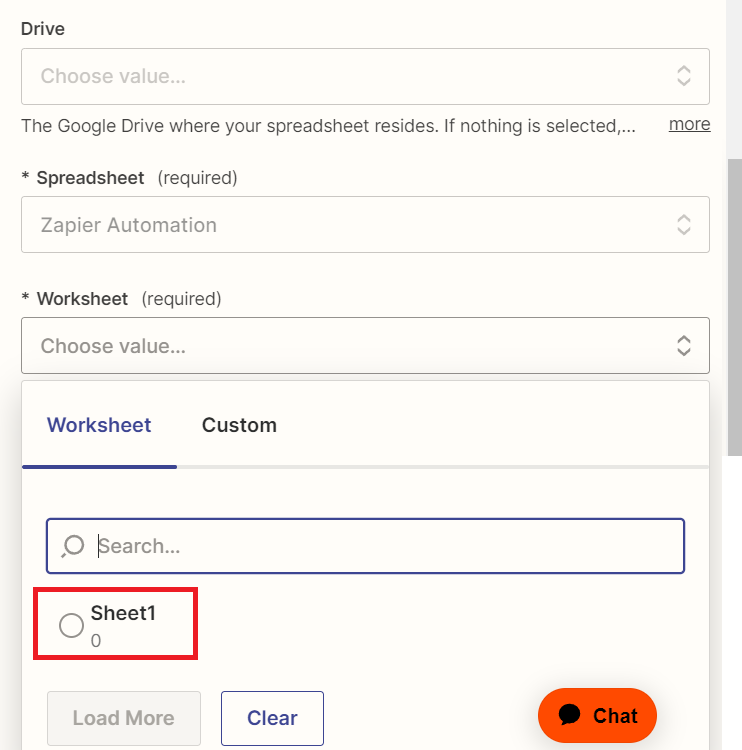

Finally, choose the sheet name containing the pre-created column headers. We didn't change it, so we have only one option here.

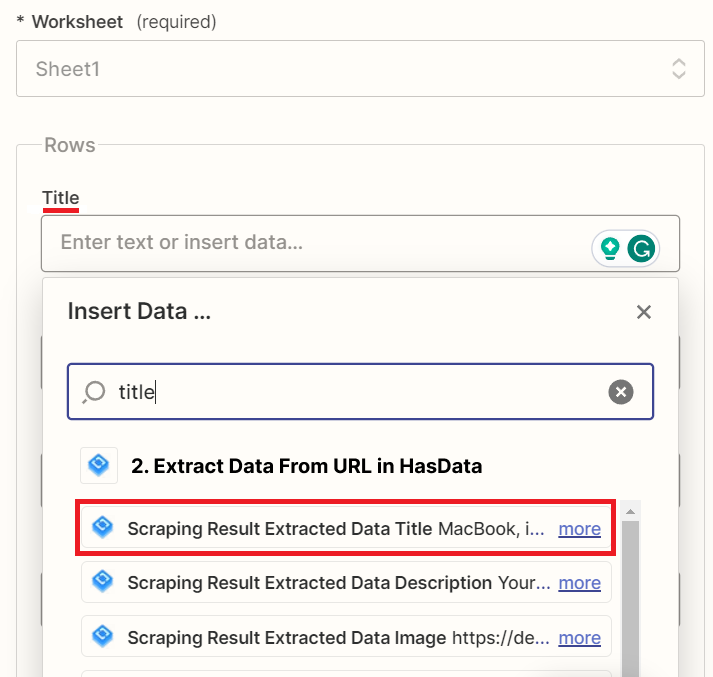

Once these fields are filled in, the columns that already exist in the table are loaded automatically. Define the data that will be stored in each column. To do this, click the input field of the required column and use the search or manually find the data that should go into that column.
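Mapping scraped fields to sheet columns amounts to turning several lists of values into rows. Here is a minimal Python sketch of that transformation. It is an illustration only: the column titles, the field names, and the function `build_rows` are hypothetical, and Zapier performs the equivalent step for you through the form.

```python
from typing import Dict, List


def build_rows(
    headers: List[str],
    mapping: Dict[str, str],
    scraped: Dict[str, List[str]],
) -> List[List[str]]:
    """Build sheet rows in header order.

    headers: column titles in the sheet
    mapping: column title -> scraped field name
    scraped: scraped field name -> list of values
    """
    count = max((len(scraped.get(f, [])) for f in mapping.values()), default=0)
    rows = []
    for i in range(count):
        row = []
        for header in headers:
            field_name = mapping.get(header)
            values = scraped.get(field_name, []) if field_name else []
            # Unmapped columns and missing values become empty cells
            row.append(values[i] if i < len(values) else "")
        rows.append(row)
    return rows


headers = ["Title", "Price", "Link"]
mapping = {"Title": "title", "Price": "price_new"}
scraped = {"title": ["Product A", "Product B"], "price_new": ["$10"]}
for row in build_rows(headers, mapping, scraped):
    print(row)
```

Note that when one field has fewer values than another, the missing cells end up at the bottom, so values can become misaligned. This is another reason to make sure every selector matches exactly one element per product.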

Given that these are rather simple and monotonous actions, we won't show you how to fill in each field individually. Instead, we will show you the already filled fields so that you can see what the result should look like.

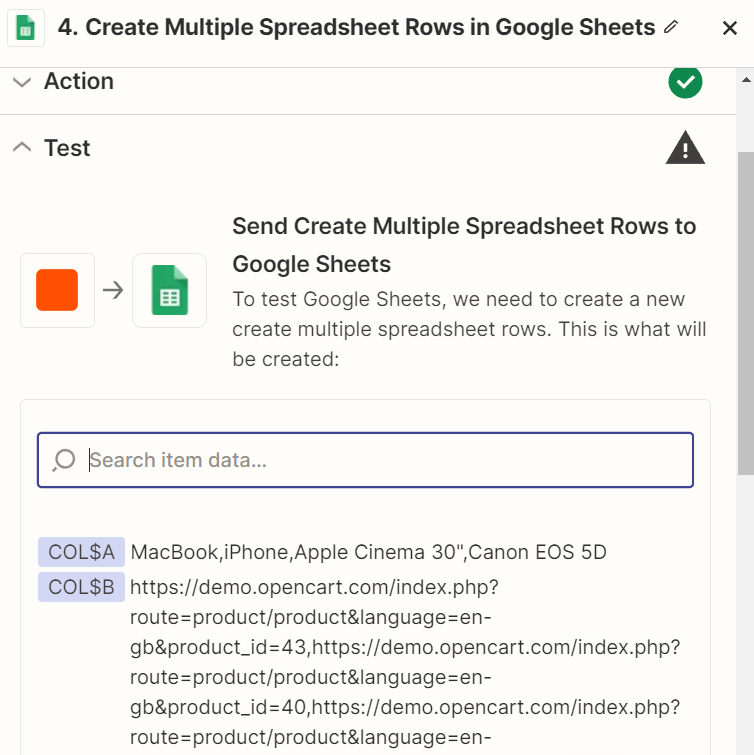

Let's make sure everything works correctly. To do this, go to the testing section and check the data that will be sent.

Then, run the test and wait for it to complete (usually takes no more than 5 seconds). After that, go to Google Sheets and open your spreadsheet. You will get this table with all the scraped data if you have done everything correctly.

As you can see, we have created a Zapier web scraper that collects data from the specified website and enters it into a table without writing a single line of code. We hope it helps you get a handle on the basics and start creating your own scrapers with many more possibilities.

For example, you can connect Gmail and send emails generated with ChatGPT from the scraped information to the addresses listed in Google Sheets. This is as simple as the examples discussed earlier. The main thing is to take your time and do everything in small steps.

Make a Zap with Google Sheets & HasData & ChatGPT

Now that we have explored the examples, let's create a no-code web scraper that you can easily replicate and use for your own purposes.

Our goal is to create a Zapier web scraping tool that works as follows:

- We enter the link of the website we want to gather data from into a Google Sheets document.

- Our scraper is triggered when a new row with a website link appears in the document.

- Using HasData, we extract all the text information from the website's <p> tags.

- The extracted data is sent to ChatGPT with a request to gather information about the page, including contact details and possible offers for the website owners.

- The response from ChatGPT, along with the website link, is recorded in the same Google Sheets document but on a different sheet.
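The plan above is essentially a small pipeline: read a link, scrape it, ask the model, and save the answer. The Python sketch below mirrors those steps with stand-in functions so that the data flow is visible. It does not call HasData, OpenAI, or Google Sheets: `fake_scrape`, `fake_ask`, and the list used as a results sheet are placeholders invented for this example.

```python
from typing import Callable, Dict, List

Row = Dict[str, str]


def run_pipeline(
    url: str,
    scrape: Callable[[str], List[str]],
    ask: Callable[[str], str],
    save: Callable[[Row], None],
) -> Row:
    """Scrape the paragraphs of one page, ask the model about them, save the answer."""
    paragraphs = scrape(url)
    prompt = (
        "Describe this page, list any contact details, "
        "and suggest possible offers for its owners:\n\n" + "\n".join(paragraphs)
    )
    answer = ask(prompt)
    row = {"Website": url, "ChatGPT answer": answer}
    save(row)
    return row


# Stand-ins for the real services
def fake_scrape(url: str) -> List[str]:
    return ["We sell handmade furniture.", "Contact: hello@shop.example"]


def fake_ask(prompt: str) -> str:
    return f"(model answer for a prompt of {len(prompt)} characters)"


results_sheet: List[Row] = []
row = run_pipeline("https://shop.example", fake_scrape, fake_ask, results_sheet.append)
print(row)
```

Each argument corresponds to one step of the zap, which is why the steps can be configured and tested one at a time in Zapier.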

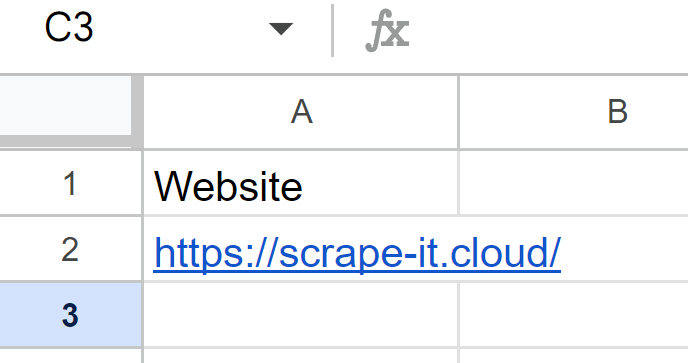

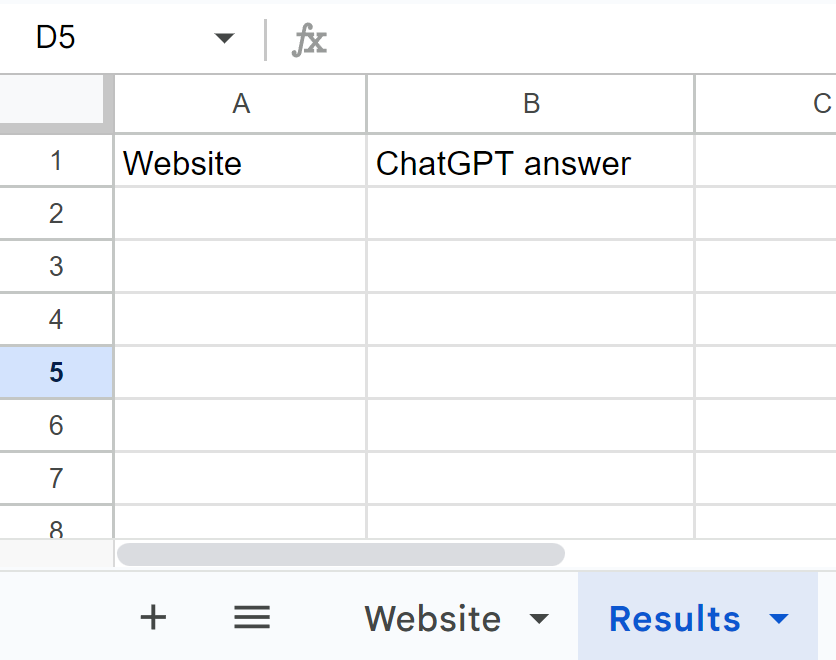

Now that we have outlined our plan step by step, it will be easier to build the no-code data scraper by simply following it. Let's start by creating a Google Sheets document and setting the column header to "website".

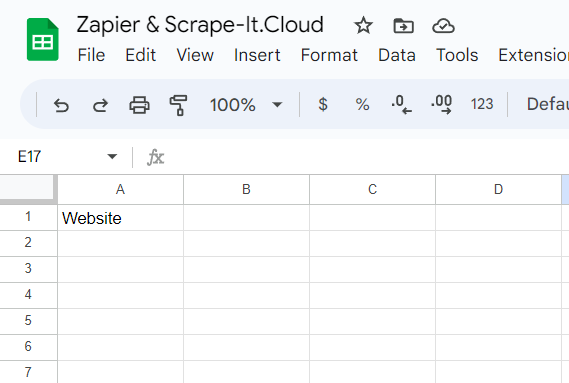

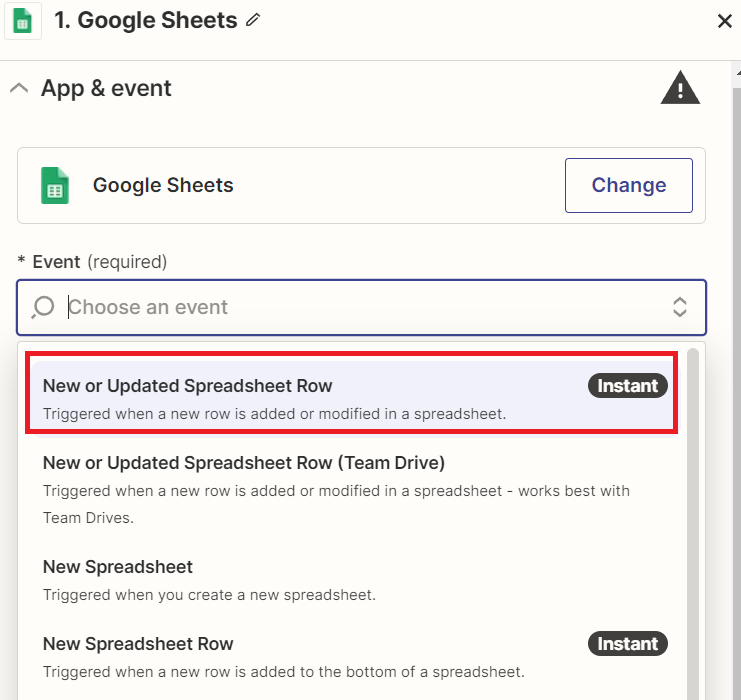

Next, let's move on to Zapier, where we'll create a new Zap. For the trigger, we'll choose Google Sheets.

We've previously discussed the actions that can be performed using Google Sheets, and now let's explore the available triggers:

- New or Updated Spreadsheet Row: This trigger is activated when a new row is added, or an existing row is modified in the spreadsheet. You can use this trigger to detect and respond to new or updated data in the table.

- New or Updated Spreadsheet Row (Team Drive): This trigger works similarly to the previous one, but it is optimized for use with Team Drives (collaborative folders in Google Drive). If you're working with tables in a Team Drive, this trigger allows you to react to new or updated data.

- New Spreadsheet: This trigger is activated when you create a new spreadsheet.

- New Spreadsheet Row: This trigger is activated when a new row is added to the end of the spreadsheet. You can use this trigger to detect new records added to the table.

- New Spreadsheet Row (Team Drive): Similar to the previous trigger, this one is optimized for use with Team Drives. It is activated when a new row is added to the end of the spreadsheet in a Team Drive.

- New Worksheet: This trigger is activated when you create a new sheet within the spreadsheet.

In our case, we need to be informed about new or modified rows in the table. This way, you'll be able to add websites to the end of the list and replace existing ones if needed.
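To see what "new or updated row" means in practice, compare two snapshots of the monitored column. The sketch below illustrates the idea only. It is not how Zapier detects changes internally, and the function `changed_rows` and the example URLs are hypothetical.

```python
from typing import List, Tuple


def changed_rows(previous: List[str], current: List[str]) -> List[Tuple[int, str]]:
    """Return (sheet row number, value) for rows that are new or modified.

    Both lists hold the values of the monitored column without the header.
    """
    changes = []
    for index, value in enumerate(current):
        is_new = index >= len(previous)
        if value and (is_new or previous[index] != value):
            # +2 because sheet rows are 1-indexed and row 1 is the header
            changes.append((index + 2, value))
    return changes


before = ["https://a.example", "https://b.example"]
after = ["https://a.example", "https://c.example", "https://d.example"]
print(changed_rows(before, after))
```

In this example, row 3 was replaced and row 4 was appended, so both would start the zap, while the unchanged row 2 would not.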

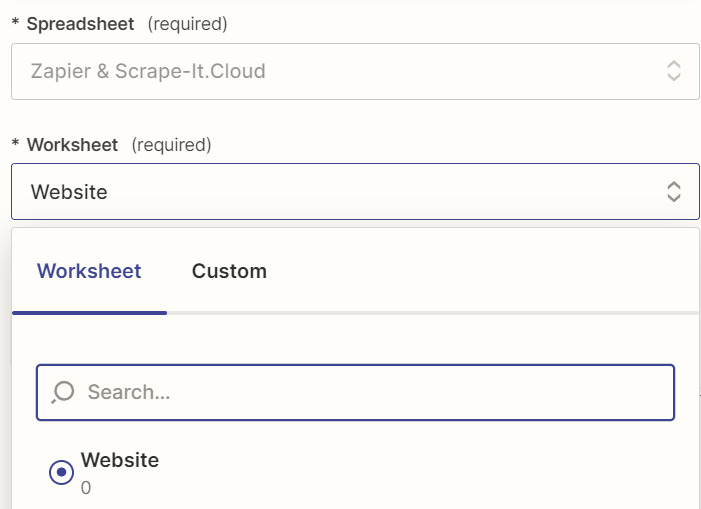

Now, as with adding an action, we need to select a table and a sheet:

Next, select the column that will be monitored for changes and new rows. In our case, this column is titled "Website."

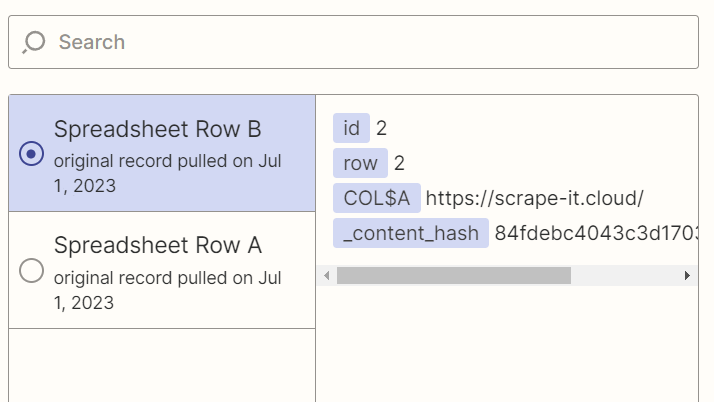

Finally, we can check if our trigger is working.

To do this, go to the sheet and add a new row with a link to the web page you want to scrape.

Next, let's go back to our Zap and run the test. As we can see, it works correctly.

We now have a website link, and the trigger fires whenever a link is added or changed. So, let's scrape all the paragraphs from the site. To do this, add a new action and select HasData.

We have already shown how to sign in and where to get the API key, so we won't repeat ourselves. If you missed it, return to Creating Your First Zapier Web Scraper. We'll move on to what we need to fill in this time. First, we need to specify the URL we got from the trigger.

Then, use Extraction Rules to collect all the paragraphs on the page. Paragraphs are typically in <p> tags, so this selector will work for most sites.

That's all for now. Be sure to verify all the data and test the action before moving on to the next step. Do not skip tests even if you are sure that everything works correctly, because even a small error can spoil the result.

Now let's add one more action: ChatGPT. We have already shown where to find the ChatGPT API key and discussed which functions you can use. If you missed anything, you can go back to HasData with ChatGPT.

Add a text query and data to process.

Do a test and add another action. All we have to do is save all the obtained data in a Google Sheets document. Then you can download this document and open it using Excel.

Return to your document and add another sheet where you set the headings "Website" and "ChatGPT answer" to put the results in there.

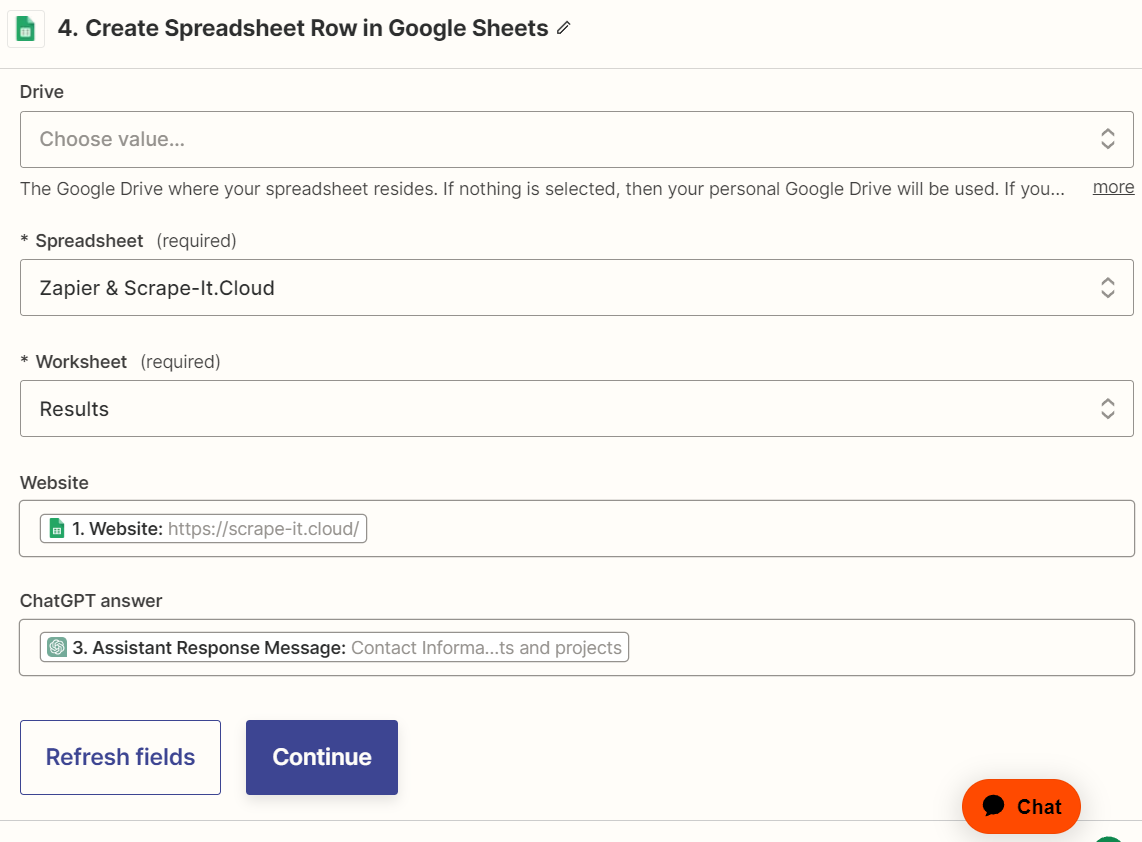

Now, in Zapier, select the spreadsheet and the worksheet where we want to save the data, and fill in the column fields with the corresponding values. You should end up with something similar:

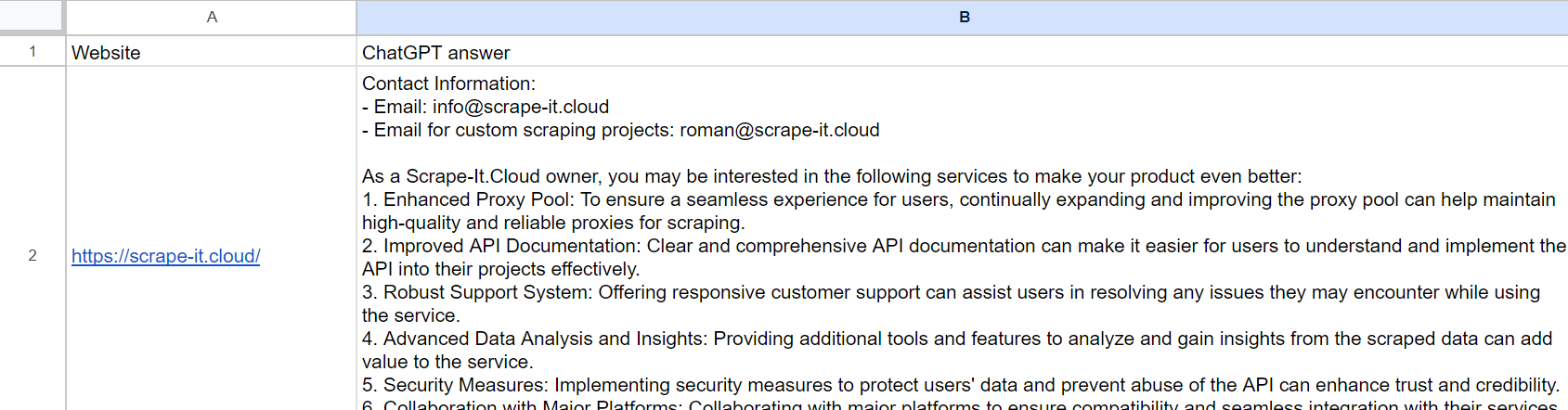

Run the action test and check in your Google Sheets document that everything works correctly.

If you got a similar result, congratulations. You have created a full-fledged scraper that uses ChatGPT to analyze the data and saves the results to Google Sheets.

Zapier has many different applications you can integrate into your project. Most of them work and integrate similarly. Therefore, if you understand the examples in this tutorial, you can make almost any automation that perfectly suits your purposes, even without programming knowledge and skills.

Results and Benefits

By using Zapier with various integrations, we built a web scraper that automatically processes your data across several services. You may have performed such operations manually in the past, but now you can automate this process with Zapier and save time. For example, thanks to the Zapier integration with HasData, you automatically get up-to-date data without having to update it manually.

In addition, automating data collection significantly reduces errors. Eliminating manual data entry and intervention lowers the risk of typos and missing information.

Another way to use the stack we've covered is to respond automatically to incoming requests and messages, which can simplify and improve communication with your audience or customers.

Conclusion and Takeaways

Programming skills are not always required for everyday tasks. Thanks to special services that make it easy to integrate different applications, you can get almost any automation in no time.

By automating processes and integrating data quickly, you can increase the amount of work you do or improve your response to changes in external conditions.

In addition, Zapier lets you easily add new integrations as needed and expand the functionality of your system. We hope this tutorial has helped you understand the basics of working with Zapier and creating your own integrations.