Redfin is an online platform that provides real estate information, including listings for sale and rent, market data, and search tools. Its goal is to make it easier for clients to buy, sell, or rent a home by providing them with access to an extensive database of properties.

Web scraping can automate the process of consistently collecting data from Redfin. This enables the analysis of trends, property value assessment, and market forecasting.

In this article, we will discuss and demonstrate with examples how to scrape data from Redfin using various methods, including creating your own Python scraper, utilizing no-code scrapers, and employing the unofficial Redfin API. Each of these approaches has its own advantages and disadvantages, and the choice depends on the specific needs and skills of the user.

Choosing a Scraping Method

As mentioned above, there are several ways to collect data from Redfin. Before we delve into the specifics of each method, let's take a quick look at the available options, ranging from the simplest and most beginner-friendly to the more advanced:

- Manual Scraping. This method is free but time-consuming and is only suitable for collecting small amounts of data. It involves manually browsing through relevant pages and extracting the desired information.

- No-code scrapers. These services provide tools for data collection without coding expertise. They are ideal for users who simply need the data without integrating it into an application. Simply specify the parameters in the Redfin no-code scraper and receive the data set.

- Browser Automation Tools. Extensions can be used to collect data from Redfin. However, unlike no-code scrapers, you need to have the browser window open during the scraping process and cannot close it until it's finished.

- Redfin API. Redfin doesn't have an official API, so you'll need to use unofficial alternatives. We'll provide instructions and code examples later.

- Custom scraper. Creating your own scraper is the most complex method. While using the Redfin API involves setting parameters and receiving well-structured data sets, a custom scraper has to handle captchas, bot protection, and proxies to avoid IP blocking when scraping large volumes of data.

All of these options have advantages and disadvantages, so the choice depends on your needs. In short, if you only need data from a few listings, simply collect it manually. If you need to collect data from a large number of listings but don't have any programming experience, the Redfin no-code scraper is a great option. And if you want to integrate data collection into your application and don't want to deal with data retrieval or bypassing Redfin's bot protection, the Redfin API is for you.

But if you want to collect data yourself and have the skills and time to solve all the associated problems, then create your own Redfin scraper in Python or any other programming language.

Method 1: Scrape Data from Redfin Without Code

No-code web scraping tools are software solutions that enable users to extract data from websites without writing any code. They provide a simple and intuitive interface, allowing users to get the data they need in a few clicks.

Since no coding skills or knowledge are required, anyone can use a Redfin no-code scraper. This method is also one of the safest, as the data from Redfin is not collected from your PC, but by the service that provides the no-code scraper. You only receive the ready-made dataset and protect yourself from any blocking by Redfin.

Let's look at how such tools work, using HasData's Redfin no-code scraper as an example. To get started, sign up on our website and go to your account.

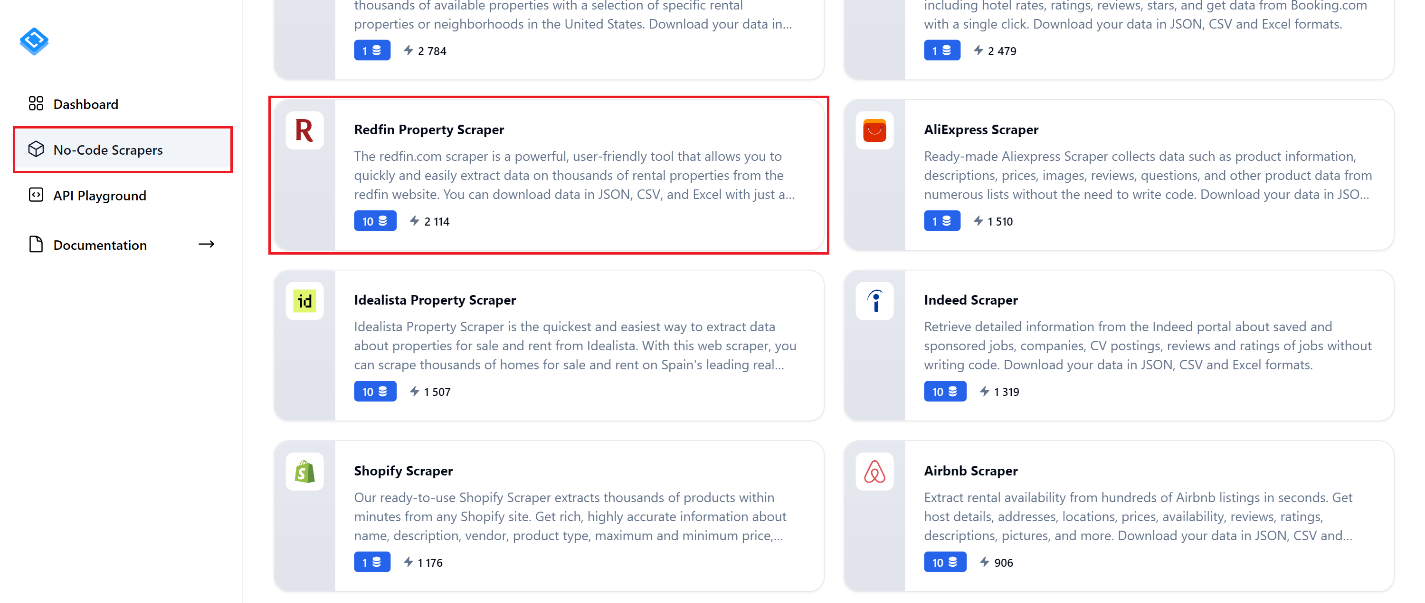

Navigate to the "No-Code Scrapers" tab and locate the "Redfin Property Scraper". Click on it to proceed to the scraper's page.

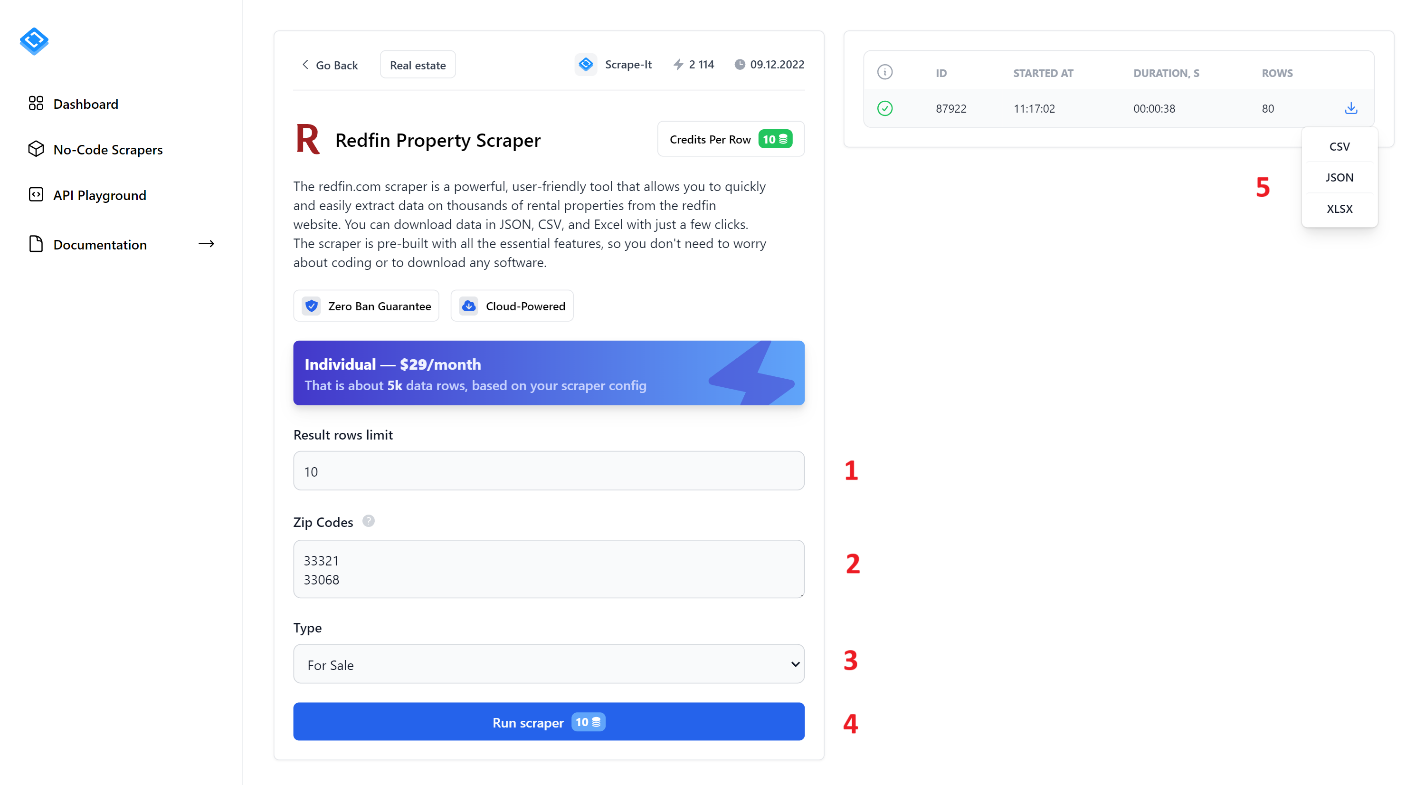

To extract data, fill in all fields and click the "Run Scraper" button. Let's take a closer look at the no-code scraper elements:

- Result rows limit. Specify the number of listings you want to scrape.

- Zip Codes. Enter the zip codes from which you want to extract data. You can enter multiple Zip Codes, each on a new line.

- Type. Select the listing type: For Sale, For Rent, or Sold.

- Run Scraper. Click this button to start the scraping process.

After starting the scraper, you will see the progress bar and scraping results in the right-hand pane. Once finished, you can download the results in one of the available formats: CSV, JSON, or XLSX.

As a result, you will receive a file containing all the data that was collected based on your request. For example, the result may look like this:

The screenshot only shows a few of the columns, as there is a large amount of data. Here is an example of how the data would look in JSON format:

    {
      "properties": [
        {
          "id": "",
          "url": "",
          "area": ,
          "beds": ,
          "baths": ,
          "image": "",
          "price": ,
          "photos": [],
          "status": "",
          "address": {
            "city": "",
            "state": "",
            "street": "",
            "zipcode": ""
          },
          "homeType": "",
          "latitude": ,
          "agentName": "",
          "longitude": ,
          "yearBuilt": ,
          "brokerName": "",
          "propertyId": ,
          "description": "",
          "agentPhoneNumber": "",
          "brokerPhoneNumber": ""
        }
      ]
    }

Overall, using no-code web scraping tools can significantly streamline the process of collecting data from websites, making it accessible to a wide range of users without requiring coding expertise.
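Once downloaded, the JSON export can be analyzed with a few lines of Python. The sketch below assumes the file follows the structure shown above (a top-level `properties` list whose items carry a numeric `price` and an `address` object with a `zipcode`). The function name `average_price_by_zip` and the sample values are made up for illustration.

```python
import json
from collections import defaultdict


def average_price_by_zip(export: dict) -> dict:
    """Return the mean listing price per ZIP code.

    Assumes the export layout shown above: a "properties" list whose items
    have a numeric "price" and an "address" object with a "zipcode".
    Listings without a price or ZIP code are skipped.
    """
    prices = defaultdict(list)
    for item in export.get("properties", []):
        price = item.get("price")
        zipcode = (item.get("address") or {}).get("zipcode")
        if price is not None and zipcode:
            prices[zipcode].append(price)
    return {zipcode: sum(values) / len(values) for zipcode, values in prices.items()}


# Made-up sample data in the same shape as the export.
sample = json.loads("""
{"properties": [
  {"price": 300000, "address": {"zipcode": "33321"}},
  {"price": 500000, "address": {"zipcode": "33321"}},
  {"price": 250000, "address": {"zipcode": "33613"}},
  {"price": null,   "address": {"zipcode": "33613"}}
]}
""")

print(average_price_by_zip(sample))
```

With a real export, `sample` would instead be loaded from the downloaded file with `json.load`.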

Method 2: Scrape Redfin Property Data with Python

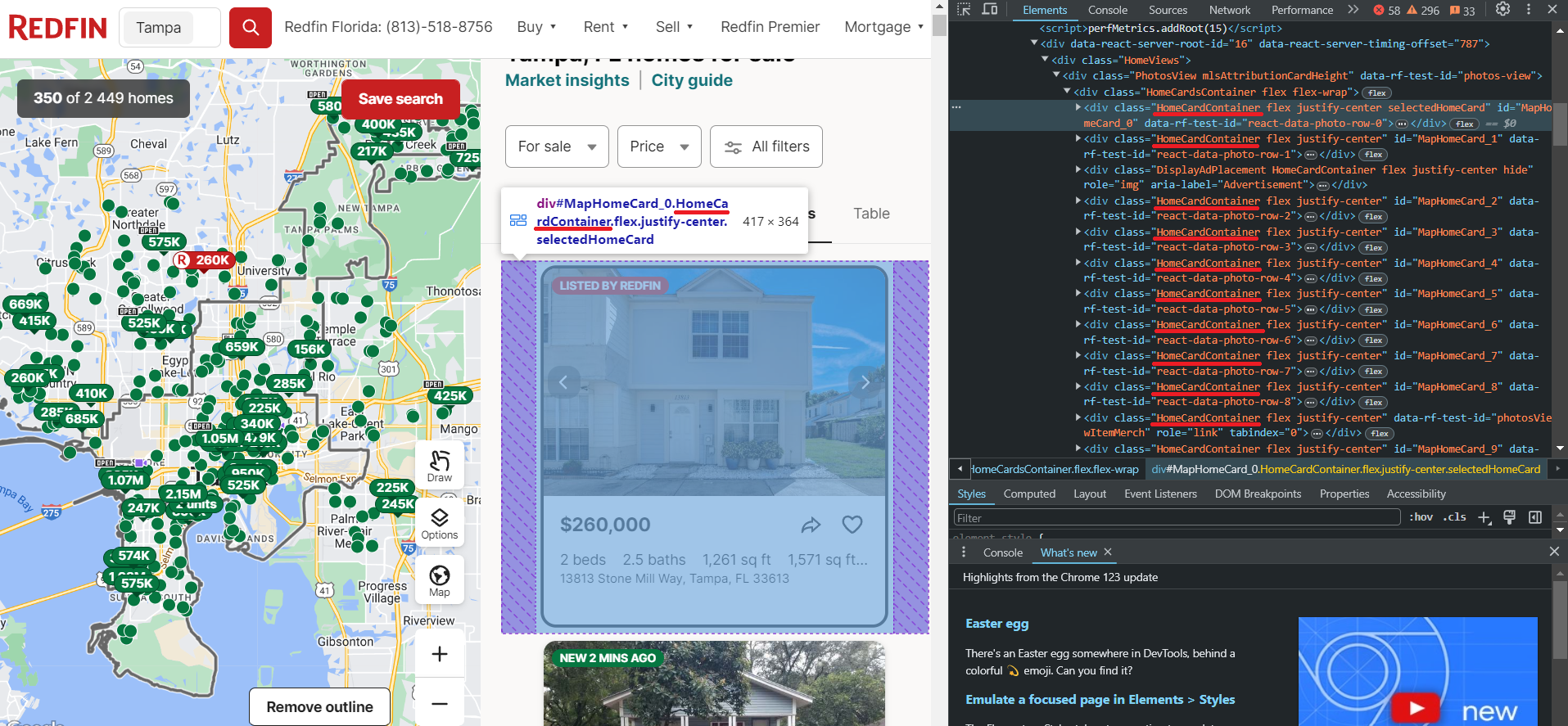

Before scraping data, let's research the specific data we can extract from Redfin. To do this, we'll navigate to the website and open DevTools (F12 or right-click and Inspect) to immediately identify the selectors for the elements we're interested in. We'll need this information later. First, let's examine the listings page.

As we can see, the properties are located in containers with the div tag and the class HomeCardContainer. This means that to get all the listings on the page, it is enough to select all the elements with this tag and class.

Now let's take a closer look at one property. Since all the elements on the page have the same structure, what works for one element will work for all the others.

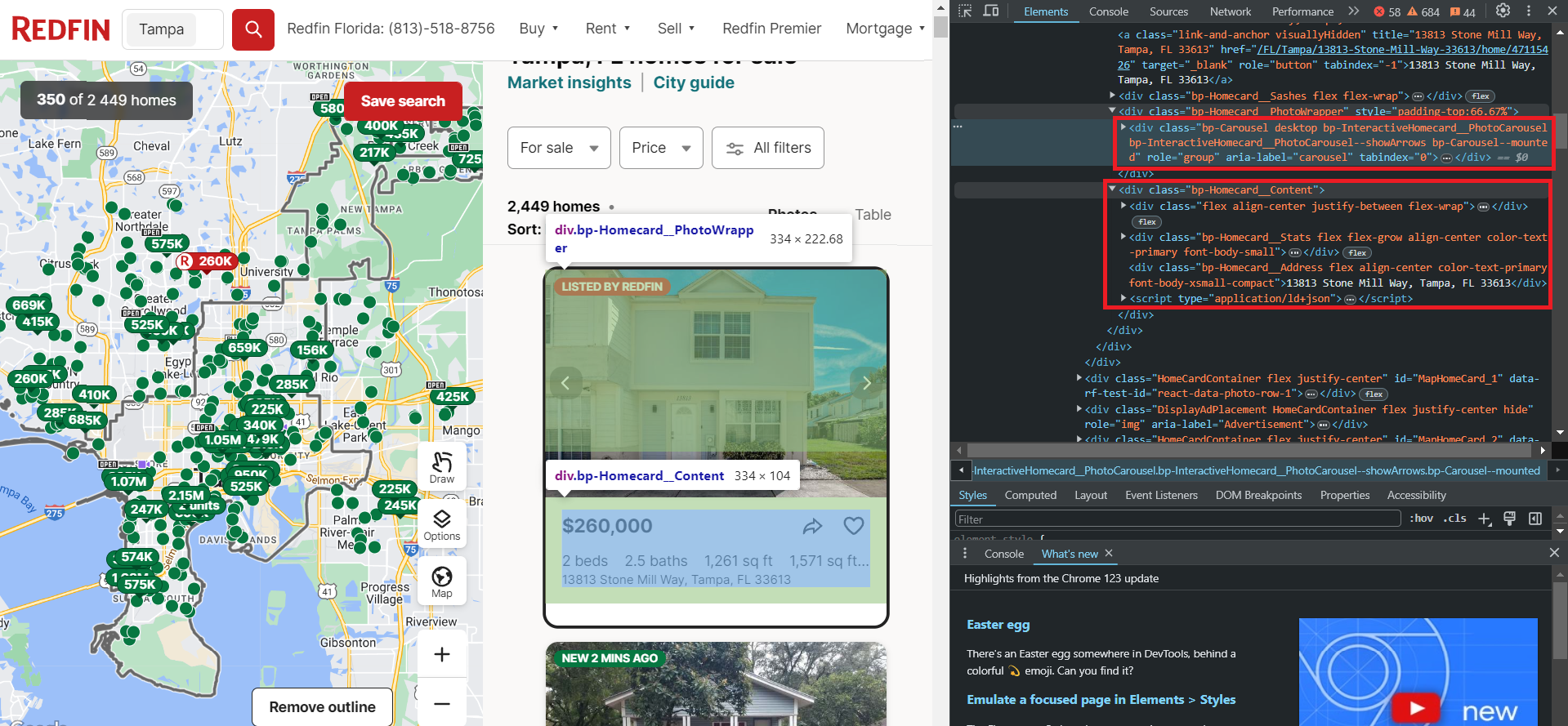

As you can see, the data is stored in two blocks. The first block contains the images for the listing, while the second block holds the textual data, including information about the price, number of rooms, amenities, address, and more.

Notice the script tag, which contains all this textual data neatly structured in JSON format. However, to extract this data, you'll need to use libraries that support headless browsers, such as Selenium, Pyppeteer, or Playwright.
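Once the rendered HTML is available (for example, from a headless browser's page source), the JSON inside those script tags can be pulled out with a small parser. The following is a minimal sketch that uses only the standard library. It assumes the scripts are marked with `type="application/ld+json"`, which is the usual convention for schema.org data but is not confirmed here for Redfin; the class name `ScriptJSONExtractor` and the sample HTML are illustrative.

```python
import json
from html.parser import HTMLParser


class ScriptJSONExtractor(HTMLParser):
    """Collect the JSON payload of every <script type="application/ld+json">."""

    def __init__(self):
        super().__init__()
        self._in_json_script = False
        self._buffer = []
        self.records = []

    def handle_starttag(self, tag, attrs):
        # Assumption: the structured data is marked as JSON-LD.
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_script = True
            self._buffer = []

    def handle_data(self, data):
        # Script content may arrive in several chunks, so buffer it.
        if self._in_json_script:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_script:
            self._in_json_script = False
            try:
                self.records.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # skip scripts that do not contain valid JSON


# Illustrative HTML fragment, not copied from Redfin.
html = """
<div class="HomeCardContainer">
  <script type="application/ld+json">
    [{"@type": "SingleFamilyResidence", "name": "13813 Stone Mill Way, Tampa, FL 33613"}]
  </script>
</div>
"""

parser = ScriptJSONExtractor()
parser.feed(html)
print(parser.records)
```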

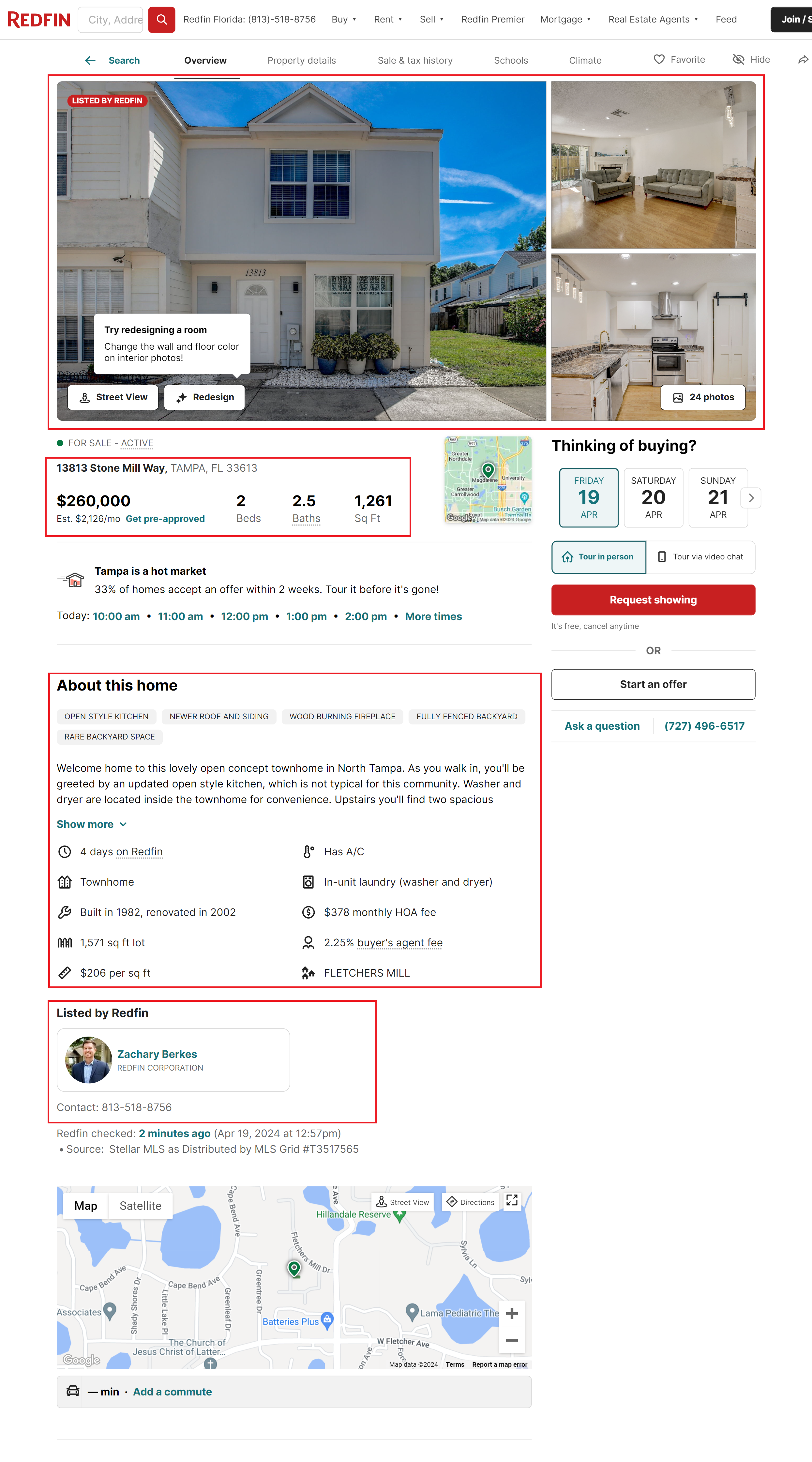

Now, let's move on to the listing page itself. There's more information available here, but scraping it will require navigating through all these pages. This can be quite challenging without using proxies and captcha-solving services, as the more requests you make, the higher the risk of getting blocked.

Key information that can be extracted from this page includes:

- Images. All images are contained within a single container. Typically, each listing has multiple images.

- Basic listing information. This is identical to the information available on the listings page.

- Property details. Additional information about the property, including the year built, description, and more.

- Realtor information. This section provides details about the listing agent, including their contact information and a link to their profile page.

To retrieve all this information, you either need to identify selectors or XPath for each element in all blocks or utilize the ready-made Redfin API that will return pre-formatted data.

Setting Up Your Development Environment

Now, let's set up the necessary libraries to create our own scraper. In this article, we will be using Python version 3.12.2. You can read about how to install Python and how to use a virtual environment in our article on the introduction to scraping with Python. Next, install the required libraries:

    pip install requests beautifulsoup4 selenium

To use Selenium, you may also need to download a webdriver. However, this is no longer necessary for the latest Selenium versions. If you are using an earlier version of the library, you can find all the essential links in the article on scraping with Selenium. You can also choose any other library to work with a headless browser.
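To confirm that the installation worked, the installed versions can be checked from Python itself. This is a small sketch using the standard `importlib.metadata` module; the helper name `installed_versions` is an illustrative choice.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


for name, ver in installed_versions(["requests", "beautifulsoup4", "selenium"]).items():
    print(f"{name}: {ver or 'not installed'}")
```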

Get Data with Requests and BS4

You can view the final version of the script in Google Colaboratory, where you can also run it. Create a file with the extension *.py where we will write our script. Now, let's import the necessary libraries.

    import requests
    from bs4 import BeautifulSoup

Create a variable to store the URL of the listings page:

    url = "https://www.redfin.com/city/18142/FL/Tampa"

Before proceeding, let's test the query and see how the website responds:

    response = requests.get(url)
    print(response.content)

As a result, we will receive a response from the website that contains the following information:

    There seems to be an issue...
    Our usage behavior algorithm thinks you might be a robot.
    Ensure you are accessing Redfin.com according to our terms of usage.
    Tips:
    - If you are using a VPN, pause it while browsing Redfin.com.
    - Make sure you have Javascript enabled in your web browser.
    - Ensure your browser is up to date.

To avoid this issue, let's add a User-Agent header to our request. For more information on what User-Agents are, why they're essential, and a list of the latest User-Agents, please refer to our other article.

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
    }
    response = requests.get(url, headers=headers)

The request is now working properly, and the website is returning property data. Let's verify that the response status code is 200, indicating successful execution, and then parse the result:

    if response.status_code == 200:
        soup = BeautifulSoup(response.content, "html.parser")

Now, let's use the selectors we discussed in the previous section to retrieve the required data:

        # Still inside the `if response.status_code == 200:` block
        homecards = soup.find_all("div", class_="bp-Homecard__Content")
        for card in homecards:
            # Skip cards that do not contain a listing link
            if card.find("a", class_="link-and-anchor"):
                link = card.find("a", class_="link-and-anchor")["href"]
                full_link = "https://www.redfin.com" + link
                price = card.find("span", class_="bp-Homecard__Price--value").text.strip()
                beds = card.find("span", class_="bp-Homecard__Stats--beds").text.strip()
                baths = card.find("span", class_="bp-Homecard__Stats--baths").text.strip()
                address = card.find("div", class_="bp-Homecard__Address").text.strip()

Print them on the screen:

                print("Link:", full_link)
                print("Price:", price)
                print("Beds:", beds)
                print("Baths:", baths)
                print("Address:", address)
                print()

As a result, we get 40 real estate objects with all the data we need in a convenient format.
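Since Redfin may still answer with the "robot" page shown earlier instead of listings, a script can check the response body before parsing it. The helper below is a heuristic sketch: it only looks for phrases quoted from that page, and the name `looks_blocked` and both sample strings are illustrative.

```python
# Phrases taken from the block page quoted earlier in this article.
BLOCK_MARKERS = (
    "thinks you might be a robot",
    "There seems to be an issue",
)


def looks_blocked(html: str) -> bool:
    """Return True if the response body resembles Redfin's block page."""
    lowered = html.lower()
    return any(marker.lower() in lowered for marker in BLOCK_MARKERS)


# Illustrative inputs, not real responses.
blocked_page = (
    "<h1>There seems to be an issue...</h1>"
    "<p>Our usage behavior algorithm thinks you might be a robot.</p>"
)
listing_page = '<div class="bp-Homecard__Content">...</div>'

print(looks_blocked(blocked_page), looks_blocked(listing_page))
```

In the scraper, this could be called as `looks_blocked(response.text)` right after the status code check, skipping the parsing step when it returns `True`.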

To save the listings instead of printing them, create a list at the beginning of the script:

    properties = []

Then, inside the loop, append each listing to it instead of calling print():

                properties.append({
                    "Price": price,
                    "Beds": beds,
                    "Baths": baths,
                    "Address": address,
                    "Link": full_link
                })

The next step depends on the format in which you want to save the data. We will discuss this in more detail in the section on data processing and saving so as not to repeat ourselves.
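The values collected this way are plain strings, so they usually need to be converted to numbers before any analysis. The sketch below assumes the text looks like `$450,000`, `3 beds`, or `2.5 baths`; the exact formats on Redfin may differ, and `parse_number` is a hypothetical helper name.

```python
import re


def parse_number(text):
    """Extract the first number from text such as "$450,000" or "2.5 baths".

    Returns a float, or None when the text contains no digits.
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text or "")
    if not match:
        return None
    # Drop thousands separators before converting.
    return float(match.group().replace(",", ""))


# Assumed example strings, not actual scraped values.
for raw in ["$450,000", "3 beds", "2.5 baths", "N/A"]:
    print(repr(raw), "->", parse_number(raw))
```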

Handling Dynamic Content with Selenium

Let's do the same thing using Selenium. We've also uploaded this script to the Colab Research page, but you can only run it on your own PC, as Google Colaboratory doesn't allow running headless browsers.

This time, instead of using selectors and selecting all the elements individually, let's use the JSON that we saw earlier and combine them all into one file. You can also remove unnecessary attributes or create your own JSON with your own structure, using only the necessary attributes.
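As one way to keep only the necessary attributes, each record can be reduced to a flat dictionary. This sketch assumes the records are schema.org objects shaped like the sample output shown later in this section (`name`, `url`, `address`, `geo`, `floorSize`); the function name `simplify_record` and the chosen output keys are illustrative.

```python
def simplify_record(record: dict) -> dict:
    """Keep a handful of fields from one schema.org listing record."""
    address = record.get("address") or {}
    geo = record.get("geo") or {}
    floor = record.get("floorSize") or {}
    return {
        "name": record.get("name"),
        "url": record.get("url"),
        "city": address.get("addressLocality"),
        "state": address.get("addressRegion"),
        "zip": address.get("postalCode"),
        "latitude": geo.get("latitude"),
        "longitude": geo.get("longitude"),
        "rooms": record.get("numberOfRooms"),
        "floor_size": floor.get("value"),
        "type": record.get("@type"),
    }


# Record copied from the sample output in this section.
record = {
    "@context": "http://schema.org",
    "name": "13813 Stone Mill Way, Tampa, FL 33613",
    "url": "https://www.redfin.com/FL/Tampa/13813-Stone-Mill-Way-33613/home/47115426",
    "address": {
        "@type": "PostalAddress",
        "streetAddress": "13813 Stone Mill Way",
        "addressLocality": "Tampa",
        "addressRegion": "FL",
        "postalCode": "33613",
        "addressCountry": "US",
    },
    "geo": {"@type": "GeoCoordinates", "latitude": 28.0712008, "longitude": -82.4771742},
    "numberOfRooms": 2,
    "floorSize": {"@type": "QuantitativeValue", "value": 1261, "unitCode": "FTK"},
    "@type": "SingleFamilyResidence",
}

print(simplify_record(record))
```

Missing fields come back as `None` rather than raising an error, which keeps the loop running when a card carries less data.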

Let's create a new file and import all the necessary Selenium modules, as well as the library for JSON processing.

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC
    import json

Now let's set the Selenium parameters, specify the path to the webdriver, and enable headless mode:

    # Raw string so the backslashes in the Windows path are not treated as escapes
    chrome_driver_path = r"C:\driver\chromedriver.exe"
    chrome_options = Options()
    chrome_options.add_argument("--headless")
    service = Service(chrome_driver_path)
    service.start()
    driver = webdriver.Remote(service.service_url, options=chrome_options)

Provide a link to the listings page:

    url = "https://www.redfin.com/city/18142/FL/Tampa"

Open the listings page inside a try block so that the browser is always closed at the end, even if an error occurs:

    try:
        driver.get(url)
        # Wait up to 10 seconds for the listing cards to appear
        WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CLASS_NAME, "HomeCardContainer")))
        homecards = driver.find_elements(By.CLASS_NAME, "bp-Homecard__Content")
        properties = []

Iterate over all property cards and collect the JSON:

        for card in homecards:
            script_element = card.find_element(By.TAG_NAME, "script")
            json_data = json.loads(script_element.get_attribute("innerHTML"))
            properties.extend(json_data)

Next, you can either continue processing the JSON object or save it. Finally, shut down the web driver:

    finally:
        driver.quit()

This is an example of the JSON that we will obtain as a result:

    [
      {
        "@context": "http://schema.org",
        "name": "13813 Stone Mill Way, Tampa, FL 33613",
        "url": "https://www.redfin.com/FL/Tampa/13813-Stone-Mill-Way-33613/home/47115426",
        "address": {
          "@type": "PostalAddress",
          "streetAddress": "13813 Stone Mill Way",
          "addressLocality": "Tampa",
          "addressRegion": "FL",
          "postalCode": "33613",
          "addressCountry": "US"
        },
        "geo": {
          "@type": "GeoCoordinates",
          "latitude": 28.0712008,
          "longitude": -82.4771742
        },
        "numberOfRooms": 2,
        "floorSize": {
          "@type": "QuantitativeValue",
          "value": 1261,
          "unitCode": "FTK"
        },
        "@type": "SingleFamilyResidence"
      },
      …
    ]

This approach allows you to get more complete data in a simpler way. In addition, using a headless browser can reduce the risk of being blocked, as it allows you to better simulate the behavior of a real user.

However, it is worth remembering that if you scrape a large amount of data, the site may still block you. To avoid this, connect a proxy to your script. We have already discussed how to do this in a separate article dedicated to proxies.
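As a rough illustration of what connecting a proxy involves, the sketch below builds a proxy URL and shows where it would be passed to Requests and to Chrome. The host `proxy.example.com`, the port, and the helper name `build_proxy_url` are placeholders, not a real proxy service.

```python
from urllib.parse import quote


def build_proxy_url(host, port, username=None, password=None, scheme="http"):
    """Build a proxy URL such as http://user:pass@host:port."""
    auth = ""
    if username and password:
        # Percent-encode credentials so characters like "@" or ":" stay valid.
        auth = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    return f"{scheme}://{auth}{host}:{port}"


# Placeholder proxy address for illustration only.
proxy_url = build_proxy_url("proxy.example.com", 8080)

# For Requests: pass this mapping as requests.get(url, headers=headers, proxies=proxies).
proxies = {"http": proxy_url, "https": proxy_url}

# For Selenium with Chrome: chrome_options.add_argument(chrome_flag).
# As far as we know, Chrome ignores credentials embedded in this flag, so
# authenticated proxies usually need a different setup.
chrome_flag = f"--proxy-server={proxy_url}"

print(proxies)
print(chrome_flag)
```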

Method 3: Scrape Redfin using API

Now, let's consider the simplest option for obtaining the necessary data. This option does not require connecting proxies or captcha-solving services and returns the most complete data in a convenient JSON response format. To do this, we will use HasData's Redfin API; the full details are in the documentation.

Scrape Listings using Redfin API

Since this API has two endpoints, let's consider both, starting with the one that returns listings, as we have already collected this data using Python libraries. You can also find the ready-made script in Google Colaboratory.

Import the necessary libraries:

    import requests
    import json

Then, set your HasData API key:

    api_key = "YOUR-API-KEY"

Set parameters:

    params = {
        "keyword": "33321",
        "type": "forSale"
    }

In addition to the ZIP code and property type, you can also specify the number of pages. Then provide the endpoint itself:

    url = "https://api.hasdata.com/scrape/redfin/listing"

Set headers and request the API:

    headers = {
        "x-api-key": api_key
    }
    response = requests.get(url, params=params, headers=headers)

Next, we will retrieve the data, and if the response code is 200, we can either further process the received data or save it:

    if response.status_code == 200:
        # The listings are stored under the "properties" key of the response
        properties = response.json().get("properties", [])
        if properties:
            # Here you can process or save properties
            print("Listings found:", len(properties))
        else:
            print("No listings found.")
    else:
        print("Failed to retrieve listings. Status code:", response.status_code)

As a result, we will get a response where the data is stored in the following format (most of the data has been removed for clarity):

    {
      "requestMetadata": {
        "id": "da2f6f99-d6cf-442d-b388-7d5a643e8042",
        "status": "ok",
        "url": "https://redfin.com/zipcode/33321"
      },
      "searchInformation": {
        "totalResults": 350
      },
      "properties": [
        {
          "id": 186541913,
          "mlsId": "F10430049",
          "propertyId": 41970890,
          "url": "https://www.redfin.com/FL/Tamarac/7952-Exeter-Blvd-W-33321/unit-101/home/41970890",
          "price": 397900,
          "address": {
            "street": "7952 W Exeter Blvd W #101",
            "city": "Tamarac",
            "state": "FL",
            "zipcode": "33321"
          },
          "propertyType": "Condo",
          "beds": 2,
          "baths": 2,
          "area": 1692,
          "latitude": 26.2248143,
          "longitude": -80.2916508,
          "description": "Welcome Home to this rarely available & highly sought-after villa in the active 55+ community of Kings Point, Tamarac! This beautiful and spacious villa features volume ceilings…",
          "photos": [
            "https://ssl.cdn-redfin.com/photo/107/islphoto/049/genIslnoResize.F10430049_0.jpg",
            "https://ssl.cdn-redfin.com/photo/107/islphoto/049/genIslnoResize.F10430049_1_1.jpg"
          ]
        },
        …
      ]
    }

As you can see, using an API to retrieve data is much easier and faster. Additionally, this approach allows you to get all the possible data from the page without having to extract it manually.
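To show how a response in this format might be consumed, here is a short sketch that pulls out the request status, the total number of results, and the price range of the returned listings. It relies only on the keys visible in the sample above; `summarize_response` is a hypothetical helper, and the sample dictionary is a trimmed copy of that response.

```python
def summarize_response(data: dict) -> dict:
    """Summarize a listings response shaped like the sample above."""
    meta = data.get("requestMetadata") or {}
    info = data.get("searchInformation") or {}
    listings = data.get("properties") or []
    # Ignore listings whose price is missing or not numeric.
    prices = [p["price"] for p in listings if isinstance(p.get("price"), (int, float))]
    return {
        "status": meta.get("status"),
        "total_results": info.get("totalResults"),
        "returned": len(listings),
        "min_price": min(prices) if prices else None,
        "max_price": max(prices) if prices else None,
    }


# Trimmed copy of the sample response.
sample_response = {
    "requestMetadata": {"status": "ok", "url": "https://redfin.com/zipcode/33321"},
    "searchInformation": {"totalResults": 350},
    "properties": [
        {"id": 186541913, "price": 397900, "beds": 2, "baths": 2, "area": 1692},
    ],
}

print(summarize_response(sample_response))
```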

Scrape Properties using Redfin API

Now, let's collect data using another endpoint (result in Google Colaboratory) that allows you to retrieve data from the page of a specific property. This can be useful if, for example, you have a list of listings and you need to collect data from all of them quickly.

The entire script will be very similar to the previous one. Only the request parameters and the endpoint itself will change:

    url = "https://api.hasdata.com/scrape/redfin/property"
    params = {
        "url": "https://www.redfin.com/IL/Chicago/1322-S-Prairie-Ave-60605/unit-1106/home/12694628"
    }

The rest of the script remains the same. The API will return all available data for the specified property.
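For the case of a whole list of listing URLs, the same request can be wrapped in a loop. The sketch below is one possible shape, not HasData's official client: `fetch_properties` is a hypothetical helper that takes the HTTP function as a parameter (in practice `requests.get`), and the demo uses a stand-in function with a made-up payload so it runs offline.

```python
import time

PROPERTY_ENDPOINT = "https://api.hasdata.com/scrape/redfin/property"


def fetch_properties(listing_urls, api_key, get, delay=1.0, sleep=time.sleep):
    """Call the property endpoint once per listing URL.

    `get` is any callable with the signature of requests.get.
    Returns (results, failed), where failed holds (url, status_code) pairs.
    """
    headers = {"x-api-key": api_key}
    results, failed = [], []
    for index, listing_url in enumerate(listing_urls):
        if index:  # pause between calls, but not before the first one
            sleep(delay)
        response = get(PROPERTY_ENDPOINT, params={"url": listing_url}, headers=headers)
        if response.status_code == 200:
            results.append(response.json())
        else:
            failed.append((listing_url, response.status_code))
    return results, failed


# --- Offline demo: stand-ins for requests.get and its response object ---
class FakeResponse:
    def __init__(self, status_code, payload):
        self.status_code = status_code
        self._payload = payload

    def json(self):
        return self._payload


def fake_get(url, params=None, headers=None):
    # Pretend only the Chicago listing exists; the payload is NOT the real API schema.
    found = params["url"].endswith("/home/12694628")
    return FakeResponse(200 if found else 404, {"echoedUrl": params["url"]})


urls = [
    "https://www.redfin.com/IL/Chicago/1322-S-Prairie-Ave-60605/unit-1106/home/12694628",
    "https://www.redfin.com/not/a/real/listing",
]
results, failed = fetch_properties(urls, "YOUR-API-KEY", fake_get, sleep=lambda seconds: None)
print(len(results), "fetched; failed:", failed)
```

In a real script, the call would look like `fetch_properties(urls, api_key, requests.get)`, with the default one-second pause between requests.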

Data Processing and Storage

To save data, we'll need an additional library, depending on the format. Let's save our data in two of the most popular formats: JSON and CSV. To do this, we'll import additional libraries into the project:

    import json
    import csv

Next, we will save the data from the previously created variable to the corresponding files:

    with open("properties.json", "w") as json_file:
        json.dump(properties, json_file, indent=4)

    keys = properties[0].keys()
    with open("properties.csv", "w", newline="", encoding="utf-8") as csvfile:
        writer = csv.DictWriter(csvfile, fieldnames=keys)
        writer.writeheader()
        writer.writerows(properties)

This method allows us to preserve all the essential data. If you want to streamline the data-saving process or save it in a different format, you can utilize the Pandas library.
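Note that `properties[0].keys()` assumes every record has the same flat set of keys, while the API responses contain nested objects such as `address` and lists such as `photos`. One possible way to handle that is to flatten each record before writing, as in the sketch below; the helper names `flatten` and `write_csv`, the dotted column names, and the sample records are all illustrative choices.

```python
import csv
import io


def flatten(record, parent_key="", sep="."):
    """Turn nested dicts into one level, e.g. {"address": {"city": ...}} -> "address.city"."""
    flat = {}
    for key, value in record.items():
        full_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, full_key, sep))
        elif isinstance(value, list):
            flat[full_key] = "; ".join(map(str, value))  # lists become one cell
        else:
            flat[full_key] = value
    return flat


def write_csv(records, fileobj):
    """Write records to fileobj as CSV and return the column names used."""
    rows = [flatten(r) for r in records]
    # Union of all keys, in first-seen order, so rows with extra fields still fit.
    fieldnames = list(dict.fromkeys(k for row in rows for k in row))
    writer = csv.DictWriter(fileobj, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return fieldnames


# Made-up records with nested and uneven fields.
records = [
    {"price": 397900, "address": {"city": "Tamarac", "zipcode": "33321"}, "photos": ["a.jpg", "b.jpg"]},
    {"price": 250000, "address": {"city": "Tampa", "zipcode": "33613"}, "beds": 2},
]

buffer = io.StringIO()
columns = write_csv(records, buffer)
print(buffer.getvalue())
```

To write to disk instead, pass a file opened with `open("properties.csv", "w", newline="", encoding="utf-8")` in place of the `StringIO` buffer.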

Conclusion

In this article, we have explored the most popular methods for obtaining real estate data from Redfin and discussed various technologies and tools, such as BeautifulSoup and Selenium, that extract information from websites. As a result, you can easily obtain data on prices, addresses, the number of bedrooms and bathrooms, and other property characteristics. You can also check out our tutorial on scraping Zillow using Python to get more real estate data.

All of the scripts discussed have been uploaded to Google Colaboratory so that you can easily access and run them without the need to install Python on your PC (with the exception of the example using a headless browser, which cannot be run from the cloud). This approach allows you to take advantage of all of the scripts discussed, even if you are not very familiar with programming.

In addition, we have provided an example of how to obtain data using a no-code scraper, which requires no programming skills. If you want to obtain data using HasData's Redfin API but do not want to write a script, you can use our API Playground.