Python is one of the most common languages for writing web scrapers. There are many libraries, frameworks, and utilities for it, from simple ones, like the Requests library or PycURL, to more feature-rich and advanced ones, such as Selenium or Pyppeteer (a Python port of Puppeteer).

Selenium is among the most popular of these tools. Unlike Requests, it drives a real browser, so it can scrape both static web pages and dynamically loaded content. You can then save the received data in a convenient format, such as a CSV file.

Prerequisites

To scrape website data using Selenium, you need four things: Python, Selenium, a web driver, and a browser. In this tutorial, we will use Python 3.11. If you don't have it or are still using Python 2, download the latest version of the Python interpreter. To install Selenium, use pip in the command prompt:

pip install selenium

Selenium supports almost all popular browsers. To use them, you need the appropriate web driver, which must match the version of the installed browser. The most commonly used web browsers are Chrome, Firefox, and Edge.

Instead of downloading a driver manually, you can install it with webdriver-manager, which automatically downloads and installs the required WebDriver. You can install and use webdriver-manager as a regular Python library:

pip install webdriver-manager

This is the easiest option for automating web browsers because you do not have to worry about version matching or finding the right web driver.

Set Up the Web Driver

Before interacting with web pages and elements, let's properly configure the web driver window and set all required options and arguments.

Customize Window Size

Selenium provides a set of properties and methods to control a browser window. You can use these to change the window's size, position, and fullscreen state. For example, you can use the set_window_size() method to change the window's size. This method takes two arguments: the window's width and height.

driver.set_window_size(800, 600)

To maximize the window, you can use the maximize_window() method.

driver.maximize_window()

To minimize the window, you can use the minimize_window() method.

driver.minimize_window()

You can use the set_window_position() method to position the window. This method takes two arguments: the window's x and y coordinates.

driver.set_window_position(100, 100)

You can use the fullscreen_window() method to put the window in fullscreen mode.

driver.fullscreen_window()

If you want to get the size of the current window, not change it, you can use the following method:

driver.get_window_size()

The specific settings you use will depend on your needs. You can use these methods to control the browser window's behavior as needed.

Use Headless Mode

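A headless browser is a web browser without a graphical user interface (GUI). Instead of being operated through a window, it is controlled programmatically, for example from a script or the command line.

As a rule, this approach is used so that an open browser window does not interfere with the scraping process and does not waste PC resources. In headless mode, the browser skips drawing its GUI and runs silently in the background, which usually lowers resource consumption noticeably, although the exact savings depend on the pages you load.

To use headless mode, add the following option before creating the driver:

options = webdriver.ChromeOptions()
options.add_argument("--headless=new")  # on Chrome versions older than 109, use "--headless"
driver = webdriver.Chrome(options=options)

The older options.headless = True attribute is deprecated in recent Selenium 4 releases. After that, the browser window will not open, and scraping data will occur in the background.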
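The window and headless settings described above can be gathered in one place. The following is a minimal sketch rather than an official recipe: `chrome_arguments` and `build_driver` are hypothetical helper names, and the code assumes Selenium 4 with a Chrome version recent enough to accept `--headless=new`.

```python
def chrome_arguments(headless=True, width=1920, height=1080):
    """Return Chrome command-line switches for a scraping session."""
    arguments = [f"--window-size={width},{height}"]
    if headless:
        # "--headless=new" selects Chrome's newer headless implementation
        arguments.append("--headless=new")
    return arguments


def build_driver(arguments):
    """Create a Chrome driver with the given switches (needs Selenium and Chrome)."""
    # Imported here so chrome_arguments() can be used without a browser installed
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for argument in arguments:
        options.add_argument(argument)
    return webdriver.Chrome(options=options)
```

Calling `build_driver(chrome_arguments())` would start a headless session with a 1920x1080 window; passing `headless=False` is handy while debugging, because you can watch what the browser does.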

Add User-Agents and Delays

Getting around anti-scraping protections with Selenium in Python can be a complex task. Websites use various mechanisms to detect and block scrapers automatically. To avoid this, you need a comprehensive approach that hides the scraper's behavior as much as possible and makes it look like the behavior of a real user.

First, websites often check the user agent string in the HTTP request headers to identify automated bots. You can set a custom user agent to mimic a real browser and reduce the chances of detection. For example:

options = webdriver.ChromeOptions()
options.add_argument("user-agent=Mozilla/5.0 (X11; CrOS x86_64 8172.45.0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.64 Safari/537.36")
driver = webdriver.Chrome(options=options)

Also, you can implement delays between requests to mimic human behavior. You can use time.sleep() to pause execution for a specified duration. This can help avoid triggering rate-limiting mechanisms on the target website.

import time

time.sleep(2)

To make the scraper's behavior more human-like, you can maintain session consistency by managing cookies and sessions effectively. Store and reuse cookies to avoid constant logins and maintain session information between requests.
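The delay and cookie advice above could be sketched as follows. The helper names `human_pause`, `save_cookies`, and `load_cookies` are hypothetical; the only Selenium calls used are `driver.get_cookies()` and `driver.add_cookie()`, and storing the cookies in a JSON file is just one possible choice.

```python
import json
import random
import time


def human_pause(min_seconds=1.0, max_seconds=3.0):
    """Sleep for a random duration so requests are not evenly spaced."""
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay


def save_cookies(driver, path):
    """Write the current session cookies to a JSON file."""
    with open(path, "w", encoding="utf-8") as file:
        json.dump(driver.get_cookies(), file)


def load_cookies(driver, path):
    """Add previously saved cookies to the current session.

    The browser should already be on a page of the cookies' domain,
    otherwise the driver rejects them.
    """
    with open(path, encoding="utf-8") as file:
        for cookie in json.load(file):
            driver.add_cookie(cookie)
```

A typical flow would be to log in once, call `save_cookies(driver, "cookies.json")`, and on later runs open the site, call `load_cookies(driver, "cookies.json")`, and refresh the page.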

Add Proxies with Selenium Wire

It is important to use a proxy when scraping web pages for several reasons:

- A proxy helps you get around limits on the number of requests a site accepts from one IP address.

- Without a proxy, a large number of requests from a single address may be mistaken for a DDoS attack and blocked.

- Without a proxy, your online activity is less secure and private.

Unfortunately, Selenium itself has limited proxy support. As a driver option, you can only add a proxy without authentication:

proxy = "12.345.67.890:1234"
options.add_argument("--proxy-server=%s" % proxy)

For a proxy that requires authentication, you can use Selenium Wire, a separate package that extends Selenium:

pip install selenium-wire

After that, you can pass the proxy credentials through the Selenium Wire options:

from seleniumwire import webdriver

options = {
    "proxy": {
        "http": f"http://{proxy_username}:{proxy_password}@{proxy_url}:{proxy_port}",
    },
    "verify_ssl": False,  # a top-level Selenium Wire option, not part of "proxy"
}
driver = webdriver.Chrome(seleniumwire_options=options)

This article will not discuss where to find free or paid proxy providers, what proxies are, or how to use them with Python. However, you can find all of this information in our other articles.
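Returning to the authenticated proxy setup, the options dictionary can be built by a small function. This is a sketch under assumptions: `build_proxy_options` is a hypothetical helper, and it assumes the same HTTP proxy endpoint also carries HTTPS traffic. Credentials are percent-encoded, since characters such as `@` or `:` would otherwise break the proxy URL.

```python
from urllib.parse import quote


def build_proxy_options(username, password, host, port):
    """Build a Selenium Wire options dict for an authenticated HTTP proxy."""
    # Percent-encode credentials so "@" or ":" inside them do not break the URL
    credentials = f"{quote(username, safe='')}:{quote(password, safe='')}"
    proxy_url = f"http://{credentials}@{host}:{port}"
    return {
        "proxy": {
            "http": proxy_url,
            # Assumption: the same proxy endpoint also handles HTTPS traffic
            "https": proxy_url,
            # Hosts that should bypass the proxy
            "no_proxy": "localhost,127.0.0.1",
        }
    }
```

The result would be passed as `seleniumwire_options=` when creating the driver from `seleniumwire.webdriver`.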

Block Images and JavaScript

Blocking images and JavaScript can be useful for enhancing Selenium web scraping performance. When you access a website using Selenium for scraping purposes, you may not need to load and render all the resources, such as images and JavaScript, as these elements can significantly slow down the process.

Images are one of the most resource-intensive elements on web pages. When scraping data, especially text-based data, you can disable image loading to speed up the process. Recent Chrome versions generally ignore the older --disable-images flag, so a more dependable way to configure the Chrome WebDriver is the Blink setting:

options.add_argument("--blink-settings=imagesEnabled=false")

Disabling images can significantly reduce the time it takes to load web pages. JavaScript can be disabled as well. The --disable-javascript flag is likewise not honored by current Chrome builds, so use a content-settings preference instead:

options.add_experimental_option("prefs", {"profile.managed_default_content_settings.javascript": 2})

This approach is more extreme and might only suit some websites, as many rely on JavaScript for core functionality.

Interact with Web Pages

We will use the Chrome WebDriver (ChromeDriver) in this example because Chrome is the most popular browser. For this example, we will download the necessary version manually and place it on drive C. As mentioned earlier, you can also use the webdriver-manager library instead of a manual download.

Go to the Page

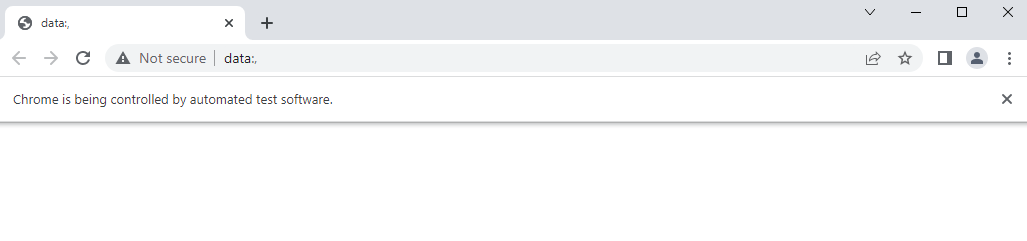

Before we explore all the capabilities of Selenium, let's launch a browser window simply. To do this, we just need to import the library into the script, specify the path to the web driver file, and run the launch command:

from selenium import webdriver

DRIVER_PATH = 'C:\chromedriver.exe' #or any other path of webdriver

driver = webdriver.Chrome(executable_path=DRIVER_PATH)If all is correct, you will see the chrome window:

Afterward, you can navigate to the desired page or take the necessary actions.

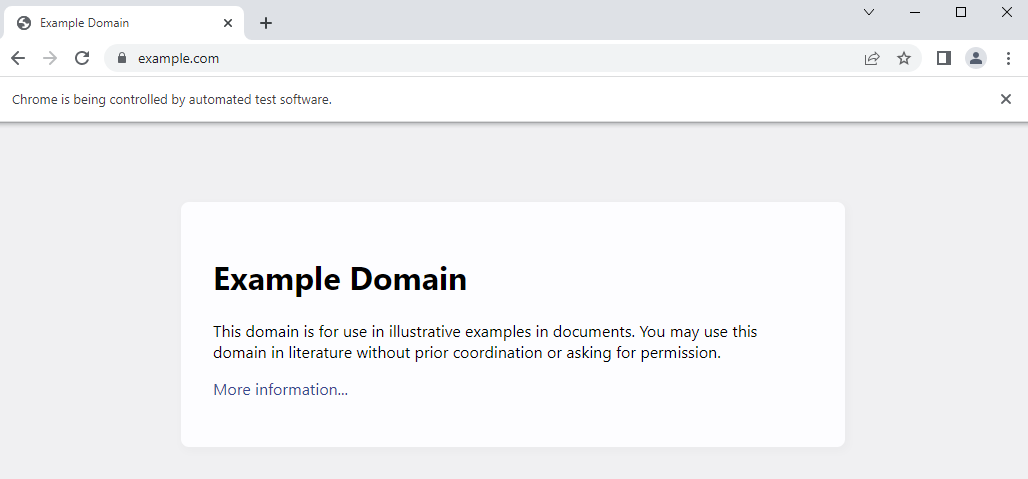

driver.get('https://example.com/')As a result, you will see something like that:

Process Data on the Page

With Selenium, you can extract both individual elements from a page and information about the page itself. For example, you can get the URL of the current page:

url = driver.current_url
print(f"URL: {url}")

Or its title:

title = driver.title
print(f"Title: {title}")

Of course, you can also get the HTML code for the entire page for further processing or print it on the screen:

page = driver.page_source
print(f"Page source: {page}")

To close the browser session with all tabs and complete the script execution, use this method:

driver.quit()

Selenium is a powerful tool that can be used for a variety of purposes, including web scraping. It is easy to learn and use, and it supports both XPath and CSS selectors, making it very flexible.
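The page properties and the quit() call shown above are often combined so that the browser is closed even when something goes wrong. A minimal sketch, with `collect_page_info` as a hypothetical helper name:

```python
def collect_page_info(driver, url):
    """Open url, return basic facts about the page, and always quit the browser."""
    try:
        driver.get(url)
        return {
            "url": driver.current_url,
            "title": driver.title,
            "html_length": len(driver.page_source),
        }
    finally:
        # Runs even if get() raises, so no browser process is left behind
        driver.quit()
```

Wrapping the work in try/finally is a design choice: a scraper that crashes halfway through would otherwise leave orphaned browser processes running.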

Interact with Tabs

You can use Selenium to open a new tab or window and navigate to a different URL. Simulating keyboard shortcuts like Ctrl+T is unreliable, because modern browsers do not pass them on from the page, so Selenium 4 provides a dedicated method that opens a new tab and switches to it:

driver.switch_to.new_window('tab')
driver.get('https://example.com/')

To switch between tabs that are already open, use their handles:

driver.switch_to.window(driver.window_handles[1])

To close the active tab without closing the browser, use this method:

driver.close()

This closes only the current tab as long as other tabs are open; afterward, switch to one of the remaining handles before sending further commands. If the current tab is the only one, the browser will also be closed.
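The tab workflow described above (open, use, close, return) can be wrapped in one function. This sketch assumes Selenium 4, where `driver.switch_to.new_window("tab")` is available; `read_title_in_new_tab` is a hypothetical helper name.

```python
def read_title_in_new_tab(driver, url):
    """Open url in a new tab, return its title, then go back to the original tab."""
    original_handle = driver.current_window_handle
    # Selenium 4: opens a tab and moves the driver's focus to it
    driver.switch_to.new_window("tab")
    try:
        driver.get(url)
        return driver.title
    finally:
        driver.close()  # closes only the new tab
        # After close() the driver has no focused tab, so switch back explicitly
        driver.switch_to.window(original_handle)
```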

Interact with Elements

Selenium is a framework that was originally developed for testing. As a result, it has a wider range of features and functionality than a typical scraping library. Here are some tips for improving the functionality of a scraper using Selenium.

Find Elements

To get the content of an element or elements, you first need to locate it. Selenium provides a variety of locators to help you do this. In Selenium 4, they are passed to find_element() and find_elements() through the By class (the older find_element_by_* helpers were removed in Selenium 4.3). The most common way is to use CSS selectors:

from selenium.webdriver.common.by import By

element = driver.find_element(By.CSS_SELECTOR, "#price")

You can also use XPath:

element = driver.find_element(By.XPATH, "/html/body/div/p")

In addition, Selenium supports other popular locators, such as tags, IDs, and element names:

element = driver.find_element(By.NAME, "element_name")
element = driver.find_element(By.ID, "element_id")
elements = driver.find_elements(By.TAG_NAME, "a")

If you need to work with links, they have separate locators. You can use this method to locate anchor elements by their link text:

element = driver.find_element(By.LINK_TEXT, "Link Text")

Or, by partial link text:

element = driver.find_element(By.PARTIAL_LINK_TEXT, "Partial Link")

To scrape all links from a page, you can use a combination of Selenium's element location methods and a loop to iterate through all the links on the page:

all_links = driver.find_elements(By.TAG_NAME, "a")
for link in all_links:
    print(link.get_attribute("href"))

As you can see, Selenium supports enough locators to find any element anywhere on a page. You can also chain locators, for example by finding a parent element and then searching for child elements within it.
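Instead of printing the collected links, you may want to store them in a CSV file. The sketch below uses only the standard library and works on a plain list of href strings, so it does not depend on a running browser; `save_links_to_csv` is a hypothetical helper name and the single `url` column is an arbitrary choice.

```python
import csv


def save_links_to_csv(hrefs, path):
    """Write unique, non-empty link targets to a one-column CSV file."""
    seen = set()
    with open(path, "w", newline="", encoding="utf-8") as file:
        writer = csv.writer(file)
        writer.writerow(["url"])
        for href in hrefs:
            # get_attribute("href") returns None for anchors without an href
            if href and href not in seen:
                seen.add(href)
                writer.writerow([href])
    return len(seen)
```

With the loop from the text, the call could look like `save_links_to_csv([link.get_attribute("href") for link in all_links], "links.csv")`.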

Wait for an Element

Waiting for an element to be present is a crucial concept in web automation using Selenium. Often, web pages are dynamic, and elements may only appear after some time. You need to implement waiting strategies to ensure your automation scripts work reliably.

When working with Selenium, you have two options: an implicit wait, which tells the driver to keep looking for any requested element for up to a specified amount of time before giving up, or an explicit wait, which waits for a specific element or condition. The right option for you will depend on the goals of your project and the specific website you are scraping. To set an implicit wait, use the following method:

driver.implicitly_wait(10)

To wait for a specific element to load on a page, use a different approach. First, import additional modules:

from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.common.by import By

Then, wait up to 10 seconds for the element you need. Selenium checks for it repeatedly (every 0.5 seconds by default) until it appears:

wait = WebDriverWait(driver, 10)
element = wait.until(EC.presence_of_element_located((By.ID, "element_id")))

If the element is found within that time, it is assigned to the element variable and your script proceeds; otherwise, a TimeoutException is raised. Explicit waits are more targeted and can check specific conditions, such as an element being visible or clickable.
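To make the idea behind an explicit wait more concrete, here is a simplified, standard-library stand-in for the polling it performs. It is an illustration of the concept only, not Selenium's actual implementation (for example, the real WebDriverWait also ignores "element not found" errors while polling); `wait_until` is a hypothetical name.

```python
import time


def wait_until(condition, timeout=10.0, poll_frequency=0.5):
    """Call condition() repeatedly until it returns a truthy value or time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_frequency)
```

In real scraping code you would keep using `WebDriverWait(driver, timeout, poll_frequency=...)`, which follows the same pattern: check, sleep briefly, and give up once the timeout has passed.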

Click on Link

Clicking on elements is a fundamental interaction in web automation using Selenium. You can use Selenium to locate and click on various web page elements, including links, buttons, checkboxes, and more.

If you want to click on a specific link (anchor element) on a web page, you can use Selenium to locate the link and then use the click() method to interact with it:

# By is imported from selenium.webdriver.common.by
link = driver.find_element(By.XPATH, "//a[@href]")
link.click()

Click on Button

You can also use this method to interact with various types of web page elements, including buttons, radio buttons, checkboxes, and more:

button = driver.find_element(By.ID, "button_id")
button.click()

radio_button = driver.find_element(By.NAME, "radio_button_name")
radio_button.click()

You can use element clicks to submit forms, send data, or navigate through multi-step processes. The ability to click elements allows you to simulate user interactions, making it ideal for web scraping tasks.

Fill Forms

Almost every site has forms, whether a filter or an authentication form. A web form contains input fields, checkboxes, radio buttons, search forms, links, dropdown menus, and submit buttons to collect user data.

First, to read data from a form or fill it in, you need to locate the form field and then enter text into it or select one of the suggested values.

The most common form element is the input field, a text field that stores data entered by the user. To work with it, you need to know how to enter text (the send_keys() method), remove it (the clear() method), and read the current text (the get_attribute() method). For example:

# By is imported from selenium.webdriver.common.by
driver.find_element(By.ID, 'here_id_name').send_keys("Test")
driver.find_element(By.ID, 'here_id_name').clear()
nameTest = driver.find_element(By.ID, 'here_id_name').get_attribute("value")

Select a Checkbox

A checkbox is a small box with two states: selected or deselected. Before clicking a checkbox, you need to know its current state, because every click toggles it to the opposite.

The is_selected() method returns a boolean for such a check. The following prints True if the checkbox is already selected or False if not:

acc_boolean = driver.find_element(By.ID, 'acception').is_selected()
print(acc_boolean)

To click a button or checkbox on the website, use the click() method:

driver.find_element(By.ID, 'acception').click()

To submit a form using Selenium, call the submit() method on the form element instead of click().
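Because each click toggles a checkbox, it helps to click only when the current state differs from the one you want. A minimal sketch, where `set_checkbox` is a hypothetical helper that accepts any element offering `is_selected()` and `click()`:

```python
def set_checkbox(checkbox, checked):
    """Click the checkbox only if its state differs from the requested one."""
    if checkbox.is_selected() != checked:
        checkbox.click()
    return checkbox.is_selected()
```

Calling `set_checkbox(element, True)` twice in a row leaves the box selected, whereas two plain `click()` calls would select it and then deselect it again.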

Execute JavaScript

Running JavaScript using Selenium in Python is a powerful feature that allows you to execute custom JavaScript code within the context of a web page. This can be incredibly useful when interacting with web elements, modifying the DOM, or handling complex scenarios that cannot be achieved through standard Selenium commands.

You can execute JavaScript code in Selenium using the execute_script() method provided by the WebDriver instance (usually associated with a browser instance). For example:

result = driver.execute_script("return 2 + 2")

You can use JavaScript to interact with elements not immediately accessible through regular Selenium commands. For example, you can change the CSS properties of an element to make it visible.
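Making a hidden element visible, as mentioned above, might look like the following sketch. It relies on the fact that extra arguments passed to `execute_script()` are available inside the script as `arguments[0]`, `arguments[1]`, and so on; `reveal_element` is a hypothetical helper name, and which CSS properties need overriding depends on the page.

```python
def reveal_element(driver, element):
    """Make a hidden element visible by overriding its CSS from JavaScript."""
    # The element passed after the script is exposed to JavaScript as arguments[0]
    driver.execute_script(
        "arguments[0].style.display = 'block';"
        "arguments[0].style.visibility = 'visible';",
        element,
    )
```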

Scroll Down the Page

You can use Selenium to simulate scrolling down a web page, which is particularly useful when you need to access content that becomes visible only as you scroll. The easiest way to scroll down in Selenium is to use JavaScript:

driver.execute_script("window.scrollTo(0, document.body.scrollHeight)")

Alternatively, you can simulate scrolling by sending the END key (Keys.END) to the body element.

Infinite Scroll

If you want to handle infinite scroll, you can use a loop to keep scrolling and collecting data until no more content is loaded, which you can detect by checking whether the page height has stopped growing (this snippet uses the time module imported earlier):

while True:
    old_height = driver.execute_script("return document.body.scrollHeight")
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight)")
    time.sleep(2)  # wait for new content, then save or process your data here
    if driver.execute_script("return document.body.scrollHeight") == old_height:
        break

Remember that the specific implementation of scrolling may vary depending on the website's structure and behavior, so you may need to adapt your code accordingly.
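A reusable version of the infinite-scroll loop could look like the sketch below. The name `scroll_to_end`, the two-second pause, and the cap of 50 rounds are assumptions chosen for illustration; the cap protects against pages that never stop growing.

```python
import time


def scroll_to_end(driver, pause=2.0, max_rounds=50):
    """Scroll down until the page height stops growing; return the number of scrolls."""
    last_height = driver.execute_script("return document.body.scrollHeight")
    for round_number in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight)")
        time.sleep(pause)  # give newly requested content time to load
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            return round_number  # nothing new appeared, so stop
        last_height = new_height
    return max_rounds  # safety cap for pages that never stop growing
```

Comparing heights is a heuristic: on slow connections a short pause can make the page look finished too early, so the pause may need tuning per site.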

File Operations

Selenium offers several methods for interacting with web pages, including downloading files and capturing screenshots. This section will cover both processes in detail, providing clear and concise instructions.

Download Files

Selenium allows you to automate the process of clicking download links or buttons, specifying the download location, and managing the downloaded files.

To download a file, simply identify the element containing the download link and click on it, as in previous examples. You can optionally specify the directory in which the files will be saved by setting this preference on the options object before creating the driver:

options.add_experimental_option("prefs", {
    "download.default_directory": "/path/to/download/directory"
})

To ensure the download is complete before proceeding, you need to wait for it. Selenium's built-in waits look at page elements rather than files on disk, so the usual approach is to monitor the downloads folder (for example, inside a custom explicit-wait condition) until the file transfer finishes.
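Monitoring the downloads folder can be done with the standard library alone. The sketch below assumes Chrome, which keeps unfinished downloads under a `.crdownload` suffix, and assumes the folder is empty before the download starts; `wait_for_downloads` is a hypothetical helper name.

```python
import time
from pathlib import Path


def wait_for_downloads(directory, timeout=60.0, poll_frequency=0.5):
    """Wait until the folder holds at least one file and no partial Chrome downloads."""
    folder = Path(directory)
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        files = [path for path in folder.iterdir() if path.is_file()]
        # Chrome stores unfinished downloads with a ".crdownload" suffix
        partial = [path for path in files if path.suffix == ".crdownload"]
        if files and not partial:
            return sorted(path.name for path in files)
        time.sleep(poll_frequency)
    raise TimeoutError(f"downloads in {folder} did not finish within {timeout} seconds")
```

You would call it right after clicking the download link, passing the same directory that was set in `download.default_directory`.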

Take Screenshots

Taking screenshots of web pages programmatically with Selenium and Python is a helpful feature for web scraping, testing, and monitoring. Selenium allows you to capture screenshots of the browser window or of specific elements within a page.

You can take a screenshot of the currently visible part of the page with this command (in Chrome, content that requires scrolling is not included):

driver.save_screenshot("page.png")

You can also screenshot a specific element on a web page. For example, to capture a screenshot of a button with a specific CSS selector:

# By is imported from selenium.webdriver.common.by
element = driver.find_element(By.CSS_SELECTOR, "#button")
element.screenshot("element_screenshot.png")

Selenium writes screenshots in PNG format, so use a .png file name; if you need JPEG or BMP, convert the saved file with an image library afterward. So, you can use Selenium to take screenshots for your scraping and monitoring tasks.

Conclusion and Takeaways

Selenium is a popular and powerful Python framework for web scraping. It can be used to extract data from web pages, as well as perform actions on them, such as logging in or navigating between pages. Selenium is also relatively easy to learn and use, compared to other scraping frameworks such as Puppeteer.

Selenium is particularly adept at handling dynamically loaded content on web pages. Unlike other libraries, which may struggle to extract data that appears only after user interactions or AJAX requests, Selenium can easily navigate this terrain. This capability is valuable for modern, data-rich websites that rely on JavaScript to load information.

Selenium is a powerful tool for web scraping and automation. It can handle dynamic data, emulate human interactions, and automate web actions, making it a go-to choice for developers and data professionals.