Scraping Zillow with Python can be useful for several reasons. It helps real estate agents and investors keep up with current market trends, identify potential properties quickly, analyze listing data, and stay informed about local listing activity. Scraping is also an efficient way to find discounted or undervalued properties, which can be good opportunities for people buying for themselves or for a business.

Our article covers the basics of what is needed to scrape data from the website, including utilizing packages like BeautifulSoup and Selenium to get the data you need. Finally, the article explores some tips on how to scrape more effectively from Zillow by avoiding detection or getting blocked due to excessive requests.

How to scrape Zillow property data

There are several options for getting real estate data from Zillow. We will give both no-code options and an example of writing your own scraper. It is also worth noting that Zillow has its own API for data extraction.

Using Zillow API

Zillow presently offers 22 distinct Application Programming Interfaces (APIs). They are designed to provide a range of data, including Listings and Reviews, Property, Rental, and Foreclosure Zestimates.

Access to some API services is charged depending on the level of use. In addition, Zillow moved its data operations to Bridge Interactive, a company that focuses on MLS data and brokerages. To use Bridge, users must get approval before using its endpoints, and this applies even to those who previously used Zillow's API.

To learn more and try out the Zillow API, visit the official API website for developers.

Using no-code Zillow scraper

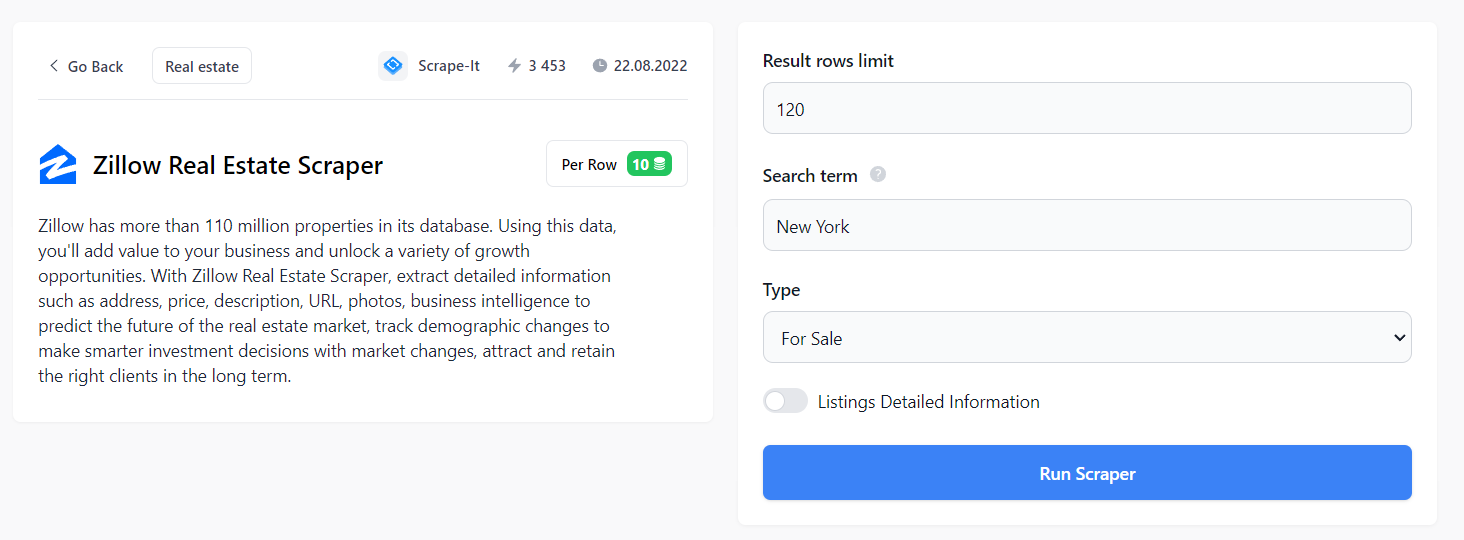

The most convenient option is to use a ready-made no-code scraper written specifically for Zillow.

To use it, sign up at Scrape-It.Cloud and go to the no-code scrapers page. There you will find the Real Estate category, which contains a ready-made Zillow scraper.

Here, you can customize all aspects of your real estate search: number of rows, region, and listing type (for sale, for rent, sold). You also get detailed property listings that include the URL of each listing, an image if available, the price, the description provided by the agent or broker in charge, the name and contact information of that agent or broker, the agency they are affiliated with, and so on.

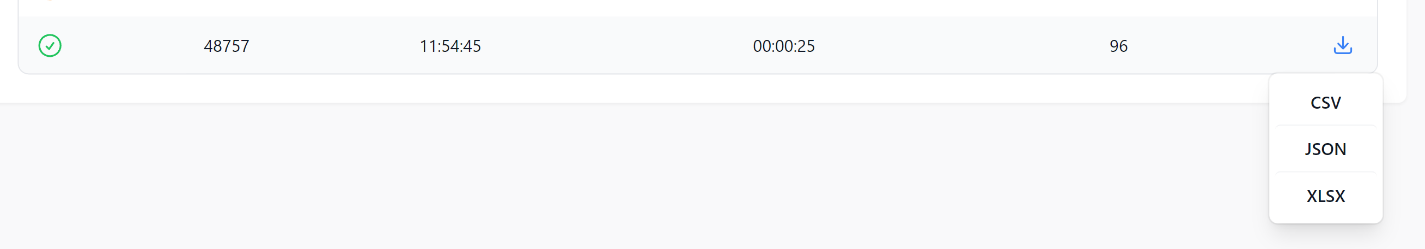

The resulting data can be downloaded in CSV, JSON, or XLSX format.

The resulting data is easy to work with, and there is no need to know programming languages to use the scraper. In addition, there is no need to worry about ways to avoid blocks.

Build your own Zillow web scraping tool

Scraping data from Zillow can be done using a variety of programming languages. Popular choices for scraping webpages include Python and NodeJS. The choice largely depends on the specific needs and preferences of the user: depending on the complexity of the tasks needed to access the desired information, each language has advantages and disadvantages regarding speed, accuracy, scalability, and analytics capabilities.

NodeJS provides an asynchronous environment where webpages can be scraped using JavaScript code. It scales well thanks to its event-driven architecture, which handles many requests concurrently while keeping CPU utilization low.

On the other hand, Python is a powerful programming language that has been used for web scraping for years. It is easy to learn and offers a wide range of libraries and frameworks that can be used for data analysis, visualization, and parsing. Python's flexibility means it can reliably extract structured information from most webpages.

Scraping Zillow with Python

Let's take a step-by-step look at writing a Zillow scraper in Python. At the end of the article, we will also give additional recommendations to avoid blocking and make scraping more secure.

Installing the libraries

First, let's choose the library. There are two options:

- Using an HTTP request library (Requests, urllib, or others) and a parsing library (BeautifulSoup, lxml).

- Using a complete library or scraping framework (Scrapy, Selenium, Pyppeteer).

The first option is easier for beginners, but the second one is less likely to be blocked. So, let's start by writing a simple scraper that uses the Requests and BeautifulSoup libraries to get and parse the data. Then we will give an example of a scraper using Selenium.

First, install the Python interpreter. To check whether it is already installed, run the following at the command line:

python -V

If an interpreter is installed, its version will be displayed. To install the libraries, enter at the command line:

pip install requests
pip install beautifulsoup4
pip install lxml
pip install selenium

The lxml package is the parser that the BeautifulSoup examples below request with "lxml". Selenium also requires a webdriver and a Chrome browser of the same version.

Zillow page analysis

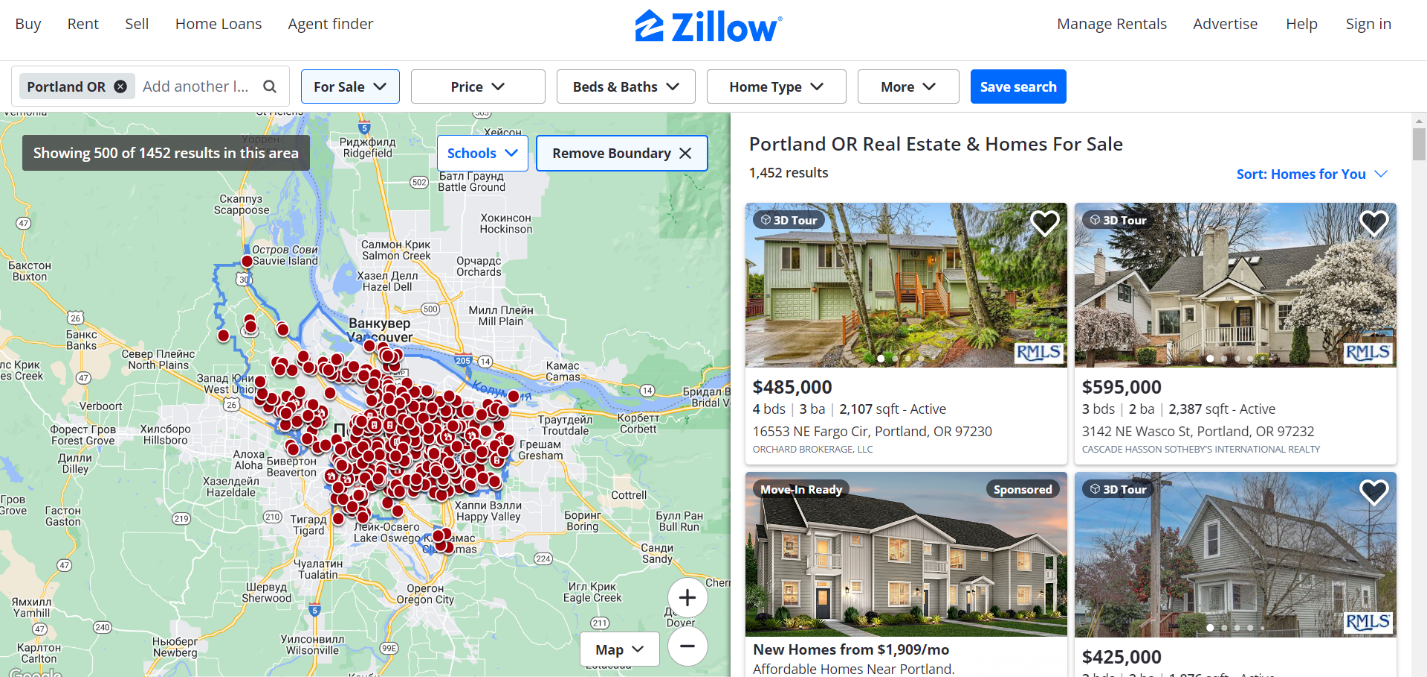

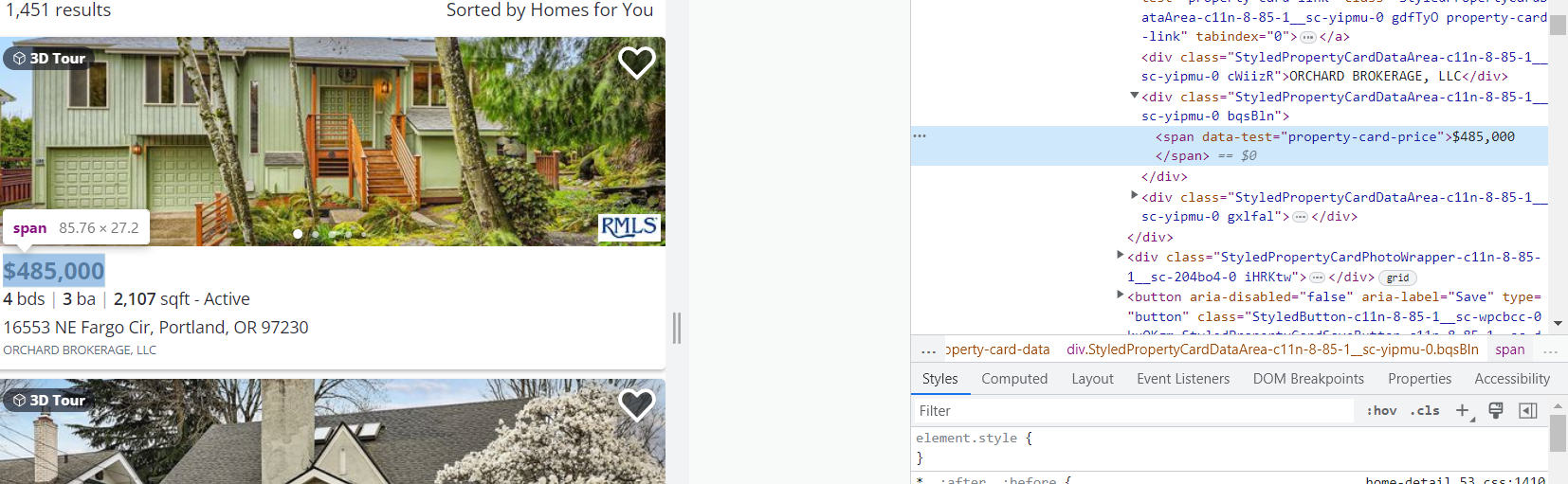

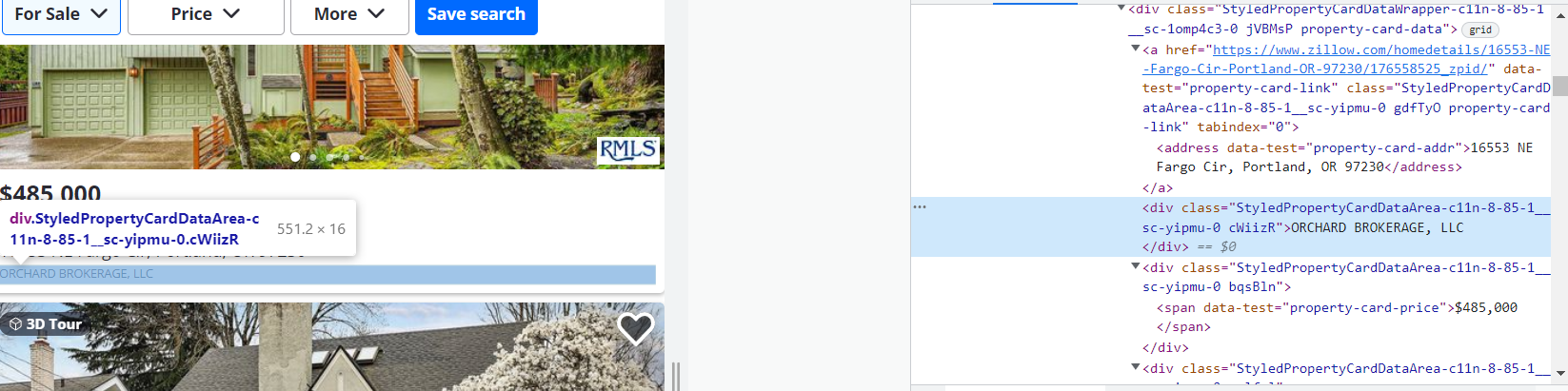

Let's analyze the page to find the tags that contain the necessary data. Go to the Buy section of the Zillow website. In this tutorial, we will collect data about real estate in Portland.

Now let's review the page HTML code to determine the elements we will scrape.

To open the HTML page code, go to DevTools (press F12 or right-click on an empty space on the page and go to Inspect).

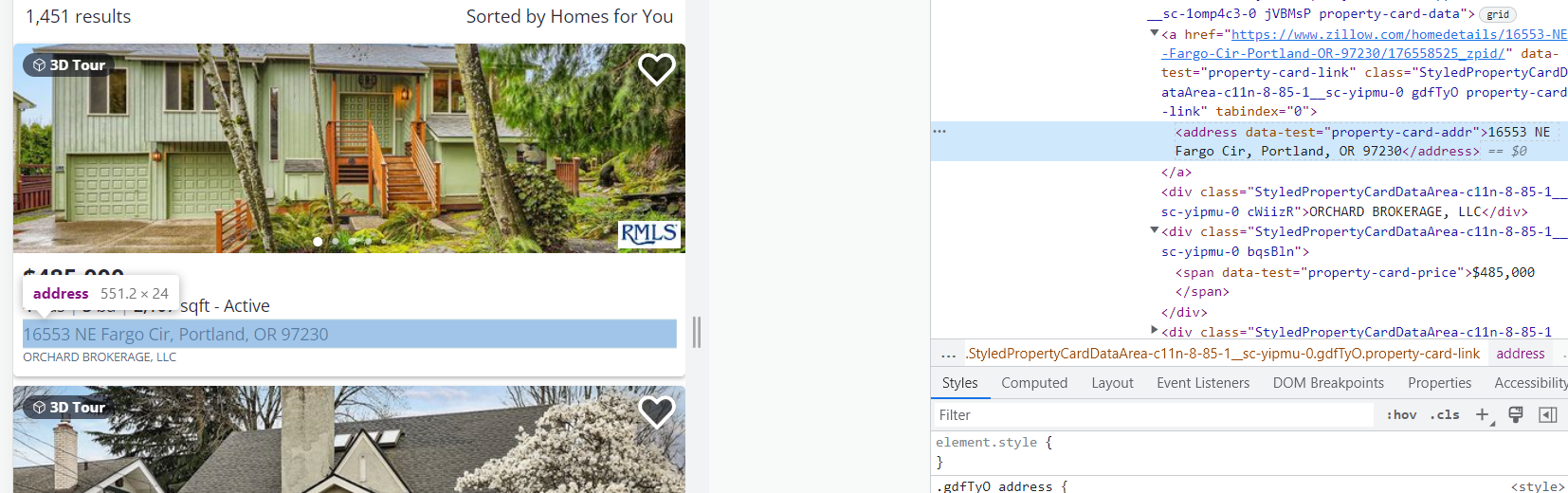

Let's define the elements to scrape:

1. Address. The data is in the <address data-test="property-card-addr">...</address> tag.

2. Price. The data is in the <span data-test="property-card-price">…</span> tag.

3. Seller or Realtor. The data is in the <div class="cWiizR">…</div> tag.

For all other cards, the tags will be similar.
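Before writing the scraper, it can help to check these attributes against a saved fragment of the page. The sketch below is only an illustration: it uses the standard library's html.parser rather than BeautifulSoup (which the tutorial uses next), the HTML fragment is a made-up sample modeled on the tags listed above, and the names DataTestCollector and collect are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical fragment modeled on the tags listed above; real Zillow markup is much larger.
SAMPLE_HTML = """
<article>
  <address data-test="property-card-addr">3142 NE Wasco St, Portland, OR 97232</address>
  <span data-test="property-card-price">$595,000</span>
</article>
<article>
  <address data-test="property-card-addr">4801 SW Caldew St, Portland, OR 97219</address>
  <span data-test="property-card-price">$395,000</span>
</article>
"""


class DataTestCollector(HTMLParser):
    """Collect the text of every element whose data-test attribute matches."""

    def __init__(self, data_test):
        super().__init__()
        self.data_test = data_test
        self.values = []
        self._capturing = False

    def handle_starttag(self, tag, attrs):
        if dict(attrs).get("data-test") == self.data_test:
            self._capturing = True
            self.values.append("")

    def handle_data(self, data):
        if self._capturing:
            self.values[-1] += data

    def handle_endtag(self, tag):
        # Simplification: assumes the matched elements contain no nested tags.
        self._capturing = False


def collect(html, data_test):
    parser = DataTestCollector(data_test)
    parser.feed(html)
    return [value.strip() for value in parser.values]


addresses = collect(SAMPLE_HTML, "property-card-addr")
prices = collect(SAMPLE_HTML, "property-card-price")
print(addresses)
print(prices)
```

If the lists come back empty on a freshly saved page, the attribute names have probably changed and need to be looked up again in DevTools.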

Now, using the information we've gathered, let's start writing a scraper.

Creating a web scraper

Create a file with the .py extension and import the necessary libraries:

import requests
from bs4 import BeautifulSoup

Let's make a request and save the code of the whole page in a variable.

data = requests.get('https://www.zillow.com/portland-or/')

Process the data using the BS4 library.

soup = BeautifulSoup(data.text, "lxml")

Create the variables address, price, and seller, and fill them with the extracted data using the information collected earlier.

address = soup.find_all('address', {'data-test':'property-card-addr'})
price = soup.find_all('span', {'data-test':'property-card-price'})
seller = soup.find_all('div', {'class':'cWiizR'})

Unfortunately, if we try to display the contents of these variables, we get no listings, because Zillow returned a captcha page instead of the page code.
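One way to notice this situation in code is to inspect the response before parsing it. The following is a minimal sketch: the function name block_reason and the "captcha" marker are assumptions chosen for illustration, not something documented by Zillow, so adjust them to whatever the blocked page actually contains.

```python
# Attribute seen on listing cards during the page analysis above.
EXPECTED_MARKER = 'data-test="property-card-addr"'


def block_reason(status_code, html):
    """Heuristic check: return None for a listing page, otherwise a short reason."""
    if status_code != 200:
        return f"unexpected status {status_code}"
    if EXPECTED_MARKER in html:
        return None
    # Assumption: a challenge page mentions the word "captcha" somewhere.
    if "captcha" in html.lower():
        return "captcha page"
    return "no listing cards found"


print(block_reason(200, "<html><body>Please solve the CAPTCHA</body></html>"))
print(block_reason(200, '<address data-test="property-card-addr">...</address>'))
```

In the scraper it could be called as block_reason(data.status_code, data.text) right after the request, stopping early when the result is not None.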

To avoid this, add headers to the request:

header = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',
          'referer': 'https://www.zillow.com/homes/Missoula,-MT_rb/'}
data = requests.get('https://www.zillow.com/portland-or/', headers=header)

After repeating the parsing steps on the new response, let's display the result on the screen:

print(address)
print(price)
print(seller)

The result of such a script would be the following:

[<address data-test="property-card-addr">3142 NE Wasco St, Portland, OR 97232</address>, <address data-test="property-card-addr">4801 SW Caldew St, Portland, OR 97219</address>, <address data-test="property-card-addr">16553 NE Fargo Cir, Portland, OR 97230</address>, <address data-test="property-card-addr">3064 NW 132nd Ave, Portland, OR 97229</address>, <address data-test="property-card-addr">3739 SW Pomona St, Portland, OR 97219</address>, <address data-test="property-card-addr">1440 NW Jenne Ave, Portland, OR 97229</address>, <address data-test="property-card-addr">3435 SW 11th Ave, Portland, OR 97239</address>, <address data-test="property-card-addr">8023 N Princeton St, Portland, OR 97203</address>, <address data-test="property-card-addr">2456 NW Raleigh St, Portland, OR 97210</address>]

[<span data-test="property-card-price">$595,000</span>, <span data-test="property-card-price">$395,000</span>, <span data-test="property-card-price">$485,000</span>, <span data-test="property-card-price">$1,185,000</span>, <span data-test="property-card-price">$349,900</span>, <span data-test="property-card-price">$599,900</span>, <span data-test="property-card-price">$575,000</span>, <span data-test="property-card-price">$425,000</span>, <span data-test="property-card-price">$1,195,000</span>]

[<div class="StyledPropertyCardDataArea-c11n-8-85-1__sc-yipmu-0 cWiizR">CASCADE HASSON SOTHEBY'S INTERNATIONAL REALTY</div>, <div class="StyledPropertyCardDataArea-c11n-8-85-1__sc-yipmu-0 cWiizR">PORTLAND CREATIVE REALTORS</div>, <div class="StyledPropertyCardDataArea-c11n-8-85-1__sc-yipmu-0 cWiizR">ORCHARD BROKERAGE, LLC</div>, <div class="StyledPropertyCardDataArea-c11n-8-85-1__sc-yipmu-0 cWiizR">ELEETE REAL ESTATE</div>, <div class="StyledPropertyCardDataArea-c11n-8-85-1__sc-yipmu-0 cWiizR">URBAN NEST REALTY</div>, <div class="StyledPropertyCardDataArea-c11n-8-85-1__sc-yipmu-0 cWiizR">KELLER WILLIAMS REALTY PROFESSIONALS</div>, <div class="StyledPropertyCardDataArea-c11n-8-85-1__sc-yipmu-0 cWiizR">KELLER WILLIAMS PDX CENTRAL</div>, <div class="StyledPropertyCardDataArea-c11n-8-85-1__sc-yipmu-0 cWiizR">EXP REALTY, LLC</div>, <div class="StyledPropertyCardDataArea-c11n-8-85-1__sc-yipmu-0 cWiizR">CASCADE HASSON SOTHEBY'S INTERNATIONAL REALTY</div>]

Let's create additional variables and put only the text of the property listings from the received data into them:

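adr = []
pr = []
sl = []

for result in address:
    adr.append(result.text)
for result in price:
    pr.append(result.text)
# The loop variable must be the one used in the body, otherwise the last price is appended instead
for result in seller:
    sl.append(result.text)

print(adr)
print(pr)
print(sl)

The result: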

['16553 NE Fargo Cir, Portland, OR 97230', '3142 NE Wasco St, Portland, OR 97232', '8023 N Princeton St, Portland, OR 97203', '3064 NW 132nd Ave, Portland, OR 97229', '1440 NW Jenne Ave, Portland, OR 97229', '10223 NW Alder Grove Ln, Portland, OR 97229', '5302 SW 53rd Ct, Portland, OR 97221', '3435 SW 11th Ave, Portland, OR 97239', '3739 SW Pomona St, Portland, OR 97219']

['$485,000', '$595,000', '$425,000', '$1,185,000', '$599,900', '$425,000', '$499,000', '$575,000', '$349,900']

['ORCHARD BROKERAGE, LLC', "CASCADE HASSON SOTHEBY'S INTERNATIONAL REALTY", 'EXP REALTY, LLC', 'ELEETE REAL ESTATE', 'KELLER WILLIAMS REALTY PROFESSIONALS', 'ELEETE REAL ESTATE', 'REDFIN', 'KELLER WILLIAMS PDX CENTRAL', 'URBAN NEST REALTY']

Full script code:

import requests
from bs4 import BeautifulSoup

# Browser-like headers so that Zillow returns the listing page instead of a captcha
header = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',
          'referer': 'https://www.zillow.com/homes/Missoula,-MT_rb/'}

data = requests.get('https://www.zillow.com/portland-or/', headers=header)
soup = BeautifulSoup(data.text, 'lxml')

# Elements identified during the page analysis
address = soup.find_all('address', {'data-test':'property-card-addr'})
price = soup.find_all('span', {'data-test':'property-card-price'})
seller = soup.find_all('div', {'class':'cWiizR'})

# Keep only the text of each element
adr = []
pr = []
sl = []
for result in address:
    adr.append(result.text)
for result in price:
    pr.append(result.text)
for result in seller:
    sl.append(result.text)

print(adr)
print(pr)
print(sl)

Now the data is in a convenient format, and you can work with it further.
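For example, to sort or average the prices, the strings in pr first have to become numbers. Here is a minimal sketch, assuming the prices look like the ones in the output above ('$485,000'); parse_price is a hypothetical helper, and any other format that may appear on the site (a monthly rent or a price range, for instance) is simply treated as unparseable.

```python
import re


def parse_price(text):
    """Convert a price such as '$1,185,000' to an int; return None if it does not fit."""
    match = re.fullmatch(r"\$\s*([\d,]+)", text.strip())
    if match is None:
        return None
    digits = match.group(1).replace(",", "")
    return int(digits) if digits else None


pr = ['$485,000', '$595,000', '$1,185,000']  # sample values from the output above
prices = [parse_price(p) for p in pr]
print(prices)
```

Returning None instead of raising keeps a single unusual listing from stopping the whole run; the caller can filter those values out before computing statistics.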

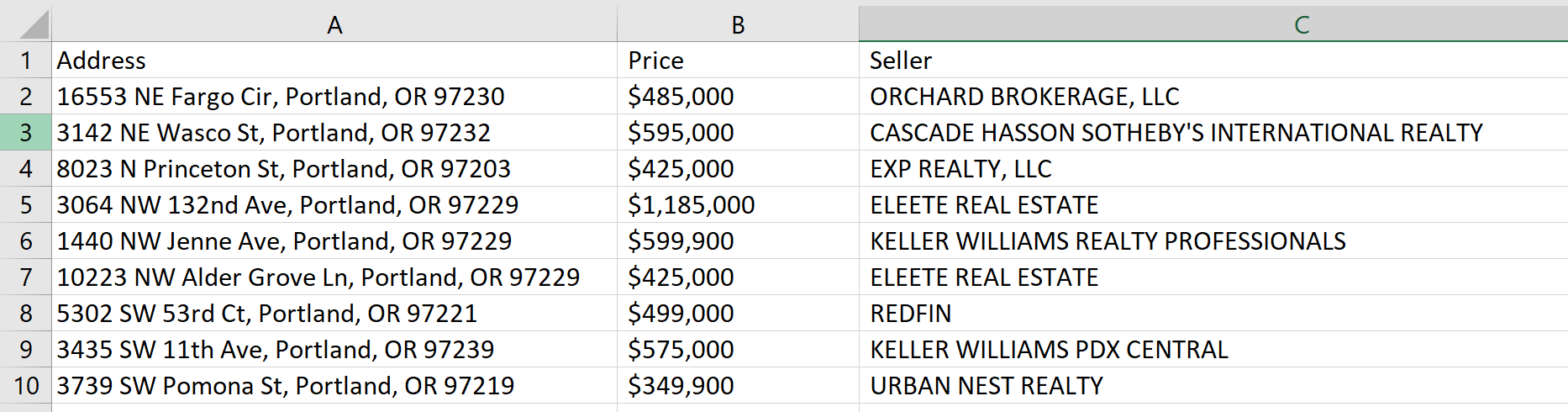

Saving data

So that we don't have to copy the data into a file by hand, let's save it to a CSV file. To do this, create the file and write the column names to it:

with open("zillow.csv", "w") as f:
    f.write("Address; Price; Seller\n")

The "w" mode means that if a file named zillow.csv does not exist, it will be created. If such a file already exists, its contents will be overwritten. You can use the "a" mode instead to avoid overwriting the content every time you run the script.
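The difference between the two modes is easy to verify on a throwaway file. This is a small sketch that writes to a temporary folder so it does not touch zillow.csv; the file name modes_demo.csv is arbitrary.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "modes_demo.csv")

    with open(path, "w") as f:  # creates the file
        f.write("first run\n")
    with open(path, "w") as f:  # empties the existing file before writing
        f.write("second run\n")
    with open(path, "a") as f:  # keeps existing content and writes at the end
        f.write("appended\n")

    with open(path) as f:
        content = f.read()

print(content)
```

The line written by the first "w" run is gone, while the line written in "a" mode follows the surviving one.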

Go through the elements and put them in the table:

# Open the file once in append mode and write one line per listing
with open("zillow.csv", "a") as f:
    for i in range(len(adr)):
        f.write(str(adr[i])+"; "+str(pr[i])+"; "+str(sl[i])+"\n")

As a result, we get a table with one row per listing.
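The loop above indexes all three lists by position, so it raises an IndexError if, say, one card has no seller and sl ends up shorter than adr. A hedged alternative is to pair the lists with itertools.zip_longest from the standard library; the sample values below are shortened copies of the earlier output, with one seller removed on purpose.

```python
from itertools import zip_longest

adr = ['16553 NE Fargo Cir, Portland, OR 97230', '3142 NE Wasco St, Portland, OR 97232']
pr = ['$485,000', '$595,000']
sl = ['ORCHARD BROKERAGE, LLC']  # one seller missing on purpose

# Shorter lists are padded with an empty string instead of raising an error
rows = list(zip_longest(adr, pr, sl, fillvalue=""))
for address, price, seller in rows:
    print(address, price, seller, sep="; ")
```

Padding only prevents the crash. If a value is missing in the middle of a list, the following values still shift up by one row, so extracting the three fields card by card is the more reliable approach when gaps are common.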

Full script code:

import requests
from bs4 import BeautifulSoup

# Browser-like headers so that Zillow returns the listing page instead of a captcha
header = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36',
          'referer': 'https://www.zillow.com/homes/Missoula,-MT_rb/'}

data = requests.get('https://www.zillow.com/portland-or/', headers=header)
soup = BeautifulSoup(data.text, 'lxml')

# Elements identified during the page analysis
address = soup.find_all('address', {'data-test':'property-card-addr'})
price = soup.find_all('span', {'data-test':'property-card-price'})
seller = soup.find_all('div', {'class':'cWiizR'})

# Keep only the text of each element
adr = []
pr = []
sl = []
for result in address:
    adr.append(result.text)
for result in price:
    pr.append(result.text)
for result in seller:
    sl.append(result.text)

# Write the column names, then one line per listing
with open("zillow.csv", "w") as f:
    f.write("Address; Price; Seller\n")
with open("zillow.csv", "a") as f:
    for i in range(len(adr)):
        f.write(str(adr[i])+"; "+str(pr[i])+"; "+str(sl[i])+"\n")

Thus, we created a simple Zillow scraper in Python.
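Writing the file by hand with a "; " separator works as long as no value contains a semicolon or a line break. The standard csv module handles quoting automatically, so the saving step could also be sketched as follows. The function name save_rows is hypothetical, the second sample row contains a contrived semicolon to show the quoting, and the file is written to a temporary folder only to keep the example self-contained.

```python
import csv
import os
import tempfile


def save_rows(path, rows):
    """Write (address, price, seller) tuples to a semicolon-delimited CSV file."""
    # newline="" lets the csv module control line endings itself
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f, delimiter=";")
        writer.writerow(["Address", "Price", "Seller"])
        writer.writerows(rows)


rows = [
    ("3142 NE Wasco St, Portland, OR 97232", "$595,000", "PORTLAND CREATIVE REALTORS"),
    ("16553 NE Fargo Cir, Portland, OR 97230", "$485,000", "ORCHARD BROKERAGE; LLC"),
]

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "zillow_sketch.csv")
    save_rows(path, rows)
    # Read the file back to confirm that the values survive the round trip
    with open(path, newline="", encoding="utf-8") as f:
        read_back = list(csv.reader(f, delimiter=";"))

print(read_back)
```

With the lists from the scraper, the rows could be built with zip(adr, pr, sl) before calling save_rows("zillow.csv", rows).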

How to scrape Zillow without getting blocked

Zillow strictly prohibits using scrapers and bots for data collection from their website. They closely monitor and take action against any attempts to gather data using these methods.

Let's take a quick look at how we can change or improve the resulting code to reduce the risk of blocking.
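Since blocks are often triggered by excessive requests, one simple measure is to pause for a random interval between page loads. The sketch below is an assumption-laden illustration: polite_pause is a hypothetical helper, and the 2 to 6 second range is an arbitrary starting point rather than a limit published by Zillow.

```python
import random
import time


def polite_pause(min_seconds=2.0, max_seconds=6.0, sleep=time.sleep):
    """Sleep for a random interval and return the chosen delay in seconds."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay


# Demonstration with a stand-in for time.sleep so the example finishes instantly
recorded = []
chosen = polite_pause(2.0, 6.0, sleep=recorded.append)
print(f"would have waited {chosen:.1f} seconds")
```

In a real scraper the function would be called with its defaults between consecutive requests; the sleep parameter exists only so the pause can be replaced during testing.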

Using Proxies

The easiest way is to use proxies. We have already written about proxies and where you can get free ones.

Let's create a proxy file and put some working proxies in it. Then load the proxy file in the scraper:

with open('proxies.txt', 'r') as f:
    proxies = f.read().splitlines()

To select proxies randomly, import the random library:

import random

Now pick a random value from the proxies list, store it in the proxy variable, and add the proxy to the request:

proxy = random.choice(proxies)
# The page is requested over HTTPS, so the proxy is set for the "https" scheme as well
data = requests.get('https://www.zillow.com/portland-or/', headers=header, proxies={"http": proxy, "https": proxy})

This will reduce the number of errors and help to avoid blocking.
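Free proxies fail often, so it is usually worth trying a few of them before giving up. The following sketch shows one possible shape for that logic. All three function names are hypothetical, the assumption that entries without a scheme are plain HTTP proxies is mine, and the actual download is passed in as a callable so the rotation can be read and tested without network access. Requests picks a proxy by URL scheme, which is why the mapping covers both "http" and "https".

```python
import random


def load_proxies(lines):
    """Return cleaned proxy URLs, skipping blank lines and '#' comments."""
    proxies = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Assumption: entries without a scheme are plain HTTP proxies
        if "://" not in line:
            line = "http://" + line
        proxies.append(line)
    return proxies


def proxy_map(proxy):
    """Build the dict that requests accepts in its proxies argument."""
    return {"http": proxy, "https": proxy}


def fetch_with_rotation(fetch, proxies, attempts=3):
    """Call fetch(proxy_map) with randomly chosen proxies until one succeeds."""
    last_error = None
    for proxy in random.sample(proxies, k=min(attempts, len(proxies))):
        try:
            return fetch(proxy_map(proxy))
        except Exception as error:  # broad on purpose: any failure moves on to the next proxy
            last_error = error
    raise RuntimeError("all proxies failed") from last_error


cleaned = load_proxies(["203.0.113.10:8080", "", "# backup", "http://198.51.100.7:3128 "])
print(cleaned)
```

With the scraper from this article, the callable could be lambda p: requests.get('https://www.zillow.com/portland-or/', headers=header, proxies=p, timeout=10).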

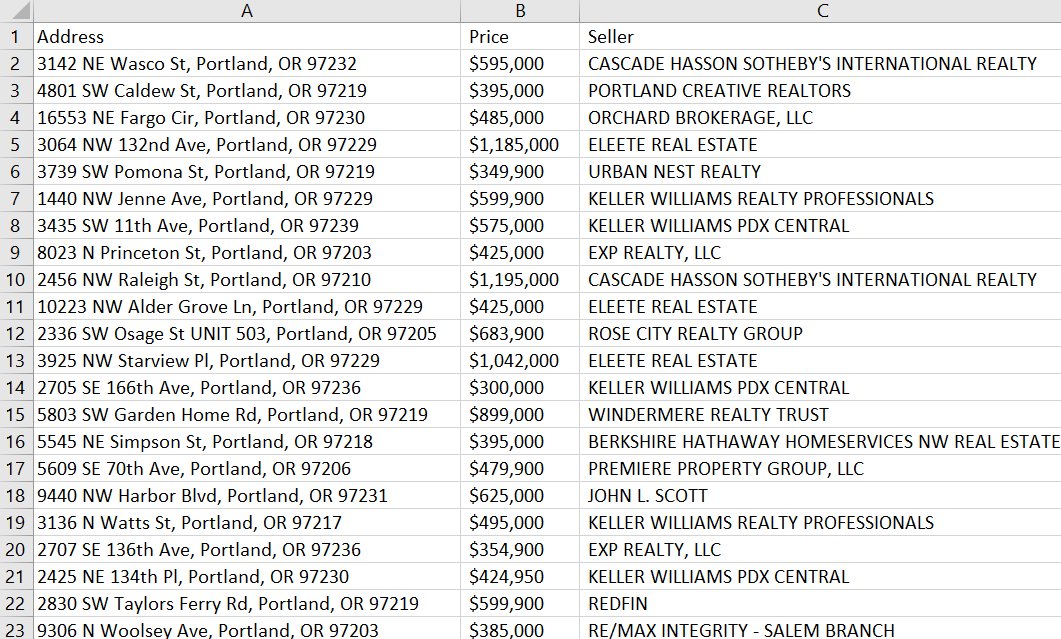

Using the headless browser

Another way to avoid blocking is to use a headless browser. The most convenient library for this is Selenium.

Create a new file with the .py extension and import the library, the necessary modules, and the web driver:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By

# Raw string so the backslash in the Windows path is not treated as an escape
DRIVER_PATH = r'C:\chromedriver.exe'
# Selenium 4 takes the driver path through a Service object
driver = webdriver.Chrome(service=Service(executable_path=DRIVER_PATH))

Open the listings page:

driver.get('https://www.zillow.com/portland-or/')

To make the example more complete, we will use XPath to locate the necessary data:

address = driver.find_elements(By.XPATH,'//address')
price = driver.find_elements(By.XPATH,'//article/div/div/div[2]/span')
seller = driver.find_elements(By.XPATH,'//div[contains(@class, "cWiizR")]')

Now reuse some of the code from the last example and add saving data to a file:

adr = []
pr = []
sl = []
for result in address:
    adr.append(result.text)
for result in price:
    pr.append(result.text)
for result in seller:
    sl.append(result.text)

with open("zillow.csv", "w") as f:
    f.write("Address; Price; Seller\n")
with open("zillow.csv", "a") as f:
    for i in range(len(adr)):
        f.write(str(adr[i])+"; "+str(pr[i])+"; "+str(sl[i])+"\n")

Finally, close the webdriver:

driver.quit()

Full code:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By

# Raw string so the backslash in the Windows path is not treated as an escape
DRIVER_PATH = r'C:\chromedriver.exe'
# Selenium 4 takes the driver path through a Service object
driver = webdriver.Chrome(service=Service(executable_path=DRIVER_PATH))

driver.get('https://www.zillow.com/portland-or/')

# Locate the elements with XPath
address = driver.find_elements(By.XPATH,'//address')
price = driver.find_elements(By.XPATH,'//article/div/div/div[2]/span')
seller = driver.find_elements(By.XPATH,'//div[contains(@class, "cWiizR")]')

# Keep only the text of each element
adr = []
pr = []
sl = []
for result in address:
    adr.append(result.text)
for result in price:
    pr.append(result.text)
for result in seller:
    sl.append(result.text)

# Write the column names, then one line per listing
with open("zillow.csv", "w") as f:
    f.write("Address; Price; Seller\n")
with open("zillow.csv", "a") as f:
    for i in range(len(adr)):
        f.write(str(adr[i])+"; "+str(pr[i])+"; "+str(sl[i])+"\n")

driver.quit()

After launching, a Chrome window will open with the page we specified. When the page is fully loaded, the necessary data is collected from it and saved to a file, after which the web driver closes.
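Listing cards may be rendered by JavaScript a moment after the page itself loads, in which case find_elements can return an empty list if it runs too early. Selenium provides WebDriverWait for this purpose. The sketch below shows the same polling idea in plain Python so that it can be read and run without a browser; wait_until and its parameters are hypothetical names, not part of Selenium.

```python
import time


def wait_until(probe, timeout=15.0, poll=0.5, clock=time.monotonic, sleep=time.sleep):
    """Call probe() repeatedly until it returns a truthy value or the timeout passes."""
    deadline = clock() + timeout
    while True:
        result = probe()
        if result:
            return result
        if clock() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        sleep(poll)


# Stand-in for a page whose cards appear on the third check
checks = []


def fake_find_elements():
    checks.append(1)
    return ["card"] if len(checks) >= 3 else []


found = wait_until(fake_find_elements, timeout=5, poll=0)
print(found, len(checks))
```

In the scraper above, the probe could be lambda: driver.find_elements(By.XPATH, '//address'), so that the address list is only used once at least one card is present.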

File Contents:

Thus, with little effort, we have made our scraper less likely to be blocked.

Using web scraping API

Using the web scraping API is the best choice because it combines the use of a headless browser, automatic proxy rotation, and other ways to bypass blocking.

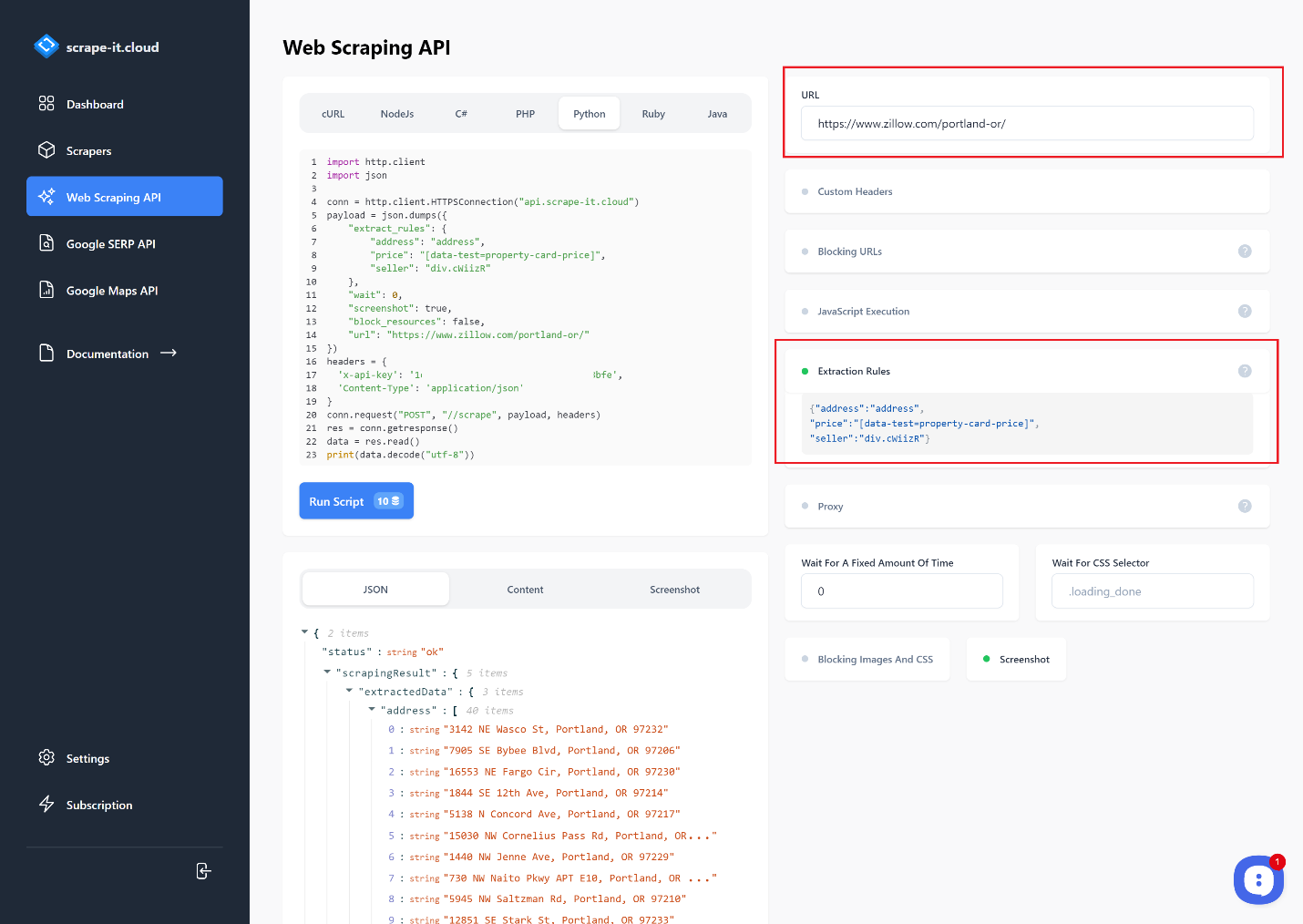

Let's use Scrape-It.Cloud for these tasks. Sign up and verify your email to get 1000 free credits. Then go to the Web Scraping API page and enter the link from which you want to extract data. You can choose a programming language and customize the request.

Let's use Extraction Rules to get only the address, price, and seller:

Depending on the purpose, the resulting data can be copied and processed or used immediately. For convenience, let's create a script and, based on the resulting request, make a scraper that includes a function to save data to a CSV file. To make the example more complete, let's use the Requests library and rewrite the request:

import requests
import json

url = "https://api.scrape-it.cloud/scrape"

payload = json.dumps({
    "extract_rules": {
        "address": "address",
        "price": "[data-test=property-card-price]",
        "seller": "div.cWiizR"
    },
    "wait": 0,
    "screenshot": True,
    "block_resources": False,
    "url": "https://www.zillow.com/portland-or/"
})
headers = {
    'x-api-key': 'YOUR-API-KEY',
    'Content-Type': 'application/json'
}

response = requests.request("POST", url, headers=headers, data=payload)

Now let's convert the response into a structure that is more convenient to work with:

data = json.loads(response.text)

Create the variables address, price, and seller, and put the data from the response into them:

address = []
price = []
seller = []

for item in data["scrapingResult"]["extractedData"]["address"]:
    address.append(item)
for item in data["scrapingResult"]["extractedData"]["price"]:
    price.append(item)
for item in data["scrapingResult"]["extractedData"]["seller"]:
    seller.append(item)

Now let's save the data to a file:

with open("result.csv", "w") as f:
    f.write("Address; Price; Seller\n")
with open("result.csv", "a") as f:
    for i in range(len(address)):
        f.write(str(address[i])+"; "+str(price[i])+"; "+str(seller[i])+"\n")

As a result, we got the same *.csv file as before, but now we don't need to use a headless browser or proxies, or connect a captcha-solving service. All these functions are already performed on the Scrape-It.Cloud side.
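The indexing above raises a KeyError if the response lacks one of the expected keys, for example when a request fails or a rule matches nothing. A more defensive way to read the response might look like the sketch below. The key names scrapingResult and extractedData are the ones used in the code above; the helper extract_columns and the sample response are hypothetical and do not describe the full format returned by the API.

```python
import json


def extract_columns(response_text, fields=("address", "price", "seller")):
    """Return one list per field, using an empty list for anything that is missing."""
    data = json.loads(response_text)
    result = data.get("scrapingResult") or {}
    extracted = result.get("extractedData") or {}
    return {field: list(extracted.get(field) or []) for field in fields}


# Made-up response in which the seller rule matched nothing
sample = json.dumps({
    "scrapingResult": {
        "extractedData": {
            "address": ["3142 NE Wasco St, Portland, OR 97232"],
            "price": ["$595,000"],
        }
    }
})
columns = extract_columns(sample)
print(columns)
```

The function assumes the response body is a JSON object; a body that is not valid JSON would still raise an error from json.loads, which is usually the desired behavior.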

Conclusion and takeaways

Scraping Zillow with Python has much potential for gathering valuable insights into the real estate market. It is an efficient way to collect data on listings, prices, neighborhoods, and much more. With well-crafted requests and code that uses Python’s libraries, such as Beautiful Soup or Selenium, anyone can use Zillow’s website to access this data and analyze local or regional market trends.

However, keep in mind that the page structure or class names on the site may change, so before using our examples, make sure the selectors are still up to date.
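A cheap safeguard is to check, before saving anything, that every selector still returns data. The sketch below uses a hypothetical helper named empty_columns and a made-up result in which the seller selector has stopped matching, which is plausible for a class name such as cWiizR that looks automatically generated.

```python
def empty_columns(columns):
    """Return the names of the columns that came back empty."""
    return [name for name, values in columns.items() if not values]


# Made-up scraping result: the seller selector no longer matches anything
columns = {
    "address": ["3142 NE Wasco St, Portland, OR 97232"],
    "price": ["$595,000"],
    "seller": [],
}

missing = empty_columns(columns)
if missing:
    print("Selectors returned nothing for:", ", ".join(missing))
```

When the list is not empty, it is time to open DevTools again and update the corresponding tag or class name.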

If writing the scraper itself is still quite difficult for you, try a no-code scraper. Our no-code scraper is relatively quick and easy to set up without coding experience or knowledge.