Email scraping is the process of automatically extracting email addresses from a variety of sources, such as websites, business directories, or social media. The choice of data source depends on how the data will be used.

In this article, we will explore the option of collecting a list of websites from Google SERP or Google Maps and then extracting email data from those websites. To simplify the process of collecting data from websites, we will use various APIs and integration services, such as Zapier and Make.com.

How Email Scraping Works

To scrape email addresses from websites, you first need to define a list of websites from which you want to scrape emails. Then, you need to crawl all of these websites, or pages (if you are scraping social media), and extract the email addresses. This process can be used to create databases for a variety of purposes, from digital marketing campaigns to lead generation and CRM.

In this tutorial, we will cover how to automatically collect website links using the Google SERP API and Google Maps API. We will also show how to save the data to a CSV file. If you already have a list of resources from which you want to scrape data, you can skip this step and configure scraping of those websites directly. Here are the steps involved in scraping emails from websites:

- Define a list of websites from which you want to scrape email addresses. This can be done manually or by using bots, Chrome extensions, or any other scraping tool.

- Use a web scraping tool to crawl the websites in your list. The tool will extract the email addresses from the websites.

- Save the email addresses to a database or a file. This will make it easy to access the data later.
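The three steps above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not how any particular tool works: `fetch_page` is a hypothetical callable you supply (for example, a wrapper around an HTTP client), and extraction uses a simple regular expression similar to the one discussed later in this article.

```python
import csv
import re
from typing import Callable, Iterable

# Simplified email pattern; real pages usually need extra filtering
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def scrape_emails(websites: Iterable[str],
                  fetch_page: Callable[[str], str]) -> dict[str, set[str]]:
    """Step 2: crawl each website and collect the email addresses found in its HTML."""
    results: dict[str, set[str]] = {}
    for url in websites:
        try:
            html = fetch_page(url)  # hypothetical fetcher supplied by the caller
        except Exception as exc:  # keep going if a single site fails
            print(f"Skipping {url}: {exc}")
            continue
        results[url] = set(EMAIL_RE.findall(html))
    return results


def save_to_csv(results: dict[str, set[str]], path: str) -> None:
    """Step 3: save one row per (website, email) pair."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["website", "email"])
        for url, emails in results.items():
            for email in sorted(emails):
                writer.writerow([url, email])
```

Step 1 is simply the list you pass in, for example `scrape_emails(["https://example.com"], my_fetcher)`. Keeping the fetcher separate makes it easy to swap a plain HTTP request for a scraping API later.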

In addition, to make the scraping more comprehensive, you can also extract additional data, such as phone numbers, pricing, or addresses, when scraping links from Google Maps. We will demonstrate all of these approaches in practice below. We will also show how to use integrations for those who are not familiar with programming, and we will develop a Python script for those who want to customize it and integrate it into their own programs.

Email Scraping in Practice

Let's talk about practical ways to scrape email addresses. We've already discussed various ways to scrape email addresses from Google Maps, so we'll focus on the process of collecting links to resources from Google SERP and sequentially crawling websites to collect emails. We'll consider the following options for collecting email addresses:

- Using a ready-made no-code email scraper. This method requires no programming skills, and the process of collecting emails is fully automated.

- Using integration services. This method requires setting up parameters and integrations. This option includes using services such as Make.com and Zapier.

- Creating your own email extractor. This requires programming skills, since scraping and data processing are handled in a separate script.

Below, we'll review each of these options, from writing a separate scraper based on various APIs to ready-made tools and integrations that require no programming.

Web Scraping Emails using Python

Now, we will demonstrate how to create your own Python email scraper. We're using Python because it's one of the simplest and most popular programming languages for scraping. We'll explore two methods: using an email scraping API and using regular expressions. We'll start with the API approach, as it's simpler.

Email Scraping API

In this section, we'll walk through the steps of building your own email scraper. Before we start coding, let's outline our goals:

- Extract a list of websites from Google SERP based on a keyword.

- Scrape emails from the found websites.

- Save the extracted emails to a file for future use.

To simplify the scraping process, we're also using the HasData APIs, since we're more concerned with processing the data we collect than with how we collect it. However, you can modify the scripts yourself to collect data independently, without using the API. Previously, we wrote about how to scrape any website in Python and how to scrape Google SERP in Python.

We have published the final script in Google Colab for your convenience. If you get confused or run into problems, you can simply copy our final version. So, let's start writing the script. First, import the libraries that we will use to make requests, work with JSON, and process and save data, respectively:

```python
import requests
import json
import pandas as pd
```

Next, we'll declare variables to store parameters that may change or are used multiple times, such as the HasData API key or the keyword used to search for relevant websites in Google SERP.

```python
api_key = 'YOUR-API-KEY'
keyword = 'scraping api'
```

Next, customize the parameters and headers of the upcoming requests. You can change the language, localization, and more. See the official documentation for a full list of available parameters.

```python
params_serp = {
    'q': keyword,
    'filter': 1,
    'domain': 'google.com',
    'gl': 'us',
    'hl': 'en',
    'deviceType': 'desktop'
}

url_serp = "https://api.hasdata.com/scrape/google"
url_scrape = "https://api.hasdata.com/scrape"

headers_serp = {'x-api-key': api_key}

headers_scrape = {
    'x-api-key': api_key,
    'Content-Type': 'application/json'
}
```

Make a request to the Google SERP API and parse the JSON response for further processing.

```python
response_serp = requests.get(url_serp, params=params_serp, headers=headers_serp)
response_serp.raise_for_status()  # stop early if the request was rejected
data_serp = response_serp.json()
```

Extract links from the received JSON response.

```python
links = [result['link'] for result in data_serp.get('organicResults', [])]
```

Create a variable to store the link-to-email mappings, then create a loop to iterate over all the received links.

```python
data = []

for link in links:
```

Inside the loop, create a request body for the Web Scraping API to extract email addresses from the current link.

```python
    payload_scrape = json.dumps({
        "url": link,
        "js_rendering": True,
        "extract_emails": True
    })
```

Make an API request and parse the JSON response.

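```python
    response_scrape = requests.post(url_scrape, headers=headers_scrape, data=payload_scrape)
    if response_scrape.status_code != 200:
        # Skip this website instead of stopping the whole loop
        print(f"Error scraping {link}: {response_scrape.status_code}")
        continue
    json_response_scrape = response_scrape.json()
```

Extract the emails from the response and append them to the `data` list created earlier.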

```python
    emails = json_response_scrape.get("scrapingResult", {}).get("emails", [])
    data.append({"link": link, "emails": emails})
```

Finally, create a DataFrame from the collected data and save it to a CSV file.

df = pd.DataFrame(data)

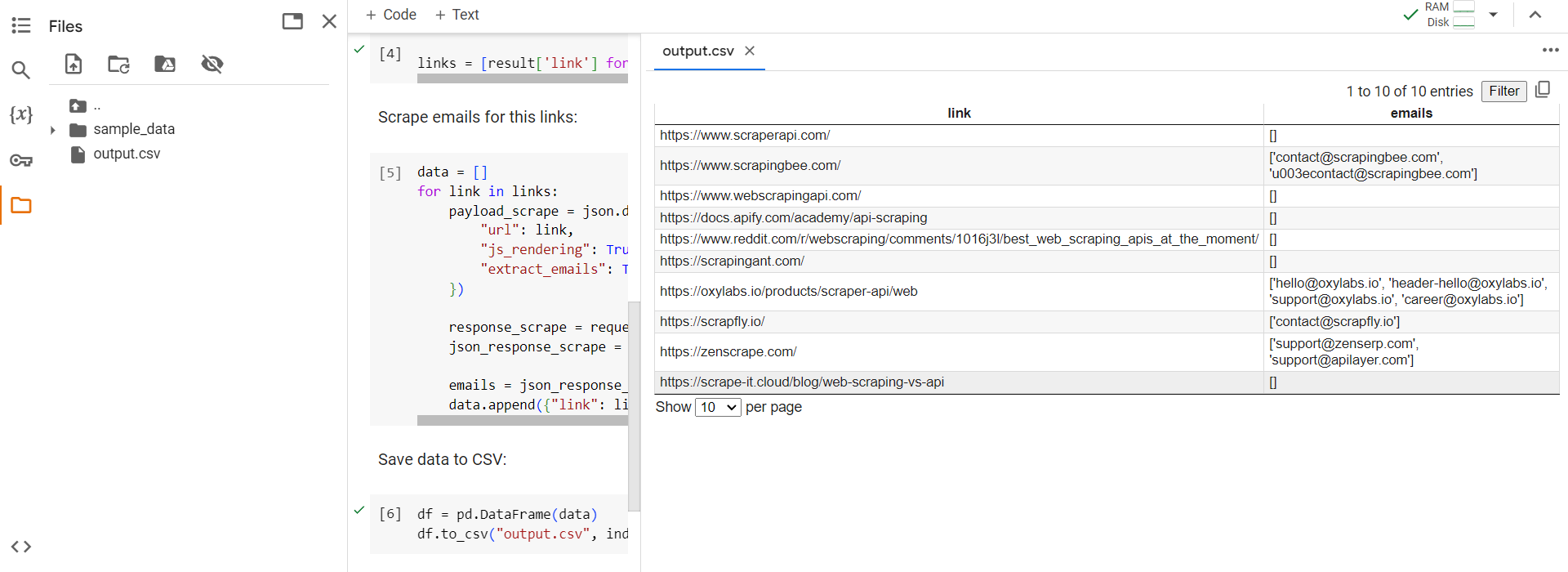

df.to_csv("output.csv", index=False)To test the script, we will run it directly in Colab Research and review the generated output file.

As you can see from the example, the script collects all available emails and saves them in a CSV file that you can also open in Excel. You can further develop the script, for example, to read links from your own file of websites you need to scrape email addresses from, instead of searching for websites in Google SERP.
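Note that the `emails` column holds a Python list, so `df.to_csv()` writes each cell as the list's string representation (for example `['info@example.com']`). If you would rather have one email per row, which is easier to filter or import into a CRM, you can flatten `data` first. A minimal standard-library sketch, assuming `data` has the `{"link": ..., "emails": [...]}` shape built above; the function names are illustrative:

```python
import csv
from typing import Iterable


def flatten_email_rows(data: Iterable[dict]) -> list[dict]:
    """Turn [{"link": ..., "emails": [...]}] into one {"link", "email"} row per address."""
    rows = []
    for item in data:
        # dict.fromkeys removes duplicates while keeping the original order
        for email in dict.fromkeys(item.get("emails") or []):
            rows.append({"link": item["link"], "email": email})
    return rows


def write_email_csv(data: Iterable[dict], path: str) -> int:
    """Write the flattened rows to a CSV file and return how many rows were written."""
    rows = flatten_email_rows(data)
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["link", "email"])
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

Websites without emails are simply left out here. If you prefer to stay in pandas, `df.explode("emails")` gives a similar layout but keeps such websites as rows with an empty value.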

Regular Expressions

Using an API provides a ready-made list of email addresses for each website. However, if you choose not to use an API, the next most convenient method is to use regular expressions. They let you extract every string in the HTML code that matches an email-like pattern.

Let's modify the task and write a script that follows this algorithm:

- Assume we have a file with a list of websites to collect emails from. You can collect this list manually or use the Google SERP API from the previous example.

- Iterate through all the websites in the file and extract emails using regular expressions.

- Save the extracted data to a file.

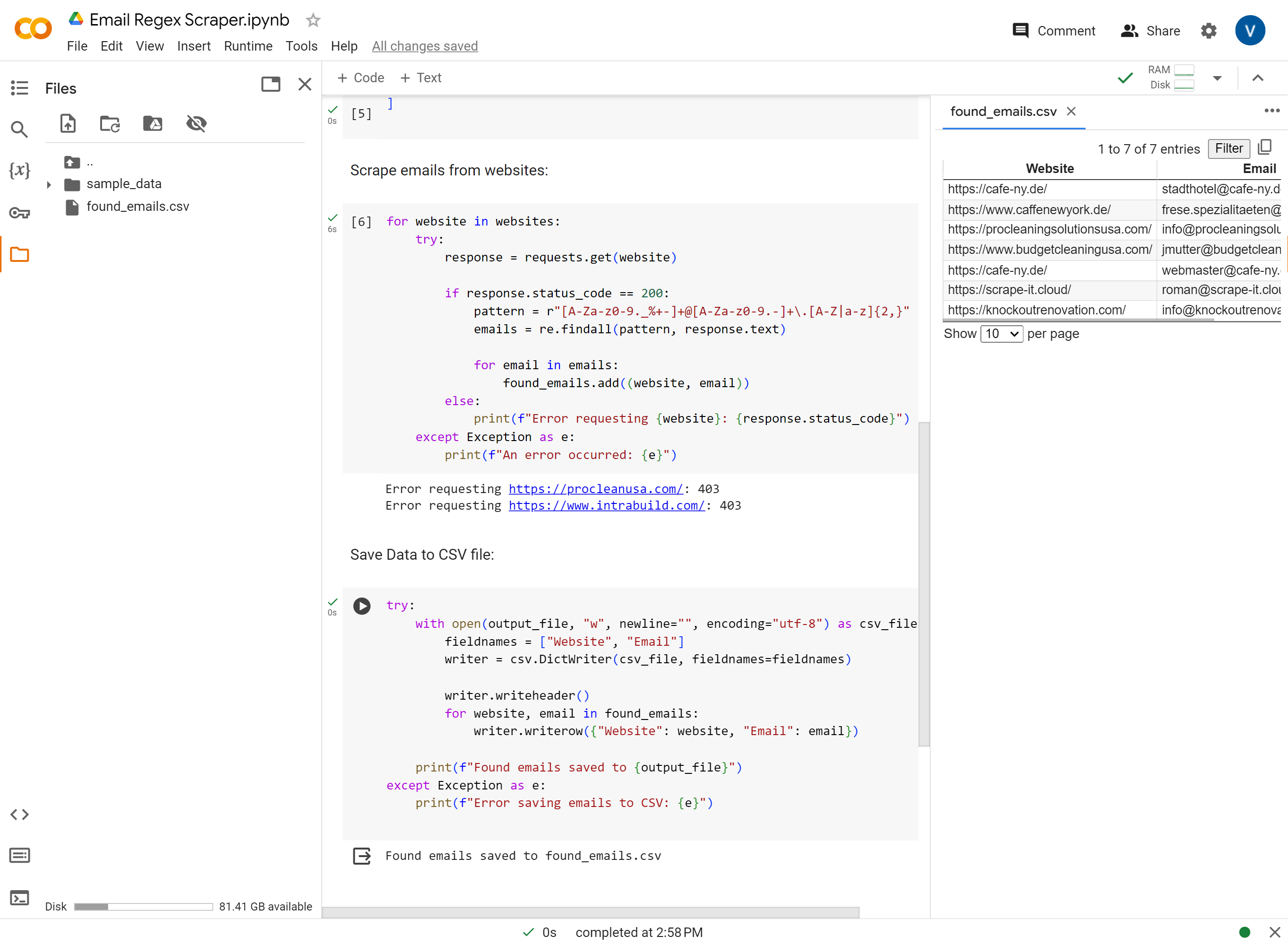

Let's move on to developing the script. If you get stuck or just want to see the result, you can open the script in Google Colab.

Let's start by importing the necessary libraries. This time, we'll use Python's built-in csv module to save data to a file instead of pandas. This is a bit more verbose, but it makes our examples more diverse. We'll also import the re module for regular expressions:

```python
import requests
import re
import csv
```

Define a set to store the emails we scrape:

```python
found_emails = set()
```

Then define the path to the file where we'll save the extracted emails:

```python
output_file = "found_emails.csv"
```

We have two options for obtaining the list of websites. As initially planned, we can read the website URLs from a file:

```python
file_path = "websites_list.txt"

try:
    with open(file_path, "r", encoding="utf-8") as file:
        # One URL per line; skip empty lines
        websites = [line.strip() for line in file if line.strip()]
except FileNotFoundError:
    print(f"File {file_path} not found.")
    exit()
```

Alternatively, we can specify the websites directly in the code as a list:

```python
websites = [
    "https://hasdata.com/",
    "https://www.caffenewyork.de/"
]
```

You can choose whichever option is most convenient for you. We will then iterate through all the websites and make a request to each one. If the request succeeds, we will collect the data; if it fails, we will display an error message. Let's define the basic code:

```python
for website in websites:
    try:
        # A timeout keeps the loop from hanging on unresponsive sites
        response = requests.get(website, timeout=10)
        if response.status_code == 200:
            pass  # Email extraction code will go here
        else:
            print(f"Error requesting {website}: {response.status_code}")
    except Exception as e:
        print(f"An error occurred while requesting {website}: {e}")
```

Let's refine this section to collect emails when the request succeeds. There is a widely used regex pattern for extracting email addresses:

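```text
[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}
```

The version you will often see copied ends in `[A-Z|a-z]{2,}`. Inside square brackets, `|` is a literal pipe character rather than "or", so it is dropped here. Let's integrate the pattern into our code, replacing the placeholder inside the `if` block:

```python
            pattern = r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"
            emails = re.findall(pattern, response.text)
            for email in emails:
                found_emails.add((website, email))
```

Finally, let's save the data to a file: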

```python
try:
    with open(output_file, "w", newline="", encoding="utf-8") as csv_file:
        fieldnames = ["Website", "Email"]
        writer = csv.DictWriter(csv_file, fieldnames=fieldnames)
        writer.writeheader()
        # Sets are unordered; sorting gives a stable, readable file
        for website, email in sorted(found_emails):
            writer.writerow({"Website": website, "Email": email})
    print(f"Found emails saved to {output_file}")
except Exception as e:
    print(f"Error saving emails to CSV: {e}")
```

Email scraping with regular expressions is possible, but it requires fetching the HTML code of each page yourself and can be quite technical. For most users, an Email Scraping API is a better option: it is convenient, efficient, and requires less technical expertise.
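One practical caveat of the regex approach is false positives: the pattern matches anything shaped like `name@domain.tld`, including image names such as `logo@2x.png` (a common convention for high-resolution assets). A light post-processing step helps. The sketch below is not a complete validator: the list of file extensions to reject is an illustrative assumption, and it uses the pattern with a plain `[A-Za-z]{2,}` ending. It lowercases only the domain (domains are case-insensitive) and deduplicates case-insensitively, since most mail providers treat the local part that way in practice.

```python
import re

EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Illustrative endings that usually indicate a file name, not a mailbox
ASSET_SUFFIXES = (".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp", ".css", ".js")


def clean_emails(html: str) -> list[str]:
    """Extract emails from HTML, drop asset-like matches, and deduplicate them."""
    cleaned: dict[str, str] = {}
    for match in EMAIL_PATTERN.findall(html):
        local, domain = match.rsplit("@", 1)
        domain = domain.lower()  # domain names are case-insensitive
        if domain.endswith(ASSET_SUFFIXES):
            continue  # e.g. "logo@2x.png" is an image, not an address
        email = f"{local}@{domain}"
        # Case-insensitive dedupe that keeps the first spelling seen
        cleaned.setdefault(email.lower(), email)
    return list(cleaned.values())
```

You could call `clean_emails(response.text)` in place of `re.findall(pattern, response.text)` in the loop above.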

No-Code Scrapers for Business Emails

Using a ready-made no-code scraping tool is both the simplest and the most restrictive way to collect email addresses. Such a tool can only extract emails if the resource it was built for already provides the email addresses of companies or users.

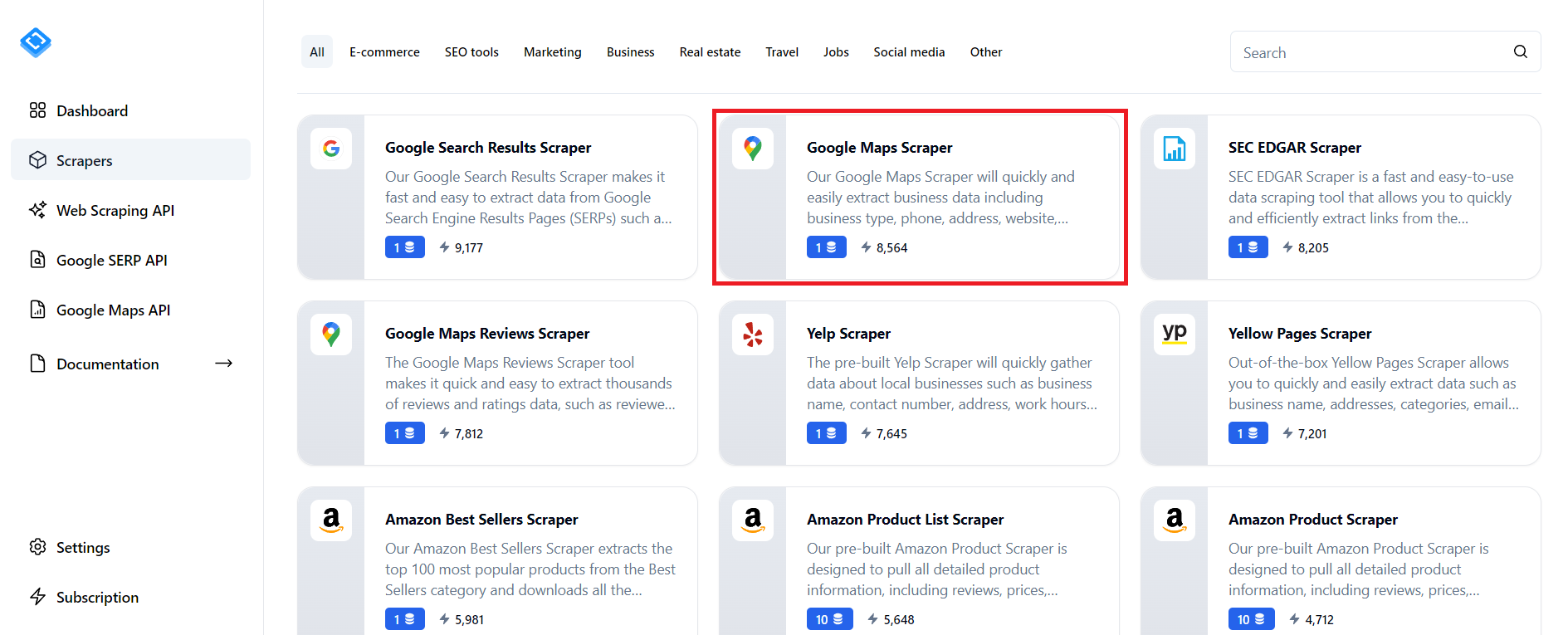

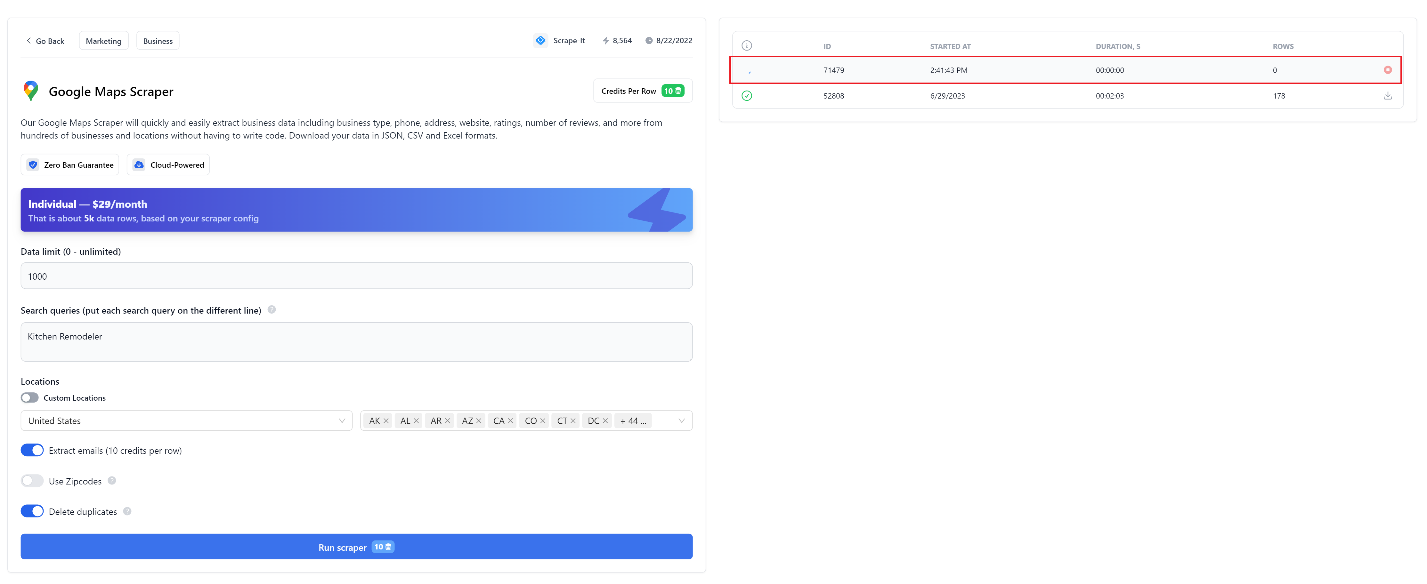

One such resource is Google Maps, which provides not only data about places but also their contact information, including email addresses. To quickly get email addresses from Google Maps, sign up on the HasData website and go to the section with ready-made web scraping tools.

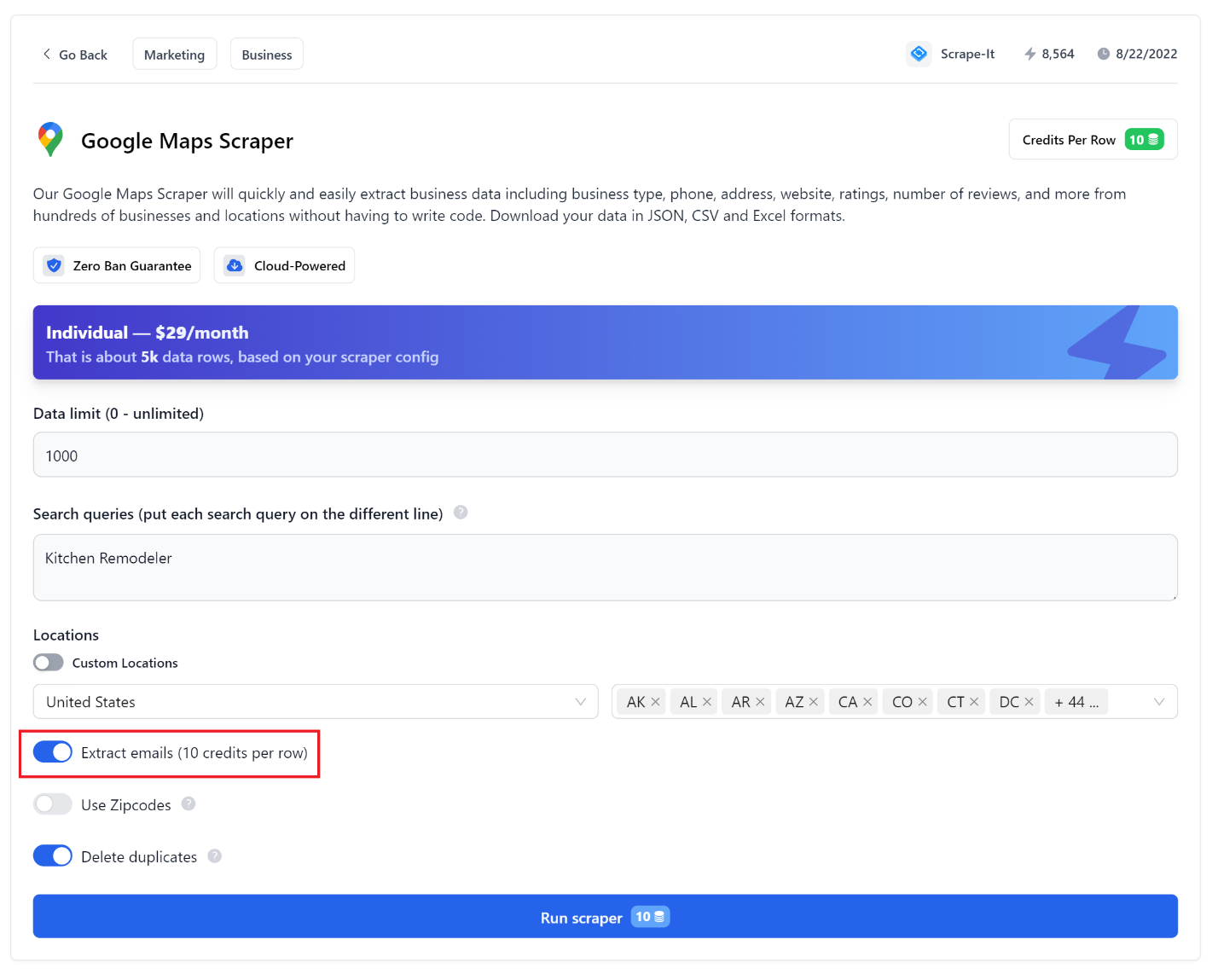

Then find the Google Maps scraper and configure the necessary parameters according to your requirements. Make sure to check the box to collect email addresses.

Once you have done this, click the start scraping button and wait for the data collection to finish.

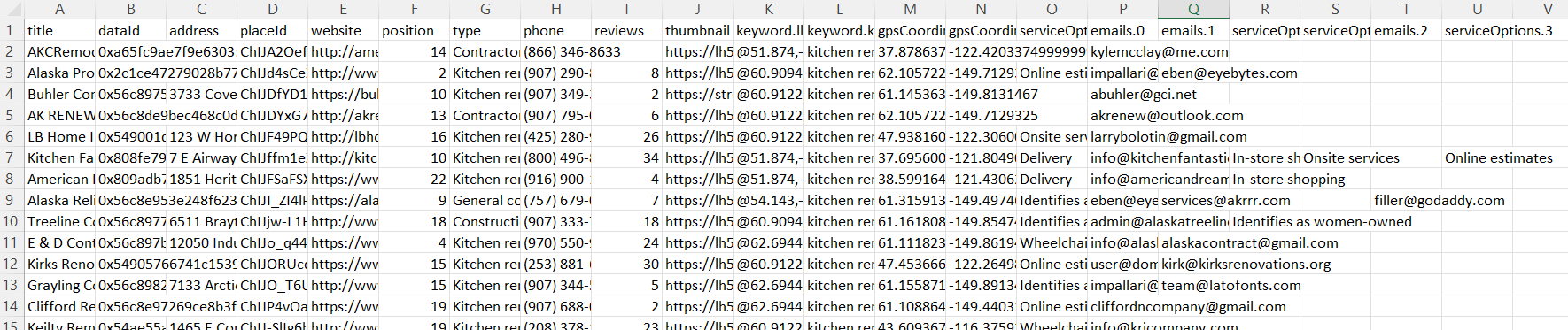

Once the data collection is finished, you can download the data file in a convenient format. For example, CSV data will look similar to this:

All of the parameters are easily adjustable and intuitive, but you can find a more detailed description of the parameters in our previous article.

Extracting Emails with Zapier

One of the most popular integration services today is Zapier. We have already written in detail about what it is and how to use it, so we won't cover its pros, cons, and basic principles here. Let's outline the algorithm for the Zap and then implement it:

- Get the keyword from Google Sheets that will be used to search for target websites to crawl. You can change this step to reading a link instead and go directly to step 3. This may be suitable if you already have a list of websites from which you need to collect email addresses.

- Collect links to websites by keyword using HasData's SERP API.

- Crawl the websites whose links were obtained and extract emails from each one using the web scraping API.

- Enter the obtained data into another sheet of the table.

As you can see from the algorithm, we will use various HasData APIs to simplify the process of scraping data. To use them, you just need to sign up on the website and get an API key in your account.

To make things easier, we can split this into two separate Zaps. The first one will collect a list of websites for a given keyword from Google SERP. The second Zap will crawl the already collected websites and extract their email addresses. If you already have a list of the websites you need, you can skip to the second Zap.

Scrape List of Websites

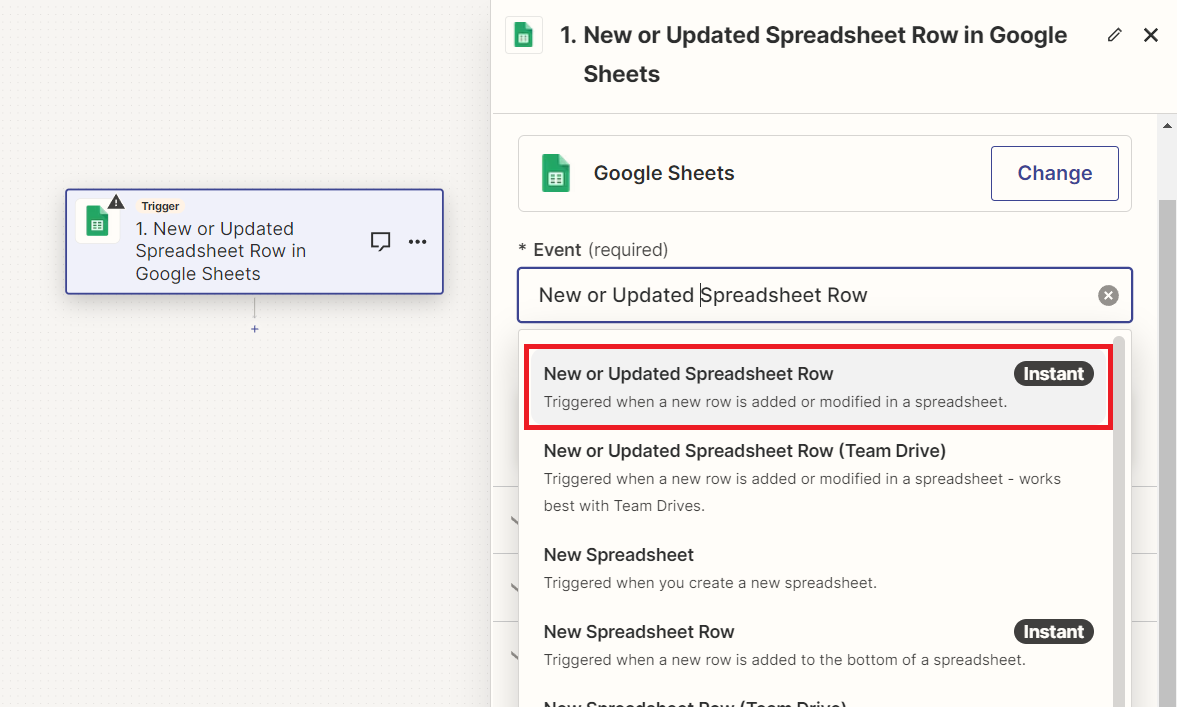

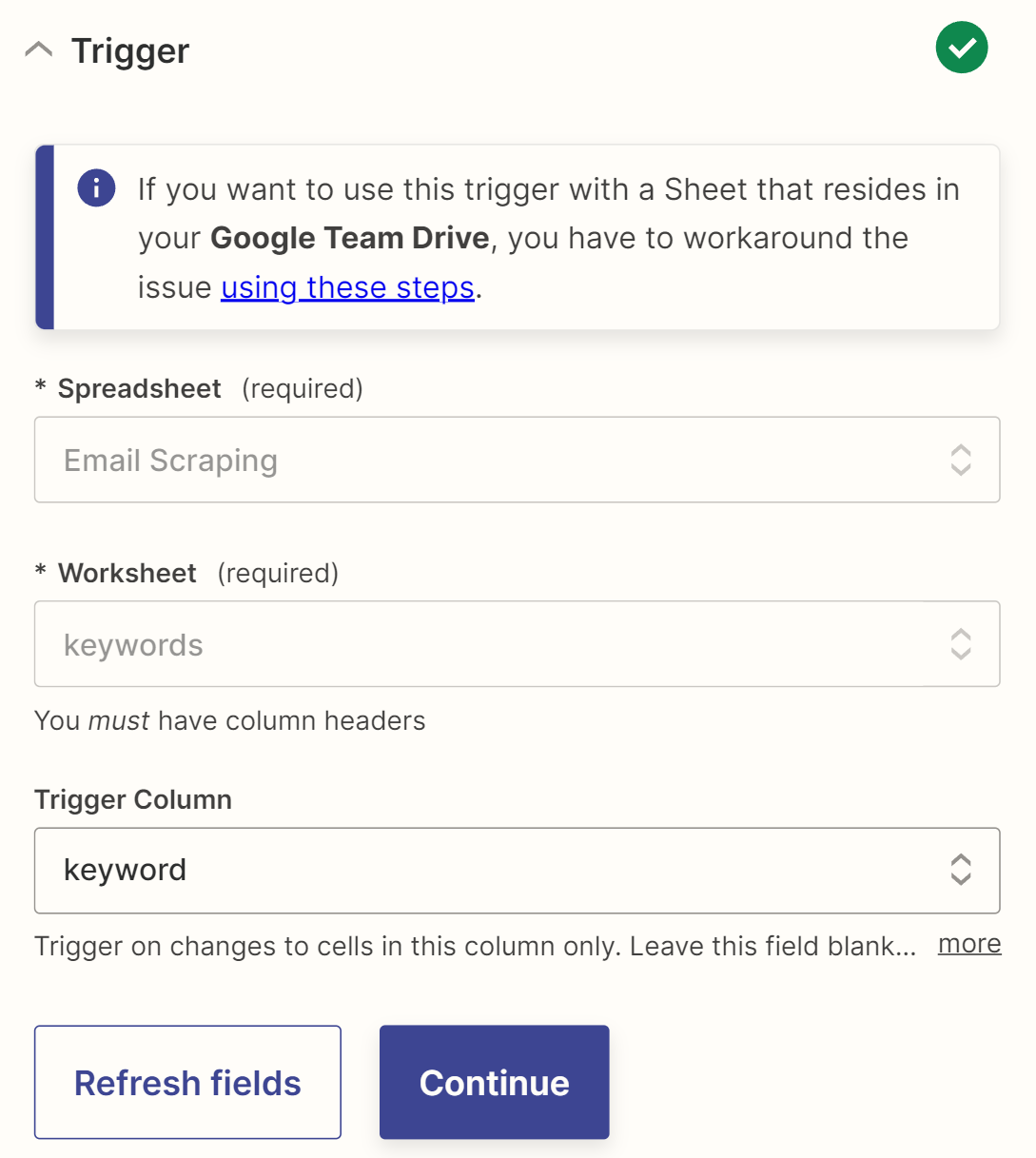

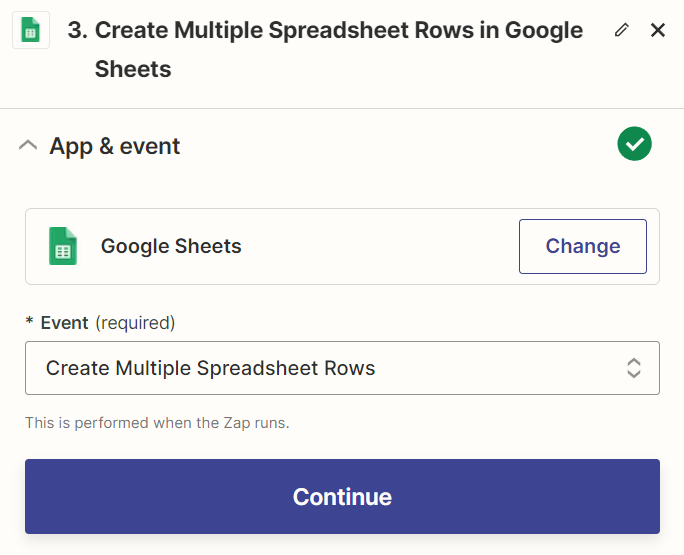

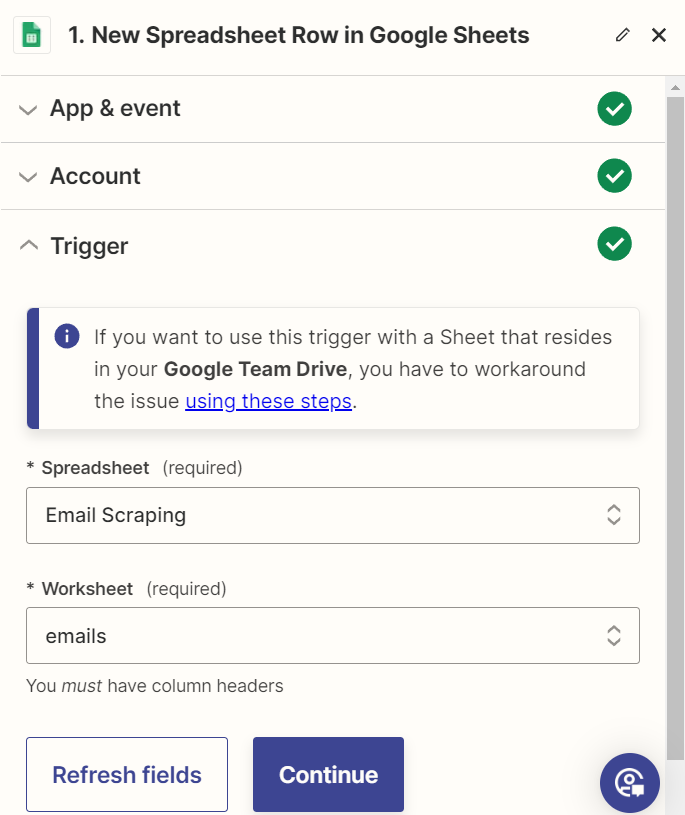

Now let's return to Zapier and create a new Zap. As the trigger, we'll use a new or updated row in Google Sheets.

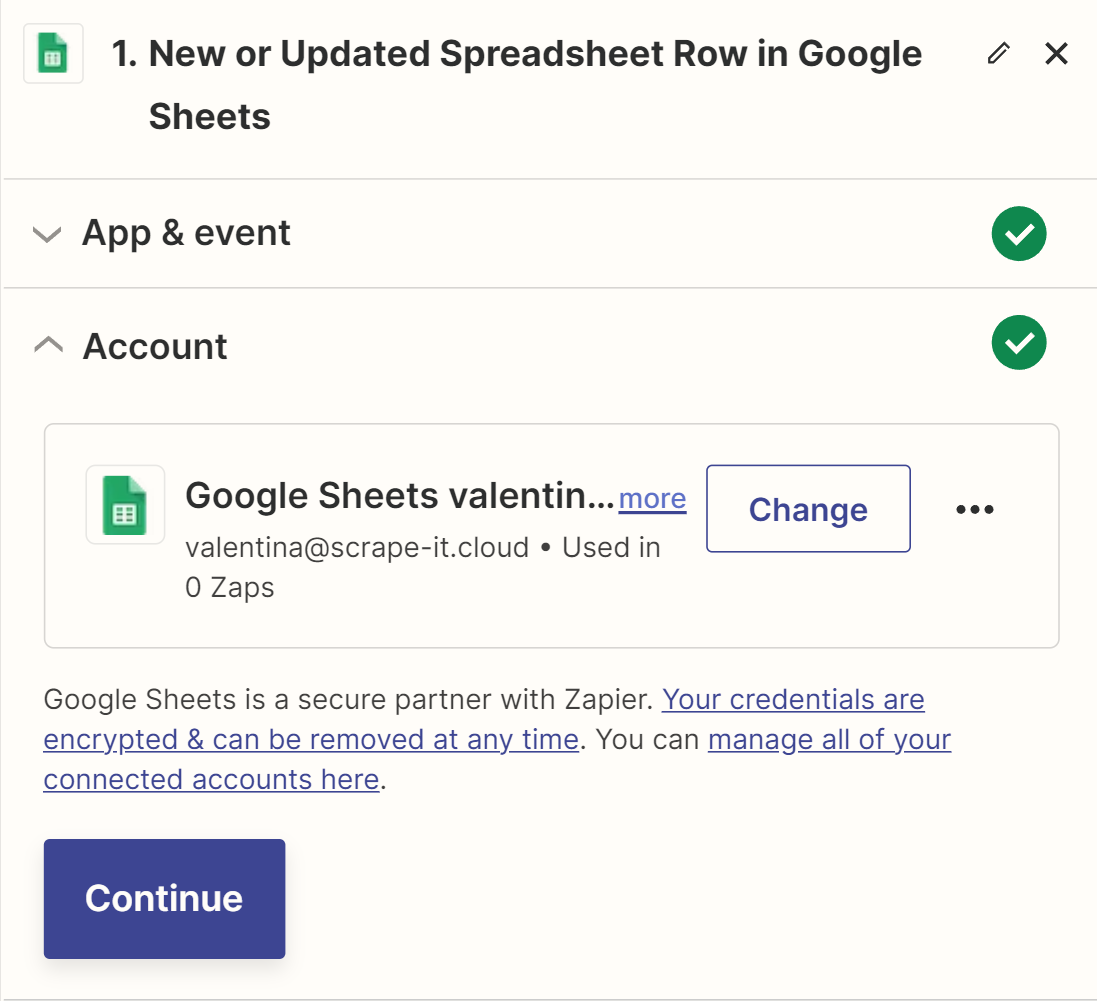

Next, sign in to the Google account that contains the spreadsheet where the data will be stored.

Create a Google Sheet file with two sheets: keywords (with a keyword column where you will record keywords for searching for target websites) and an email list (with link, title, and emails columns for storing website and email data). Then connect this sheet as a trigger and mark the column where the keywords will be stored as the tracking column.

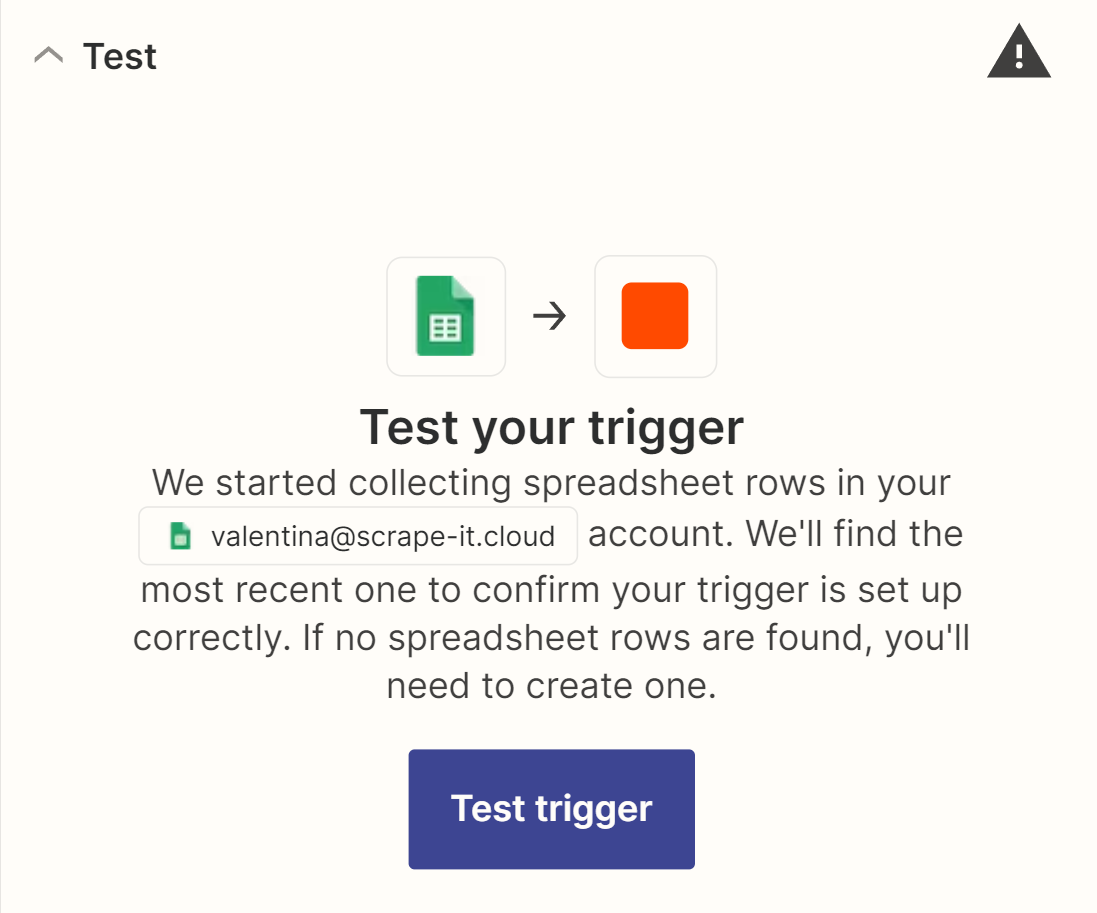

Before moving on to the next step, ensure that the trigger is working correctly.

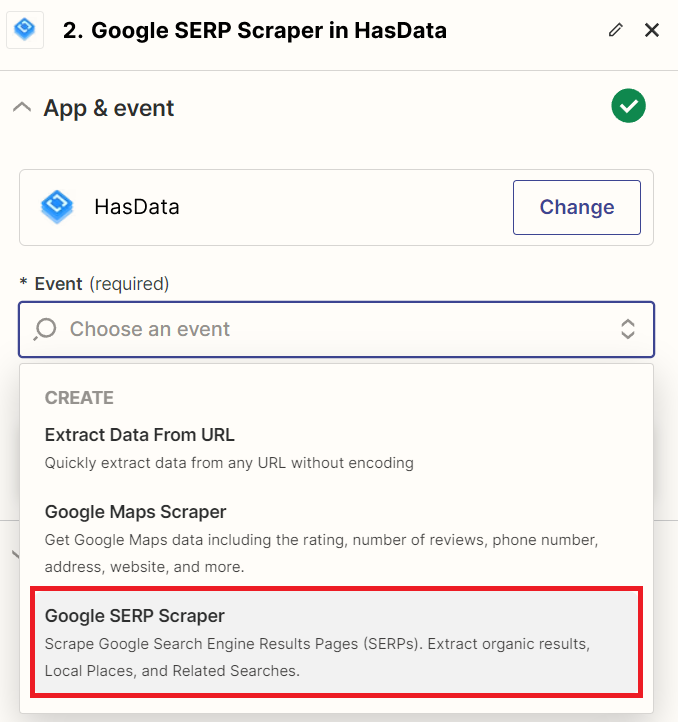

To do this, add data to the keyword column and run the trigger test. If the data is found and retrieved, the trigger is working correctly, and you can proceed to the next action. Find the HasData integration and select the Google SERP action.

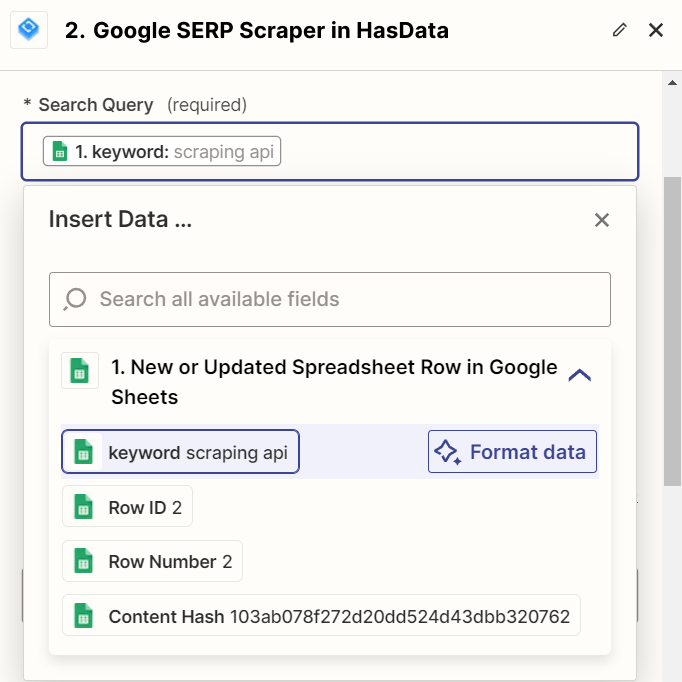

Connect your HasData account using your API key, then go to the action settings. In the Search Query field, insert the keyword from the table. You can customize the rest of the parameters, including language and location settings, as needed. More information about all available parameters can be found in the official documentation.

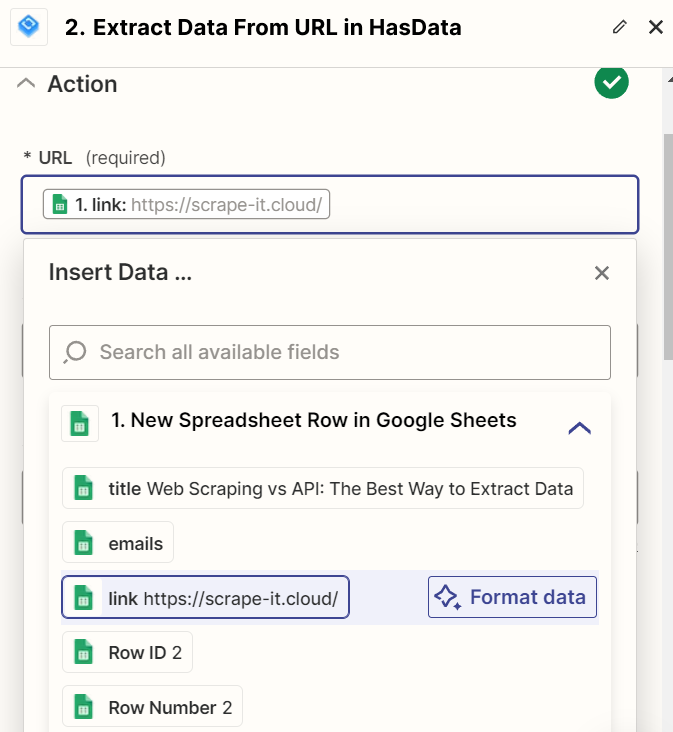

Before proceeding, verify that the action works. The final step in this Zap is to save the data to a separate sheet in the same Google Sheets file.

Zapier doesn't make it easy to loop over every returned link within this Zap, so we won't process the data immediately. Instead, we will save the links and website names to a new sheet and process them in a subsequent Zap.

Let's save the Zap we just created and create another one.

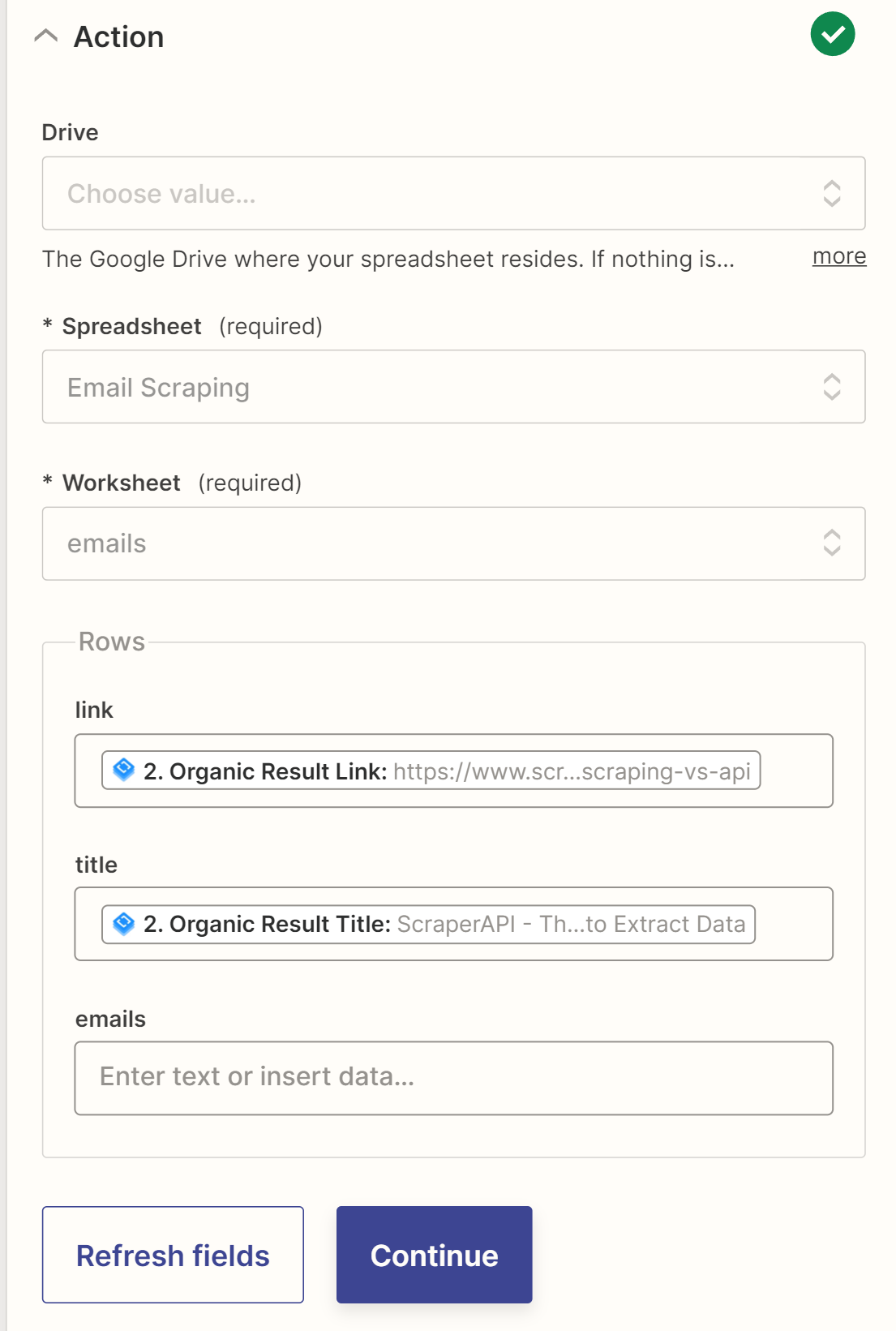

Scrape Emails from Websites

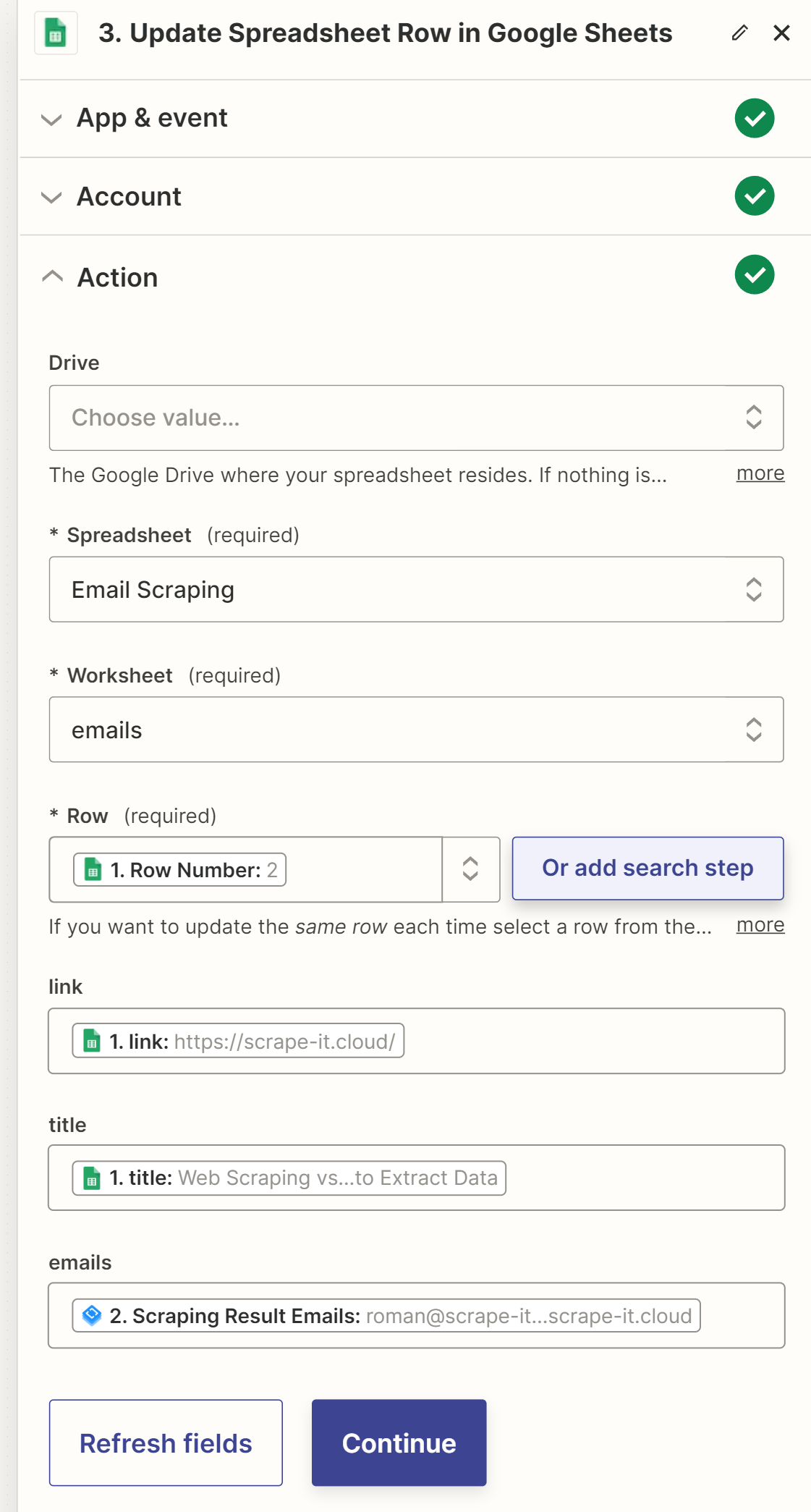

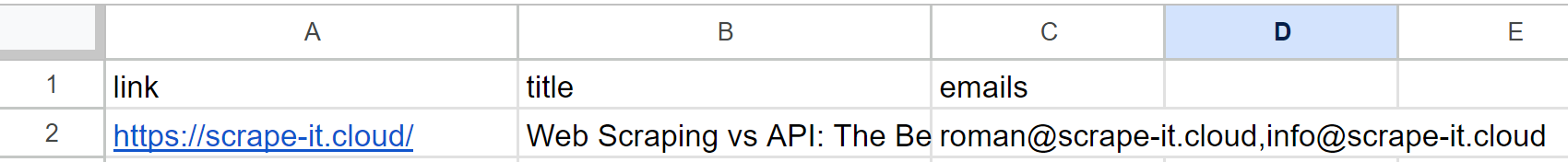

To start, we'll add a trigger that will activate our Zap. In our case, we need to track the appearance of new rows in a Google Sheet and then process them.

Each time a new row appears in the email list sheet, we call the HasData action that scrapes a website by URL, passing it the previously collected link so that it returns the site's email addresses.

To complete the process, we update the row that triggered the Zap. The trigger returns the row number, which we can use to identify the row to update. Set the values for all cells in the row, including the collected emails.

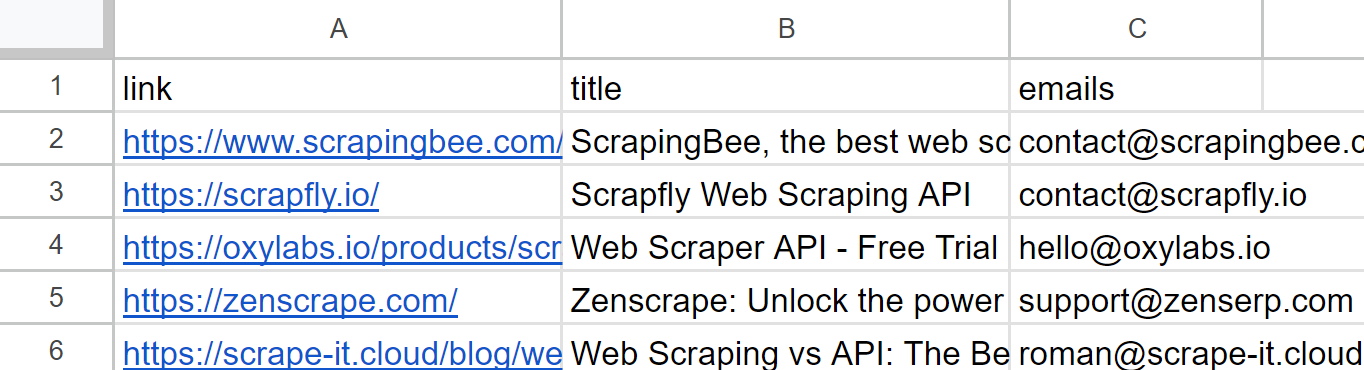

After the test, the table we created initially will contain updated data:

As we mentioned earlier, you are not required to use the first Zap. You can use a list of websites obtained in any other way. Additionally, you can further customize the Zap as needed to save additional information or to set up email delivery to the collected email addresses using Gmail integration.

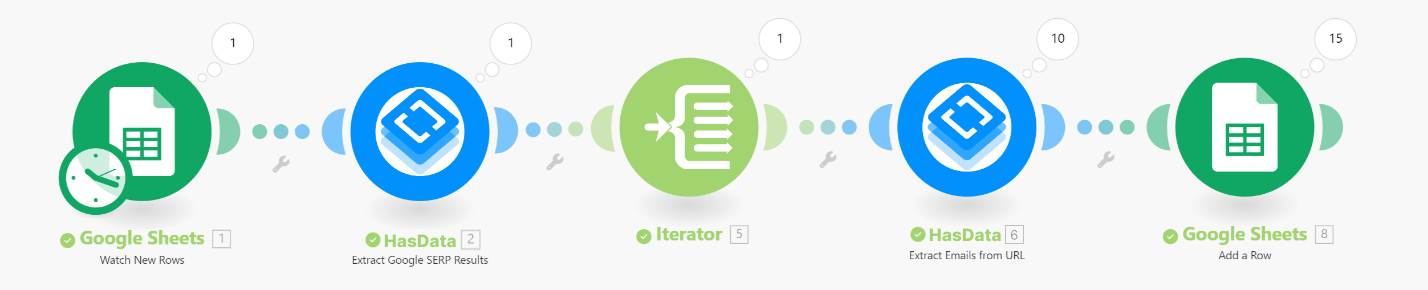

Extracting Emails with Make.com

Another popular integration service is Make.com, formerly known as Integromat. We'll use the same algorithm as in the previous example, but implement it in Make.com. Make.com also supports a wider range of data processing functions, so we will be able to process all the received links within a single scenario.

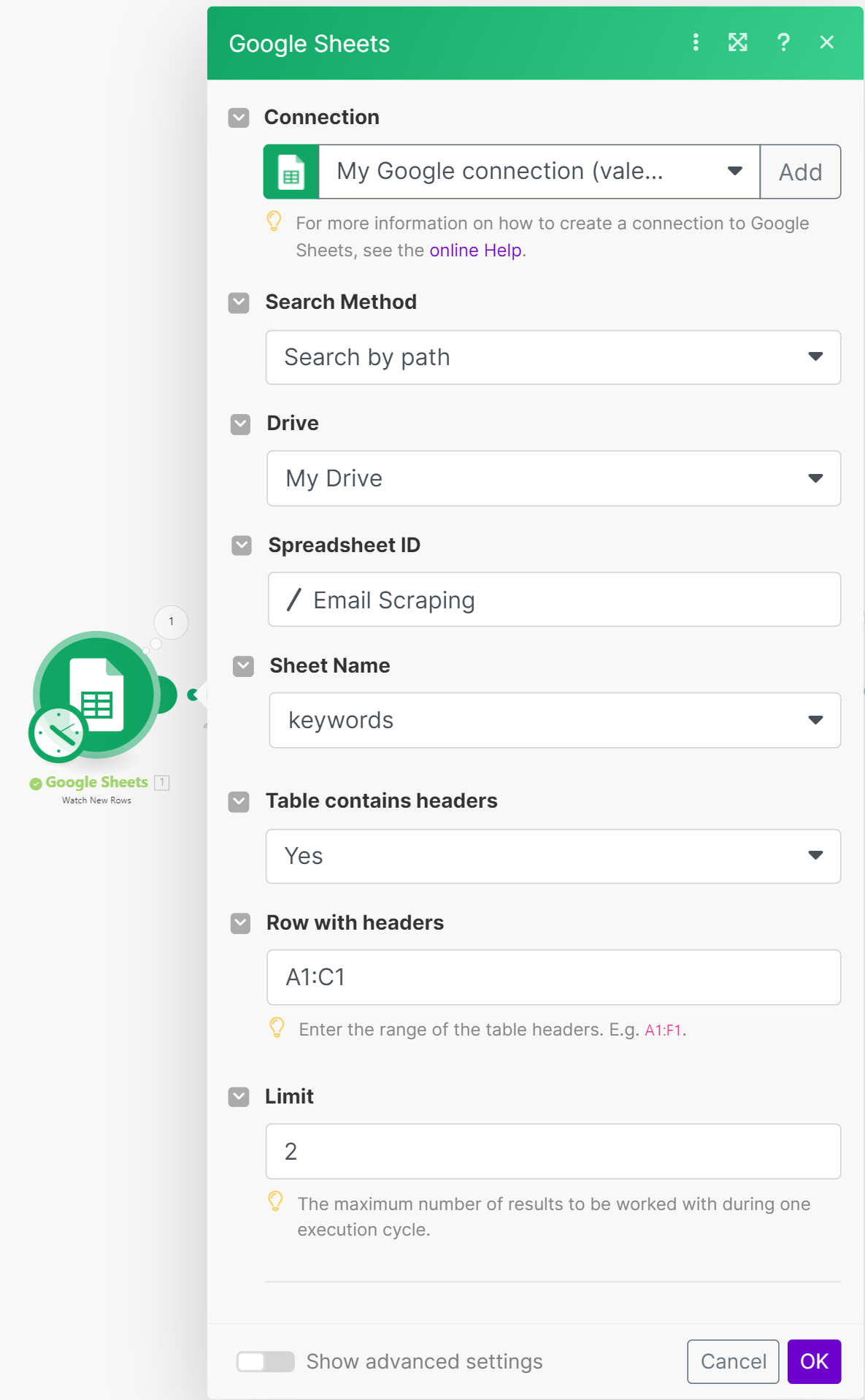

To get started, sign up and create a new scenario. The first step is to get new rows from the Google Sheets table that contains the list of keywords for which we will search for websites. To do this, add a new action, find the Google Sheets integration, and choose the method for retrieving new rows. We will use the same table as in the previous example.

Customize the row limit as you see fit. If you already have website links, you can skip to the link processing section.

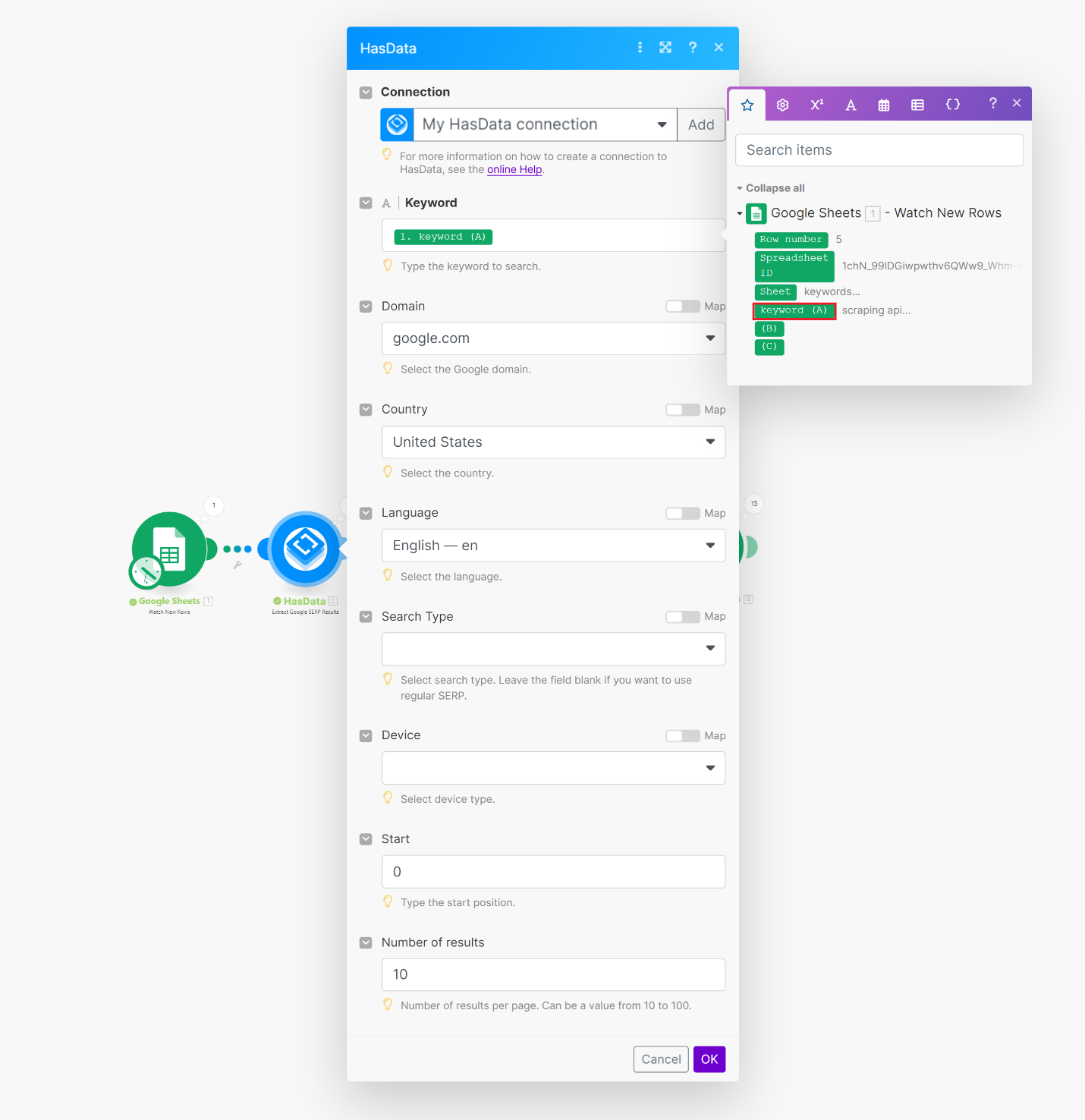

Add the next action and find the HasData integration. We need the method for extracting data from Google SERP results. In the Keyword field, insert the value from the previous step. You can also customize the language, number of results, and localization.

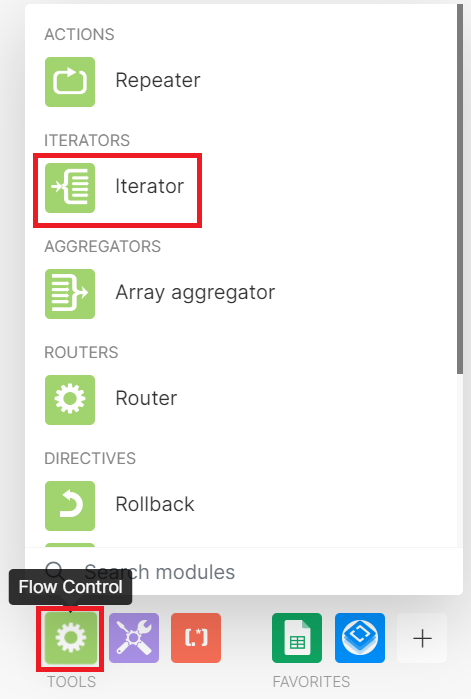

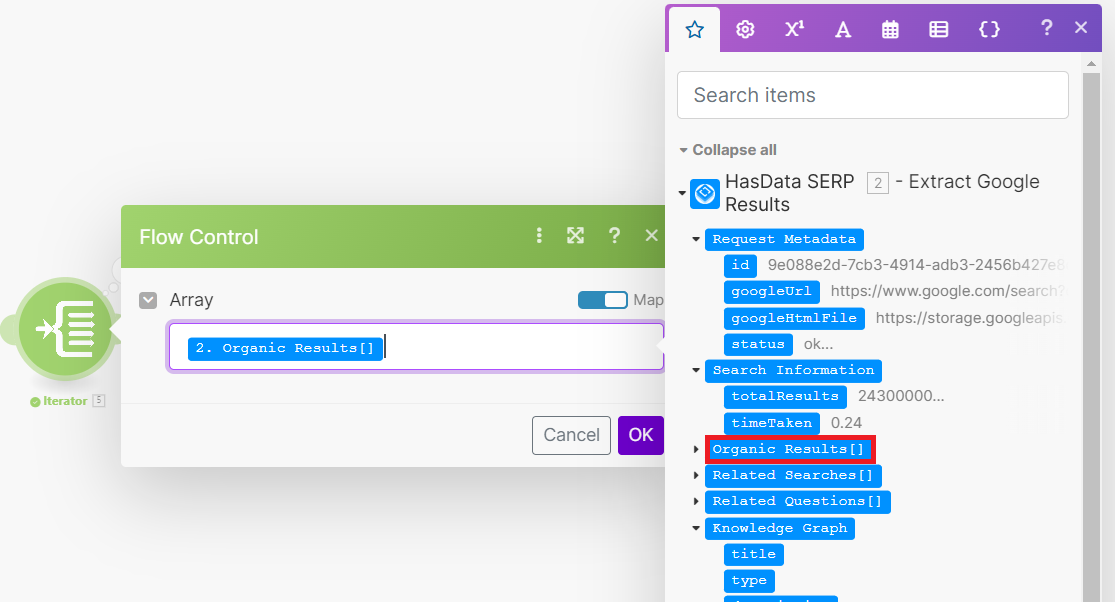

Now that we have a list of websites from search results, we need to iterate over them all and collect the company email addresses from each one. To do this, we will use an iterator, which can be found in the App Flow menu at the bottom of the screen.

An iterator is an object that allows you to sequentially access and process each element of a data structure. In other words, it works like a loop in programming. For our task, we only need to process the Organic Results. The other data returned by Google SERP API is not necessary.

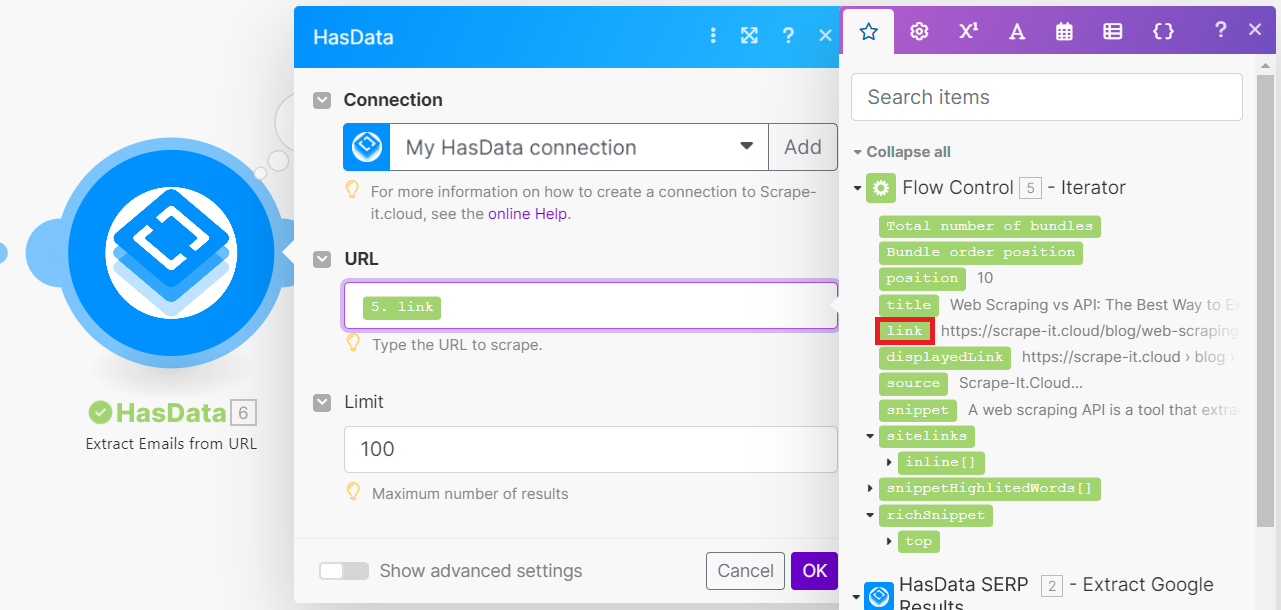

Now, let's use another HasData method: retrieving a list of email addresses from a website URL. To do this, we'll pass the link from the current iteration to the email scraping method.

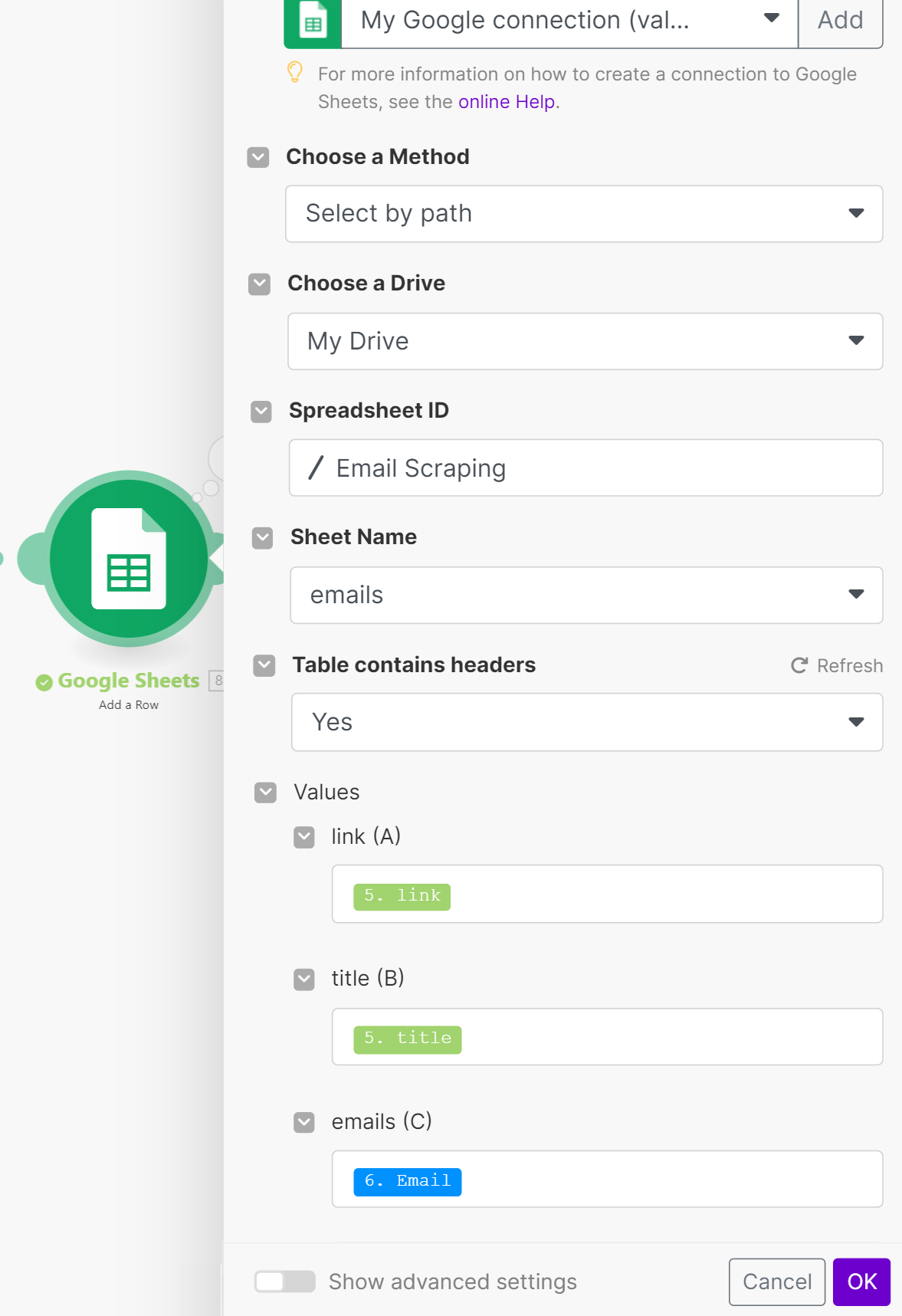

The final step is to import all of the collected data into Google Sheets. If desired, you can first group the collected data with an array aggregator, which does the reverse of an iterator by combining separate bundles back into a single array. Alternatively, you can simply import all of the data into the sheet with email addresses.
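If it helps to think of the scenario in code, the iterator and array aggregator behave roughly like a loop followed by a grouping step. The Python sketch below is only an analogy, not how Make.com runs internally: `serp_response` mimics a SERP result with an `organicResults` list (as in the Python section), and `get_emails` is a hypothetical stand-in for the HasData email-scraping action.

```python
from typing import Callable


def run_scenario(keyword: str,
                 serp_response: dict,
                 get_emails: Callable[[str], list[str]]) -> list[dict]:
    """Mimic: SERP action -> iterator -> email-scraping action, one bundle per link."""
    rows = []
    # Iterator: one pass per organic result; other SERP data is ignored
    for result in serp_response.get("organicResults", []):
        link = result["link"]
        rows.append({
            "keyword": keyword,
            "link": link,
            "title": result.get("title", ""),
            "emails": get_emails(link),  # hypothetical per-link scraping step
        })
    return rows


def aggregate(rows: list[dict]) -> dict[str, list[str]]:
    """Array aggregator: collapse the per-link bundles into one email list per keyword."""
    grouped: dict[str, list[str]] = {}
    for row in rows:
        grouped.setdefault(row["keyword"], []).extend(row["emails"])
    return grouped
```

Writing `rows` straight to the sheet corresponds to skipping the aggregator; writing the output of `aggregate` corresponds to grouping first.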

Let's run the resulting scenario and look at the result.

After completing the task, review the Google Sheets spreadsheet:

Based on our experience, Make.com offers a broader range of data processing capabilities than Zapier, including the ability to work with arrays and variables. This makes Make.com a better choice for users who need to perform complex data processing tasks.

Use Cases and Practical Applications

As we said before, email scraping is a valuable lead generation strategy that can help businesses quickly and efficiently identify potential customers. Here are some examples of how you can use email addresses that you have scraped from websites:

- Marketing campaigns. You can use the emails to send marketing campaigns (cold emails) to potential customers.

- Lead generation. You can use email addresses to generate leads for your business.

- Market research. You can use emails to research a target audience.

The source of business email addresses should be chosen based on the intended use of the data. Since emails are typically collected for lead generation, we used the most relevant sources for this purpose, such as keyword search results and Google Maps.

Best Practices for Email Scraping

To make email scraping more productive, it is important to follow some rules and recommendations. Some of these recommendations are for improving the quality of scraping, such as using proxies, CAPTCHA solvers, or dedicated scraping services or APIs. Other recommendations are specific to effective email collection, which can help you collect more and higher-quality email addresses from companies and individuals.

Here are some specific recommendations for effective email collection:

- Perform data quality checks. After collecting data, carefully filter and clean it to remove invalid or outdated email addresses. You can use a dedicated email validation service to check email addresses before adding them to your database or file.

- Set a limit on the number of requests. Limit the number of requests you make to websites to avoid being blocked or overloading servers. Adding delays between requests reduces the load on the target servers.

- Consider implementing data backup. Create backups of your collected data to ensure that you don't lose it.

- Keep your data up to date. Refresh your collected data regularly to avoid using outdated addresses. This is especially important if you are using the data for email marketing campaigns and sales prospecting.
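The request-limiting advice above can be as simple as skipping duplicate URLs and pausing between requests to the same domain. A minimal sketch, assuming a fixed per-domain delay is acceptable for your targets (the 2-second default is an arbitrary example, not a recommended value). `fetch` is a hypothetical callable you provide, and `sleep` and `clock` are injectable so the logic can be tested without actually waiting.

```python
import time
from typing import Callable, Iterable
from urllib.parse import urlsplit


def polite_crawl(urls: Iterable[str],
                 fetch: Callable[[str], str],
                 delay: float = 2.0,
                 sleep: Callable[[float], None] = time.sleep,
                 clock: Callable[[], float] = time.monotonic) -> dict[str, str]:
    """Fetch each unique URL once, waiting at least `delay` seconds between hits to one domain."""
    last_hit: dict[str, float] = {}  # domain -> time the last request started
    pages: dict[str, str] = {}
    for url in urls:
        url = url.strip()
        if not url or url in pages:
            continue  # skip blank lines and URLs that were already fetched
        domain = urlsplit(url).netloc.lower()
        if domain in last_hit:
            wait = delay - (clock() - last_hit[domain])
            if wait > 0:
                sleep(wait)
        last_hit[domain] = clock()
        pages[url] = fetch(url)
    return pages
```

Requests to different domains are not delayed, so a mixed list of websites still moves quickly while each individual server sees a steady, low request rate.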

In addition, when collecting email addresses, it is best to use reliable sources where users have explicitly published their contact details. For individual users, this may include social media platforms such as LinkedIn. For companies, it is best to scrape data from their contact pages or homepages.
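For company websites, one way to follow this advice is to look for contact or about pages linked from the homepage and scrape those first. A minimal sketch using only the standard library's `html.parser`; the keyword list (`contact`, `kontakt`, `about`, `impressum`) is an assumption you would adapt to the languages of your target sites, and the class and function names are illustrative.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

# Illustrative hints; adjust for the languages of your target sites
CONTACT_HINTS = ("contact", "kontakt", "about", "impressum")


class LinkCollector(HTMLParser):
    """Collect (href, link text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def find_contact_pages(base_url: str, html: str) -> list[str]:
    """Return same-site URLs whose href or link text suggests a contact/about page."""
    parser = LinkCollector()
    parser.feed(html)
    site = urlsplit(base_url).netloc
    found: list[str] = []
    for href, text in parser.links:
        url = urljoin(base_url, href)  # resolve relative links like "/contact"
        if urlsplit(url).netloc != site:
            continue  # stay on the company's own site (also skips mailto: links)
        haystack = f"{href} {text}".lower()
        if any(hint in haystack for hint in CONTACT_HINTS) and url not in found:
            found.append(url)
    return found
```

The returned URLs can then be passed to either the email scraping API or the regex script from earlier sections.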

The Legality of Email Scraping

In most cases, collecting email addresses from public sources of data for personal purposes, excluding the purpose of transferring data to third parties, is legal. For example, collecting contact information for companies from public sources of information for research purposes is legal, provided that it does not conflict with the company's policy.

However, it is slightly more complicated with the emails of individual users, as they cannot be used without the user's explicit consent. For example, compliance with GDPR (General Data Protection Regulation) is especially important when working with the data of European citizens.

To avoid problems in the future, before collecting data, familiarize yourself with the website's policies and follow their rules. Also, make sure that your use of the data is consistent with its original purpose and does not violate the law.

Conclusion

In this article, we explored a variety of ways to collect email addresses, suitable both for those who are not very strong in programming and for those who want to create and use their own script. We also built data collection workflows on two popular integration platforms, Zapier and Make.com.

Beyond the practical examples, we shared recommendations that can help you develop your email collection automation further. The section on legality will help you collect the necessary data without violating privacy rules.