Data scraping is an essential part of many businesses. To offer up-to-date information, companies need to track the interests and trends of their customers and of ordinary users.

One of the most valuable uses of scrapers is scraping Google search results. Using search engine result scraping, you can track what data users are getting and gather leads.

In our experience, Python is one of the most suitable programming languages for scraping. It lets you write data collection scripts quickly and easily. So, in this tutorial, we'll look at how to scrape Google search result pages in Python, what challenges you'll face, and how you can get around them.


Google SERP Page Analysis

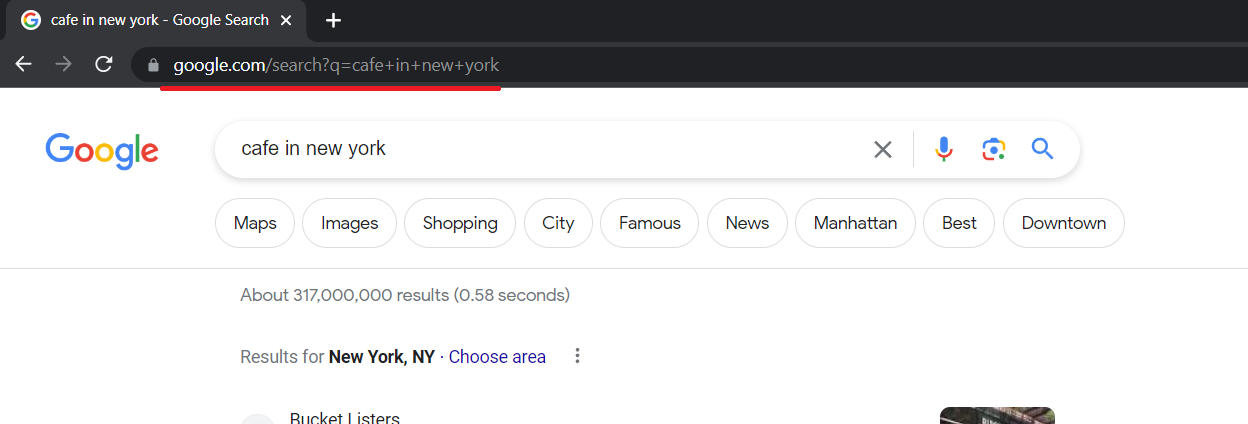

Before building a Google scraper, you need to analyze the page you will scrape to find out where the necessary elements are located. Start with the link Google generates for a search query.

The link is quite simple, and we can generate it ourselves. The "https://www.google.com/search?q=" part will remain unchanged, followed by the query text with a "+" instead of a space.
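Replacing spaces with "+" works for simple queries, but characters such as "&" or "#" would break the link. Here is a minimal sketch that uses the standard library's `urllib.parse.quote_plus`, which encodes spaces as "+" and also escapes those characters. The helper name `build_search_url` is our own:

```python
from urllib.parse import quote_plus


def build_search_url(query: str) -> str:
    """Build a Google search URL, encoding spaces as "+" and escaping special characters."""
    return "https://www.google.com/search?q=" + quote_plus(query)


print(build_search_url("cafe in new york"))
# https://www.google.com/search?q=cafe+in+new+york
print(build_search_url("fish & chips"))
# https://www.google.com/search?q=fish+%26+chips
```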

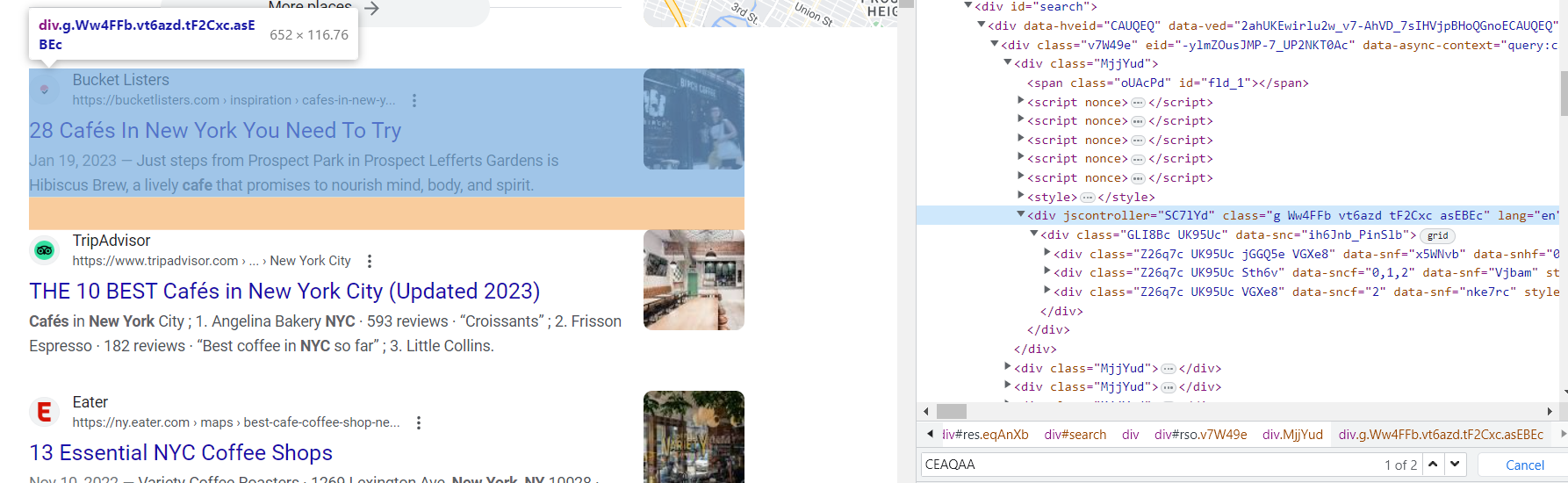

Now we need to find where the data we need is located. To do this, open DevTools (right-click on the page and select Inspect, or press F12).

Unfortunately, almost all class names on the search engine result pages are generated automatically, which makes it hard to select data by class name. However, the structure of the page stays the same. So does the 'g' class of the search result items, at least at the time of writing.

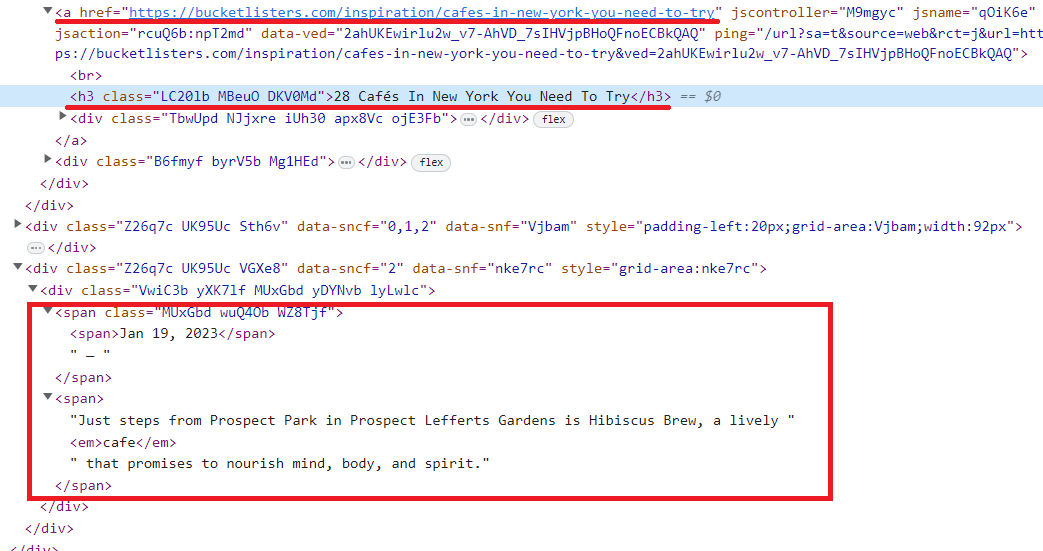

If we look carefully at the elements inside this class, we can identify the tags that hold each result's link, title, and description.

Now that we've looked at the page and found the elements we'll scrape, let's get down to creating the scraper.

Setting up the Development Environment

Before we dive into web scraping Google search results, it's essential to set up a proper development environment in Python. This involves installing the necessary libraries and tools that will enable us to send requests to Google, parse HTML responses, and handle the data effectively.

First, ensure that you have Python installed on your machine. To check it, you can use the following:

```shell
python --version
```

If the command prints a version number, Python is installed. We will use Python 3.10.7. If you do not have Python installed, visit the official Python website and download the latest version compatible with your operating system. Follow the installation instructions, and add Python to your system's PATH variable.

To show the different ways of scraping the Google SERP, let's install the following libraries:

```shell
pip install beautifulsoup4
pip install selenium
pip install google-serp-api
```

We will also use the requests library in our Python scripts. It is not part of the standard library, so install it if you don't have it yet:

```shell
pip install requests
```

You can also use the built-in urllib library instead of requests.

Additionally, to use Selenium with a headless browser, you need the WebDriver for your browser, because Selenium talks to each browser through a separate driver. For example, if you're using Google Chrome, you'll need ChromeDriver, which you can download from the official website. Selenium 4.6 and later can also download a matching driver automatically through Selenium Manager.


Scraping Google Search with Python using BeautifulSoup

Now that we have set up the development environment, let's dive into parsing and extracting data from Google search results. We'll use the requests library to send HTTP requests and retrieve the HTML response, and the BeautifulSoup library to parse and navigate the HTML structure. Let's create a new file and import the libraries:

```python
import requests
from bs4 import BeautifulSoup
```

To disguise our scraper and reduce the chance of being blocked, let's set request headers:

```python
header = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'}
```

It is best to send real header values. For example, you can copy your own browser's headers from the Network tab in DevTools. Then we send the request and store the response in a variable:

```python
data = requests.get('https://www.google.com/search?q=cafe+in+new+york', headers=header)
```

At this point, we already have the page's HTML, and next we need to process it. Create a BeautifulSoup object and parse the HTML code of the page:

```python
soup = BeautifulSoup(data.content, "html.parser")
```

Let's also create a results variable to store the data about the elements:

```python
results = []
```

Recall that each search result has the class 'g'. To process the data result by result, we get all elements with the class 'g' and go through them one by one, extracting the data we need. To do this, let's create a for loop:

```python
for g in soup.find_all('div', {'class': 'g'}):
```

If we look closely at the page's code, we also see that each result's title is nested inside an <a> tag, which stores the page link. Let's use that to get the title, link, and description. Since the description can be missing, we put it in a try...except block:

```python
    anchors = g.find_all('a')
    if anchors:
        link = anchors[0]['href']
        title = g.find('h3').text
        try:
            description = g.find('div', {'data-sncf': '2'}).text
        except AttributeError:
            # g.find() returned None: this result has no description block
            description = "-"
```

Put the data about each element into the results variable:

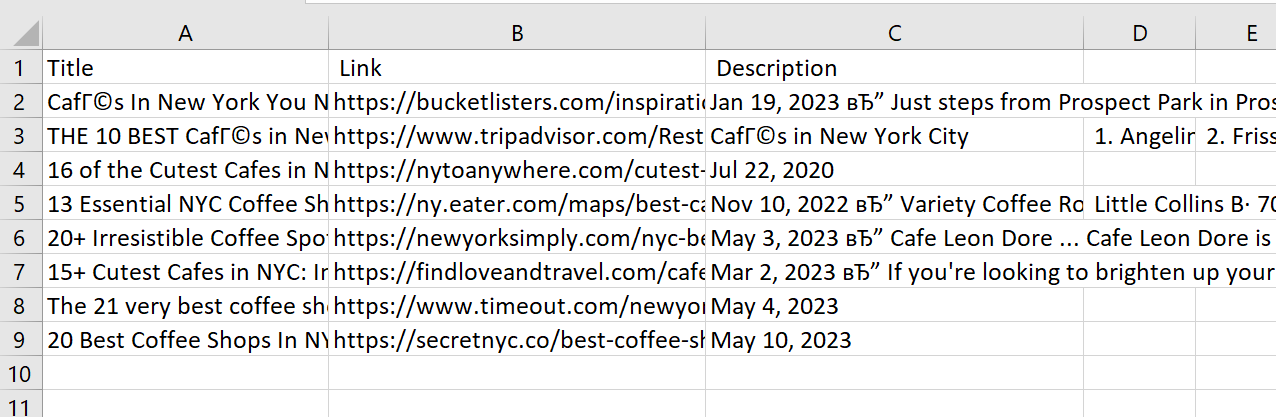

```python
        results.append(str(title) + ";" + str(link) + ";" + str(description))
```

Now we could print the variable to the screen, but let's go a step further and save the data to a file. To do this, create (or overwrite) a file with the columns "Title", "Link", and "Description":

```python
with open("serp.csv", "w", encoding="utf-8") as f:
    f.write("Title; Link; Description\n")
```

Then write the data from the results variable line by line:

```python
# Open the file once in append mode and add one line per result
with open("serp.csv", "a", encoding="utf-8") as f:
    for result in results:
        f.write(result + "\n")
```

We now have a simple search engine scraper that saves its results to a CSV file.
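Joining fields with ";" by hand breaks a row whenever a title or description itself contains a semicolon. One alternative uses Python's built-in csv module, which quotes such fields automatically. This is a sketch: the helper name `save_results` and the sample row are hypothetical.

```python
import csv


def save_results(path, rows):
    """Write (title, link, description) tuples to a semicolon-separated CSV file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f, delimiter=";")
        writer.writerow(["Title", "Link", "Description"])
        # csv.writer wraps any field that contains ";" or quotes in double quotes
        writer.writerows(rows)


# Hypothetical sample row whose title contains the delimiter
save_results("serp.csv", [("Cafe A; Brooklyn", "https://example.com/a", "-")])
```

Reading the file back with `csv.reader(f, delimiter=";")` returns the title intact, semicolon included.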

Full code:

```python
import requests
from bs4 import BeautifulSoup

url = 'https://www.google.com/search?q=cafe+in+new+york'
header = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.169 Safari/537.36'}

data = requests.get(url, headers=header)

if data.status_code == 200:
    soup = BeautifulSoup(data.content, "html.parser")
    results = []

    # Each organic result is wrapped in a <div class="g">
    for g in soup.find_all('div', {'class': 'g'}):
        anchors = g.find_all('a')
        if anchors:
            link = anchors[0]['href']
            title = g.find('h3').text
            try:
                description = g.find('div', {'data-sncf': '2'}).text
            except AttributeError:
                # No description block in this result
                description = "-"
            results.append(str(title) + ";" + str(link) + ";" + str(description))

    # Write the header row, then one line per result
    with open("serp.csv", "w", encoding="utf-8") as f:
        f.write("Title; Link; Description\n")
        for result in results:
            f.write(result + "\n")
```

Unfortunately, this script has several drawbacks:

- It collects only a few fields: title, link, and description.

- It can't collect content that is rendered dynamically with JavaScript.

- It doesn't use proxies.

- It doesn't perform any actions on the page.

- It can't solve CAPTCHAs.

Let's write a new script based on the Selenium library to solve some of these problems.

Use Headless Browser to Get Data From Search Results

A headless browser can be advantageous when dealing with websites that load content dynamically or require JavaScript rendering. Selenium, a powerful web automation tool, lets us drive a headless browser, interact with web pages, and extract data from them.

Create a new file and import the libraries:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
```

Now let's specify where the previously downloaded web driver is located:

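```python
from selenium.webdriver.chrome.service import Service

# Raw string so the backslash in the Windows path is not treated as an escape
DRIVER_PATH = r'C:\chromedriver.exe'

# Run Chrome in headless mode (no visible window)
options = webdriver.ChromeOptions()
options.add_argument("--headless=new")

# Selenium 4.10+ no longer accepts executable_path directly; pass a Service instead
driver = webdriver.Chrome(service=Service(executable_path=DRIVER_PATH), options=options)
```

In the previous script, we hard-coded the query into the link. But all search links follow the same pattern, so let's improve that and generate the link from a text query: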

```python
search_query = "cafe in new york"
base_url = "https://www.google.com/search?q="
search_url = base_url + search_query.replace(" ", "+")
```

Now we can open the page we need:

```python
driver.get(search_url)
```

Let's extract the data using the same selectors as in the previous script:

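```python
results = []
result_divs = driver.find_elements(By.CSS_SELECTOR, "div.g")

for result_div in result_divs:
    anchors = result_div.find_elements(By.CSS_SELECTOR, "a")
    titles = result_div.find_elements(By.CSS_SELECTOR, "h3")
    if not anchors or not titles:
        continue  # skip blocks that are not regular results

    link = anchors[0].get_attribute("href")
    title = titles[0].text

    # The leading "." keeps the XPath search inside the current result;
    # find_elements returns an empty list instead of raising when nothing matches
    description_elements = result_div.find_elements(By.XPATH, ".//div[@data-sncf='2']")
    description = description_elements[0].text if description_elements else "-"

    results.append(str(title) + ";" + str(link) + ";" + str(description))
```

Close the browser: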

```python
driver.quit()
```

And then save the data to a file:

```python
with open("serp.csv", "w", encoding="utf-8") as f:
    f.write("Title; Link; Description\n")
    for result in results:
        f.write(result + "\n")
```

Using Selenium, we solved some of the problems associated with scraping. For example, we reduced the risk of being blocked, since a headless browser closely imitates a real user's browser and makes the script harder to tell apart from a person.

This approach also lets us interact with elements on the page and scrape dynamic pages.

However, we still can’t control our location, we can’t solve a CAPTCHA if one appears, and we don’t use proxies. Let's move on to the next library to solve these problems.


Web Scraping with Google SERP API Python Library

To work with our library, you need an API key, which you can find in your account. Let's use the library to build a more feature-rich Google SERP scraper:

```python
from google_serp_api import ScrapeitCloudClient
```

Let's also import the built-in json library to handle the response, which comes in JSON format:

```python
import json
```

Let's specify the API key and set the parameters:

```python
client = ScrapeitCloudClient(api_key='YOUR-API-KEY')

search_key = "cafe in new york"

response = client.scrape(
    params={
        "q": search_key,
        "location": "Austin, Texas, United States",
        "domain": "google.com",
        "deviceType": "desktop",
        "num": 100
    }
)
```

You can set the search keyword, the location you want to get results for, the Google domain, the device type, and the number of results to scrape. If you execute this code, it will return a JSON-formatted response with the following structure:

- requestMetadata (request metadata)
  - id (request ID)
  - googleUrl (Google search URL)
  - googleHtmlFile (URL of the Google HTML file)
  - status (request status)
- organicResults (organic results)
  - position (result position)
  - title (result title)
  - link (result link)
  - displayedLink (displayed result link)
  - source (result source)
  - snippet (result snippet)
  - snippetHighlitedWords (highlighted words in the result snippet)
  - sitelinks (site links embedded in the result)
- localResults (local results)
  - places (places)
    - position (place position)
    - title (place title)
    - rating (place rating)
    - reviews (number of reviews for the place)
    - reviewsOriginal (original format of the number of reviews for the place)
    - price (place price)
    - address (place address)
    - hours (place hours of operation)
    - serviceOptions (service options)
    - placeId (place ID)
    - description (place description)
  - moreLocationsLink (link to more locations)
- relatedSearches (related searches)
  - query (related query)
  - link (link to related search results)
- relatedQuestions (related questions)
  - question (related question)
  - snippet (snippet of the answer to the related question)
  - link (link to related question results)
  - title (title of the related question result)
  - displayedLink (displayed link of the related question result)
- pagination (pagination)
  - next (link to the next page of results)
- knowledgeGraph (knowledge graph)
  - title (title)
  - type (type)
  - description (description)
  - source (source)
    - link (source link)
    - name (source name)
  - peopleAlsoSearchFor (people also search for)
    - name (name)
    - link (link to related search results)
- searchInformation (search information)
  - totalResults (total number of results)
  - timeTaken (time taken for the request)

Let's save the data from "organicResults" and "relatedSearches" to separate files. First, parse the response text as JSON and store the attributes we need in variables:

```python
data = json.loads(response.text)
organic_results = data['organicResults']
keywords = data['relatedSearches']
```

Create variables to hold the formatted rows:

```python
rows_organic = []
rows_keys = []
```

Go through the results one by one and add them to these variables in the right format:

```python
for result in organic_results:
    rows_organic.append(str(result['position']) + ";" + str(result['title']) + ";" + str(result['link']) + ";" + str(result['source']) + ";" + str(result['snippet']))

for result in keywords:
    rows_keys.append(str(result['query']) + ";" + str(result['link']))
```

Let's save the data to files:

with open("data_organic.csv", "w") as f:

f.write("position; title; url; domain; snippet'\n")

for row in rows_organic:

with open("data_organic.csv", "a", encoding="utf-8") as f:

f.write(str(row)+"\n")

with open("data_keys.csv", "w") as f:

f.write("keyword; path'\n")

for row in rows_keys:

with open("data_keys.csv", "a", encoding="utf-8") as f:

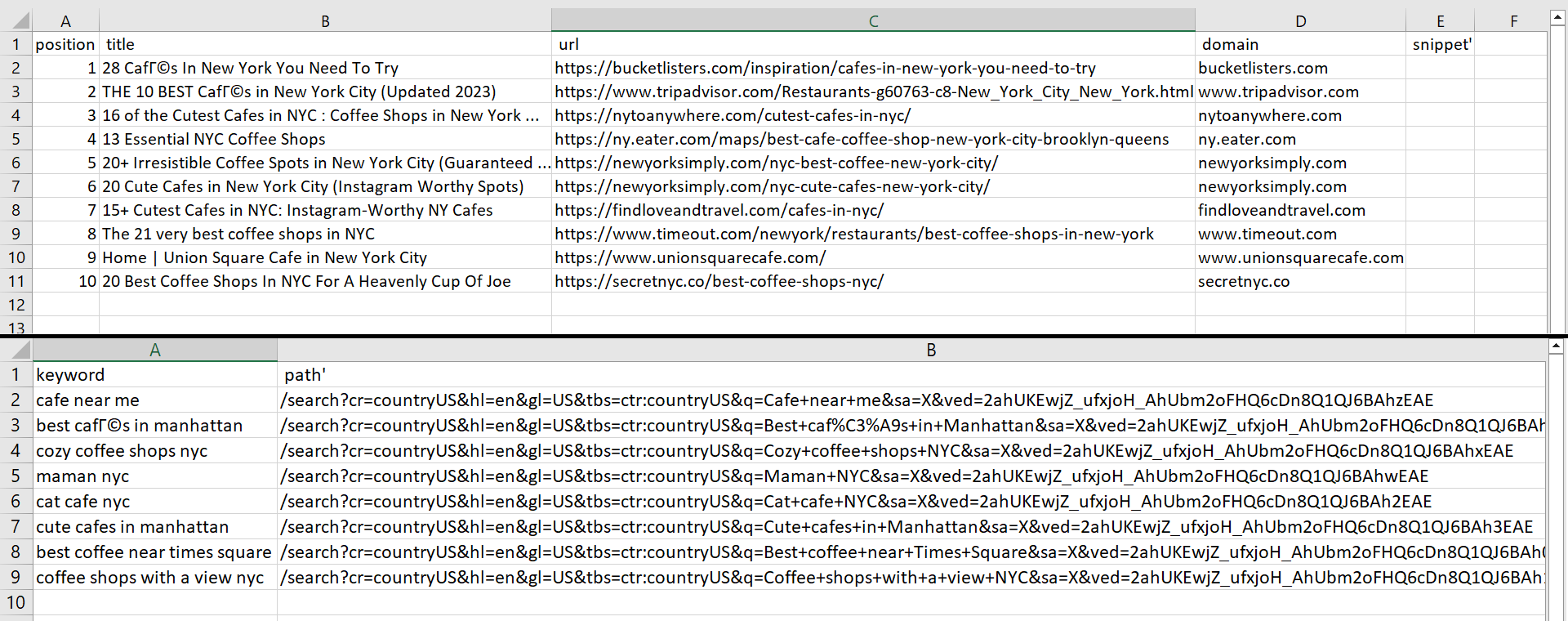

f.write(str(row)+"\n")As a result of running the script, we got two files:
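The same pattern extends to the nested fields in the response structure listed earlier. For example, local results keep their entries in a places list under localResults. The sketch below assumes that layout, with localResults as an object that contains a places list. The `sample` dictionary is made-up data for illustration, not a real API response, and `extract_places` is a hypothetical helper:

```python
def extract_places(data):
    """Return (position, title, rating, address) rows from localResults -> places.

    Assumes localResults is an object holding a "places" list; missing keys
    become empty strings so one incomplete place does not stop the loop.
    """
    places = data.get("localResults", {}).get("places", [])
    return [
        (p.get("position", ""), p.get("title", ""), p.get("rating", ""), p.get("address", ""))
        for p in places
    ]


# Made-up sample shaped like the documented fields
sample = {
    "localResults": {
        "places": [
            {"position": 1, "title": "Example Cafe", "rating": 4.5, "address": "1 Main St"},
            {"position": 2, "title": "Another Cafe"},
        ]
    }
}

for row in extract_places(sample):
    print(";".join(str(value) for value in row))
```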

You can change the number of results with the "num" parameter in "params". Now let's assume we have a file that stores all the keywords we need to scrape. We need to open the file first and then go through it line by line, running a new search for each keyword:

with open("keywords.csv") as f:

lines = f.readlines()

with open("data_keys.csv", "w") as f:

f.write("search_key; keyword; path'\n")

with open("data_organic.csv", "w") as f:

f.write("search_key; position; title; url; domain; snippet'\n")

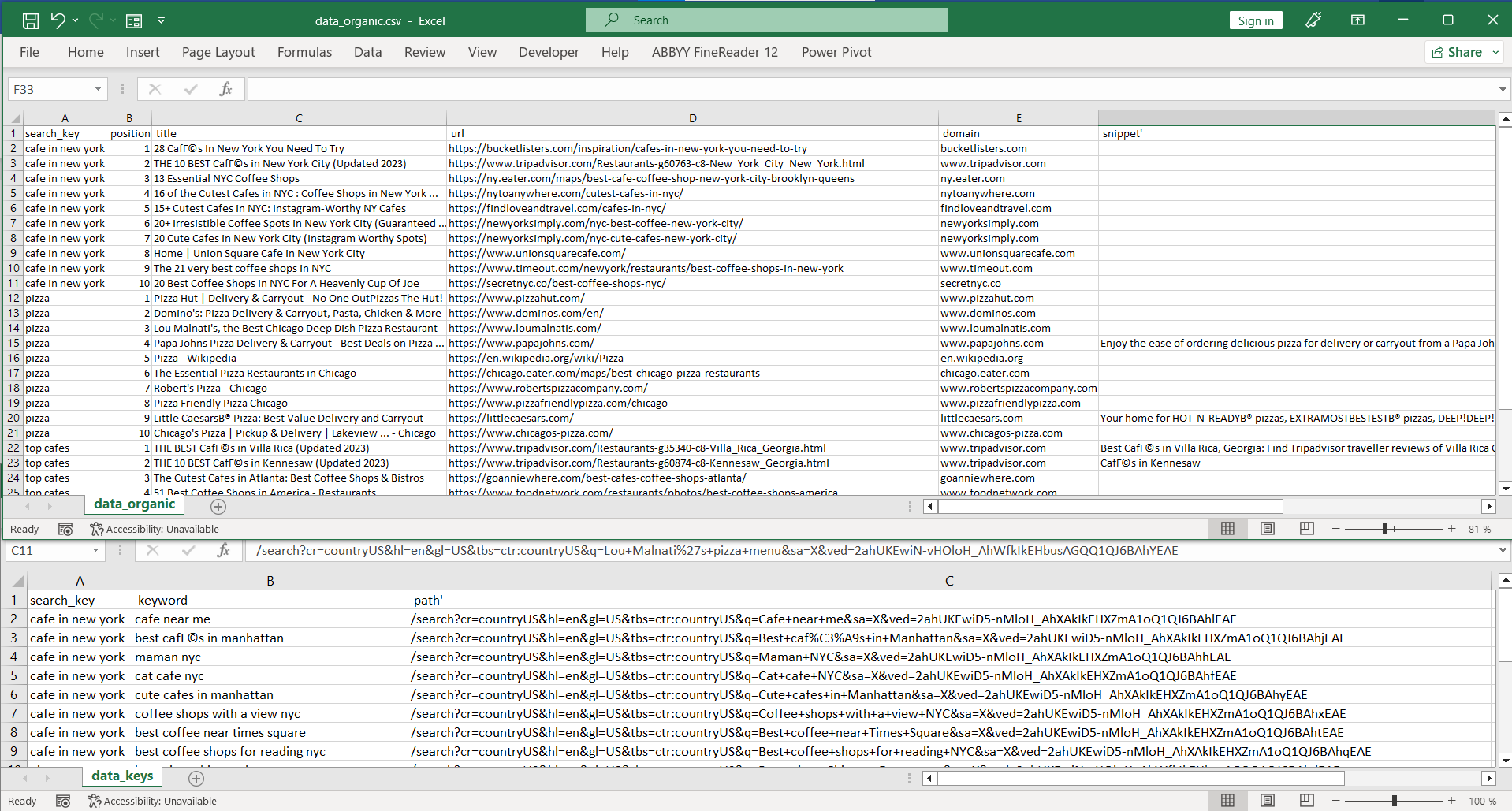

for line in lines:We have specified column headers for new files before the keyword passes. This means the file is not overwritten when you search a new keyword. Instead of this, the file is augmented with more data. We have also added another column that contains the text search query to which the results match.

Inside the loop, let's take the keyword from the current line and pass it to the request:

```python
    search_key = line.strip()

    response = client.scrape(
        params={
            "q": search_key,
            "location": "Austin, Texas, United States",
            "domain": "google.com",
            "deviceType": "desktop",
            "num": 100
        }
    )
```

The rest of the code stays the same, except that each row gets an extra column with the search query. As a result, we get the following two files:

Full Python code:

```python
import json

from google_serp_api import ScrapeitCloudClient

# Create the API client once and reuse it for every keyword
client = ScrapeitCloudClient(api_key='YOUR-API-KEY')

with open("keywords.csv") as f:
    lines = f.readlines()

# Write the header rows once; results for each keyword are appended below
with open("data_keys.csv", "w", encoding="utf-8") as f:
    f.write("search_key; keyword; path\n")

with open("data_organic.csv", "w", encoding="utf-8") as f:
    f.write("search_key; position; title; url; domain; snippet\n")

for line in lines:
    search_key = line.strip()
    if not search_key:
        continue  # skip blank lines in keywords.csv

    response = client.scrape(
        params={
            "q": search_key,
            "location": "Austin, Texas, United States",
            "domain": "google.com",
            "deviceType": "desktop",
            "num": 100
        }
    )

    data = json.loads(response.text)
    organic_results = data.get('organicResults', [])
    keywords = data.get('relatedSearches', [])

    rows_organic = []
    rows_keys = []

    for result in organic_results:
        rows_organic.append(f"{search_key};{result.get('position', '')};{result.get('title', '')};{result.get('link', '')};{result.get('source', '')};{result.get('snippet', '')}")

    for result in keywords:
        rows_keys.append(f"{search_key};{result.get('query', '')};{result.get('link', '')}")

    # Append this keyword's rows to the output files
    with open("data_organic.csv", "a", encoding="utf-8") as f:
        for row in rows_organic:
            f.write(row + "\n")

    with open("data_keys.csv", "a", encoding="utf-8") as f:
        for row in rows_keys:
            f.write(row + "\n")
```

The primary benefit of using the HasData library is that you don't need to think about proxy rotation, JavaScript rendering, or CAPTCHA solving: the API returns ready-to-use data.

Scraping Google Search with Python: Challenges & Limitations

Scraping Google search results using Python can be tricky. There are several challenges to overcome when trying to scrape Google search results, from the technical complexities associated with the search engine's ever-changing algorithm to the built-in anti-scraping protections. There is also the possibility of IP address blocking and captcha entry, as well as many other challenges, which we'll discuss below.

Geolocation Limitations

Google search results can vary by location. For example, if you are searching from the United States, your results might be different from those of someone searching in Canada. If Google has permission to access your location, or you have made enough queries from one place, it will show search results localized for that area. To see what search results look like in other countries or cities, you need to use a proxy server in that location, or you can use a ready-made Web Scraping API that takes care of this for you.

Country-Specific Domains

Google has separate search domains for different countries, for example, google.co.uk for the UK or google.de for Germany. Each has its own features, its own SERP, and its own Google Maps, which you must take into account when scraping.

Restrictions on Result Pages

Each page shows a limited number of results. To view the next set, you need to either scroll through the existing search results or move on to the following page. Remember that Google treats each page switch as a new query. If you send too many requests, your IP address may be blocked, or you may have to complete a CAPTCHA challenge.
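To move through the result pages with plain requests, you can generate the page URLs and pause between requests. This sketch assumes that Google's web results use a `start` URL parameter as the result offset, so `start=10` gives the second page of ten results. That reflects how Google's URLs currently look, not a documented guarantee. `fetch` is a hypothetical placeholder for your own request function, and the default delay is an arbitrary choice:

```python
import time
from urllib.parse import urlencode


def page_urls(query, pages, per_page=10):
    """Build search URLs for the first `pages` result pages using the `start` offset."""
    base = "https://www.google.com/search?"
    return [base + urlencode({"q": query, "start": page * per_page}) for page in range(pages)]


def crawl(query, pages, fetch, delay_seconds=5.0):
    """Call `fetch` (your own request function) for each page, pausing between requests."""
    results = []
    for i, url in enumerate(page_urls(query, pages)):
        if i:
            time.sleep(delay_seconds)  # space out requests to lower the risk of blocks
        results.append(fetch(url))
    return results
```

For example, `page_urls("cafe in new york", 2)` yields the URLs with `start=0` and `start=10`.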

Captcha and Anti-Scraping Measures

As we just mentioned, Google has various methods for dealing with web scraping. Captcha and IP address blocking are two of the most common strategies.

To solve CAPTCHA challenges, you can use third-party services where real people solve them for a small fee. However, you have to integrate such a service into your own application and configure it correctly.
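Before handing a page to a CAPTCHA-solving service, the scraper first has to notice that it received a challenge instead of results. The following is a rough heuristic. It assumes, based on commonly observed behavior rather than any documented guarantee, that Google answers suspicious traffic with HTTP 429 or redirects to a URL containing `/sorry/`. The function name `looks_blocked` is our own:

```python
def looks_blocked(status_code, final_url, html):
    """Heuristically decide whether a response is a block or CAPTCHA page.

    Assumptions: HTTP 429 means rate limiting, and Google's challenge pages
    live under a "/sorry/" path or mention a captcha in the HTML. The last
    check can give false positives if the results themselves mention captchas.
    """
    if status_code == 429:
        return True
    if "/sorry/" in final_url:
        return True
    return "captcha" in html.lower()
```

With the requests script, you could call `looks_blocked(data.status_code, data.url, data.text)` before parsing, since `data.url` holds the final URL after redirects.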

The same applies to IP blocking. You can avoid it by renting proxies that hide your IP address, but only if you find a proxy provider reliable enough to trust with this task. You can also check out our list of free proxies.
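With a pool of proxies, a simple round-robin rotation spreads requests across several IP addresses. Here is a minimal sketch with `itertools.cycle`. The proxy URLs are placeholders, and the returned dictionary matches the format of the `proxies` argument that `requests.get` accepts:

```python
from itertools import cycle

# Placeholder addresses: replace them with proxies from your provider
PROXIES = [
    "http://user:pass@proxy1.example.com:8080",
    "http://user:pass@proxy2.example.com:8080",
]

proxy_pool = cycle(PROXIES)


def next_proxies():
    """Return the next proxy in the rotation, in the format requests expects."""
    proxy = next(proxy_pool)
    return {"http": proxy, "https": proxy}


# Usage with requests (not run here):
# data = requests.get(url, headers=header, proxies=next_proxies(), timeout=10)
```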


Dynamic HTML Structure

JavaScript rendering is another way to protect against scrapers and bots: page content is generated dynamically and can change each time the page loads. For example, the classes on a web page may have different names each time you open it, while the overall structure of the page stays the same. This makes scraping the page harder, but still possible.
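When class names change, you can rely on tags and their nesting instead. This dependency-free sketch uses Python's built-in `html.parser` to collect every `<h3>` nested inside an `<a>` link and ignores class names entirely. The sample HTML uses made-up, randomized-looking classes; real Google markup is more complex, and `TitleLinkParser` is a hypothetical name:

```python
from html.parser import HTMLParser


class TitleLinkParser(HTMLParser):
    """Collect (title, href) pairs for <h3> elements nested inside <a> tags."""

    def __init__(self):
        super().__init__()
        self.results = []
        self._href = None   # href of the <a> we are currently inside, if any
        self._in_h3 = False
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
        elif tag == "h3" and self._href is not None:
            self._in_h3 = True
            self._text = []

    def handle_endtag(self, tag):
        if tag == "h3" and self._in_h3:
            self.results.append(("".join(self._text).strip(), self._href))
            self._in_h3 = False
        elif tag == "a":
            self._href = None

    def handle_data(self, data):
        if self._in_h3:
            self._text.append(data)


html = """
<div class="xK9q"><a href="https://example.com/a"><h3 class="LC20lb">Cafe A</h3></a></div>
<div class="Zt3p"><a href="https://example.com/b"><h3 class="r7Tf">Cafe B</h3></a></div>
<h3>Not a result</h3>
"""

parser = TitleLinkParser()
parser.feed(html)
print(parser.results)
# [('Cafe A', 'https://example.com/a'), ('Cafe B', 'https://example.com/b')]
```

The same idea works with BeautifulSoup, for example by finding each `h3` and calling `find_parent('a')`.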

Unpredictable Changes

Google is constantly evolving and improving its algorithms and processes. This means that no web scraping tool can be used forever without maintenance: it needs to be kept up to date with Google's latest changes.
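One inexpensive way to notice such changes is to make the scraper fail loudly when its selectors stop matching, instead of silently writing an empty file. Here is a sketch; the exception class, the function name, and the default threshold are our own choices:

```python
class LayoutChangedError(RuntimeError):
    """Raised when a parser finds suspiciously few results."""


def check_results(results, query, minimum=1):
    """Raise if fewer than `minimum` results were parsed for `query`.

    An empty result list for a query that normally returns matches usually
    means the page layout (or a block page) no longer fits the selectors.
    """
    if len(results) < minimum:
        raise LayoutChangedError(
            f"Only {len(results)} results parsed for {query!r}; selectors may be outdated"
        )
    return results
```

Calling `check_results(results, "cafe in new york")` right after the parsing loop stops the run before an empty CSV is written.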

Conclusion and Takeaways

There are several ways and libraries that can help you extract data from Google SERPs in Python. Choose the option that best suits your project and skills.

If you are new to scraping and want to get a small amount of data, the Requests library for sending queries and the BeautifulSoup library for parsing the results may be enough. If you are familiar with headless browsers and automation tools, then Selenium is for you.

And if you don't want to connect third-party services to solve CAPTCHAs, rent proxies, or think about how to avoid blocks and still get the data you need, you can use the google_serp_api library.