WordPress, coupled with the WooCommerce plugin, is a popular and convenient choice for creating online marketplaces. It offers a user-friendly platform for building beautiful and functional e-commerce stores with minimal effort.

WooCommerce web scraping is the automated extraction of data from WooCommerce stores. It serves purposes such as price monitoring and dynamic pricing: tracking competitors' product prices and automatically updating your own to stay competitive. It also automates routine tasks, such as retrieving product descriptions and specifications, updating product information, and collecting product reviews.

Thus, WooCommerce scrapers not only facilitate the work of store owners by providing them with quick access to important competitor information but also help them manage their business more effectively by making data-driven decisions.

This article will explore the different types of scrapers and guide you through creating a universal solution for scraping most WooCommerce stores with Python. We will provide ready-made scripts that anyone can use even with minimal programming skills. However, if you're interested in scraping but have no programming experience, we recommend that you read our article on web scraping with Google Sheets. In that article, we walked through the process using a WooCommerce store as an example.

Types of WooCommerce scrapers

WooCommerce scrapers are tools designed to extract data from WooCommerce-based online stores. These scrapers come in various types depending on their functionality, complexity, and purpose. Here are some common types of WooCommerce scrapers:

- Product scrapers. Collect product information like name, description, price, images, categories, and SKUs.

- Price scrapers. Track price changes, discounts, special offers, and shipping costs.

- Review scrapers. Gather customer feedback, ratings, comments, and other helpful information.

While categorizing web scrapers into different types can be helpful when choosing a ready-made tool, it's worth remembering that building your own scraper gives you complete control over the information you obtain. One of the main advantages of a custom scraper is the ability to extract all the necessary information without any restrictions.

When creating your scraper, you can precisely define the data you need and configure the scraper accordingly. You won't be limited by predefined scraper types or restrictions imposed by third-party tools.

Moreover, building your own scraper gives you the flexibility to customize it to the unique needs of your project. You can add new features, improve performance, and flexibly adapt the scraper to changes in the website or your business requirements.

How to Create a WooCommerce Scraper

Before you start scraping WooCommerce, it is important to decide on the method of operation. There are two main approaches:

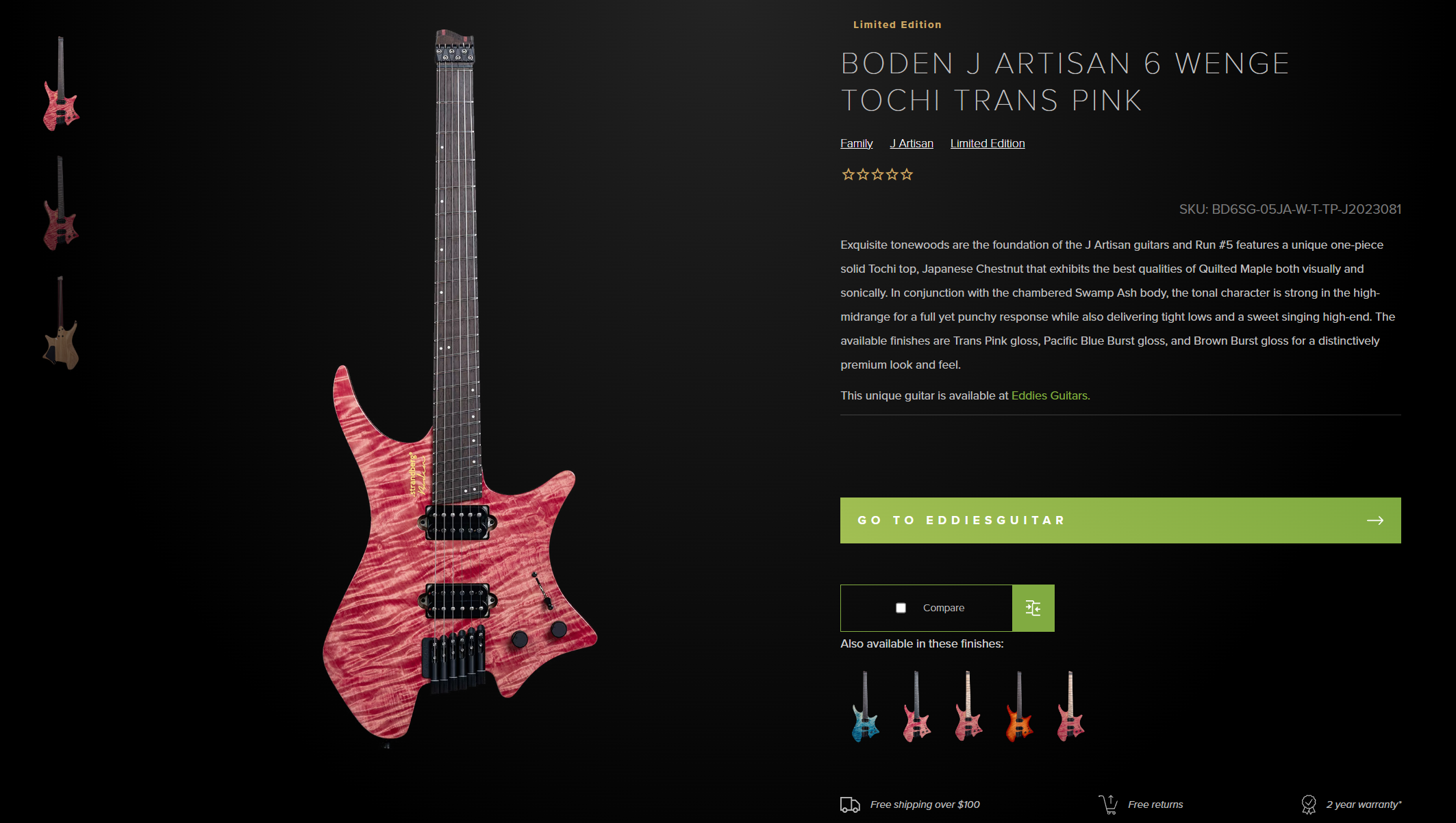

- Scraping data from the products page. This method involves extracting product information directly from the pages where they are presented. Using this approach, we can get basic information such as product name, price, and photo. However, we cannot get reviews, descriptions, or any other detailed information from the general product page.

- Use the sitemap to get a list of products and then scrape them. The second option is to access the site's sitemap, which lists all product pages. You can then iterate through these pages and collect information about each product. The advantage is that we can get all available information for each product; the disadvantage is that we need to request every product page, which takes more time and resources.

Both approaches have advantages and disadvantages, and their choice depends on the project's specific requirements. In this article, we will consider both of these methods, describe their features, and provide ready-made scripts that you can immediately use to scrape data from WooCommerce.

Scrape Product Data from a Search Page

Let's start with the most straightforward approach: analyze the shop page, identify the element selectors, and extract the data. To cover more than one scenario, we will fetch the page's HTML in two ways: first with the Requests and BeautifulSoup libraries, and then, for more difficult sites, with a web scraping API.

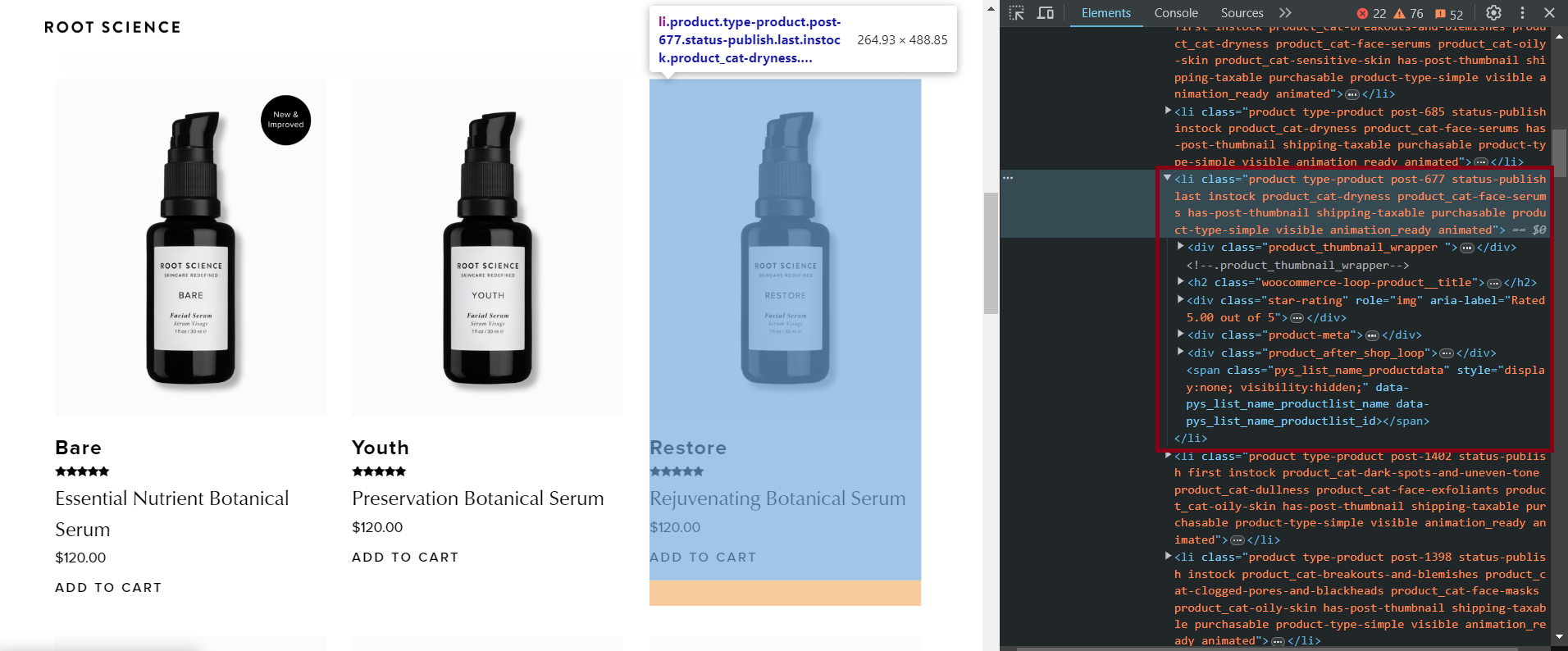

Identify Target Data

To begin, let's define the target elements using one site as an example. Open the shop page of a WooCommerce site, which usually has an address like "https://example.com/shop", and open DevTools (F12 or right-click and select Inspect).

Here are the CSS selectors for various elements that can be used to extract all available product information:

- Title: h2.woocommerce-loop-product__title

- Rating: .star-rating

- Description: .product-meta

- Price: .price .woocommerce-Price-amount

- Image Link: img

- Product Page Link: .woocommerce-LoopProduct-link

These selectors have been tested on multiple WooCommerce websites and found to work for most sites. However, some resources may not have certain elements, such as product descriptions.
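As a minimal sketch, the selectors above can be applied with BeautifulSoup's `select_one` method. The HTML fragment below is an invented sample that imitates a typical WooCommerce product card; it is an assumption for illustration, not markup copied from a real store, and the `extract_card` helper is a hypothetical name.

```python
from bs4 import BeautifulSoup

# Hypothetical product card imitating common WooCommerce loop markup.
SAMPLE_HTML = """
<ul class="products">
  <li class="product">
    <a class="woocommerce-LoopProduct-link" href="https://example.com/product/sample-plant/">
      <img src="https://example.com/img/sample-plant.jpg" alt="Sample Plant">
      <h2 class="woocommerce-loop-product__title">Sample Plant</h2>
      <div class="star-rating" aria-label="Rated 4.50 out of 5">Rated 4.50 out of 5</div>
      <span class="price"><span class="woocommerce-Price-amount">$19.99</span></span>
    </a>
  </li>
</ul>
"""

# CSS selectors taken from the list above.
SELECTORS = {
    "title": "h2.woocommerce-loop-product__title",
    "rating": ".star-rating",
    "description": ".product-meta",
    "price": ".price .woocommerce-Price-amount",
}


def extract_card(card):
    """Return the text of each selector match, or '-' when the element is missing."""
    result = {}
    for field, selector in SELECTORS.items():
        elem = card.select_one(selector)
        result[field] = elem.get_text(strip=True) if elem else "-"
    return result


soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
card = soup.select_one("li.product")
fields = extract_card(card)
print(fields)
```

The sample card has no `.product-meta` element, so the description falls back to "-", which mirrors the note above that some sites omit certain elements.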

Static Scraping

We will be using Python for scripting, but don't worry if you don't have any programming experience. We chose Python because it's simple and functional and allows us to easily share ready-made scripts using Google Colaboratory, which you can run and use immediately.

You can find and use a ready-made script here. To use it, simply replace the website URL with the one you want to scrape, then run each code block sequentially.

To run a script on your PC (not in Colaboratory), you'll need Python version 3.10 or higher. For instructions on setting up your environment and creating scrapers, see our introductory article on Python scraping.

Create a new *.py file and import the necessary libraries:

import requests
from bs4 import BeautifulSoup

Define a variable to store the WooCommerce website URL and append the "shop" section, where all products reside:

base_url = "https://www.shoprootscience.com/"
url = base_url + "shop"

Create a variable to store the products:

all_products = []

Send a GET request to the URL and retrieve the HTML code of the entire page:

response = requests.get(url)

Check if the response status code is 200, indicating a successful response:

if response.status_code == 200:

Create a BeautifulSoup object and parse the page for easy navigation and element retrieval:

    soup = BeautifulSoup(response.content, 'html.parser')

Identify the most suitable selector for finding product elements. Based on observations from multiple WooCommerce websites, products are likely stored in either "li" or "div" tags with the "product" class:

    products = soup.find_all(['li', 'div'], class_='product')

Iterate through all the found products one by one:

    for product in products:

Extract all available data:

        # Extract product title
        title_elem = product.find('h2', class_='woocommerce-loop-product__title')
        title_text = title_elem.text.strip() if title_elem else '-'
        # Extract product rating (.get avoids a KeyError if the attribute is absent)
        rating_elem = product.find('div', class_='star-rating')
        rating_text = rating_elem.get('aria-label', '-') if rating_elem else '-'
        # Extract product description
        description_elem = product.find('div', class_='product-meta')
        description_text = description_elem.text.strip() if description_elem else '-'
        # Extract product price
        price_elem = product.find('span', class_='price')
        price_text = price_elem.text.strip() if price_elem else '-'
        # Extract product image link
        image_elem = product.find('img')
        image_src = image_elem.get('src', '-') if image_elem else '-'
        # Extract product page link
        product_link_elem = product.find('a', class_='woocommerce-LoopProduct-link')
        product_link = product_link_elem.get('href', '-') if product_link_elem else '-'

In this code, we handle the possibility of missing product data. If an element or attribute is missing, we insert a "-" in place of its value. This approach keeps the output consistent and avoids errors when an element is absent.
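The repeated "value or placeholder" pattern can also be wrapped in small helpers. The sketch below is one possible way to do that; the helper names `text_or_default` and `attr_or_default` are hypothetical and not part of BeautifulSoup, and the HTML is an invented sample.

```python
from bs4 import BeautifulSoup


def text_or_default(elem, default="-"):
    """Return the stripped text of a tag, or a placeholder if the tag is missing."""
    return elem.get_text(strip=True) if elem is not None else default


def attr_or_default(elem, name, default="-"):
    """Return an attribute value, or a placeholder if the tag or attribute is missing."""
    if elem is None:
        return default
    return elem.get(name, default)


# Invented sample: a product card without a price and with an image that has no src.
html = (
    '<li class="product">'
    '<h2 class="woocommerce-loop-product__title"> Mug </h2>'
    '<img alt="Mug">'
    '</li>'
)
product = BeautifulSoup(html, "html.parser").find("li", class_="product")

title = text_or_default(product.find("h2", class_="woocommerce-loop-product__title"))
price = text_or_default(product.find("span", class_="price"))
image = attr_or_default(product.find("img"), "src")
print(title, price, image)
```

Keeping the fallback in one place makes it easier to change the placeholder later, for example to an empty string.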

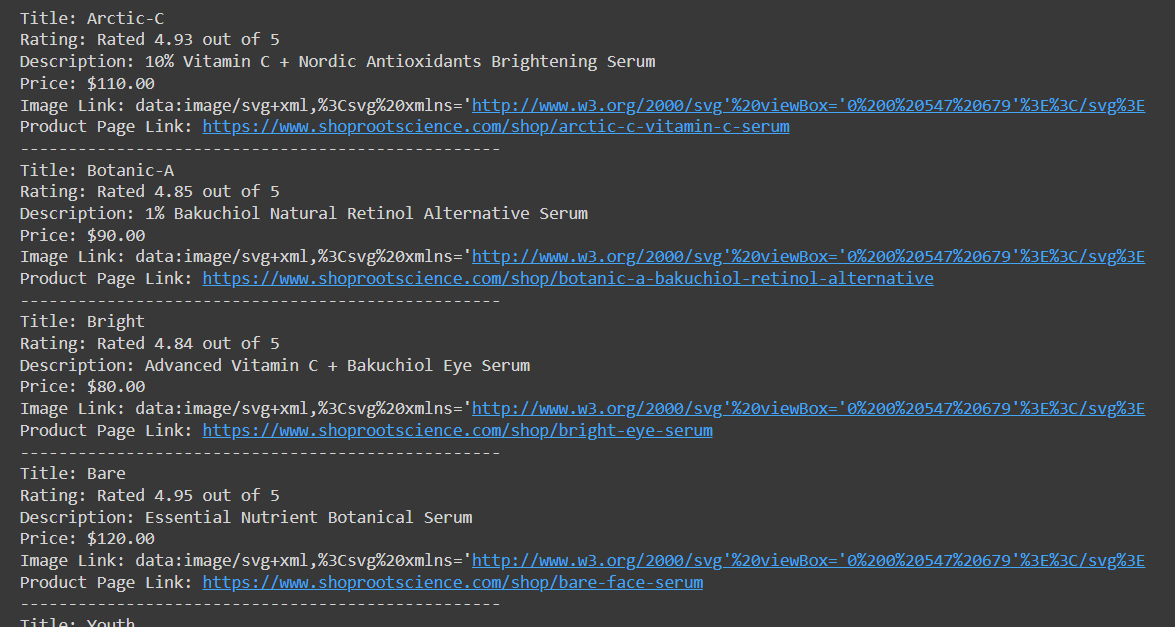

Print the list of products with all the collected data:

        print(f"Title: {title_text}")
        print(f"Rating: {rating_text}")
        print(f"Description: {description_text}")
        print(f"Price: {price_text}")
        print(f"Image Link: {image_src}")
        print(f"Product Page Link: {product_link}")
        print('-' * 50)

Save the product data to a variable for later storage:

        all_products.append([title_text, rating_text, description_text, price_text, image_src, product_link])

Handle errors if the status code is not 200:

else:
    print("Error fetching page:", response.status_code)

This code retrieves a list of products with all the data we managed to collect:

A bit later, we will talk about saving data in CSV, but for now, let's talk about the downsides of this data collection method. Although we can successfully extract data this way, some websites easily recognize such a script as a bot and block its access to the resource.

For example, you can see this if you try to scrape another WooCommerce website with protection like this one. If you try to collect data from it using the previously created script, you will get a status code "403 Forbidden". But don't worry, this problem can be easily solved in one of two ways:

- Use the Selenium, Pyppeteer, or Playwright library to scrape with a headless browser that simulates real user behavior. You will also need a proxy for greater anonymity and a CAPTCHA-solving service in case CAPTCHAs appear.

- Use the Web Scraping API. This third-party resource already uses proxies, bypasses captchas, and requests the target site. In this case, you simply get the HTML page of the site, and you don't need anything else.

We will use the second option since it is much simpler and, unlike headless browsers, it can also be used in Google Colaboratory.

Scraping using API

As mentioned, not all websites respond positively when using the Requests library. Therefore, let's consider using Hasdata's web scraping API. To use it, you just need to register on the website and log in to your personal account to find your API key.

You can also find the ready-made script in Google Colaboratory. To use it, you just need to insert your API key and the URL of the WooCommerce website you want to scrape.

Now, let's take a step-by-step look at how to create such a script. First, create a new file with the *.py extension and import the necessary libraries:

import requests
from bs4 import BeautifulSoup
import csv
import json

As you can see, we also import the json library here because the Web Scraping API returns data in JSON format.

Then, we'll create variables and specify the API key, the URL of the website to be scraped, and the API endpoint:

api_key = "YOUR-API-KEY"
base_url = "https://bloomscape.com/"
url = base_url + "shop"
base_api_url = "https://api.hasdata.com/scrape/web"
all_products = []

Now, let's define the request body and headers for the API. Here, you can specify the preferred proxy type and country, whether to capture a page screenshot, whether to extract emails, and much more. You can find a complete list of parameters on the documentation page.

payload = json.dumps({
    "url": url,
    "js_rendering": True,
    "proxy_type": "datacenter",
    "proxy_country": "US"
})
headers = {
    "x-api-key": api_key,
    "Content-Type": "application/json"
}

Make a request:

response = requests.request("POST", base_api_url, headers=headers, data=payload)

Then, the script is identical to the previous example, except that we get the HTML code of the page from the JSON response of the web scraping API:

if response.status_code == 200:

data = json.loads(response.text)

html_content = data["content"]

soup = BeautifulSoup(html_content, 'html.parser')

products = soup.find_all(['li', 'div'], class_='product')

for product in products:

title_elem = product.find('h2', class_='woocommerce-loop-product__title')

title_text = title_elem.text.strip() if title_elem else '-'

rating_elem = product.find('div', class_='star-rating')

rating_text = rating_elem['aria-label'] if rating_elem else '-'

description_elem = product.find('div', class_='product-meta')

description_text = description_elem.text.strip() if description_elem else '-'

price_elem = product.find(['span','div'], class_='price')

price_text = price_elem.text.strip() if price_elem else '-'

image_elem = product.find('img')

image_src = image_elem['src'] if image_elem else '-'

product_link_elem = product.find('a', class_='woocommerce-LoopProduct-link')

product_link = product_link_elem['href'] if product_link_elem else '-'

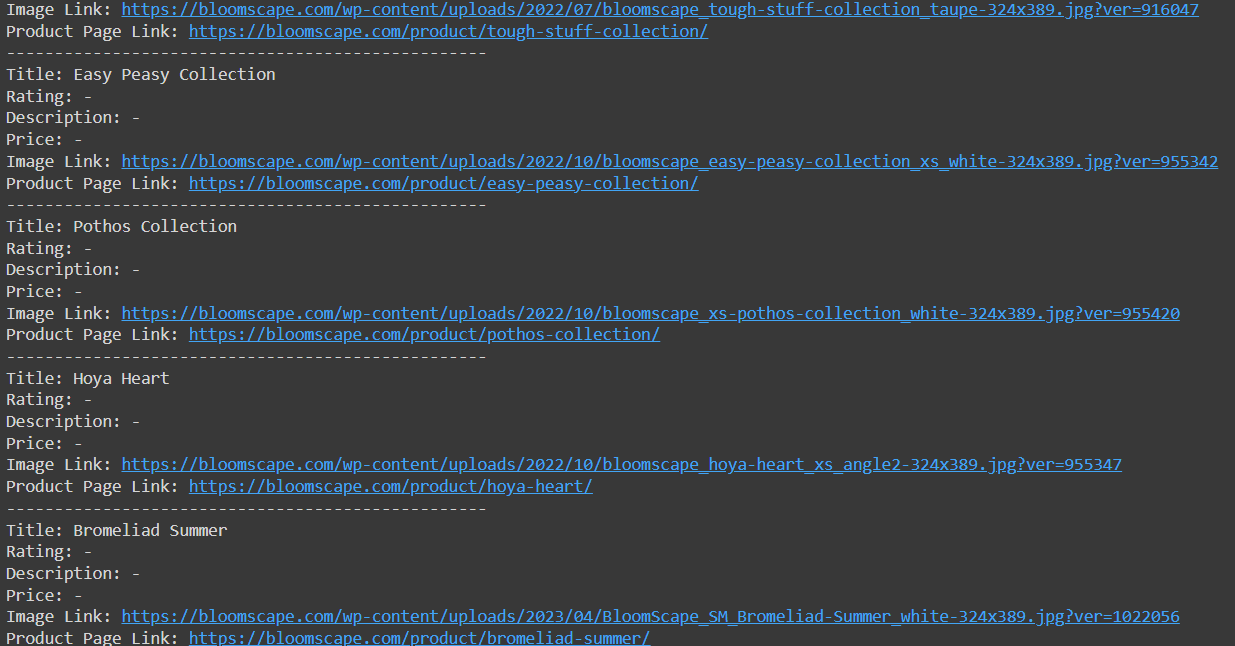

print(f"Title: {title_text}")

print(f"Rating: {rating_text}")

print(f"Description: {description_text}")

print(f"Price: {price_text}")

print(f"Image Link: {image_src}")

print(f"Product Page Link: {product_link}")

print('-' * 50)

all_products.append([title_text, rating_text, description_text, price_text, image_src, product_link])

else:

print("Error fetching data from the API:", response.status_code)This approach enables access to previously unavailable data:
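The JSON-handling step can be isolated into a small function, which makes it easy to check without calling the API. This is a sketch that assumes, as in the script above, that the rendered HTML arrives under the `content` key; the function name `extract_html` and the sample response are hypothetical.

```python
import json


def extract_html(response_text):
    """Return the HTML stored under the 'content' key, or None if it is absent."""
    try:
        data = json.loads(response_text)
    except json.JSONDecodeError:
        # The body was not valid JSON (for example, an HTML error page)
        return None
    if not isinstance(data, dict):
        return None
    return data.get("content")


# Invented response body that imitates the shape used in the script above.
sample_response = json.dumps(
    {"content": "<html><body><li class='product'>Mug</li></body></html>"}
)
html_content = extract_html(sample_response)
print(html_content)
print(extract_html("not json"))
```

Returning `None` instead of raising lets the calling code decide whether to skip the page or retry.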

Now all that's left is to save the data we've already collected.

Save Scraped Data

To save data to a CSV file in Python, we'll use the csv library and the data from the all_products variable. Open a file with the *.csv extension in write mode ('w'), then create a csvwriter object with the csv.writer() function. Write the column headers with the writerow() method and the data rows with the writerows() method, passing the all_products variable as the argument:

with open('products.csv', 'w', newline='', encoding='utf-8') as csvfile:
    csvwriter = csv.writer(csvfile)
    csvwriter.writerow(['Title', 'Rating', 'Description', 'Price', 'Image Link', 'Product Page Link'])
    csvwriter.writerows(all_products)

You can choose a different file name based on your needs. For example, if you have data from multiple websites, you can use the website name as the file name. You can also include the current date in the file name to keep track of the data's freshness.
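A file name that combines the site name and the date can be built as in the sketch below. The function name `build_filename` and the naming pattern are assumptions chosen for illustration.

```python
from datetime import date
from urllib.parse import urlparse


def build_filename(site_url, day=None, extension="csv"):
    """Build a name like 'example.com_2024-05-01.csv' from a full site URL and a date."""
    host = urlparse(site_url).netloc.removeprefix("www.")
    day = day or date.today()  # default to the current date
    return f"{host}_{day.isoformat()}.{extension}"


filename = build_filename("https://www.shoprootscience.com/", date(2024, 5, 1))
print(filename)
```

The result can be passed to `open()` in place of the fixed 'products.csv' name.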

Scraping Product Links and Data from Sitemap

Most websites built on WordPress with the WooCommerce plugin have a similar HTML page structure and sitemap. We can use this to quickly get links to all product pages, crawl them, and collect complete information about all products on the site. Although the structure may vary slightly depending on the theme, let's consider the most common scenario.

If you want a ready-made script, skip to the Google Colaboratory script page, set your parameters, and get all the necessary information.

Fetching the sitemap XML file

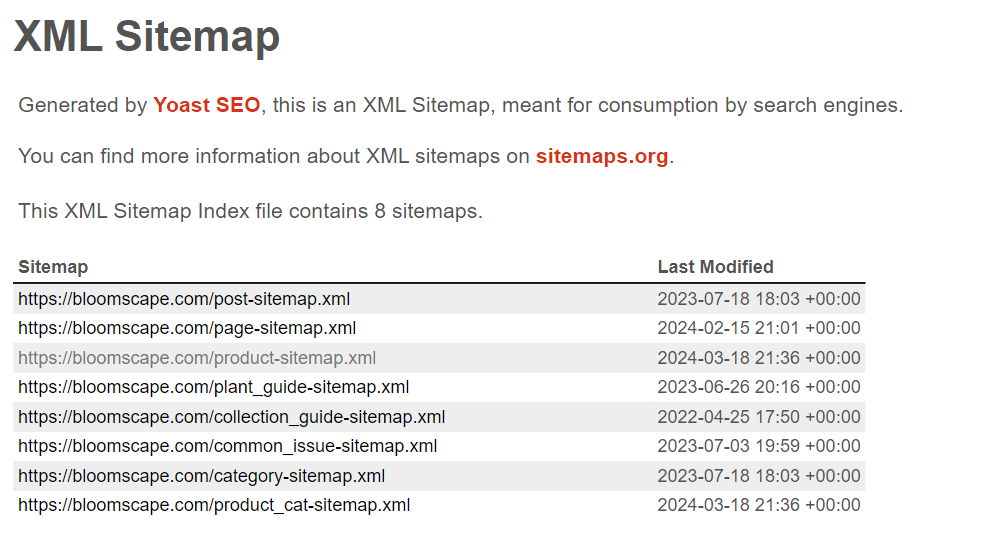

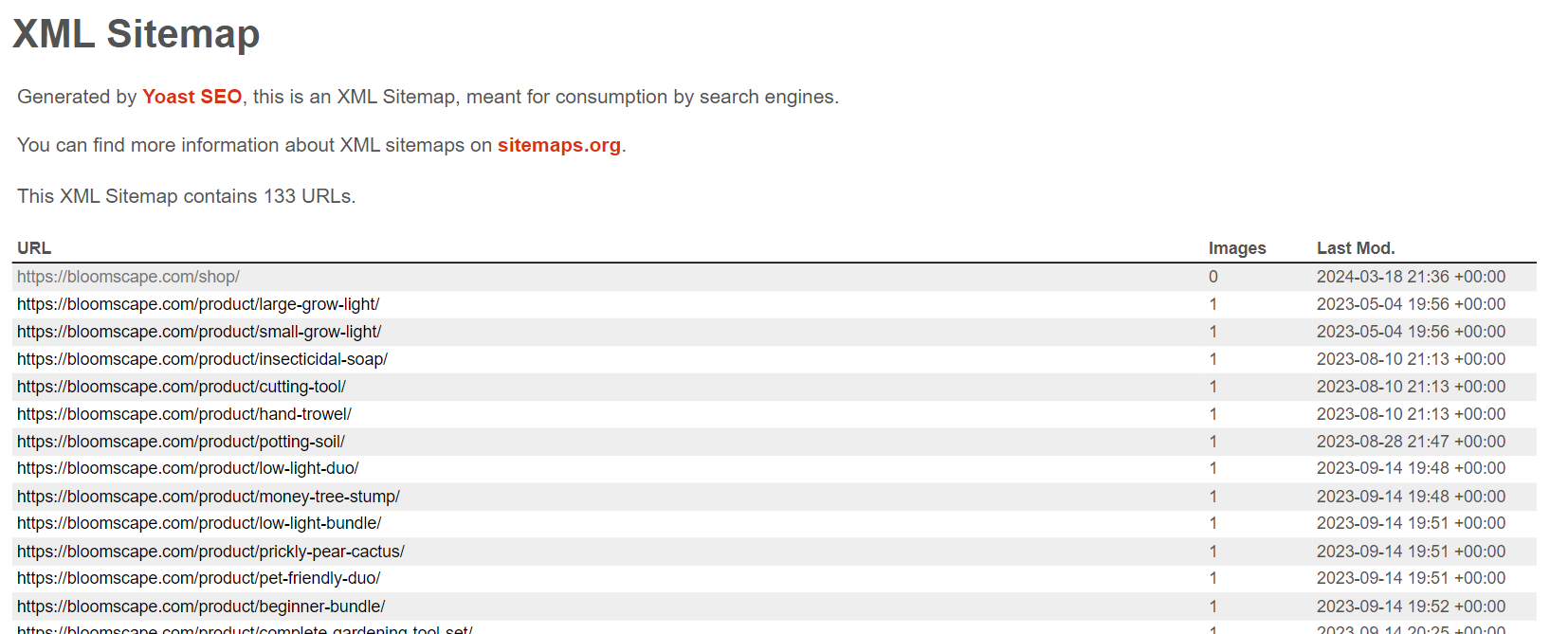

First, let's create a script to collect product page links from various WooCommerce websites. To do this, we'll navigate to the sitemap page and examine its structure. The majority of sitemaps will follow this format:

Some details may vary depending on the installed theme. However, the most important thing is finding a list of product links on the product-sitemap.xml page.
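For reference, a product sitemap usually follows the standard sitemap protocol: a `urlset` root with one `url` entry per page, each holding a `loc` element with the address. The sketch below parses an invented fragment of that shape. It uses Python's built-in `xml.etree.ElementTree` rather than lxml so that it runs without extra packages, and the sample URLs are placeholders.

```python
import xml.etree.ElementTree as ET

# Invented sitemap fragment in the standard sitemap protocol format.
SAMPLE_SITEMAP = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/shop/</loc>
    <lastmod>2024-05-01T10:00:00+00:00</lastmod>
  </url>
  <url>
    <loc>https://example.com/product/sample-plant/</loc>
    <lastmod>2024-04-28T08:30:00+00:00</lastmod>
  </url>
</urlset>
"""

# The sitemap elements live in this XML namespace, so lookups must include it.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

root = ET.fromstring(SAMPLE_SITEMAP)
links = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]
print(links)
```

Selecting only the `loc` elements skips entries such as `lastmod`, which are not page links.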

To obtain the content from this page, we will need the previously discussed requests library for making requests and the LXML library for processing the page's XML data. Let's create a new file and import the necessary libraries:

import requests
from lxml import etree

To fetch the contents of the page, we can use the requests library as before:

domain = "shoprootscience.com"
sitemap_url = f"https://{domain}/product-sitemap.xml"
response = requests.get(sitemap_url)

Having successfully retrieved the XML page content, we only need to parse it to extract the required links.

Parsing the XML to extract product URLs

Let's build on the previous example and verify whether we received a status code of 200:

if response.status_code == 200:

If so, let's store all found links in a variable:

    sitemap_content = response.content
    root = etree.fromstring(sitemap_content)
    urls = []
    for child in root:
        for sub_child in child:
            # Keep only loc entries; lastmod and image nodes are not page links
            if isinstance(sub_child.tag, str) and sub_child.tag.endswith('loc') and sub_child.text:
                urls.append(sub_child.text.strip())
    if urls:
        print(f"Sitemap found for {domain}:")
        for url in urls:
            if domain in url:
                print(url)
    else:
        print(f"No URLs found in the sitemap for {domain}.")

Otherwise, print the notification:

else:
    print(f"No sitemap found for {domain}.")

If you simply need to collect product links from WooCommerce websites, you can use our Google Colaboratory script.
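Note that the check `domain in url` is a substring test, so it would also accept an address on another host that merely mentions the domain in its query string. A stricter variant compares the host name instead. The sketch below is one way to do this; `belongs_to_domain` is a hypothetical helper, and the candidate URLs are invented.

```python
from urllib.parse import urlparse


def belongs_to_domain(url, domain):
    """Return True if the URL's host is the domain itself or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    domain = domain.lower()
    return host == domain or host.endswith("." + domain)


candidates = [
    "https://shoprootscience.com/product/sample/",
    "https://www.shoprootscience.com/shop/",
    "https://other.site/?ref=shoprootscience.com",
]
own_links = [u for u in candidates if belongs_to_domain(u, "shoprootscience.com")]
print(own_links)
```

The third address contains the domain as text but belongs to a different host, so it is filtered out.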

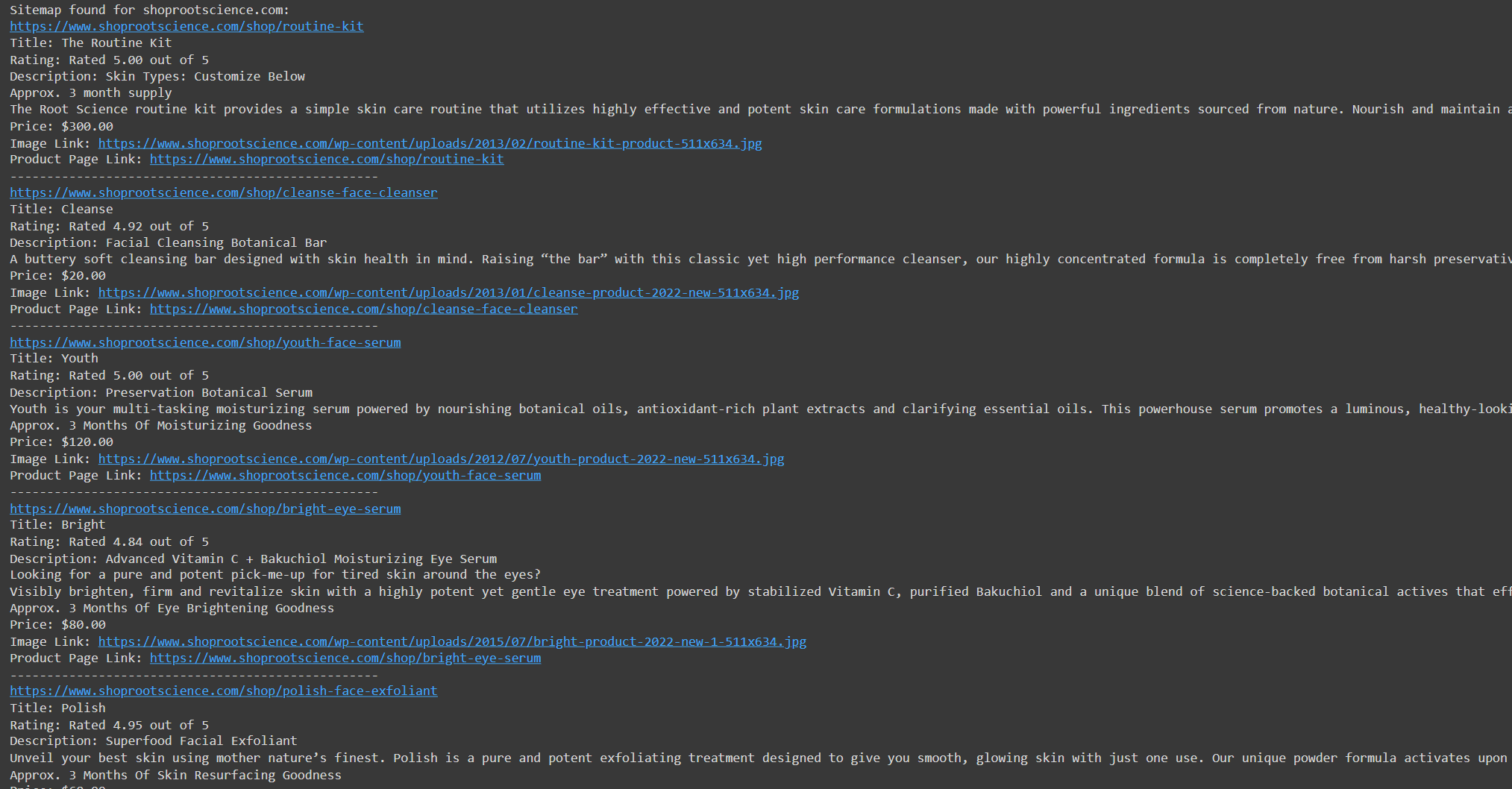

Scrape all Product Pages

Let's improve our script further by following the links instead of displaying them on the screen and collecting all the necessary data about each product.

Create a separate variable where we will store the product data:

all_products = []

Import the BeautifulSoup library to be able to parse the data on the page:

from bs4 import BeautifulSoup

Now, let's move on to the part where we iterate through the collected links and display them on the screen. You might have noticed that when scraping links from a sitemap, the first one usually points to the page with all products and ends with "shop". So, let's add a check that the current URL is not it. We strip any trailing slash first, because WordPress URLs often end with "/":

            if domain in url and not url.rstrip('/').endswith("shop"):

The rest is the same as what we have already done, only we use slightly different selectors that are suitable for the product page:

                response_product = requests.get(url)
                if response_product.status_code == 200:
                    soup = BeautifulSoup(response_product.content, 'html.parser')
                    title_elem = soup.find('h1', class_='product_title')
                    title_text = title_elem.text.strip() if title_elem else '-'
                    rating_elem = soup.find('div', class_='star-rating')
                    rating_text = rating_elem.get('aria-label', '-') if rating_elem else '-'
                    description_elem = soup.find('div', class_='woocommerce-product-details__short-description')
                    description_text = description_elem.text.strip() if description_elem else '-'
                    price_elem = soup.find(['div', 'span'], class_='woocommerce-Price-amount')
                    price_text = price_elem.text.strip() if price_elem else '-'
                    # Guard against pages without a product container
                    product = soup.find(['li', 'div'], class_='product')
                    image_elem = product.find('img') if product else None
                    image_src = image_elem.get('src', '-') if image_elem else '-'
                    product_link = url

Display the data on the screen and store it in a variable for further saving to a file:

                    print(f"Title: {title_text}")
                    print(f"Rating: {rating_text}")
                    print(f"Description: {description_text}")
                    print(f"Price: {price_text}")
                    print(f"Image Link: {image_src}")
                    print(f"Product Page Link: {product_link}")
                    print('-' * 50)
                    all_products.append([title_text, rating_text, description_text, price_text, image_src, product_link])

Let's execute the code and verify its functionality:
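The per-page logic can also be gathered into one function that takes HTML and returns a row, which keeps parsing separate from downloading. The sketch below reuses the selectors from this section on an invented product page; the function name `parse_product_page` and the sample markup are assumptions.

```python
from bs4 import BeautifulSoup


def parse_product_page(html, url):
    """Parse one product page and return a row in the same column order as all_products."""
    soup = BeautifulSoup(html, "html.parser")

    def text_of(tag_names, css_class):
        # Return the stripped text of the first match, or '-' if nothing matches
        elem = soup.find(tag_names, class_=css_class)
        return elem.get_text(strip=True) if elem else "-"

    rating_elem = soup.find("div", class_="star-rating")
    product = soup.find(["li", "div"], class_="product")
    image_elem = product.find("img") if product else None

    return [
        text_of("h1", "product_title"),
        rating_elem.get("aria-label", "-") if rating_elem else "-",
        text_of("div", "woocommerce-product-details__short-description"),
        text_of(["div", "span"], "woocommerce-Price-amount"),
        image_elem.get("src", "-") if image_elem else "-",
        url,
    ]


# Invented product page with no rating and no short description.
SAMPLE_PAGE = """
<div class="product">
  <img src="https://example.com/img/mug.jpg">
  <h1 class="product_title">Mug</h1>
  <span class="woocommerce-Price-amount">$12.00</span>
</div>
"""
row = parse_product_page(SAMPLE_PAGE, "https://example.com/product/mug/")
print(row)
```

In the crawler, the function would be called with `response_product.content` and the current `url`, and the returned row appended to `all_products`.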

Now, let's save the extracted data in various formats.

Save Data to CSV, JSON, or DB

The most common data storage formats are CSV and JSON. We will also demonstrate how to store data in a database. We will choose the most straightforward option and use the SQLite3 library to write data to a DB file. We will use another library, Pandas, to save data in JSON and CSV, as it will be much easier to implement.

Let's import the libraries:

import pandas as pd
import sqlite3

At the end of the script, we will implement saving data to a CSV file using the pandas library:

df = pd.DataFrame(all_products, columns=['Title', 'Rating', 'Description', 'Price', 'Image Link', 'Product Page Link'])
df.to_csv('products.csv', index=False)

We will use the same data frame and save the data in JSON:

df.to_json('products.json', orient='records')

Finally, we will create a connection to the database file, insert the data, and close the connection:

conn = sqlite3.connect('products.db')
df.to_sql('products', conn, if_exists='replace', index=False)
conn.close()

As you can see, pandas data frames make saving data quick. The library supports many more output formats than those covered here. You can learn more about this library and its capabilities in the official documentation.
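To confirm that rows were stored, the database can be queried with the standard `sqlite3` module. The sketch below uses an in-memory database and invented rows so that it does not touch `products.db`; the table layout is an assumption that mirrors the columns used above, and the rows are inserted with plain SQL rather than pandas.

```python
import sqlite3

# Invented rows in the same column order as all_products.
rows = [
    ["Mug", "-", "Ceramic mug", "$12.00",
     "https://example.com/img/mug.jpg", "https://example.com/product/mug/"],
    ["Plant", "Rated 4.50 out of 5", "-", "$19.99",
     "https://example.com/img/plant.jpg", "https://example.com/product/plant/"],
]

conn = sqlite3.connect(":memory:")  # use 'products.db' to inspect the real file
conn.execute(
    'CREATE TABLE products ("Title" TEXT, "Rating" TEXT, "Description" TEXT, '
    '"Price" TEXT, "Image Link" TEXT, "Product Page Link" TEXT)'
)
conn.executemany("INSERT INTO products VALUES (?, ?, ?, ?, ?, ?)", rows)
conn.commit()

# Read the data back to verify what was stored.
count = conn.execute("SELECT COUNT(*) FROM products").fetchone()[0]
titles = [r[0] for r in conn.execute('SELECT "Title" FROM products ORDER BY "Title"')]
print(count, titles)
conn.close()
```

Column names that contain spaces, such as "Image Link", need to be quoted in SQL queries.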

Test the Scraper on Different WooCommerce Sites

Let's test this script on three WooCommerce websites to ensure its versatility. We'll modify the script to iterate over multiple websites instead of just one and save the extracted data to a CSV file.
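The multi-site loop might look like the sketch below. To keep it runnable offline, the scraping step is passed in as a function; `scrape_sites`, `fake_scraper`, and the `broken.example` domain are hypothetical names for illustration, and only `shoprootscience.com` comes from this article.

```python
import csv
import io

HEADER = ["Site", "Title", "Price"]


def scrape_sites(domains, scrape_one):
    """Call scrape_one(domain) for each site and tag every row with its domain."""
    combined = []
    for domain in domains:
        try:
            rows = scrape_one(domain)
        except Exception as error:  # one failing site should not stop the others
            print(f"Skipping {domain}: {error}")
            continue
        combined.extend([domain, *row] for row in rows)
    return combined


def fake_scraper(domain):
    """Stand-in for a real scraper; returns invented rows."""
    if domain == "broken.example":
        raise RuntimeError("403 Forbidden")
    return [["Sample product", "$10.00"]]


all_rows = scrape_sites(["shoprootscience.com", "broken.example"], fake_scraper)

# An in-memory buffer is used here; swap it for
# open('products.csv', 'w', newline='', encoding='utf-8') to write a real file.
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(HEADER)
writer.writerows(all_rows)
print(buffer.getvalue())
```

Adding the domain as the first column keeps rows from different stores distinguishable in a single CSV file.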

You can find the script on Google Colaboratory. Here, we'll only show the results of its execution on different websites:

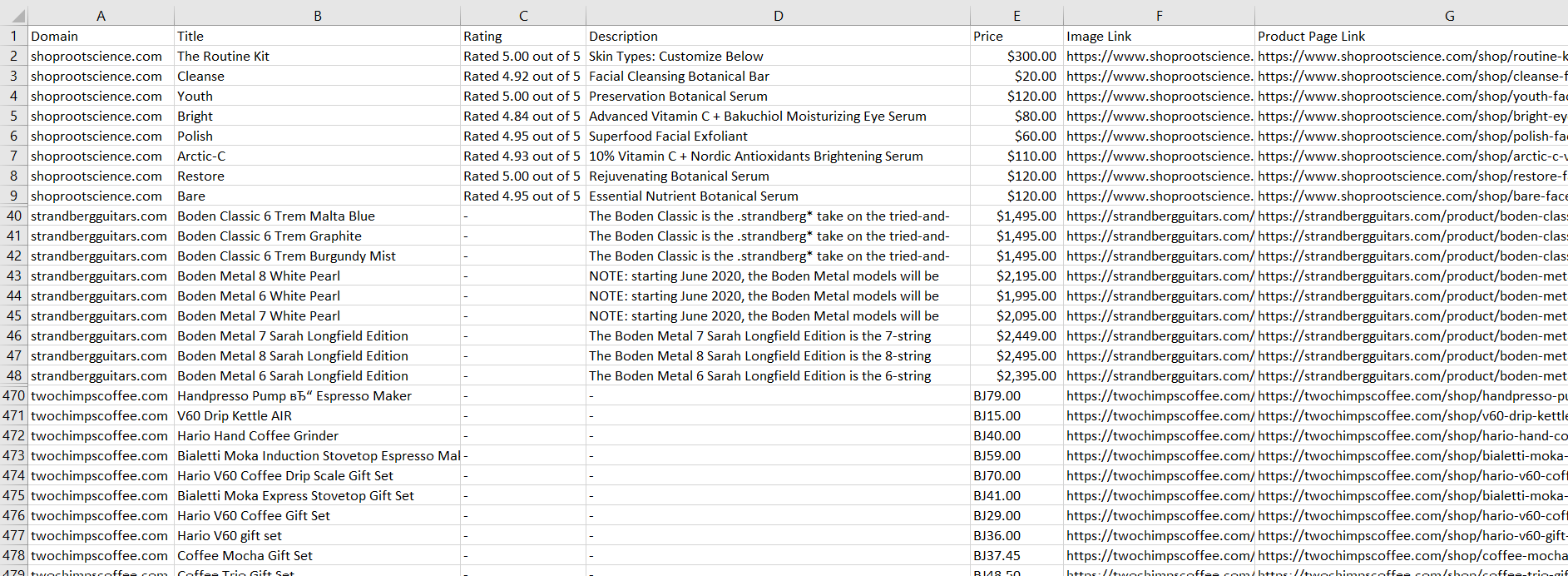

As you can see from the screenshot, we can easily extract the necessary data from all WooCommerce pages using a universal tool because of their similar structure.

Conclusion and Takeaways

This guide has provided an overview of constructing a WooCommerce scraper for accessing product data and other crucial elements of an online store. We've delved into two primary methodologies: scraping product pages directly and utilizing the SiteMap for product listings. The scripts provided are adaptable to most WooCommerce websites due to their similar structures.

When scraping product pages, we've demonstrated the utilization of Python and BeautifulSoup to extract essential product details such as titles, prices, descriptions, and images. Alternatively, by parsing the SiteMap using Python and lxml, we've illustrated how to extract product URLs and pertinent information.

While scraping presents challenges like bot protection or technical limitations, a web scraping API can offer a more efficient and reliable solution. You can utilize the script provided earlier, which doesn't require bypassing blocks or solving CAPTCHAs; it simply employs the Web Scraping API.

In conclusion, constructing a WooCommerce scraper empowers businesses to gain a competitive advantage. Because most WooCommerce themes share the same selectors, you rarely need to adjust them when scraping a different WooCommerce site. By harnessing the capabilities of scraping and automation, new avenues for growth and success can be unlocked.