Scraping data off the web is a powerful tool for people who need to quickly gather online information. With the right tools and some know-how, it's easy to extract large amounts of data from websites for use in other applications or projects.

Google Sheets is one of those powerful tools that enable users to easily extract data from websites without having any programming knowledge. It's great for beginners because you don't have to write code to get data in Excel (XSLX or CSV format) — all you need is some basic knowledge about spreadsheets and formulas.

Main formulas for Google Sheets web scraping

Web Scraping with Google Sheets has many advantages over traditional methods, such as not needing a lot of technical expertise or expensive software packages; being able to quickly pull up-to-date information with just a few clicks; having access to powerful query language features which make it easier than ever before; and most importantly, being able to do all this within an environment familiar to everyone.

Google Sheets offer a variety of ways to scrape data from websites. The easiest method is to use one of four formulas:

- IMPORTFEED – Retrieving RSS feed data;

- IMPORTHTML – Scraping lists and tables;

- IMPORTDATA – Import any structured data;

- IMPORTXML – Using XPath query to retrieve any kind of data from a web page.

For those with programming skills, there is another way to scrape data in Google Sheets - custom scripts written in GS, which resembles JavaScript coding language. This method provides more flexibility than simply relying on the existing formulas, as it allows users to customize their own code for particular needs.

Scrape WooCommerce products using Google Spreadsheet

WooCommerce is an open-source e-commerce platform that allows users to build online stores with WordPress. It is one of the most popular (with over 5 million active installations) and versatile solutions available, providing a wide range of features such as customizable product pages, multiple payment methods, inventory management tools, and more. WooCommerce also offers powerful marketing capabilities like coupons, discounts, and automated sales emails. That's why we chose WooCommerce as an example when writing our scraper.

Good news for those who want to scrape sites using WooCommerce - all sites using this plugin have the same product structure, tags, and scraping process. This means that once you figure out how to scrape one WooCommerce site, you'll be able to easily scrape data from almost any other store on that platform.

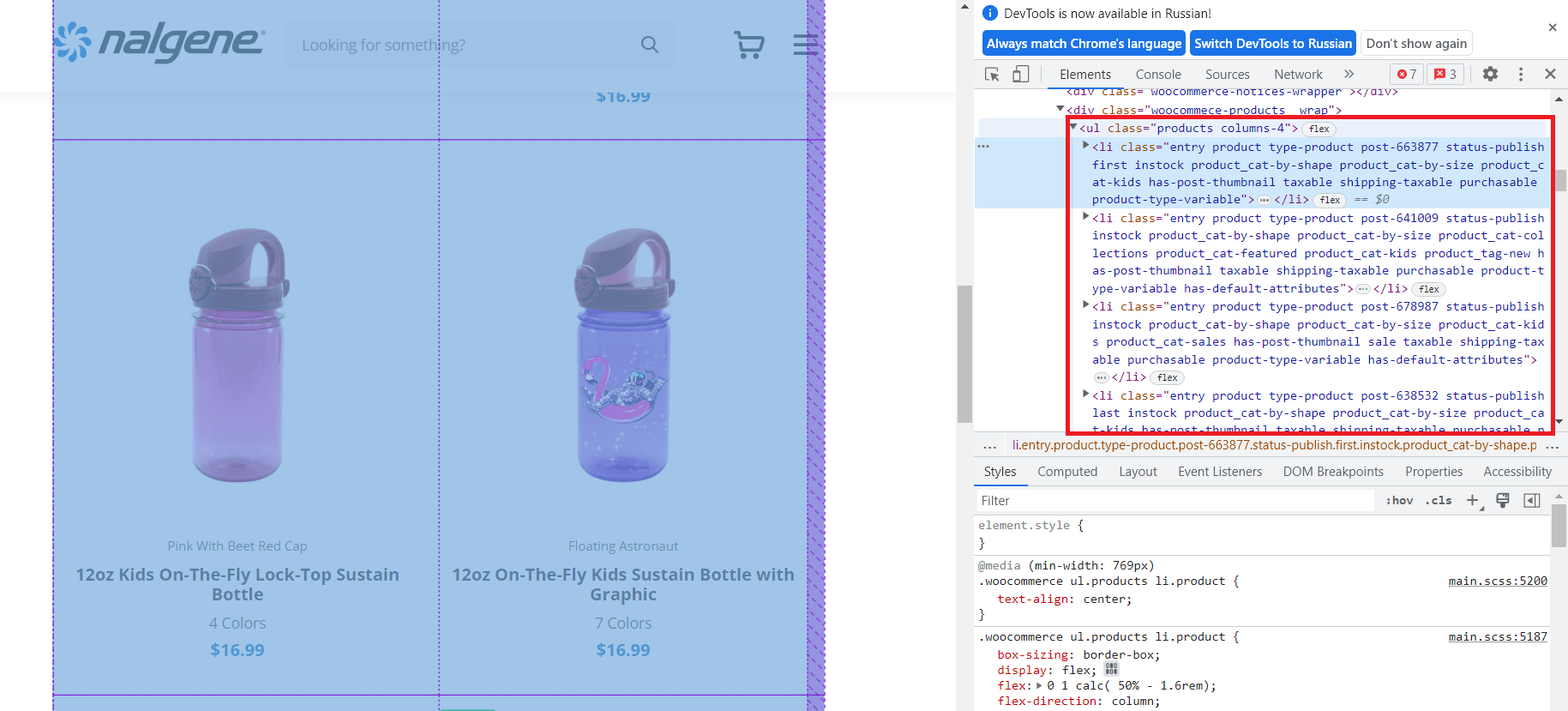

Analyzing the page with Woocommerce

Before you can start extracting data from a website and processing it in Google Sheets, you need to take a good look at the webpage you want to scrape and decide which data you want to collect.

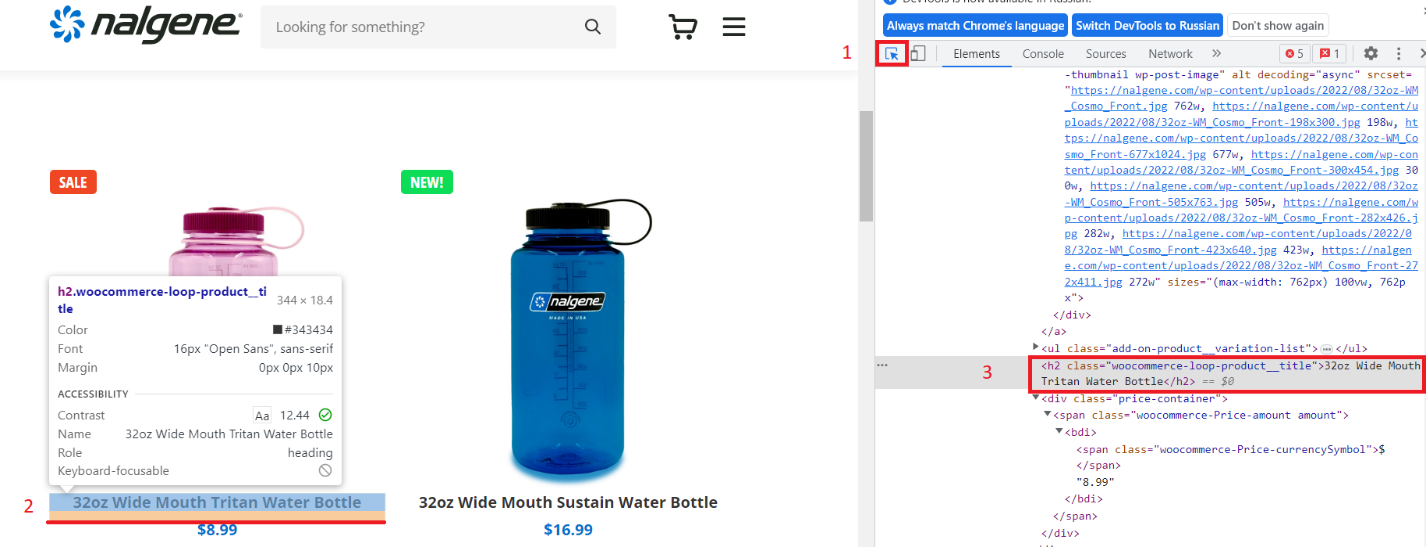

Let's look at a random store that is built on the WooCommerce platform. To view the HTML code for the page, you can use your Chrome Developer Tools. To do this, press F12 or right-click on an empty space on the page and select 'Inspect' from the menu.

As we can see, all products are in the <ul> tag with the class "products". Thus, all products are inside this tag in a structured form. We will use this to get the names, links, and prices.

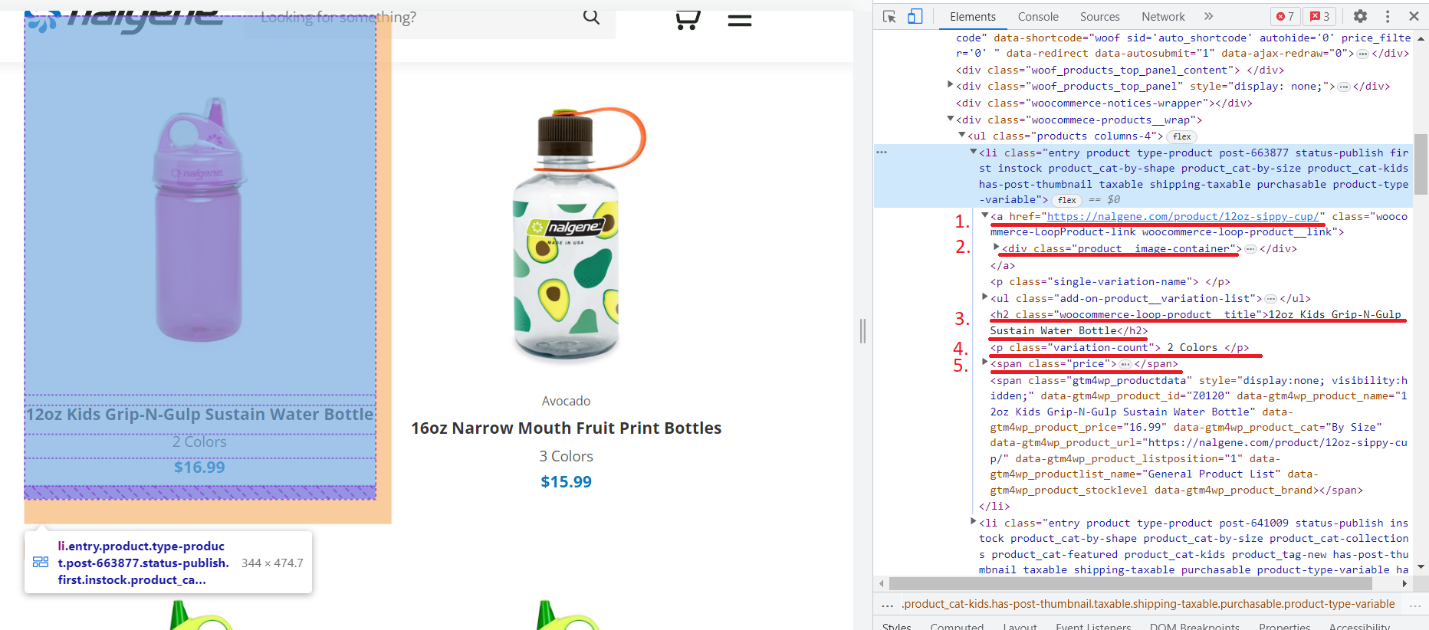

Let's take a closer look at one of the products:

We numbered the tags containing the data we want to scrape:

- The link to the product page.

- An image of the product.

- Product's name.

- Product variations.

- The price.

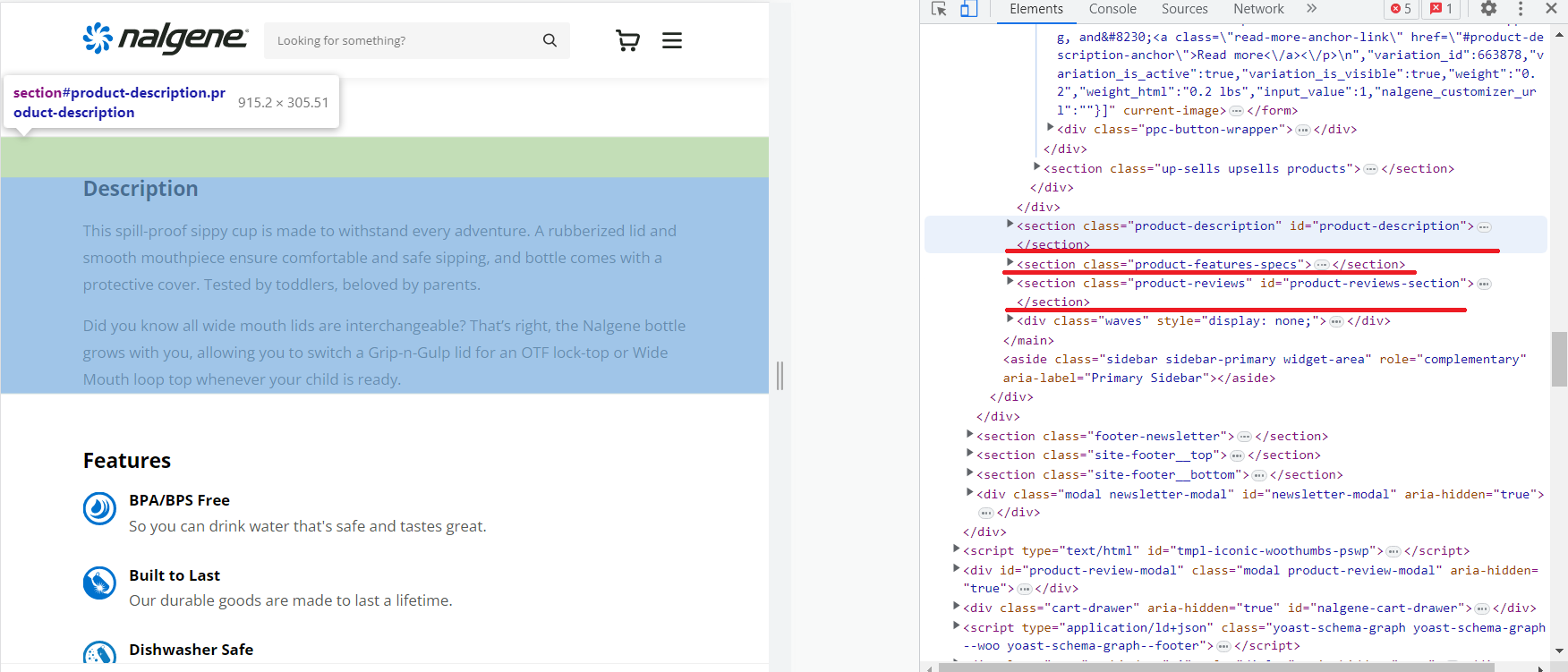

Now let's go to the product page and see what information we can get on it.

Here we could get a description of the product, its features, and reviews. Now that we have all the information we need, let's start adding data to Google Sheets.

How to use IMPORTXML for web scraping in Google Sheets

To scrape data from the example store, we'll use the IMPORTXML() function, which allows us to extract data from a web page using XPath queries. XPath is a query language used for navigating XML documents. It takes some basic knowledge of HTML or XML structure and syntax, as well as familiarity with XPath expressions. To make your scraping process easier, look out for our tutorial that will guide you through the basics of writing an effective XPath expression.

Although using IMPORTXML() formula requires some coding knowledge, it's not overly difficult. We'll need a link to the product page and the XPath of the items we need to scrape. In general, the formula looks like this:

=IMPORTXML(URL, XPath)Scraping the store category page with Google Sheets

Let's take the link to the product page of one of the sections. There are two options for getting XPath:

- Compose it yourself.

- Copy from the browser.

First, let's copy from the browser, and then let's see what a self-compiled XPath would look like.

Go to the category page in the browser and open DevTools. Select the item selection function and click on the item name.

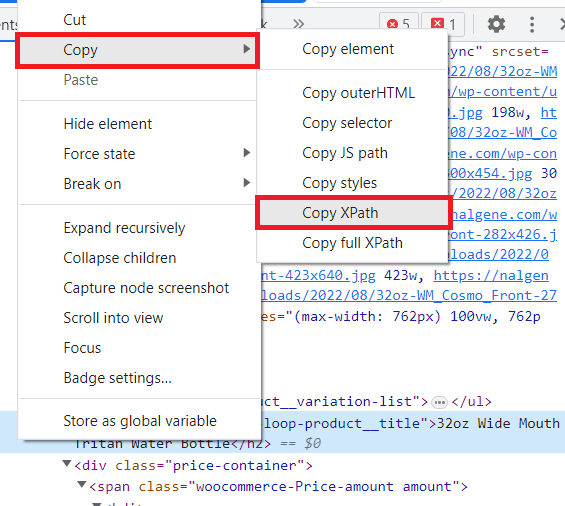

Then right-click on the tag with the name of the product and select "Copy" - "Copy XPath".

The resulting XPath looks like this:

/html/body/div[1]/div[2]/div/main/article/div/div[3]/div/div/div/div[1]/h2Let's go to Google Sheets and try to get the titles using the copied XPath. In cell A1 let's put a link to the product page, and in cell B1 let's enter the formula:

=IMPORTXML(A1,"/html/body/div[1]/div[2]/div/main/article/div/div[3]/div/div/div/div[1]/h2")As a result, we get only one title. To fix this, let's change the XPath:

=IMPORTXML(A1,"/html/body/div[1]/div[2]/div/main/article/div/div[3]/div/div/div/div/h2")Now we have all the items. However, this XPath is long and hard to understand. So, let's make a suitable XPath ourselves.

Let's take a close look at the tag with the title:

<h2 class="woocommerce-loop-product__title">32oz Wide Mouth Tritan Water Bottle</h2>As we can see, this is an h2 tag with the class "woocommerce-loop-product__title". In XPath it will look like this:

//h2[@class='woocommerce-loop-product__title']Replace the contents in the cell with a new formula:

=IMPORTXML(A1,"//h2[@class='woocommerce-loop-product__title']")As a result, we get the same list as before, but now XPath looks clearer and even more, it will be suitable for use not only on this site but also on other sites that use WooCommerce.

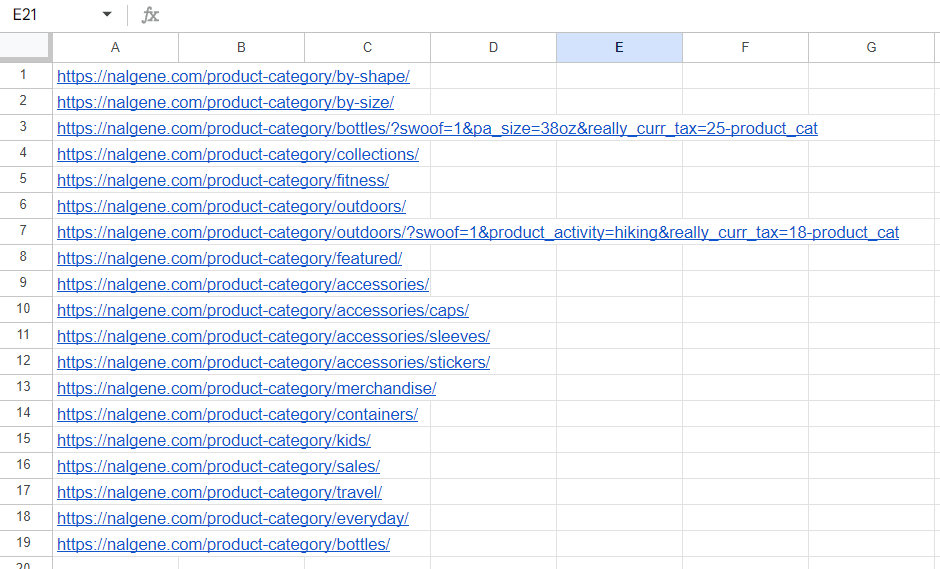

Scraping the category links with Google Sheets

However, it is difficult and time-consuming to manually bypass all the category pages and get their links. Here we used a link to a category we found ourselves. But let's gather all category links automatically with the IMPORTLXML() formula.

First, let's get all the links on the page:

=IMPORTXML("https://nalgene.com/#","//li/a/@href")However, some links are duplicated and can get in the way. Therefore, let's use the formula QUNIQUE() to leave only unique values.

=UNIQUE(IMPORTXML("https://nalgene.com/#","//li/a/@href"))Now use the REGEMATCH() in FILTER() function to leave only those links that contain "product-category":

=FILTER(UNIQUE(IMPORTXML("https://nalgene.com/#","//li/a/@href")),REGEXMATCH(UNIQUE(IMPORTXML("https://nalgene.com/#","//li/a/@href")),"product-category"))In this way, we get links to all categories:

Unfortunately, the IMPORTXML() function does not support arrays. So, we can't specify a column with links and get data for all products. Thus, we still have to substitute the links manually, but now we have links to all the categories.

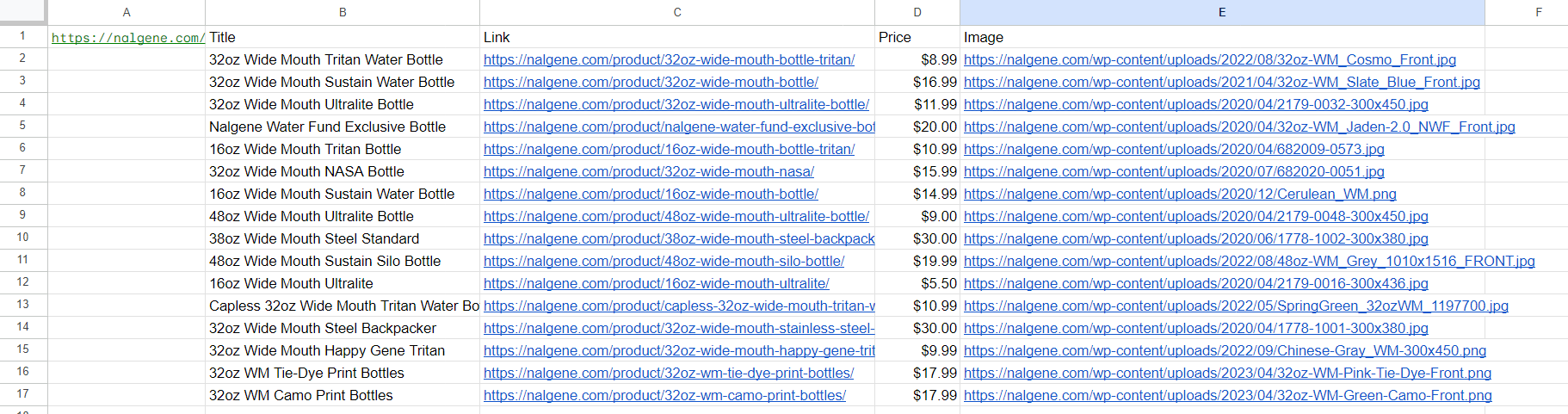

Scraping products data from the WooCommerce store

Let's continue to collect data on products from the category page. Sometimes it happens that we need to get not the text from a tag but the attribute of that tag. To do this, specify an attribute at the end of your XPath and retrieve its content using an '@' symbol. For example, let’s get links to items. First, look at the item link tag:

<div class="add-on-product">

<a href="https://nalgene.com/product/32oz-wide-mouth-bottle-tritan/">

…

</a>

</div>Write the appropriate XPath:

//div[@class='add-on-product']/a/@hrefAs you can see, everything is simple enough. Let's write down the XPath for the last two elements:

- Price: //div[@class='price-container']

- Image: //div[@class='add-on-product__image-container']/img/@src

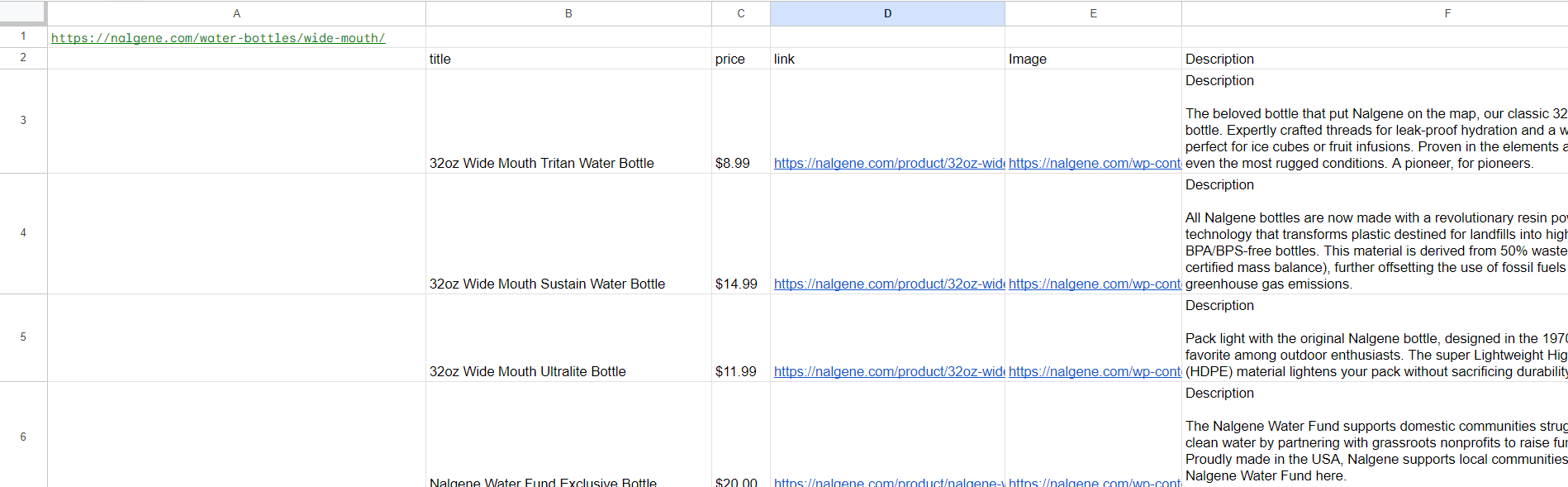

Let's put all formulas in the table (only XPath will be changed) and see the result:

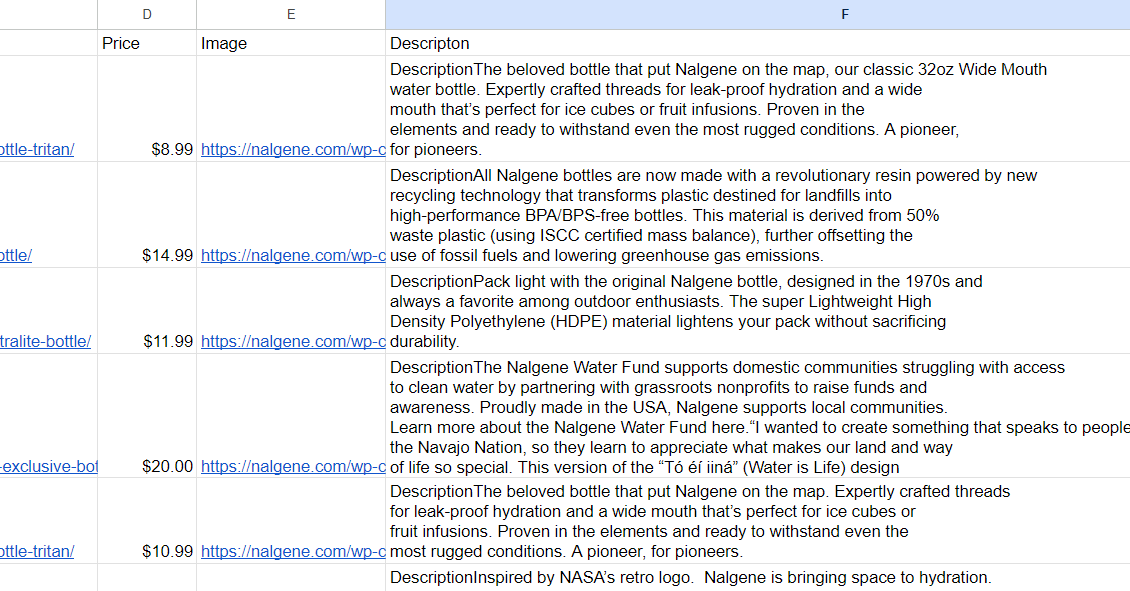

Now let's add a description of the products. We've already got links to product pages in column C, so let's use that. Put in column F the formula to get the description:

=IMPORTXML(C4,"//div[@class='product-description__wrap']")As a result, we get a complete table with data about products and their descriptions. If we leave it that way, the description will be divided into several columns, the way they are divided into paragraphs on the site. To put them together, we use the CONCATENATE() function:

=concatenate(IMPORTXML((C2),"//div[@class='product-description__wrap']"))The result will be a table with descriptions:

Unfortunately, the CONCATENATE() function has one major disadvantage: it joins parts without a separator, so it is not very convenient. Because the data is returned as an array instead of a string, we cannot use the TEXTJOIN() function, which allows us to specify delimiters.

Pros and cons of IMPORTXML() for web scraping with Google Sheets

IMPORTXML() is a powerful tool for quickly and easily collecting large amounts of information in Google Sheets. It helps to scrape data from websites directly into a spreadsheet. However, it's important to weigh up the pros and cons before deciding if this is the right option for you.

| Pros | Cons |

|---|---|

| Easy to use with no coding knowledge required | Limited number of queries (1000 queries per hour) |

| Can quickly import data into a spreadsheet | Limited ability to navigate dynamic websites |

| Works with the majority of websites | Can be slow when scraping large amounts of data |

| Free to use | Scrape only one URL in one formula |

The IMPORTXML formula is perfect for web scraping projects where you need specific pieces of information to analyze trends or process other tasks. So, it is the best choice for those who don’t good at programming. However, if you are familiar with programming and need to perform more complex tasks, then it's time to consider Google App Scripts.

Web scraping with Google Sheets Apps Script

Google Sheets have a powerful tool for making scripts - App Scripts. With this tool, you can quickly and easily automate many tasks that would otherwise take hours to complete. And if you want to access more complex data than what is available in the spreadsheet interface, then you can use Web Scraping APIs.

Google App Scripts uses JavaScript as its programming language. With this tool, users have access to functions such as creating custom menus or buttons; accessing third-party services; manipulating documents stored in Drive; sending emails right from their sheets, or even accessing external resources.

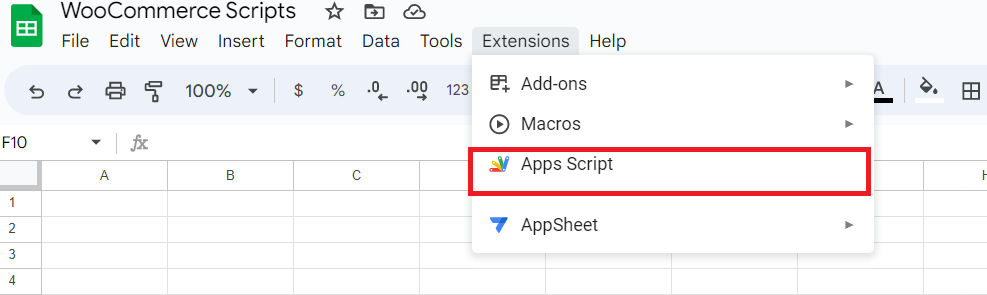

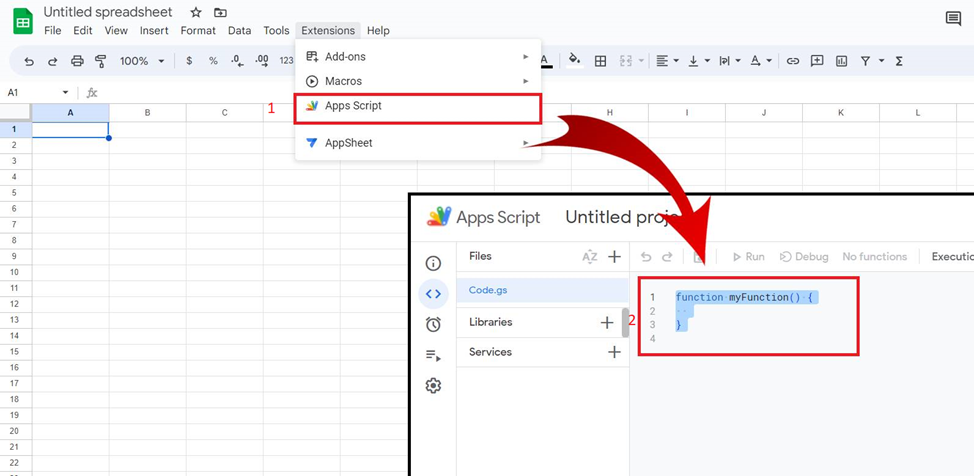

To open the App Scripts area, go to Extensions - App Scripts.

As an example, we will use Scrape-It.Cloud web scraping API. After signing up, you'll get 1000 free credits to help get you started.

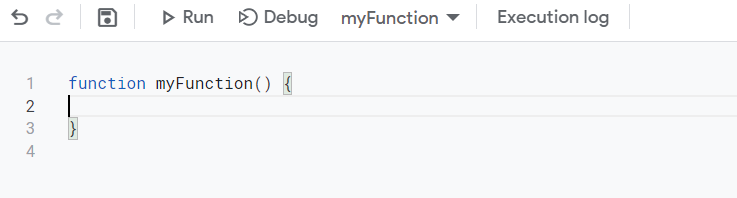

To start, let's create a simple script that will get all the code of the page. After launching App Script, we get to the scripting window.

We can name the function differently to make it more convenient, or we can leave the same name. This affects how we refer to our script on the sheet. The function name will be the name of the formula.

If you don't want to learn how to create your own script and want to use the finished result, skip to the final code and an explanation of how it works.

To execute a query, we need to set its parameters. You can find the API key in your account in the dashboard section. First, let's set the headers:

var headers = {'contentType':'application/json','x-api-key': 'YOUR-API-KEY};Next, let's specify the body of the request. Since we need the code of the whole page, we just specify the link to the site from which we need to collect data:

var data ={'url': 'https://nalgene.com/water-bottles/wide-mouth/'};Finally, let's gather the query and execute it, and display the result:

var options = {

'method': 'post',

'headers': headers,

'payload': data

};

var response = UrlFetchApp.fetch('https://api.scrape-it.cloud/scrape', options).getContentText();

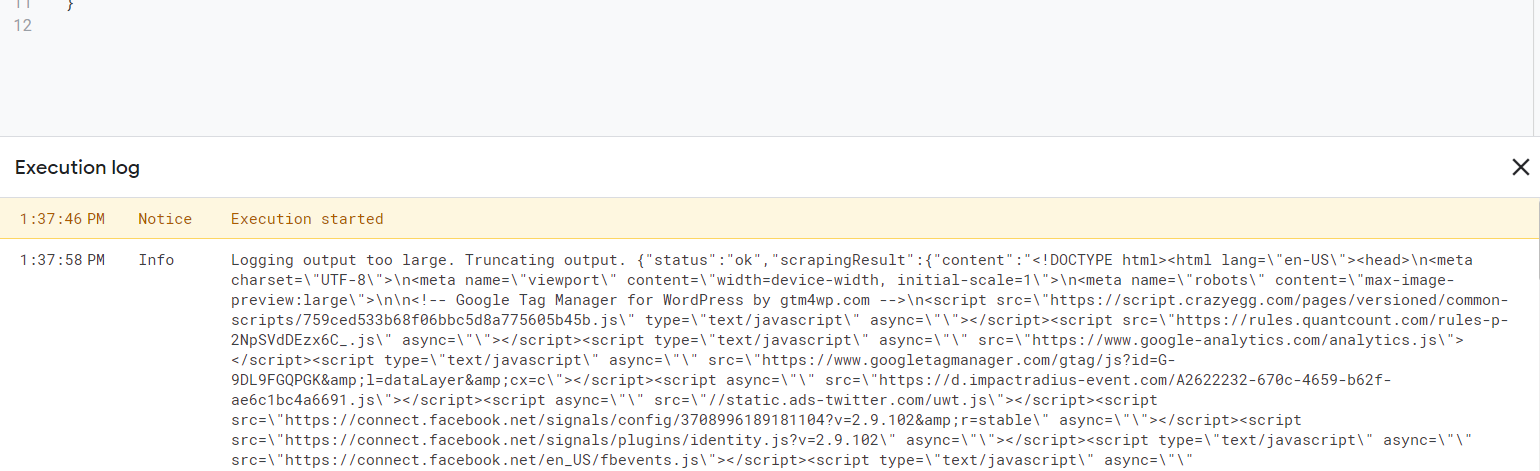

Logger.log(response);As a result, we get the following:

You can configure the data extraction settings in your account in the Web Scraping API section. There you can also visually configure the query using special functions.

For example, we can use the execution rules function to extract only the product names and prices:

"extract_rules": {

"title": "h2.woocommerce-loop-product__title",

"price": "div.price-container"

},Adding new rules is specified in the format "Name": "CSS selector". It's not very convenient to write these parameters hard in the script - to make the formula dynamic, it would be convenient to pass these parameters on the sheet. So, let's add these data to the input. Input parameters are specified in parentheses next to the function name.

function myFunction(...rules) {…}This means that we get several parameters that need to be put into the rules variable. For convenience, let's rename the function and add another parameter to the input - a link to the page from which the data will be collected.

function scrape_it(url, ...rules) {…}Now let's declare the variables and select the headers for the future table from the extraction rules we got:

var extract_rules = {};

var headers = true;

// Check if the last argument is a boolean

if (typeof rules[rules.length - 1] === "boolean") {

headers = rules.pop();

}Let's write the rules in the extract_rules variable in the form we need:

for (var i = 0; i < rules.length; i++) {

var rule = rules[i].split(":");

extract_rules[rule[0]] = rule[1].trim();

}Let's change the body of the query by putting in variables instead of values and then execute it.

var data = JSON.stringify({

"extract_rules": extract_rules,

"wait": 0,

"screenshot": false,

"block_resources": true,

"url": url

});

var options = {

"method": "post",

"headers": {

"x-api-key": "YOUR-API-KEY",

"Content-Type": "application/json"

},

"payload": data

};

var response = UrlFetchApp.fetch("https://api.scrape-it.cloud/scrape", options);

This query returns data as JSON with attributes that contain the required data and whose name is identical to the one we set in the extraction rules. To get this data, let's parse the JSON response:

var json = JSON.parse(response.getContentText());Let's set the attribute variable that contains the result of the extraction rules:

var result = json["scrapingResult"]["extractedData"];Get all the keys and the length of the largest array to know its dimensionality:

// Get the keys from extract_rules

var keys = Object.keys(extract_rules);

// Get the maximum length of any array in extractedData

var maxLength = 0;

for (var i = 0; i < keys.length; i++) {

var length = Array.isArray(result[keys[i]]) ? result[keys[i]].length : 1;

if (length > maxLength) {

maxLength = length;

}

}

Create a variable output, in which we put the first row of data, which will be the column names of the future table. To do this, check the headers and keys so that we can enter only those columns for which the data were found.

// Create an empty output array with the first row being the keys (if headers is true)

var output = headers ? [keys] : [];Let's go through all the elements from the extraction rules and add them to the output variable line by line.

// Loop over each item in the extractedData arrays and push them to the output array

for (var i = 0; i < maxLength; i++) {

var row = [];

for (var j = 0; j < keys.length; j++) {

var value = "";

if (Array.isArray(result[keys[j]]) && result[keys[j]][i]) {

value = result[keys[j]][i];

} else if (typeof result[keys[j]] === "string") {

value = result[keys[j]];

}

row.push(value.trim());

}

output.push(row);

}

Finally, let's put the result back on the sheet:

return output;Now you can save the resulting script and call it directly from the sheet, specifying the necessary parameters.

Final code for web scraping with Google App Script

Before we dive into how to get started with the pre-made App Script, let's look at what needs to be done for those who skipped the section on creating a script but want to use it.

Go to Google Sheets and create a new Spreadsheet.

Go to Extensions and open App Scripts. In the window that appears, select all the text and replace it with our script code:

Script code:

function scrape_it(url, ...rules) {

var extract_rules = {};

var headers = true;

// Check if the last argument is a boolean

if (typeof rules[rules.length - 1] === "boolean") {

headers = rules.pop();

}

for (var i = 0; i < rules.length; i++) {

var rule = rules[i].split(":");

extract_rules[rule[0]] = rule[1].trim();

}

var data = JSON.stringify({

"extract_rules": extract_rules,

"wait": 0,

"screenshot": false,

"block_resources": true,

"url": url

});

var options = {

"method": "post",

"headers": {

"x-api-key": "YOUR-API-KEY",

"Content-Type": "application/json"

},

"payload": data

};

var response = UrlFetchApp.fetch("https://api.scrape-it.cloud/scrape", options);

var json = JSON.parse(response.getContentText());

var result = json["scrapingResult"]["extractedData"];

// Get the keys from extract_rules

var keys = Object.keys(extract_rules);

// Get the maximum length of any array in extractedData

var maxLength = 0;

for (var i = 0; i < keys.length; i++) {

var length = Array.isArray(result[keys[i]]) ? result[keys[i]].length : 1;

if (length > maxLength) {

maxLength = length;

}

}

// Create an empty output array with the first row being the keys (if headers is true)

var output = headers ? [keys] : [];

// Loop over each item in the extractedData arrays and push them to the output array

for (var i = 0; i < maxLength; i++) {

var row = [];

for (var j = 0; j < keys.length; j++) {

var value = "";

if (Array.isArray(result[keys[j]]) && result[keys[j]][i]) {

value = result[keys[j]][i];

} else if (typeof result[keys[j]] === "string") {

value = result[keys[j]];

}

row.push(value.trim());

}

output.push(row);

}

return output;

}

Put your API key, which you can find in your account in the dashboard section at Scrape-It.Cloud instead of "YOUR-API-KEY" and save it.

Now let's look at the result of the work. Let's go back to the sheet, put the link to the page in cell A1, and in the next cell specify the formula. In general, the formula looks like this:

=scrape_it(URL, element1, [element2], [false])Where:

- The URL is a link to the page to be scraped.

- Elements (you can specify more than one). Elements are specified in the format "Title: CSS_Selector @attribute". Instead of Title specify the name of the element, then via a colon specify the CSS selector of the element and, if necessary, via a space and @ specify the attribute, which value is necessary. If only element text is needed, no attribute is specified. For example:

- "title: h2" – to get product name.

- "link: a @href" – to get product link.

- [false]. This is an optional parameter that defaults to true. It indicates whether or not the headers for the columns should be saved. If you don't specify anything, the header will be specified in the first cell and will be taken from the Elements parameter. If you specify false, column headers will not be specified and saved.

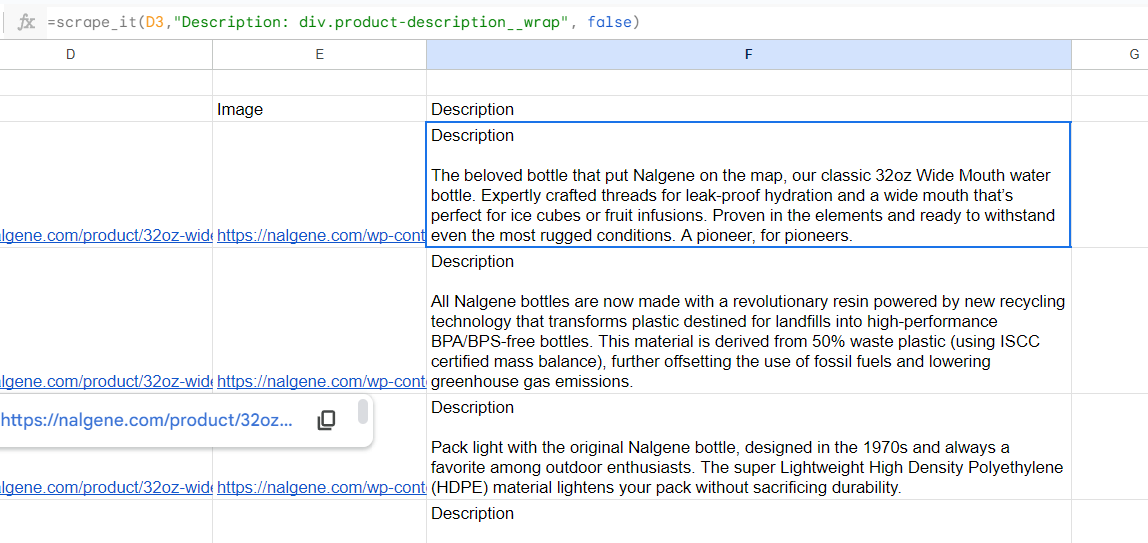

Now, using all the skills we learned, let's get a table identical to the one we got with IMPORTXML but using the scrape_it formula:

=scrape_it(A1,"title: h2.woocommerce-loop-product__title", "price: div.price-container","link:div.add-on-product>a @href", "Image:div.add-on-product__image-container>img @src")As a result, we got the following table:

However, we have to get the description from every single product page, so we don't need to specify headers. In this case, we need to specify the third additional parameter false, at the end of the formula:

Scraping with Google Sheets and App Scripts can be a powerful tool for data collection and analysis. With the help of Google's scripting language, you can easily access external websites to retrieve information, use web scraping API for automation processes or even manipulate results in whatever way suits your purpose.

It comes in handy where IMPORTXML cannot be used. For example, you can't use IMPORTXML for sites with dynamic rendering. However, using Google App Scripts together with the Web Scraping API can solve this problem. The considered script is suitable for scraping any site, no matter what platform it is built on, whether it has dynamic rendering or not.

Conclusion and takeaways

Web scraping data with Google Sheets is a great way to quickly collect and organize data from websites. It's an easy-to-use tool that can be used by anyone, even those who don't have any coding knowledge or experience. With the right steps, you can create powerful web scrapers in just a few minutes. From there, it's up to you how you want to use your newly collected information — whether it’s for marketing research or creating custom reports of your own.

With scraping tools like Google Sheets, everyone has access to valuable website data without needing extra resources or expensive software programs. Additionally, its built-in features make cleaning up messy datasets simple and quick - saving time on manual tasks so you can focus on what matters most: leveraging the insights derived from your scraped data.