Web scraping is an automated technique for extracting data from websites. It has become increasingly important as the amount of data published online keeps growing. Common uses include market and competitive research, price monitoring, and data analysis.

R is a programming language for data analysis with a rich set of packages designed specifically for scraping websites. With them, you can quickly collect large amounts of data from web sources instead of copying it by hand.

In this article, we'll look at how web scraping works in R, from installing the necessary packages to structuring and cleaning the scraped data. We'll start with basic techniques such as extracting static content, then move on to more advanced methods such as handling dynamic content and using web scraping APIs from R.

Whether you're a researcher, analyst, developer, or data scientist, this guide covers what you need to start extracting data from websites automatically.

Getting Started with Web Scraping in R

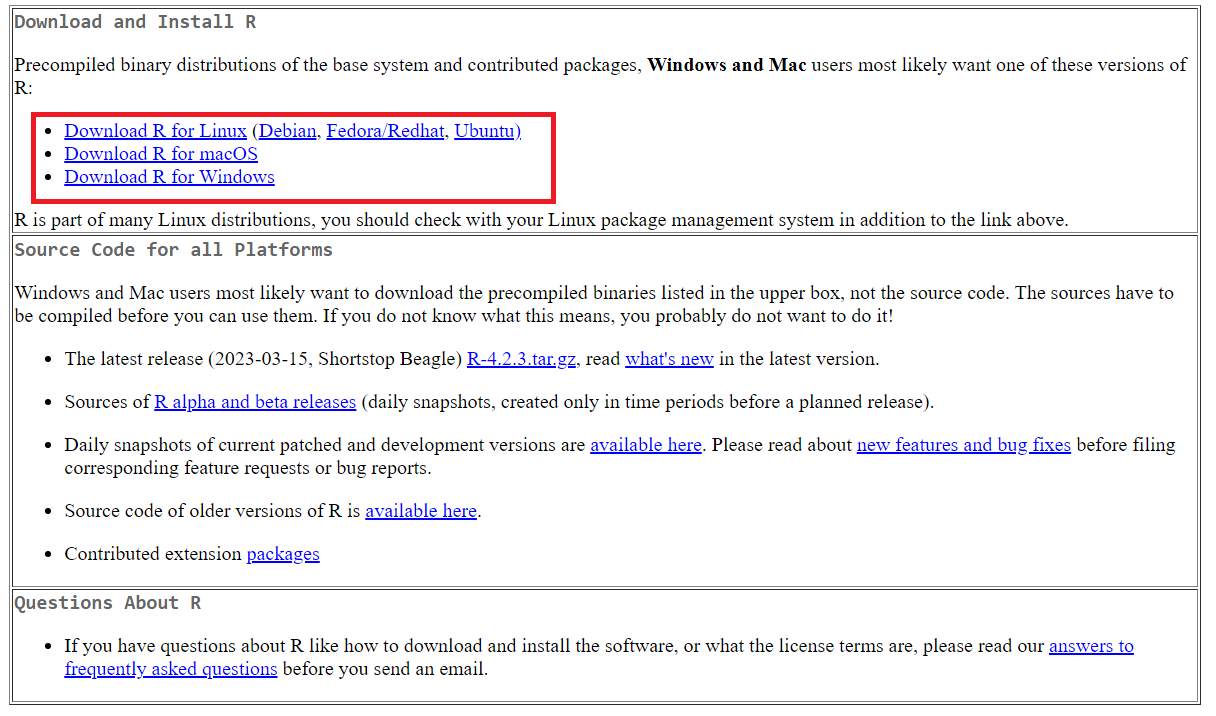

To get started, we need to set up the environment and tools used in this tutorial. First, download and install R: go to the official R website and download the installer for your operating system (Windows, Linux, or macOS).

Next, install a development environment. There are several options, such as Visual Studio Code, PyCharm, or any other editor that supports R. We recommend RStudio, which was designed specifically for R, is full-featured, and has many useful built-in tools. We'll use RStudio throughout this tutorial.

To install it, go to the official RStudio website and download the installer; installers for other operating systems are listed at the bottom of the download page. Install the programs in this order: first R, then RStudio.

Since packages can be installed from within RStudio, we'll install each one when we first need it. For now, let's explore the site we'll collect data from.

Researching the structure of the site

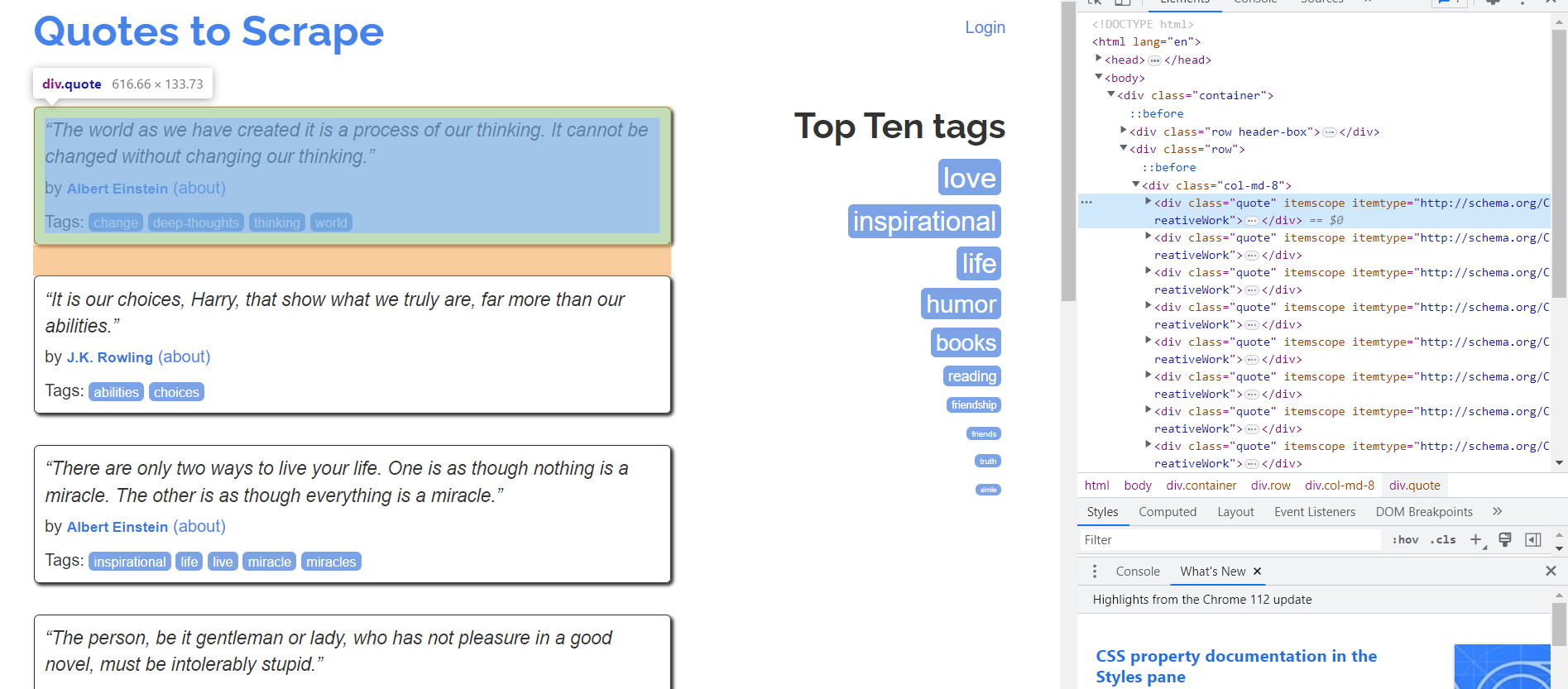

Before writing a scraper, in any programming language, you need to study the site's structure and find out where the data you need is located and in what form.

Let's use quotes.toscrape.com as an example and write the script step by step. First, open the site and view its HTML code in DevTools: press F12, or right-click an empty area of the page and select Inspect.

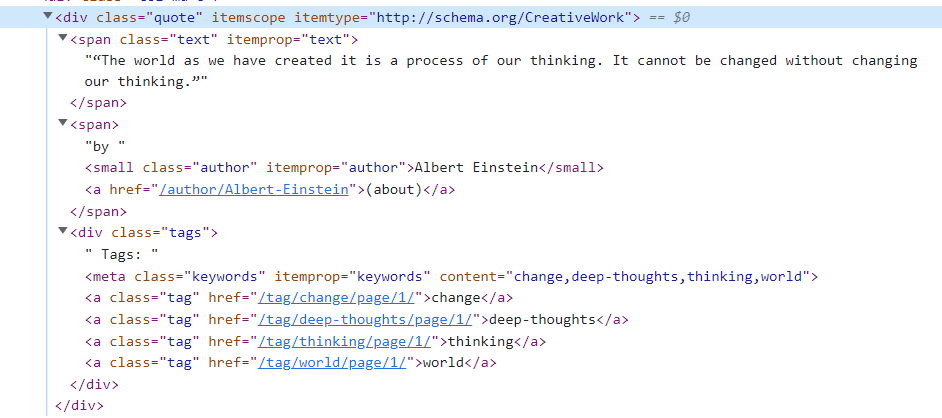

As we can see, each quote is in a <div> tag with the "quote" class. If you're unfamiliar with tags and selectors, we recommend reading our article on CSS selectors.

Let's explore the structure in more detail:

Inside each block, the quote text is in a <span> tag with the class "text", the author is in a <small> tag with the class "author", and the quote's tags are in <a> tags with the class "tag". With this structure mapped out, you can start writing the scraper in R.
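To try these selectors before touching the live site, you can run them against a hand-written snippet that mirrors the structure above. This is a minimal sketch: the markup is a simplified assumption, not a copy of the real page, and minimal_html() is rvest's helper for building a small document from a string (it requires rvest 1.0 or later).

```r
library(rvest)

# Hand-written, simplified copy of one quote block (an assumption, not the live page)
snippet <- minimal_html('
  <div class="quote">
    <span class="text">Sample quote text</span>
    <span>by <small class="author">Sample Author</small></span>
    <div class="tags">
      <a class="tag" href="/tag/one/">one</a>
      <a class="tag" href="/tag/two/">two</a>
    </div>
  </div>')

# Select the quote container, then look inside it with the selectors described above
block <- html_element(snippet, "div.quote")

sample_text   <- block %>% html_element("span.text") %>% html_text()
sample_author <- block %>% html_element("small.author") %>% html_text()
sample_tags   <- block %>% html_elements("a.tag") %>% html_text()

sample_text    # "Sample quote text"
sample_author  # "Sample Author"
sample_tags    # "one" "two"
```

If a selector returns nothing here, it will not work on the real page either, so this is a cheap way to debug selectors.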

Retrieving Data from Web Pages

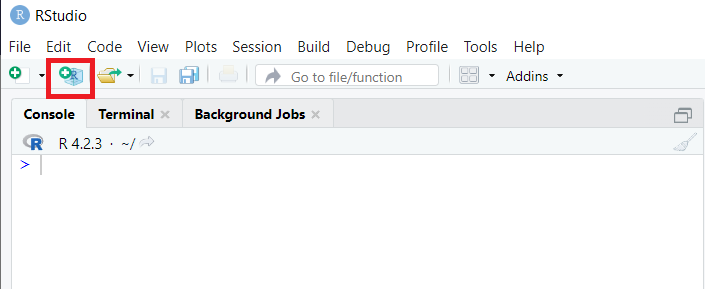

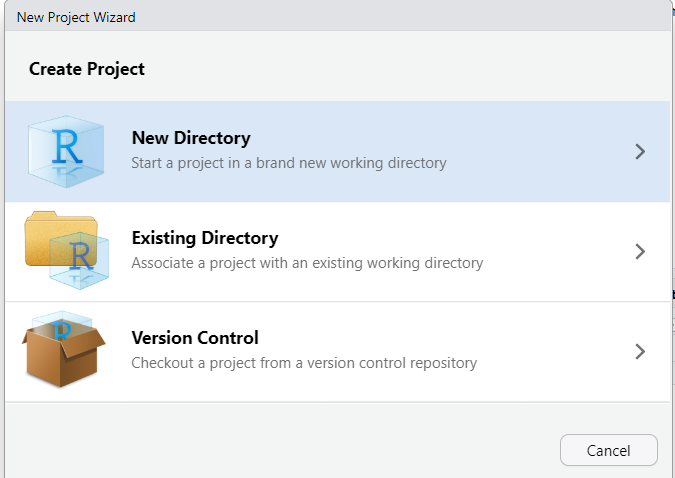

Let's look at extracting data in different formats: HTML, XML, and JSON. First, open RStudio and create a new project:

We can choose from three options: create a new folder, place our project in an existing folder, or use version control to manage the project.

Choose "New Directory" so that all project files are kept together, enter the project name, and click "Create Project". RStudio then switches the workspace to the new project.

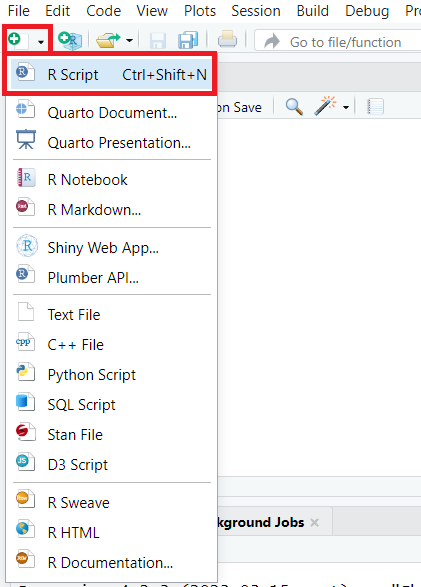

Let's also create a new script and save it in our project:

The preparations are now complete, and we can start writing the scraper.

Scraping HTML pages

To write a simple scraper to extract data from web pages, we need the rvest package. To install it, run the following command in the RStudio console:

install.packages("rvest")

Wait for the package and its dependencies to download and install, then switch to the script editor (the top pane), where we'll write the script.

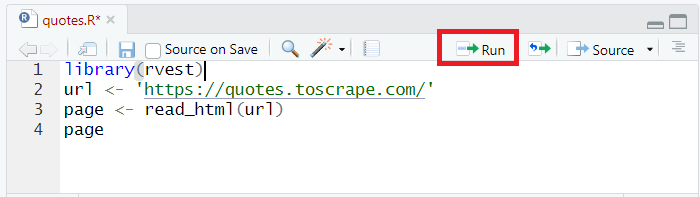

First, load the package we just installed:

library(rvest)

Next, request quotes.toscrape.com with read_html(), which downloads and parses the page, and store the result in the page variable:

url <- 'https://quotes.toscrape.com/'

page <- read_html(url)

To print the result to the console, just type the variable name:

page

Let's run the script to make sure everything works. Press Ctrl+Enter or click the Run button. Each press runs the current line (or the selected lines) and moves the cursor to the next line, so start with the cursor on the first line, or select all the code and run it at once.

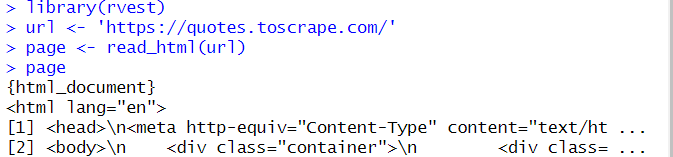

The console shows a summary of the parsed HTML document:

As mentioned earlier, each quote on quotes.toscrape.com is stored in a <div> tag with the class "quote". To extract these blocks, use html_nodes(), which returns every element matching the given CSS selector (in rvest 1.0 and later, html_elements() is the preferred name for it; html_nodes() still works):

quotes <- page %>%
  html_nodes("div.quote")

Now quotes holds a node set with one element per quote on the page. Let's extract just the quote text from it:

quote <- quotes %>%
  html_nodes("span.text") %>%
  html_text()

html_text() returns only the text inside the matched tags. Without it, quote would hold the HTML elements themselves, markup included.

Similarly, we obtain data on the authors of quotes and also put them into a variable:

author <- quotes %>%
  html_nodes("small.author") %>%
  html_text()

Now let's print both variables:

quote
author

Result:

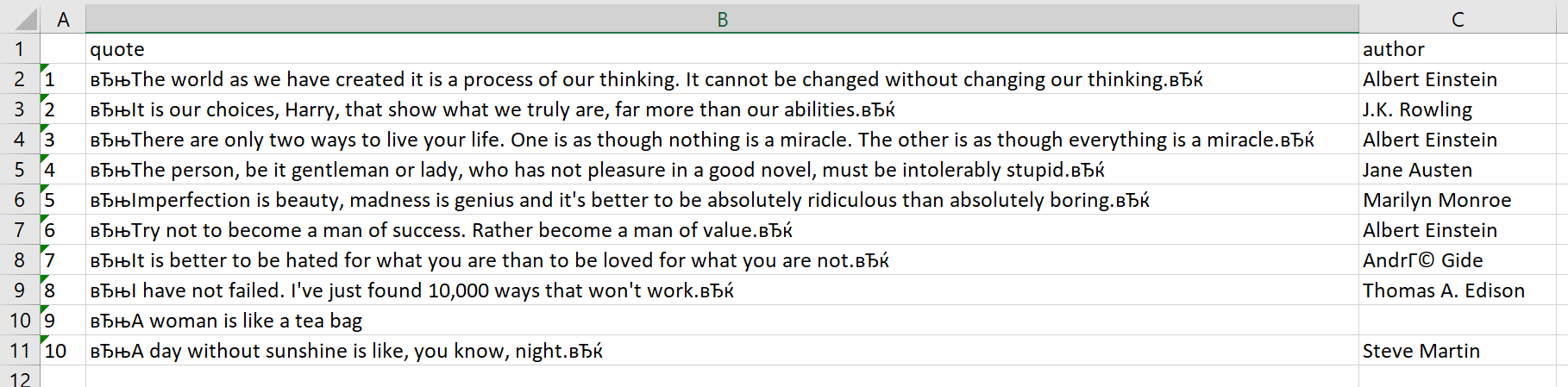

> quote
 [1] "“The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.”"
 [2] "“It is our choices, Harry, that show what we truly are, far more than our abilities.”"
 [3] "“There are only two ways to live your life. One is as though nothing is a miracle. The other is as though everything is a miracle.”"
 [4] "“The person, be it gentleman or lady, who has no pleasure in a good novel, must be intolerably stupid.”"
 [5] "“Imperfection is beauty, madness is genius and it's better to be absolutely ridiculous than absolutely boring.”"
 [6] "“Try not to become a man of success. Rather become a man of value.”"
 [7] "“It is better to be hated for what you are than to be loved for what you are not.”"
 [8] "“I have not failed. I've just found 10,000 ways that won't work.”"
 [9] "“A woman is like a tea bag; you never know how strong it is until it's in hot water.”"
[10] "“A day without sunshine is like, you know, night.”"
> author
 [1] "Albert Einstein"   "J.K. Rowling"
 [3] "Albert Einstein"   "Jane Austen"
 [5] "Marilyn Monroe"    "Albert Einstein"
 [7] "André Gide"        "Thomas A. Edison"
 [9] "Eleanor Roosevelt" "Steve Martin"

The whole code:

library(rvest)

url <- 'https://quotes.toscrape.com/'
page <- read_html(url)

# One node per quote block
quotes <- page %>%
  html_nodes("div.quote")

# Quote text and author name from each block
quote <- quotes %>%
  html_nodes("span.text") %>%
  html_text()

author <- quotes %>%
  html_nodes("small.author") %>%
  html_text()

quote
author

We'll come back to this example later to clean up the result, including removing the extra characters. For now, let's structure the data and save it to a CSV file.


To structure the data, we'll use a data frame, R's standard structure for tabular data. First, create a data frame from the quote and author variables:

all_quotes <- data.frame(quote, author)

Now set the column headers of the table:

names(all_quotes) <- c("quote", "author")

Finally, save the data to a CSV file:

write.csv(all_quotes, file = "./quotes.csv")

The quotes.csv file is saved in the project folder. Let's open it, split the text into columns if your spreadsheet application doesn't do this automatically, and look at the result:

As we can see, there are extra characters in the quotes column, but we'll remove them a little later.

Scraping XML and JSON data

When scraping data from websites, you will often work with data in XML or JSON format. These formats are commonly used to exchange data between web applications, as well as to store and transport data. Fortunately, R has mature packages for working with both formats.

Scraping XML Data

XML stands for "Extensible Markup Language" and is a popular format for data exchange. Like HTML, XML data consists of elements nested within other elements. To scrape XML data in R, we can use the XML or xml2 package; both provide functions for parsing and navigating XML documents.

To demonstrate how to work with XML, let's create a file named example.xml in the project folder and paste a sample document into it. We'll use a sample plant catalog and process it with the xml2 and XML libraries in turn.
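If you don't have a sample file at hand, a minimal sketch like the following writes one from R. The root element name CATALOG and the plant values are illustrative assumptions; only the PLANT child element names are taken from the code in this section.

```r
library(xml2)

# Illustrative two-plant catalog. The root name CATALOG and the values are
# assumptions; the child element names match the XPath queries used below.
sample_xml <- '<?xml version="1.0" encoding="UTF-8"?>
<CATALOG>
  <PLANT>
    <COMMON>Bloodroot</COMMON>
    <BOTANICAL>Sanguinaria canadensis</BOTANICAL>
    <ZONE>4</ZONE>
    <LIGHT>Mostly Shady</LIGHT>
    <PRICE>$2.44</PRICE>
    <AVAILABILITY>031599</AVAILABILITY>
  </PLANT>
  <PLANT>
    <COMMON>Columbine</COMMON>
    <BOTANICAL>Aquilegia canadensis</BOTANICAL>
    <ZONE>3</ZONE>
    <LIGHT>Mostly Shady</LIGHT>
    <PRICE>$9.37</PRICE>
    <AVAILABILITY>030699</AVAILABILITY>
  </PLANT>
</CATALOG>'

# Write the sample to the project folder so read_xml("./example.xml") can find it
writeLines(sample_xml, "example.xml")

# Quick check: parse it back and count the PLANT elements
length(xml_find_all(read_xml("example.xml"), ".//PLANT"))  # 2
```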

Parsing XML with the xml2 library

Let's use the console and install the xml2 package with the command:

install.packages("xml2")

After that, create a new script in the project and load the xml2 library:

library(xml2)

Read the whole XML file into a variable:

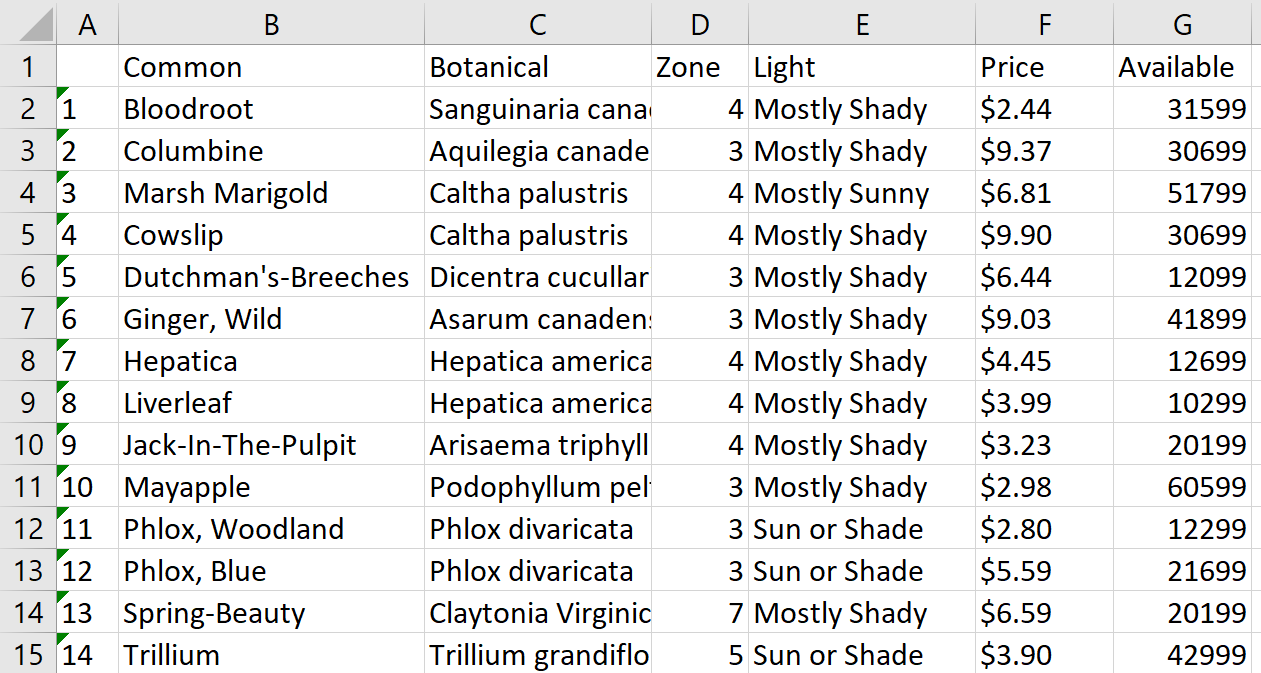

doc <- read_xml("./example.xml")

Next, we navigate the document structure with XPath expressions and store each field in its own variable:

plant <- xml_find_all(doc, ".//PLANT")

# Each call below collects one field from all plants at once, so the columns
# line up only if every PLANT element contains every field exactly once
common <- xml_find_all(plant, ".//COMMON")
botanical <- xml_find_all(plant, ".//BOTANICAL")
zone <- xml_find_all(plant, ".//ZONE")
light <- xml_find_all(plant, ".//LIGHT")
price <- xml_find_all(plant, ".//PRICE")
avail <- xml_find_all(plant, ".//AVAILABILITY")

To save the data as a table, put it into a data frame, set the column headers, and write it to plants.csv:

all_plants <- data.frame(xml_text(common), xml_text(botanical), xml_text(zone), xml_text(light), xml_text(price), xml_text(avail))
names(all_plants) <- c("Common", "Botanical", "Zone", "Light", "Price", "Available")
write.csv(all_plants, file = "./plants.csv")

Let's open the file, split the text into columns if needed, and look at the result:

This gives us a CSV file built from the XML structure that is easy to process further. The same method works for local files and for XML documents on the web; for a web page, pass its address instead of a file path:

doc <- read_xml("URL")

The full code:

library(xml2)

doc <- read_xml("./example.xml")

# Find all PLANT elements, then each field inside them
plant <- xml_find_all(doc, ".//PLANT")
common <- xml_find_all(plant, ".//COMMON")
botanical <- xml_find_all(plant, ".//BOTANICAL")
zone <- xml_find_all(plant, ".//ZONE")
light <- xml_find_all(plant, ".//LIGHT")
price <- xml_find_all(plant, ".//PRICE")
avail <- xml_find_all(plant, ".//AVAILABILITY")

# Convert the nodes to text and save them as a table
all_plants <- data.frame(xml_text(common), xml_text(botanical), xml_text(zone), xml_text(light), xml_text(price), xml_text(avail))
names(all_plants) <- c("Common", "Botanical", "Zone", "Light", "Price", "Available")
write.csv(all_plants, file = "./plants.csv")

That was the first option, using the xml2 library. Now let's do the same with the XML library.

Processing XML with the XML library

This time we'll use the XML package (loaded together with the base methods package), which lets us shorten and simplify the code. First, install XML from the console:

install.packages("XML")

Load the necessary libraries in a new script:

library("XML")
library("methods")

Now read the XML file directly into a data frame:

all_plants <- xmlToDataFrame("./example.xml")

Add column headers and save the result to a CSV file:

names(all_plants) <- c("Common", "Botanical", "Zone", "Light", "Price", "Available")
write.csv(all_plants, file = "./plants2.csv")

Here is the full code:

library("XML")
library("methods")

# Each PLANT element becomes one row, each child element one column
all_plants <- xmlToDataFrame("./example.xml")

names(all_plants) <- c("Common", "Botanical", "Zone", "Light", "Price", "Available")
write.csv(all_plants, file = "./plants2.csv")

This produces the same table with much less code.

Scraping JSON Data

JSON stands for "JavaScript Object Notation" and is a lightweight format for data exchange. JSON data is structured as a collection of key-value pairs, where values can be strings, numbers, arrays, or other objects. To scrape JSON data in R, we can use the jsonlite package, which provides functions for parsing and manipulating JSON data.

First, let's use the console and install the necessary library:

install.packages("jsonlite")

Now load it in the project:

library(jsonlite)

We'll use the following JSON file (example.json) as an example:

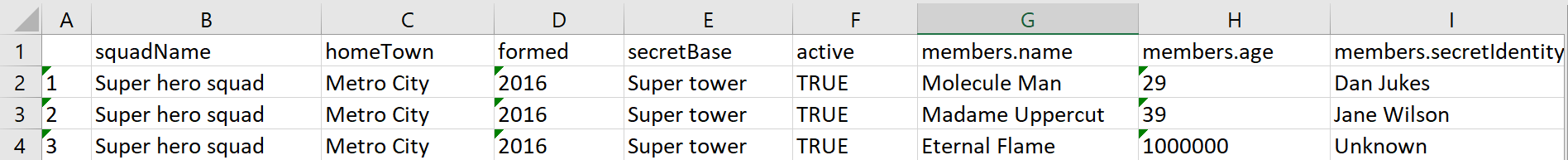

{
  "squadName": "Super hero squad",
  "homeTown": "Metro City",
  "formed": 2016,
  "secretBase": "Super tower",
  "active": true,
  "members": [
    {
      "name": "Molecule Man",
      "age": 29,
      "secretIdentity": "Dan Jukes"
    },
    {
      "name": "Madame Uppercut",
      "age": 39,
      "secretIdentity": "Jane Wilson"
    },
    {
      "name": "Eternal Flame",
      "age": 1000000,
      "secretIdentity": "Unknown"
    }
  ]
}

Like the XML package, jsonlite can convert data straight into data frames: fromJSON() returns the top-level object as a list, and arrays of objects, such as members, become data frames. Let's use this to get data that is convenient for further processing:

heroes <- fromJSON("./example.json")

Let's save the result to a CSV file. write.csv() converts the list to a data frame first, repeating the squad-level values on every row:

write.csv(heroes, file = "./heroes.csv")

As a result, we get a table with the following contents:

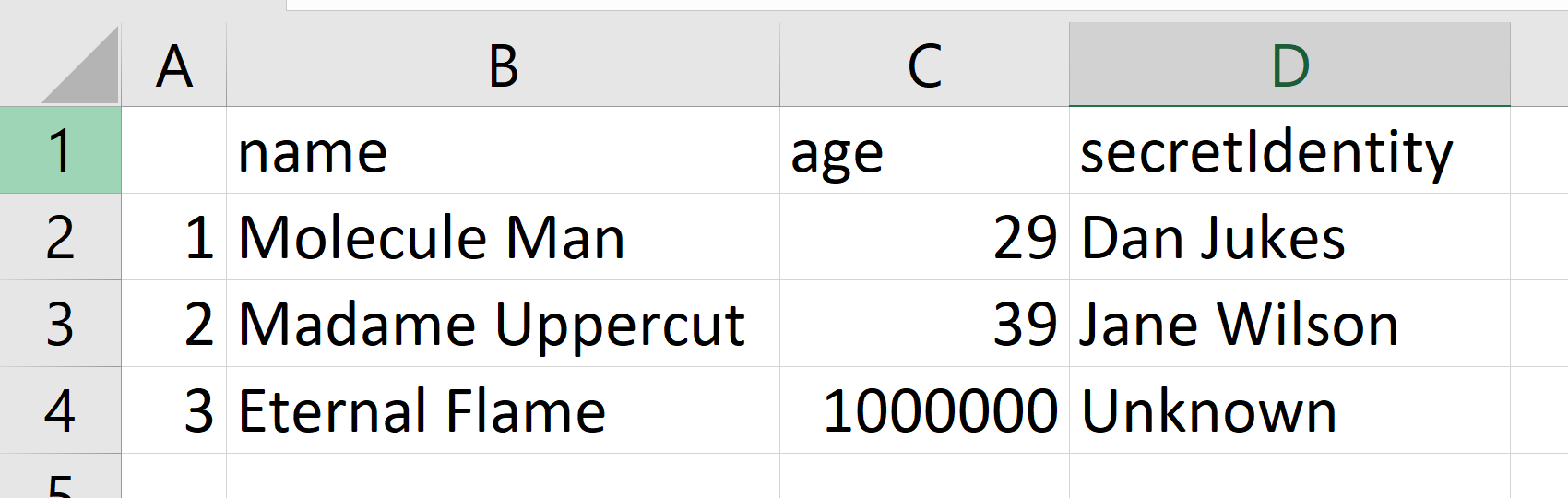

Now let's change the task a little. Suppose we want to save only the data in the members field. To do this, we pass heroes$members, the data frame stored in that field, to the save command:

write.csv(heroes$members, file = "./heroes.csv")

Now the file looks different:

Working with XML or JSON data differs somewhat from scraping HTML, but with packages like xml2, XML, and jsonlite it is straightforward in R.
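To see what fromJSON() actually returns, you can parse a shortened copy of the example straight from a string (fromJSON() accepts JSON text as well as file paths and URLs). In this minimal sketch, the top-level object becomes a named list and the members array of objects becomes a data frame, which is why heroes$members can be written to CSV directly.

```r
library(jsonlite)

# Shortened copy of the example above (two members only), parsed from a string
json_text <- '{
  "squadName": "Super hero squad",
  "formed": 2016,
  "active": true,
  "members": [
    {"name": "Molecule Man", "age": 29, "secretIdentity": "Dan Jukes"},
    {"name": "Madame Uppercut", "age": 39, "secretIdentity": "Jane Wilson"}
  ]
}'

heroes <- fromJSON(json_text)

class(heroes)          # "list": the top-level object becomes a named list
class(heroes$members)  # "data.frame": the array of objects is simplified
heroes$members$name    # "Molecule Man" "Madame Uppercut"
```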

Scraping dynamic content using RSelenium

We have already written about scraping with Selenium in Python. Selenium also has an R counterpart, RSelenium. It drives a real browser (optionally in headless mode), so it can scrape content generated dynamically by JavaScript and simulate the behavior of a real user, which can reduce the risk of being blocked.

To use RSelenium, you need to install the package and download a driver for the browser you want to use. We'll use ChromeDriver for Google Chrome. To install the package, run this command in the RStudio console:

install.packages("RSelenium")

Download the driver from the official site and place it in the project folder. The driver version must match the version of your installed Chrome browser.

RSelenium needs two parts: a server and a client. The server requires Java, so install it if it isn't already on your computer.


Next, download selenium-server-standalone from the official Selenium site and place it in the project folder. We'll use selenium-server-standalone-4.0.0-alpha-2. (Later Selenium 4 releases are named selenium-server-VERSION.jar and are started with an extra standalone argument, for example java -jar selenium-server-VERSION.jar standalone.)

Once everything is in place, start the server from the project folder. On Windows, open the folder in File Explorer, type cmd in the address bar, and press Enter to open a command prompt there. Then run:

java -jar selenium-server-standalone-VERSION.jar

In our case, the command looks like this:

java -jar selenium-server-standalone-4.0.0-alpha-2.jar

If everything is set up correctly, the server starts. Note the port it listens on; we'll need it later:

C:\Users\user\Documents\Examples>java -jar selenium-server-standalone-4.0.0-alpha-2.jar
17:16:24.873 INFO [GridLauncherV3.parse] - Selenium server version: 4.0.0-alpha-2, revision: f148142cf8
17:16:25.029 INFO [GridLauncherV3.lambda$buildLaunchers$3] - Launching a standalone Selenium Server on port 4444
17:16:25.529 INFO [WebDriverServlet.<init>] - Initialising WebDriverServlet
17:16:26.061 INFO [SeleniumServer.boot] - Selenium Server is up and running on port 4444

Now go back to RStudio, create a new script in the project, and load the RSelenium library:

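library(RSelenium)

Next, specify the driver settings: the browser name, the server port, and the driver version. In our case, the settings look like this:

rdriver <- rsDriver(browser = "chrome",
                    port = 4444L,
                    chromever = "112.0.5615.28")

Keep in mind that rsDriver() starts its own Selenium server on the given port, so if the server you launched manually is still running on port 4444, stop it first or choose a different port here.

rsDriver() returns a list with a server and a client. The client is what navigates through pages and scrapes data:

remDr <- rdriver$client

Go to the site from which we are going to collect data: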

remDr$navigate("https://quotes.toscrape.com/")

Once the page is open, collect all the quote blocks into a variable:

html_quotes <- remDr$findElements(using = "css selector", value = "div.quote")

Next, create two lists and fill them with the quotes and their authors:

quotes <- list()
authors <- list()

# Walk through each quote block and read the text of its child elements
for (html_quote in html_quotes) {
  quotes <- append(
    quotes,
    html_quote$findChildElement(using = "css selector", value = "span.text")$getElementText()
  )
  authors <- append(
    authors,
    html_quote$findChildElement(using = "css selector", value = "small.author")$getElementText()
  )
}

Now, as before, put the data into a data frame, set the column headers, and save it in CSV format:

all_quotes <- data.frame(
  unlist(quotes),
  unlist(authors)
)
names(all_quotes) <- c("quote", "author")
write.csv(all_quotes, file = "./quotes.csv")

Finally, close the browser and stop the Selenium server started by rsDriver():

remDr$close()
rdriver$server$stop()

As a result, we get the same file as before, but now we can also interact with elements on the page, run a headless browser, and emulate the behavior of a real user to reduce the chance of being blocked.
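rsDriver() starts its own Selenium server on the port you give it. If you would rather reuse the server you launched manually with java -jar, a sketch like the one below connects to it with RSelenium's remoteDriver() instead. The helper name is hypothetical, and it assumes the server is listening on localhost:4444 and can find ChromeDriver. remoteDriver() uses the /wd/hub path by default, which Selenium 3-style servers expect; newer servers may need a different path argument.

```r
# Hypothetical helper: attach to a Selenium server started manually with
# `java -jar ...` instead of letting rsDriver() launch its own.
connect_to_running_server <- function(port = 4444L, browser = "chrome") {
  remDr <- RSelenium::remoteDriver(
    remoteServerAddr = "localhost",
    port = port,
    browserName = browser
  )
  remDr$open()  # opens a new browser session on that server
  remDr
}

# Usage (needs the running server and a ChromeDriver it can find):
# remDr <- connect_to_running_server()
# remDr$navigate("https://quotes.toscrape.com/")
# ...scrape as above...
# remDr$close()
```

With this approach you don't call rdriver$server$stop(); stop the manually started server with Ctrl+C in its command prompt when you're done.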

Full script code:

library(RSelenium)

# Start a Selenium server plus a Chrome session; rsDriver() returns both
rdriver <- rsDriver(browser = "chrome",
                    port = 4444L,
                    chromever = "112.0.5615.28")
remDr <- rdriver$client

remDr$navigate("https://quotes.toscrape.com/")

html_quotes <- remDr$findElements(using = "css selector", value = "div.quote")

quotes <- list()
authors <- list()

for (html_quote in html_quotes) {
  quotes <- append(
    quotes,
    html_quote$findChildElement(using = "css selector", value = "span.text")$getElementText()
  )
  authors <- append(
    authors,
    html_quote$findChildElement(using = "css selector", value = "small.author")$getElementText()
  )
}

all_quotes <- data.frame(
  unlist(quotes),
  unlist(authors)
)
names(all_quotes) <- c("quote", "author")
write.csv(all_quotes, file = "./quotes.csv")

# Close the browser and stop the server started by rsDriver()
remDr$close()
rdriver$server$stop()

Overall, RSelenium is a powerful tool for scraping web pages with dynamic content, and it greatly expands what you can do with web scraping in R.

For example, suppose you want to scrape a page that only shows additional content after you click a "Load More" button. A plain read_html() request returns only the content present in the initial HTML, and clicking the button by hand every time doesn't scale. With RSelenium, you can automate the clicks.
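A hypothetical helper for that case is sketched below. It repeatedly looks for the button with findElements(), clicks it with clickElement(), and waits for new content, stopping when the button is gone or after a maximum number of clicks. The selector button.load-more, the pause, and the click limit are assumptions; on some sites the button stays in the page but becomes hidden or disabled, in which case you would need a different stop condition.

```r
# Hypothetical helper: keep clicking a "Load More" button until it disappears.
# The CSS selector and the limits are assumptions; adjust them for your site.
click_load_more <- function(remDr, selector = "button.load-more",
                            max_clicks = 20, pause = 2) {
  clicks <- 0
  while (clicks < max_clicks) {
    buttons <- remDr$findElements(using = "css selector", value = selector)
    if (length(buttons) == 0) break   # no button left: everything is loaded
    buttons[[1]]$clickElement()
    clicks <- clicks + 1
    Sys.sleep(pause)                  # give the page time to render new items
  }
  clicks                              # number of clicks performed
}

# Usage, after remDr$navigate(...) to the page with the button:
# click_load_more(remDr)
# html_quotes <- remDr$findElements(using = "css selector", value = "div.quote")
```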


Tips and Tricks for Effective Web Scraping in R

Here are some tips and tricks to help you improve your web scraping skills in R. They cover scraping multiple pages and websites, extracting specific types of data, handling authentication, dealing with missing or incorrect data, and removing unwanted elements or symbols.

Use delays for scraping multiple pages and websites

When scraping multiple pages or websites, be respectful of website owners and avoid overloading their servers. You can use the Sys.sleep() function to add a delay between requests. It's also good practice to randomize the delay: this makes your scraper's traffic less predictable and reduces the chance that it is detected and your IP address is blocked.

The runif(n, min, max) function returns n random numbers drawn uniformly between min and max, which makes it a convenient source of random delays:

Sys.sleep(runif(1, 0.3, 10))

This pauses for a random time between 0.3 and 10 seconds before the next request; n = 1 means that runif() returns a single value.
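To apply this to several pages, you can wrap the requests in a loop with a random pause between them. The helper below is a hypothetical sketch: scrape_pages() and its delay defaults are our own choices, and it relies on quotes.toscrape.com numbering its pages as /page/1/, /page/2/, and so on. The fetch argument defaults to rvest's read_html() but can be swapped out, which also makes the function easy to test without network access.

```r
# Hypothetical helper: fetch several pages with a random pause between requests.
# `fetch` defaults to rvest::read_html; delays are in seconds.
scrape_pages <- function(urls, fetch = rvest::read_html,
                         min_delay = 1, max_delay = 5) {
  results <- vector("list", length(urls))
  for (i in seq_along(urls)) {
    results[[i]] <- fetch(urls[[i]])
    if (i < length(urls)) {
      Sys.sleep(runif(1, min_delay, max_delay))  # no pause after the last page
    }
  }
  results
}

# quotes.toscrape.com numbers its pages as /page/1/, /page/2/, ...
page_urls <- sprintf("https://quotes.toscrape.com/page/%d/", 1:3)

# pages <- scrape_pages(page_urls)
# all_text <- unlist(lapply(pages, function(p) rvest::html_text(rvest::html_elements(p, "span.text"))))
```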

Extracting specific types of data

Sometimes you may want to extract specific types of data from a web page, such as images or tables. To do this, identify the HTML tags that contain the data and use the matching extraction functions.

For example, rvest has functions for specific kinds of data. If the page contains an HTML table, html_table() converts it directly into a data frame.
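A minimal sketch of html_table() on a small inline table follows. The table is illustrative (its counts mirror the first page of quotes shown earlier), and minimal_html() simply builds a document from a string so the example runs offline.

```r
library(rvest)

# Illustrative table: quotes per author on the first page of quotes.toscrape.com
page <- minimal_html('
  <table>
    <tr><th>Author</th><th>Quotes</th></tr>
    <tr><td>Albert Einstein</td><td>3</td></tr>
    <tr><td>Jane Austen</td><td>1</td></tr>
  </table>')

# html_element() selects the first <table>; html_table() turns it into a
# data frame (a tibble), using the <th> row as column names
tbl <- page %>% html_element("table") %>% html_table()
tbl
```

On a real page with several tables, html_elements("table") %>% html_table() returns a list with one data frame per table.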

You can also get the address of an image and download it. To get the address (the value of the src attribute):

imgsrc <- read_html(url) %>%
  html_node(xpath = '//*/img') %>%
  html_attr('src')

To download the image once you have its address, resolve it against the page URL (src values are often relative) and write the file in binary mode:

download.file(xml2::url_absolute(imgsrc, url), destfile = basename(imgsrc), mode = "wb")

rvest (together with xml2, which it installs as a dependency) and base R are all you need for these steps.
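When a page has several images, a sketch like the following collects all src values at once and resolves them into absolute URLs with xml2::url_absolute(), so relative paths such as /static/a.png point at the right host. The page URL and markup are hypothetical.

```r
library(rvest)

# Hypothetical page address and markup with a mix of src styles
page_url <- "https://example.com/gallery/"
page <- minimal_html('
  <img src="/static/a.png">
  <img src="thumbs/b.jpg">
  <img src="https://cdn.example.com/c.gif">')

# Collect every src attribute, then resolve relative paths against the page URL
srcs <- page %>% html_elements("img") %>% html_attr("src")
img_urls <- xml2::url_absolute(srcs, page_url)
img_urls
# "https://example.com/static/a.png"
# "https://example.com/gallery/thumbs/b.jpg"
# "https://cdn.example.com/c.gif"

# To download them (not run here), write each file in binary mode:
# for (u in img_urls) download.file(u, destfile = basename(u), mode = "wb")
```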

Scraping with authentication

Some websites require authentication before you can access their data. In such cases, you need to authenticate your requests, for example with HTTP basic authentication or OAuth.

OAuth is a protocol that lets an application access data on your behalf without you sharing your login credentials with it. The httr package provides functions for working with OAuth and authenticating your requests.

For simple (basic) authentication, you need the httr library. Install it with the following command in the RStudio console:

install.packages("httr")

The authenticated request then looks like this:

library(httr)

GET("http://example.com/basic-auth/user/passwd", authenticate("user", "passwd"))

For OAuth, the library also provides the oauth1.0_token, oauth2.0_token, oauth_app, oauth_endpoint, oauth_endpoints, and oauth_service_token functions.

Scraping with authentication can be more complex, but httr is designed to make it easier.
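Many sites use an HTML login form rather than HTTP authentication. As an alternative to httr, rvest can fill in and submit such a form within a session that keeps cookies between requests. The sketch below is hypothetical: login_session() is our own helper, and it assumes that the first form on the login page has fields named username and password, so check the actual name attributes in DevTools first (html_form_set() raises an error for field names that don't exist).

```r
library(rvest)

# Hypothetical helper: log in through an HTML form and return the session.
# Assumes the first form on the page has "username" and "password" fields.
login_session <- function(login_url, user, password) {
  s <- session(login_url)                  # a session keeps cookies between requests
  form <- html_form(s)[[1]]                # first <form> on the login page
  form <- html_form_set(form, username = user, password = password)
  session_submit(s, form)                  # returns the session after submitting
}

# Usage sketch: after logging in, jump to a page and parse it
# s <- login_session("https://quotes.toscrape.com/login", "user", "passwd")
# s <- session_jump_to(s, "https://quotes.toscrape.com/")
# s %>% html_elements("div.quote")
```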

Dealing with missing or incorrect data

When processing large amounts of information, some data is likely to be missing or to come out in the wrong form. Such errors can be caused by formatting problems, encoding problems, or data that simply isn't on the page. Since this happens often, it's worth planning how to handle it in advance.

For example, you can use the ifelse() function to check extracted values for inconsistencies and replace problematic ones with an alternative value, such as NA. This keeps the collected data consistent despite problems along the way.
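The sketch below, built on a hypothetical two-block page where the second quote has no author, shows one way to keep rows aligned: select each container first, then pull one field per container with html_element(), which yields NA when the field is missing. The final ifelse() step also treats empty or whitespace-only strings as missing.

```r
library(rvest)

# Two hypothetical quote blocks; the second one has no author element
page <- minimal_html('
  <div class="quote"><span class="text">First quote</span>
    <small class="author">Author One</small></div>
  <div class="quote"><span class="text">Second quote</span></div>')

blocks <- page %>% html_elements("div.quote")

# html_element() returns exactly one result per block, so a missing
# author becomes NA instead of shifting the remaining rows
quotes  <- blocks %>% html_element("span.text") %>% html_text()
authors <- blocks %>% html_element("small.author") %>% html_text()

# Treat empty or whitespace-only strings as missing as well
authors <- ifelse(is.na(authors) | trimws(authors) == "", NA, authors)

data.frame(quote = quotes, author = authors)
```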

Using Web Scraping API in R

In addition to the web scraping techniques covered earlier, you can also extract data from web pages through APIs (Application Programming Interfaces).

To use an API in R, you can use packages like httr, jsonlite, or curl. They let you send HTTP requests to an API endpoint and receive JSON or XML data in response. Let's look at an example of retrieving Zillow data through a web scraping API with the httr package.

Load the library and define the request headers and body (replace YOUR-API-KEY with your API key):

library(httr)

headers <- c(
  'x-api-key' = 'YOUR-API-KEY',
  'Content-Type' = 'application/json'
)

# Extraction rules map output field names to CSS selectors on the target page
body <- '{
  "extract_rules": {
    "address": "address",
    "price": "[data-test=property-card-price]",
    "seller": "div.cWiizR"
  },
  "url": "https://www.zillow.com/portland-or/"
}'

Now send the request and print the result:

res <- VERB("POST", url = "https://api.hasdata.com/scrape", body = body, add_headers(headers))
cat(content(res, 'text', encoding = 'UTF-8'))

You can refine the script further and save the resulting data. With a scraping API, you don't have to manage proxies, CAPTCHAs, or rate limits yourself; the service that provides the API takes care of them.
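Hand-written JSON strings are easy to break with a missing quote or brace. As an alternative, here is a sketch that builds the same body from an R list with jsonlite's toJSON(); auto_unbox = TRUE writes single values as plain JSON strings rather than one-element arrays. The field names and selectors are copied from the request above.

```r
library(jsonlite)

# The same request body as above, built from an R list instead of a string
body_list <- list(
  extract_rules = list(
    address = "address",
    price   = "[data-test=property-card-price]",
    seller  = "div.cWiizR"
  ),
  url = "https://www.zillow.com/portland-or/"
)

# auto_unbox = TRUE: "url": "..." instead of "url": ["..."]
body <- as.character(toJSON(body_list, auto_unbox = TRUE))
body

# The string can then be sent exactly as before:
# res <- VERB("POST", url = "https://api.hasdata.com/scrape", body = body, add_headers(headers))
```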

Removing Unwanted Elements or Symbols

Sometimes scraped data is messy or inconsistent, with extra spaces, punctuation, or other unwanted characters. Regular expressions help you clean it up quickly and accurately. For example, you can use the gsub() function to remove all non-alphanumeric characters from a string, or str_extract() from the stringr package to extract a specific pattern from a larger string.

For example, the quotes we collected earlier were wrapped in curly quotation marks (“ and ”), which also showed up as garbled characters in the CSV file because of the encoding.

To remove them from the quote variable, add the following line before building the data frame:

quote <- gsub("“|”", "", quote)

This removes the unnecessary characters and makes the result cleaner.
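A slightly fuller cleanup, sketched on two sample strings shaped like the scraped quotes, removes both curly quotation marks with a single character class and also trims and collapses stray whitespace. The \u201c and \u201d escapes are the Unicode code points for “ and ”, which avoids depending on how the script file itself is encoded.

```r
# Sample strings shaped like the scraped quotes (curly quotes, stray spaces)
quote <- c("\u201cThe world as we have created it is a process of our thinking.\u201d",
           "  \u201cA day without sunshine is like, you know,  night.\u201d ")

# Remove the curly quotation marks, then trim the ends and collapse repeated spaces
quote <- gsub("[\u201c\u201d]", "", quote)
quote <- gsub("\\s+", " ", trimws(quote))
quote
# "The world as we have created it is a process of our thinking."
# "A day without sunshine is like, you know, night."
```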

Grouping and Summarizing data

Sometimes you need not only to collect data but also to process it and present it in summary tables. After combining the scraped data into a data frame, you can use functions such as filter(), select(), arrange(), group_by(), and summarise() from the dplyr package (part of the tidyverse) to manipulate and summarize it.

To install dplyr, enter the following command in the RStudio console:

install.packages("dplyr")

Suppose we have a data frame df and need to group its rows by the Name field and count them:

library(dplyr)

df %>% group_by(Name) %>% summarise(count = n())

dplyr has many more functions that are useful for data analysis.
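Applied to the quotes scraped earlier, grouping by author gives the number of quotes per author. This is a sketch: the author vector below is copied from the first-page output shown earlier, and the column name quotes is our own choice.

```r
library(dplyr)

# Authors from the first page of quotes.toscrape.com, as printed earlier
scraped <- data.frame(author = c(
  "Albert Einstein", "J.K. Rowling", "Albert Einstein", "Jane Austen",
  "Marilyn Monroe", "Albert Einstein", "Andr\u00e9 Gide", "Thomas A. Edison",
  "Eleanor Roosevelt", "Steve Martin"
))

# Count quotes per author and put the most quoted authors first
quotes_per_author <- scraped %>%
  group_by(author) %>%
  summarise(quotes = n()) %>%
  arrange(desc(quotes))

quotes_per_author
```

dplyr's count(author, sort = TRUE) is a shorter equivalent of the group_by() and summarise() steps.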

Conclusion and Takeaways

Web scraping is a powerful way to gather data from websites, and R is well suited to it. With packages like rvest, xml2, and jsonlite, you can extract content from web pages with relatively little code, and R's wide range of functions and packages makes it approachable even if you don't have much programming experience.

Web scraping opens up many possibilities for anyone who wants to extract valuable information from the internet. You can collect data from multiple sources, extract specific types of data such as images or tables, and handle common issues like missing or inaccurate values. Whether you're a developer, researcher, or data scientist, web scraping in R is a valuable skill for uncovering insights in web data.