Yelp is a large and popular directory of local businesses such as restaurants, hotels, spas, plumbers, and just about every other type of business. The platform is ideal for finding potential B2B customers.

However, looking up prospects on the platform manually is time-consuming. A web scraping tool can collect the data from Yelp for you, which pays off in the long run.

Why Scrape Yelp?

Yelp data can be valuable and bring success to your business if used correctly. There are many benefits of gathering information from this platform. For example:

- competitive research;

- improving the user experience;

- validating marketing campaigns;

- monitoring customer sentiment;

- generating leads.


Each business listed on Yelp has its own page. This page has information about the business name, phone number, address, link to the site, and opening hours. Business search results are filtered by geographic location, price range, and other unique characteristics. To obtain the required data, you can either use the official Yelp API or create your own web scraper.

We’ve prepared a tutorial in which we build a Yelp scraper that takes a link to a business directory, visits each business page, and collects the following information:

- business name;

- phone number;

- address;

- website link;

- rating;

- the number of reviews.

Analyzing the Site

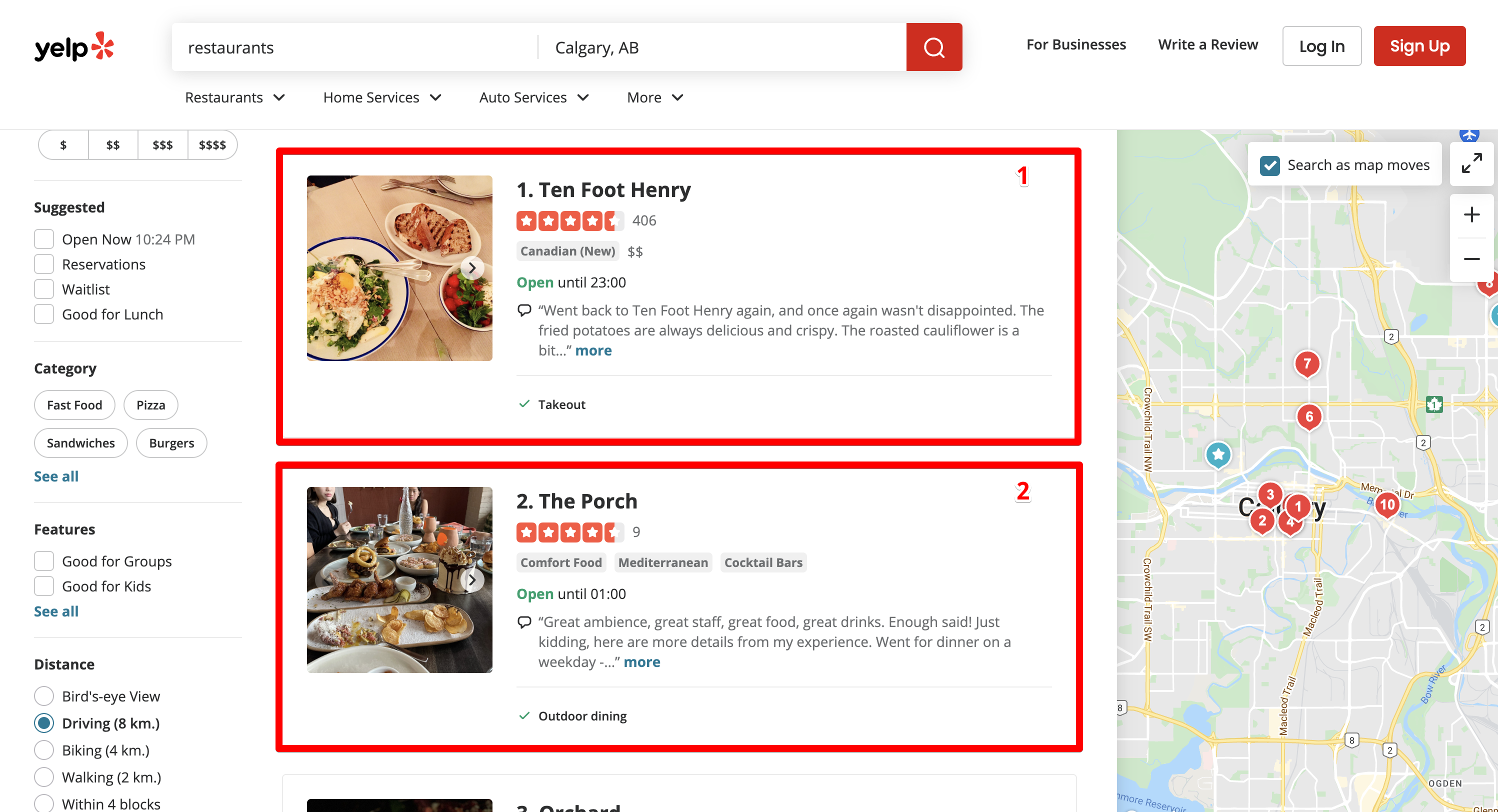

For example, suppose we need information about all the restaurants in Calgary's downtown area. To do this, we entered the query "restaurants" and selected Calgary as the location.

Yelp sent us to the search engine results page:

https://www.yelp.ca/search?find_desc=restaurants&find_loc=Calgary%2C+AB

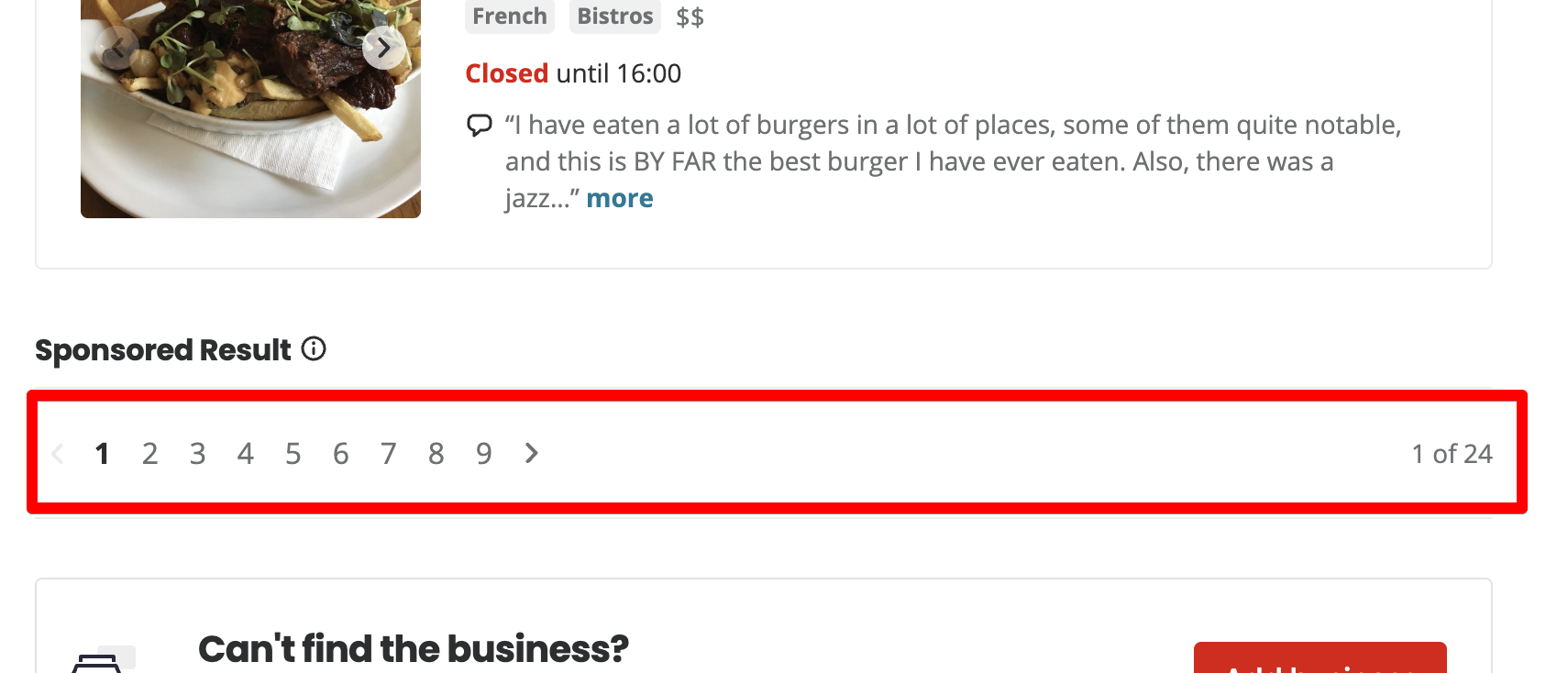

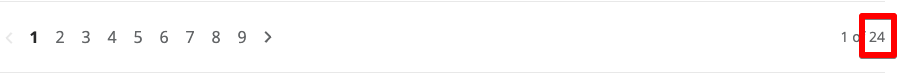

There are 10 restaurants on each page and 24 pages overall.

If you click on page 2, Yelp adds the start parameter to the URL.

https://www.yelp.ca/search?find_desc=restaurants&find_loc=Calgary%2C+AB&start=10

If you move to page 3, the value of the start parameter changes to 20.

https://www.yelp.ca/search?find_desc=restaurants&find_loc=Calgary%2C+AB&start=20

Now we understand how pagination works on Yelp: start is a zero-based offset into the list of results. To display the first 10 entries, set the start parameter to 0. To display entries 11 to 20, set it to 10.
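The offset logic can be captured in a small helper. This is a minimal sketch: the function name buildSearchUrl and the constant RESULTS_PER_PAGE are illustrative names, not part of any Yelp API, and the page size of 10 is simply what the search results showed in this example.

```javascript
// Results per page as observed in the example search (not a documented constant).
const RESULTS_PER_PAGE = 10;

// Build the search URL for a 1-based page number (hypothetical helper).
function buildSearchUrl(description, location, pageNumber) {
  const url = new URL('https://www.yelp.ca/search');
  url.searchParams.set('find_desc', description);
  url.searchParams.set('find_loc', location);
  // start is a zero-based offset: page 1 -> 0, page 2 -> 10, page 3 -> 20.
  url.searchParams.set('start', String((pageNumber - 1) * RESULTS_PER_PAGE));
  return url.toString();
}

console.log(buildSearchUrl('restaurants', 'Calgary, AB', 3));
// https://www.yelp.ca/search?find_desc=restaurants&find_loc=Calgary%2C+AB&start=20
```

Using the built-in URL class lets Node.js handle the encoding of the comma and the space in the location.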

Business Page

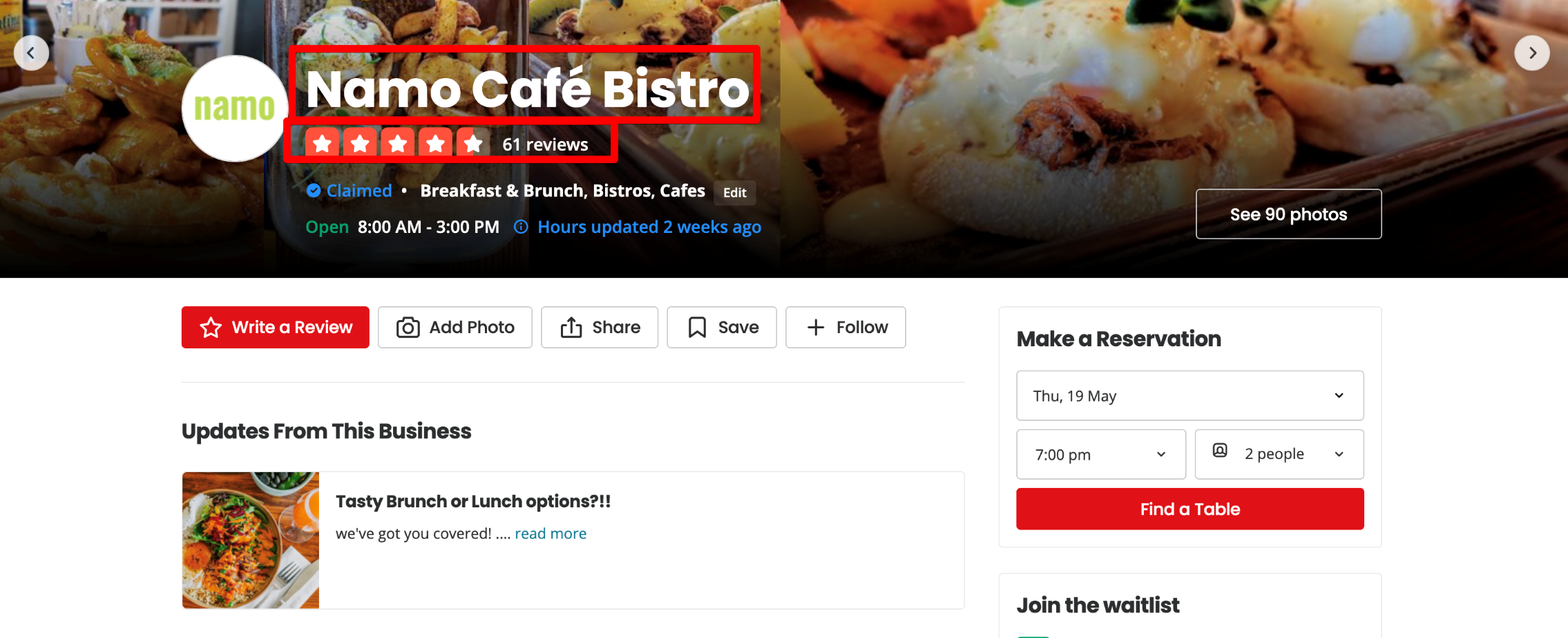

At the top of the business page is the name, rating, and the number of reviews.

Scroll down the page.

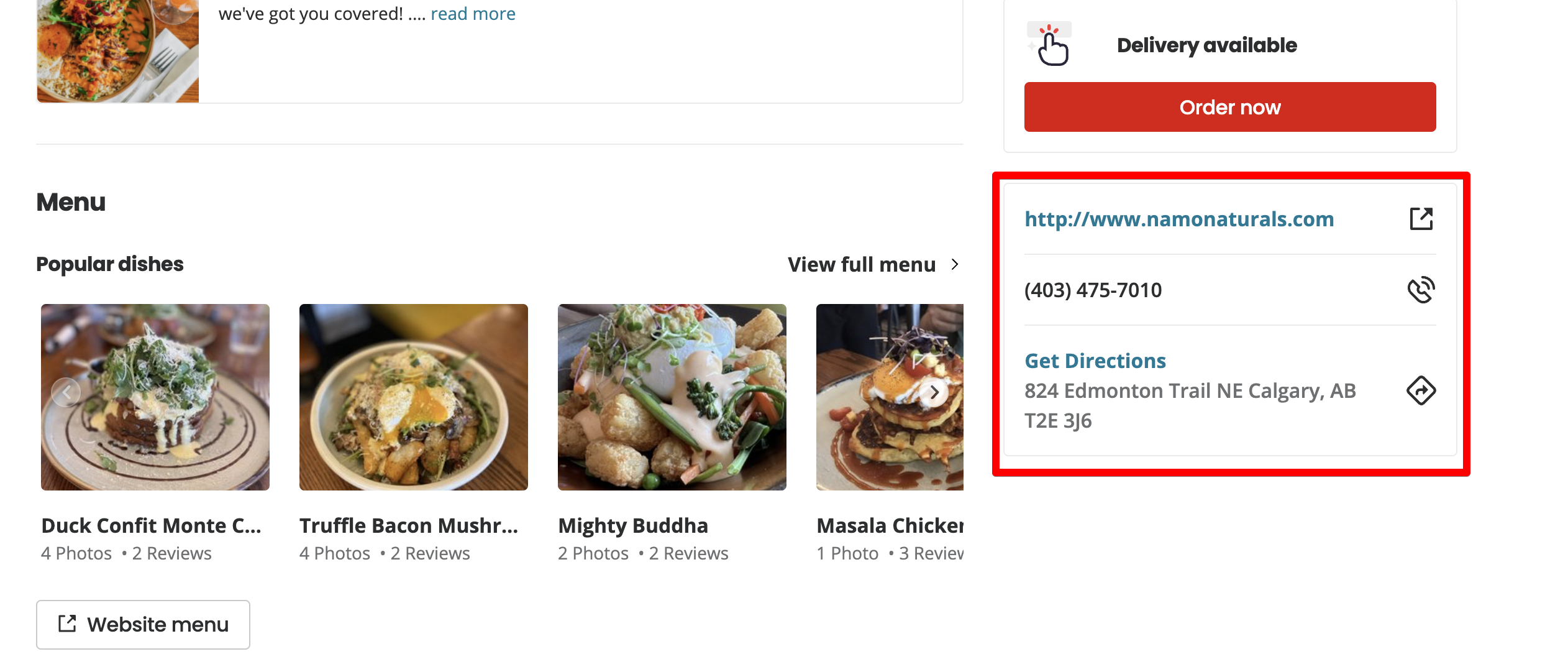

In the second part of the page, we see the rest of the data we’re interested in: a site link, phone number, and address.

Writing a NodeJS Scraper with Puppeteer

You could create your scraper using the Yelp API JavaScript example from the official Yelp website, but that's quite easy. Let's make the task more challenging and build our own scraper with Puppeteer.

Create a Directory for the Scraper

scrapeit@MBP-scrapeit scrapeit-cloud-samples % mkdir yelp-scraper

scrapeit@MBP-scrapeit scrapeit-cloud-samples % cd yelp-scraper

Initializing the Project via npm

Initialize the project with npm:

scrapeit@MBP-scrapeit yelp-scraper % npm init

This utility will walk you through creating a package.json file.

It only covers the most common items, and tries to guess sensible defaults.

See `npm help init` for definitive documentation on these fields

and exactly what they do.

Use `npm install ` afterwards to install a package and

save it as a dependency in the package.json file.

Press ^C at any time to quit.

package name: (yelp-scraper)

version: (1.0.0)

description: Yelp business scraper

entry point: (index.js)

test command:

git repository:

keywords:

author: Roman M

license: (ISC)

About to write to /Users/scrapeit/workspace/scrapeit-cloud-samples/yelp-scraper/package.json:

{

"name": "yelp-scraper",

"version": "1.0.0",

"description": "Yelp business scraper",

"main": "index.js",

"scripts": {

"test": "echo \"Error: no test specified\" && exit 1"

},

"author": "Roman M",

"license": "ISC"

}

Is this OK? (yes) yes

Installing the Dependencies We Need

We'll use two dependencies:

- Puppeteer - a Node.js library that provides an API for controlling and interacting with the Chrome browser.

- Cheerio - an implementation of core jQuery designed specifically for server-side use.

We’re going to use Puppeteer to open Yelp pages and get their HTML.

npm i puppeteer

Cheerio is used for HTML parsing.

npm i cheerio

Scraper Architecture

We create several classes and divide the responsibility between them:

- YelpScraper - the class responsible for getting HTML from Yelp.

- scrape(url)

- YelpParser - the class responsible for HTML parsing we've got from the YelpScraper class.

- getPagesAmount(listingPageHtml)

- getBusinessLinks(listingPageHtml)

- extractBusinessInformation(businessPageHtml)

We’ll build an app that uses these two classes, with index.js tying everything together.

Programming

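First, let's transform the architecture into code. In other words, let's outline the classes and declare their methods.

Create two files, yelp-scraper.js and yelp-parser.js, in the root folder of the project. The code below uses ES module syntax (import and export), so add "type": "module" to package.json for Node.js to run it.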

// yelp-scraper.js

class YelpScraper {

async scrape(url) {

}

}

export default YelpScraper;

// yelp-parser.js

class YelpParser {

getPagesAmount(listingPageHtml) {

}

getBusinessLinks(listingPageHtml) {

}

extractBusinessInformation(businessPageHtml) {

}

}

export default YelpParser;

Implementing the scrape method of the YelpScraper class

// yelp-scraper.js

import puppeteer from "puppeteer";

class YelpScraper {

async scrape(url) {

let browser = null;

let page = null;

let content = null;

try {

browser = await puppeteer.launch({ headless: false });

page = await browser.newPage();

await page.goto(url, { waitUntil: 'load' });

content = await page.content();

} catch(e) {

console.log(e.message);

} finally {

if (page) {

await page.close();

}

if (browser) {

await browser.close();

}

}

return content;

}

}

export default YelpScraper;

The main and only responsibility of the scrape method is to return the HTML of the passed page.

To do this, you have to:

Open the browser:

browser = await puppeteer.launch({ headless: false });

page = await browser.newPage();

Go to the page and wait for it to load:

await page.goto(url, { waitUntil: 'load' });

Write the page's HTML into the content variable:

content = await page.content();

In case of an error, log it:

console.log(e.message);

Close the page and the browser:

if (page) {

await page.close();

}

if (browser) {

await browser.close();

}

Return the content:

return content;

Implementing YelpParser class methods

// yelp-parser.js

import * as cheerio from 'cheerio';

class YelpParser {

getPagesAmount(listingPageHtml) {

const $ = cheerio.load(listingPageHtml);

const paginationTotalText = $('.pagination__09f24__VRjN4 .css-chan6m').text();

const totalPages = paginationTotalText.match(/of.([0-9]+)/)[1];

return Number(totalPages);

}

getBusinessLinks(listingPageHtml) {

const $ = cheerio.load(listingPageHtml);

const links = $('a.css-1m051bw')

.filter((i, el) => /^\/biz\//.test($(el).attr('href')))

.map((i, el) => $(el).attr('href'))

.toArray();

return links;

}

extractBusinessInformation(businessPageHtml) {

const $ = cheerio.load(businessPageHtml);

const title = $('div[data-testid="photoHeader"]').find('h1').text().trim();

const address = $('address')

.children('p')

.map((i, el) => $(el).text().trim())

.toArray();

const fullAddress = address.join(', ');

const phone = $('p[class=" css-1p9ibgf"]')

.filter((i, el) => /\(\d{3}\)\s\d{3}-\d{4}/g.test($(el).text()))

.map((i, el) => $(el).text().trim())

.toArray()[0];

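const reviewsText = $('span[class=" css-1fdy0l5"]')

.filter((i, el) => /review/.test($(el).text()))

.text()

.trim();

// match() returns null when no digits are found, so guard before indexing.

const reviewsCount = (reviewsText.match(/\d+/) || [''])[0];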

let rating = '';

const headerHtml = $('div[data-testid="photoHeader"]').html();

// Only parse the rating when the header and its aria-label are present;

// otherwise headerHtml may be null and indexOf would throw.

if (headerHtml && headerHtml.indexOf('aria-label="') !== -1) {

const startIndx = headerHtml.indexOf('aria-label="') + 'aria-label="'.length;

rating = Number(headerHtml.slice(startIndx, startIndx + 1)) || '';

}

return {

title,

fullAddress,

phone,

reviewsCount,

rating

}

}

}

export default YelpParser;

The getPagesAmount method takes the HTML of the first listing page and extracts the number of Yelp result pages.

Initialize cheerio and read the text of the element that matches the .pagination__09f24__VRjN4 .css-chan6m selector.

const $ = cheerio.load(listingPageHtml);

const paginationTotalText = $('.pagination__09f24__VRjN4 .css-chan6m').text();

To find an element's selector, right-click the element on the page and choose Inspect in the Chrome context menu. In the page's source code, find the desired element and copy its selector.

In this case, the element that displays the number of pages has the .css-chan6m class, but other elements on the page share this class, so we also use the selector of its parent element, .pagination__09f24__VRjN4. The resulting selector is .pagination__09f24__VRjN4 .css-chan6m.

To get the total number of pages from the text "1 of 24", we use a regular expression:

const totalPages = paginationTotalText.match(/of.([0-9]+)/)[1];

return Number(totalPages);

As a result, this function returns the number 24, which is the number of pages.
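Note that match returns null when the text does not contain the expected pattern, for example if Yelp changes its markup or serves a block page, and indexing into null throws. A more defensive variant might look like the sketch below; the helper name parsePagesAmount and the fallback value of 1 are assumptions made for illustration.

```javascript
// Extract the total page count from pagination text such as "1 of 24".
// Returns the fallback (default 1) when the pattern is not found.
function parsePagesAmount(paginationText, fallback = 1) {
  const match = /of\s+(\d+)/.exec(paginationText || '');
  return match ? Number(match[1]) : fallback;
}

console.log(parsePagesAmount('1 of 24')); // 24
console.log(parsePagesAmount(''));        // 1
```

Falling back to a single page means the scraper still processes the first listing page instead of crashing.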

The getBusinessLinks function uses the same approach to parse the links to business pages and returns them as an array.
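Because the hrefs found on the listing page are relative paths, it can help to filter, deduplicate, and resolve them in one place. The sketch below works on a plain array of href strings so that it does not depend on Yelp's current class names. normalizeBusinessLinks is a hypothetical helper, the sample paths are made up, and dropping the query string is an assumption about which part of the link identifies a business.

```javascript
const YELP_ORIGIN = 'https://www.yelp.ca';

// Keep only /biz/ links, resolve them against the site origin,
// strip query strings, and remove duplicates while preserving order.
function normalizeBusinessLinks(hrefs) {
  const seen = new Set();
  for (const href of hrefs) {
    if (typeof href !== 'string' || !href.startsWith('/biz/')) continue;
    const url = new URL(href, YELP_ORIGIN);
    seen.add(url.origin + url.pathname);
  }
  return [...seen];
}

console.log(normalizeBusinessLinks([
  '/biz/ten-foot-henry-calgary?osq=restaurants', // made-up sample paths
  '/biz/ten-foot-henry-calgary',
  '/adredir?ad_business_id=abc',
  undefined,
]));
// [ 'https://www.yelp.ca/biz/ten-foot-henry-calgary' ]
```

With absolute URLs like these, the caller would pass each link to scrape as-is instead of prepending the domain.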

The extractBusinessInformation function takes the HTML of the business page and parses the information we need by selectors in the same way.
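The rating logic in extractBusinessInformation reads only the first character after aria-label=", so a fractional rating would be cut down to its integer part. A sketch of a more tolerant parser follows. It assumes the label begins with a number, as in "4.5 star rating", which is an assumption about Yelp's markup rather than a documented format, and parseRating is an illustrative name.

```javascript
// Parse the number (integer or decimal) at the start of the first aria-label
// whose value begins with a digit. Returns '' when nothing usable is found,
// mirroring the empty-string convention used in the parser class.
function parseRating(headerHtml) {
  const match = /aria-label="(\d+(?:\.\d+)?)/.exec(headerHtml || '');
  return match ? Number(match[1]) : '';
}

const rating = parseRating('<div aria-label="4.5 star rating"></div>');
console.log(rating); // 4.5

// Missing header or missing label both yield an empty string.
console.log(parseRating('<div></div>') === ''); // true
console.log(parseRating(null) === '');          // true
```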


Application Entry Point

To connect the parser and scraper classes we'll create an index.js file. This is the application's entry point that starts and controls the data retrieval process.

import YelpScraper from "./yelp-scraper.js";

import YelpParser from "./yelp-parser.js";

const url = 'https://www.yelp.ca/search?find_desc=restaurants&find_loc=Calgary%2C+AB&start=0';

const main = async() => {

const yelpScraper = new YelpScraper();

const yelpParser = new YelpParser();

const firstPageListingHTML = await yelpScraper.scrape(url);

const numberOfPages = yelpParser.getPagesAmount(firstPageListingHTML);

const scrapedData = [];

for (let i = 0; i < numberOfPages; i++) {

const link = `https://www.yelp.ca/search?find_desc=restaurants&find_loc=Calgary%2C+AB&start=${i * 10}`;

const listingPage = await yelpScraper.scrape(link);

const businessesLinks = yelpParser.getBusinessLinks(listingPage);

for (let k = 0; k < businessesLinks.length; k++) {

const businessesLink = businessesLinks[k];

const businessPageHtml = await yelpScraper.scrape(`https://www.yelp.ca${businessesLink}`);

const extractedInformation = yelpParser.extractBusinessInformation(businessPageHtml);

scrapedData.push(extractedInformation);

}

}

console.log(scrapedData);

};

main();

First we initialize our scraper and parser classes.

const yelpScraper = new YelpScraper();

const yelpParser = new YelpParser();

Next, we get the content of the first Yelp search results page and extract the number of pages.

const firstPageListingHTML = await yelpScraper.scrape(url);

const numberOfPages = yelpParser.getPagesAmount(firstPageListingHTML);

Run the loop over the pages, from 0 to 23. In each iteration, we generate a link to a particular search results page and parse the links to the business pages.

const link = `https://www.yelp.ca/search?find_desc=restaurants&find_loc=Calgary%2C+AB&start=${i * 10}`;

const listingPage = await yelpScraper.scrape(link);

const businessesLinks = yelpParser.getBusinessLinks(listingPage);

Run the loop over the business pages. In each iteration, we retrieve the content of the business page and extract the desired information.

for (let k = 0; k < businessesLinks.length; k++) {

const businessesLink = businessesLinks[k];

const businessPageHtml = await yelpScraper.scrape(`https://www.yelp.ca${businessesLink}`);

const extractedInformation = yelpParser.extractBusinessInformation(businessPageHtml);

scrapedData.push(extractedInformation);

}

Run the scraper:

node index.js

Wait for scraping to finish. As a result, the scrapedData variable holds the final data, which we can write to a database or export as CSV.

[

{

title: 'Ten Foot Henry',

fullAddress: '1209 1st Street SW, Calgary, AB T2R 0V3',

phone: '(403) 475-5537',

reviewsCount: '406',

rating: 4

},

{

title: 'The Porch',

fullAddress: '730 17th Avenue SW, Calgary, AB T2S 0B7',

phone: '(587) 391-8500',

reviewsCount: '9',

rating: 4

},

{

title: 'Orchard',

fullAddress: '134 - 620 10 Avenue SW, Calgary, AB T2R 1C3',

phone: '(403) 243-2392',

reviewsCount: '12',

rating: 4

},

...

]
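As one way to produce a CSV from this data, the scraped objects can be serialized by hand. The sketch below is a minimal example, not a full CSV implementation: toCsv and csvEscape are hypothetical helpers, the column list is taken from the fields returned by extractBusinessInformation, and every value is quoted with embedded quotes doubled.

```javascript
const COLUMNS = ['title', 'fullAddress', 'phone', 'reviewsCount', 'rating'];

// Quote a single value and double any embedded quotes.
function csvEscape(value) {
  const text = value === undefined || value === null ? '' : String(value);
  return `"${text.replace(/"/g, '""')}"`;
}

// Turn an array of business objects into CSV text with a header row.
function toCsv(rows, columns = COLUMNS) {
  const header = columns.map(csvEscape).join(',');
  const lines = rows.map((row) => columns.map((c) => csvEscape(row[c])).join(','));
  return [header, ...lines].join('\n');
}

const sample = [
  {
    title: 'Ten Foot Henry',
    fullAddress: '1209 1st Street SW, Calgary, AB T2R 0V3',
    phone: '(403) 475-5537',
    reviewsCount: '406',
    rating: 4,
  },
];

console.log(toCsv(sample));
```

Quoting every field keeps the commas inside fullAddress from being read as column separators. The resulting string could then be saved with Node's fs.writeFileSync, using any file name you like.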


Refining of the Scraper

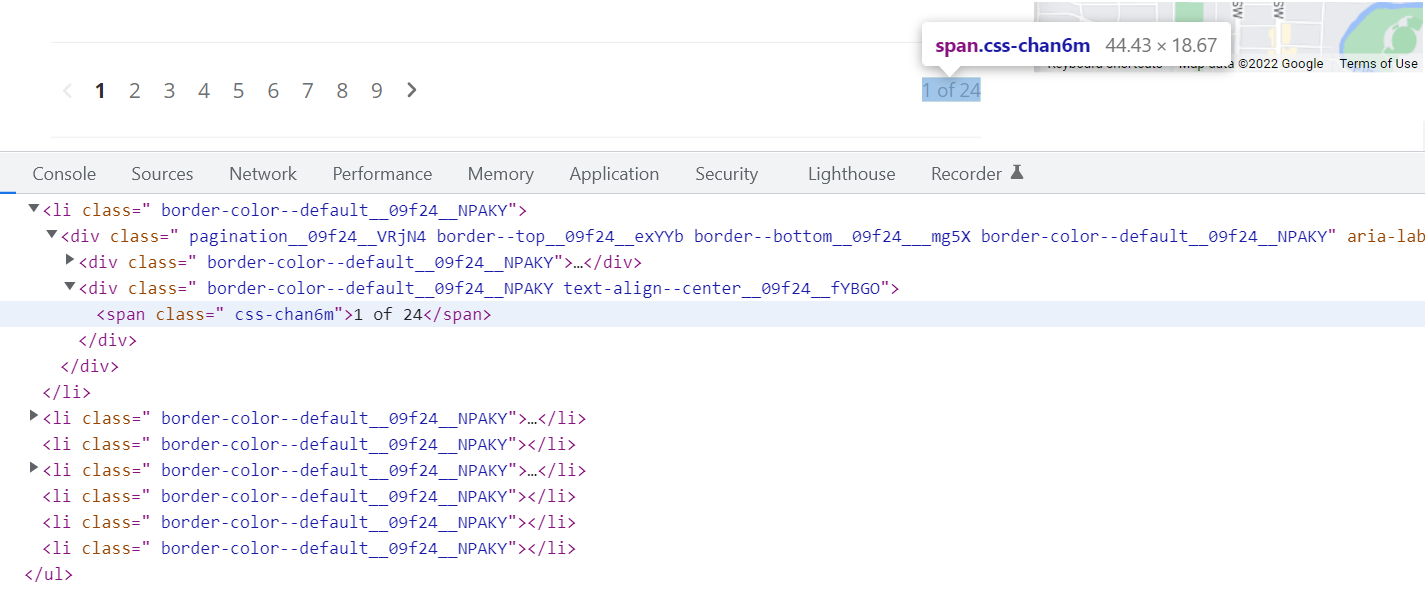

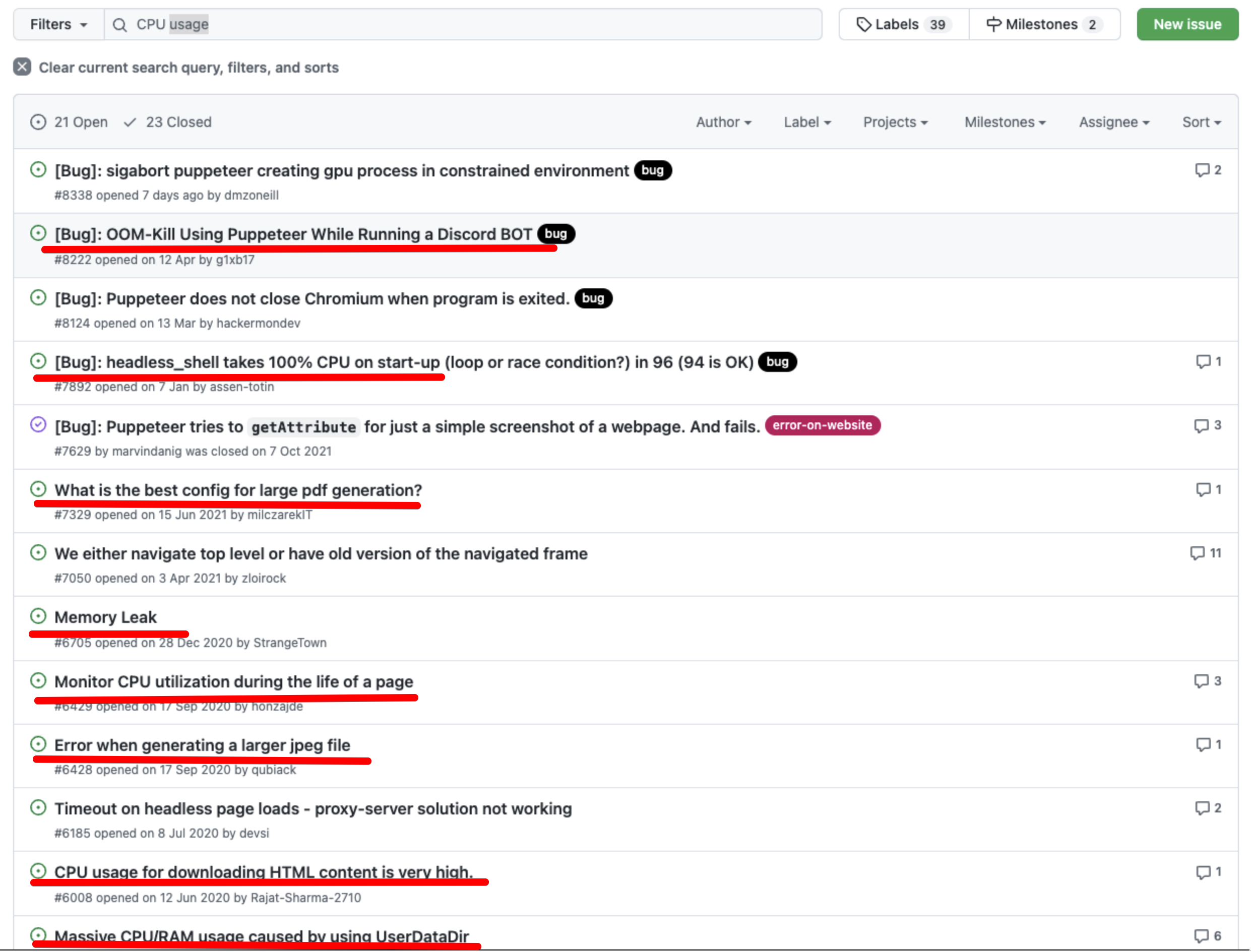

Yelp protects the site from scraping by blocking frequent requests from the same IP address. You could connect a proxy yourself, but we’ll use Scrape-it.Cloud's web scraping API, which rotates proxies and runs Puppeteer in the cloud.

Using Scrape-it.Cloud also spares us from running headless browsers locally or on our own server. Running them ourselves burdens the system, which becomes a problem if you want to scrape quickly using multiple threads.

https://github.com/puppeteer/puppeteer/issues?q=CPU+usage+

Scrape Yelp with Scrape-it.Cloud

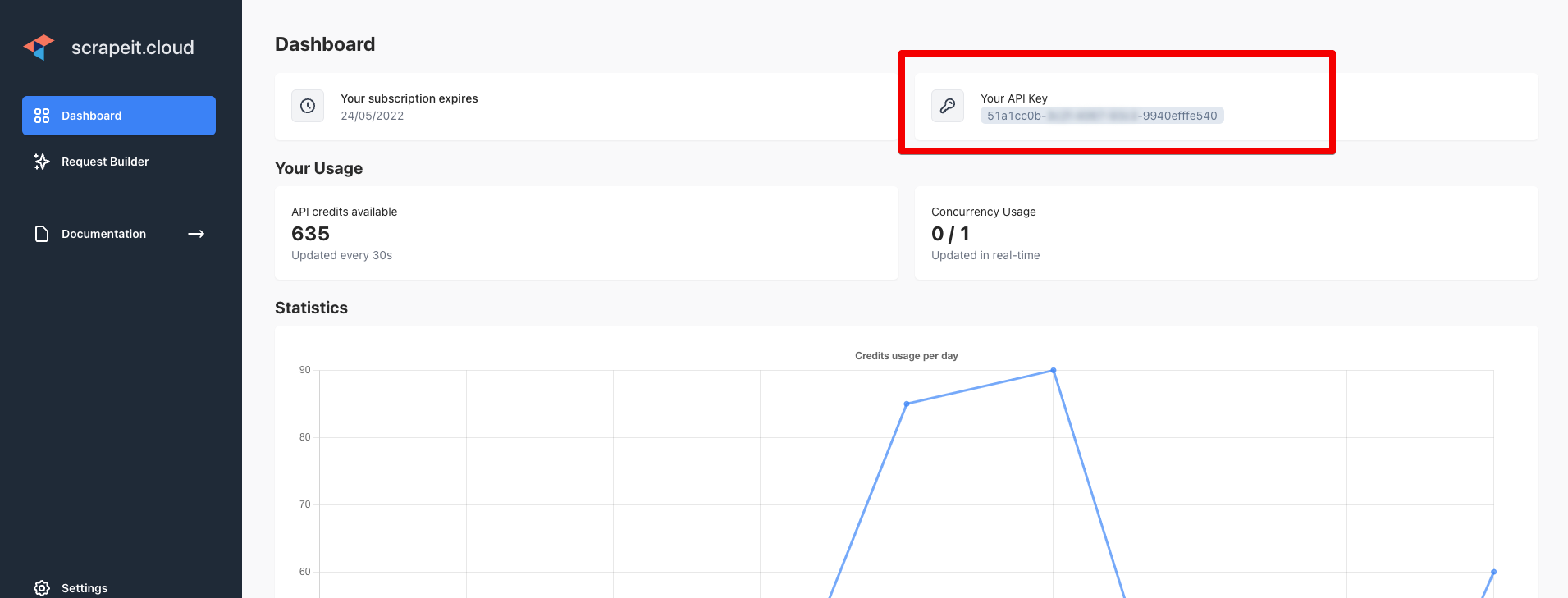

Sign up at HasData and copy the API key from the Dashboard page.

Install axios to make requests to Scrape-it.Cloud.

npm i axios

Rewriting the YelpScraper

// yelp-scraper.js

import axios from "axios";

class YelpScraper {

async scrape(url) {

const data = JSON.stringify({

"url": url,

"window_width": 1920,

"window_height": 1080,

"block_resources": false,

"wait": 0,

"proxy_country": "US",

"proxy_type": "datacenter"

});

const config = {

method: 'post',

url: 'https://api.scrape-it.cloud/scrape',

headers: {

'x-api-key': 'YOUR_API_KEY',

'Content-Type': 'application/json'

},

data : data

};

try {

let response = await axios(config);

if (response.data.status === 'error') {

console.log('1 attempt error');

response = await axios(config);

}

return response.data.scrapingResult.content;

} catch(e) {

console.log(e.message);

return '';

}

}

}

export default YelpScraper;

First, specify the parameters for the Scrape-it.Cloud API. With these parameters, Scrape-it.Cloud makes requests from US IP addresses, and each request uses a new IP address. This way we get around the limit on the number of requests from one IP.

const data = JSON.stringify({

"url": url,

"window_width": 1920,

"window_height": 1080,

"block_resources": false,

"wait": 0,

"proxy_country": "US",

"proxy_type": "datacenter"

});

Next come the axios settings. In the headers, we pass the API key copied from the Dashboard page.

const config = {

method: 'post',

url: 'https://api.scrape-it.cloud/scrape',

headers: {

'x-api-key': 'YOUR_API_KEY',

'Content-Type': 'application/json'

},

data : data

};
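Rather than hardcoding the key in the source file, it can be read from the environment. This is a sketch under assumptions: the variable name SCRAPEIT_API_KEY and the helper buildHeaders are illustrative choices, not names required by Scrape-it.Cloud. Only the x-api-key and Content-Type headers come from the config shown in this tutorial.

```javascript
// Build request headers using an API key taken from the environment.
// SCRAPEIT_API_KEY is a hypothetical variable name chosen for this example.
function buildHeaders(env = process.env) {
  const apiKey = env.SCRAPEIT_API_KEY;
  if (!apiKey) {
    throw new Error('Set the SCRAPEIT_API_KEY environment variable first');
  }
  return {
    'x-api-key': apiKey,
    'Content-Type': 'application/json',
  };
}

// Example with an explicit object instead of the real environment.
console.log(buildHeaders({ SCRAPEIT_API_KEY: 'YOUR_API_KEY' }));
```

The script would then be started as SCRAPEIT_API_KEY=YOUR_API_KEY node index.js, which keeps the key out of version control.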

Make a request to the Scrape-it.Cloud API to get the content, and make one more attempt if the request fails. A failure can happen if the IP address used by Scrape-it.Cloud has already been banned.

try {

let response = await axios(config);

if (response.data.status === 'error') {

console.log('1 attempt error');

response = await axios(config);

}

return response.data.scrapingResult.content;

} catch(e) {

console.log(e.message);

return '';

}
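The single retry can be generalized to several attempts with a growing pause between them. The following is a sketch, not part of the Scrape-it.Cloud API: withRetry, backoffDelay, sleep, and their default values are assumptions made for illustration, and the wrapped function is any async function that throws on failure.

```javascript
// Delay before the next attempt: baseMs, 2 * baseMs, 4 * baseMs, ...
function backoffDelay(attempt, baseMs = 500) {
  return baseMs * 2 ** attempt;
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Call fn until it succeeds or maxAttempts is reached, then rethrow the last error.
async function withRetry(fn, maxAttempts = 3, baseMs = 500) {
  let lastError;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await fn(attempt);
    } catch (error) {
      lastError = error;
      // Do not wait after the final failed attempt.
      if (attempt < maxAttempts - 1) {
        await sleep(backoffDelay(attempt, baseMs));
      }
    }
  }
  throw lastError;
}

// Demo: fails twice, then succeeds on the third attempt.
let calls = 0;
withRetry(async () => {
  calls += 1;
  if (calls < 3) throw new Error(`attempt ${calls} failed`);
  return 'content';
}, 3, 10).then((result) => console.log(result, 'after', calls, 'calls'));
```

Inside scrape, the axios call could be wrapped as withRetry(() => axios(config)), with a response whose status is 'error' converted into a thrown error so that it triggers another attempt.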

If you can't get the content using a datacenter proxy, try changing the proxy_type parameter to residential, which routes requests through the IP addresses of real devices:

const data = JSON.stringify({

"url": url,

"window_width": 1920,

"window_height": 1080,

"block_resources": false,

"wait": 0,

"proxy_country": "US",

"proxy_type": "residential"

});

Once done, let’s start the scraper:

node index.js

And we get the same result:

[

{

title: 'Ten Foot Henry',

fullAddress: '1209 1st Street SW, Calgary, AB T2R 0V3',

phone: '(403) 475-5537',

reviewsCount: '406',

rating: 4

},

{

title: 'The Porch',

fullAddress: '730 17th Avenue SW, Calgary, AB T2S 0B7',

phone: '(587) 391-8500',

reviewsCount: '9',

rating: 4

},

{

title: 'Orchard',

fullAddress: '134 - 620 10 Avenue SW, Calgary, AB T2R 1C3',

phone: '(403) 243-2392',

reviewsCount: '12',

rating: 4

},

...

]

Finally

Now, staying off the Internet is not an option, because then you turn your back on a huge group of possible customers.

Web scraping is another step in business development: it adds automation to the data collection process. Its advantage is versatility, and many activities become easier with the right tool.

Thank you for reading to the end. We hope this tutorial is helpful to you.