We have a lot of articles about scraping using Python, but it isn't the only language suitable for data extraction. Therefore, today we will talk about NodeJS scraping.

First, we'll cover what NodeJS is and how to prepare your computer to work with it, and then we'll build a scraper using Axios and Cheerio.

Introduction to NodeJS

NodeJS is not a separate programming language but a runtime environment that lets you run JavaScript outside the browser. It is built on V8, the same engine that powers Chrome.

So it will be easy to get started for those who have already worked with JavaScript on the frontend.

To put it simply, Node.js is a C++ application that takes JavaScript code as input and executes it.

The primary advantage of NodeJS is that it allows you to implement an asynchronous architecture in a single thread. In addition, thanks to an active community, NodeJS has ready-made solutions for all occasions, but we will discuss this later.
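To make the single-threaded asynchronous model more concrete, here is a minimal sketch that uses only built-in timers and promises. The `fakeRequest` helper and its delays are made up for illustration; they stand in for slow operations such as network requests.

```javascript
// Simulate a slow I/O operation (for example, a network request) with a timer.
function fakeRequest(name, delayMs) {
  return new Promise((resolve) => {
    setTimeout(() => resolve(name), delayMs);
  });
}

async function runDemo() {
  const order = [];
  // Start both "requests" at once; neither one blocks the single thread.
  const slow = fakeRequest('slow', 50).then((name) => order.push(name));
  const fast = fakeRequest('fast', 10).then((name) => order.push(name));
  // This line runs immediately, before either timer has fired.
  order.push('sync work');
  await Promise.all([slow, fast]);
  return order;
}

runDemo().then((order) => console.log(order));
// prints [ 'sync work', 'fast', 'slow' ]
```

Both timers wait concurrently, so the whole run takes about as long as the slowest one rather than the sum of the two.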

So let's prepare for scraping:

- Install NodeJS.

- Update NPM.

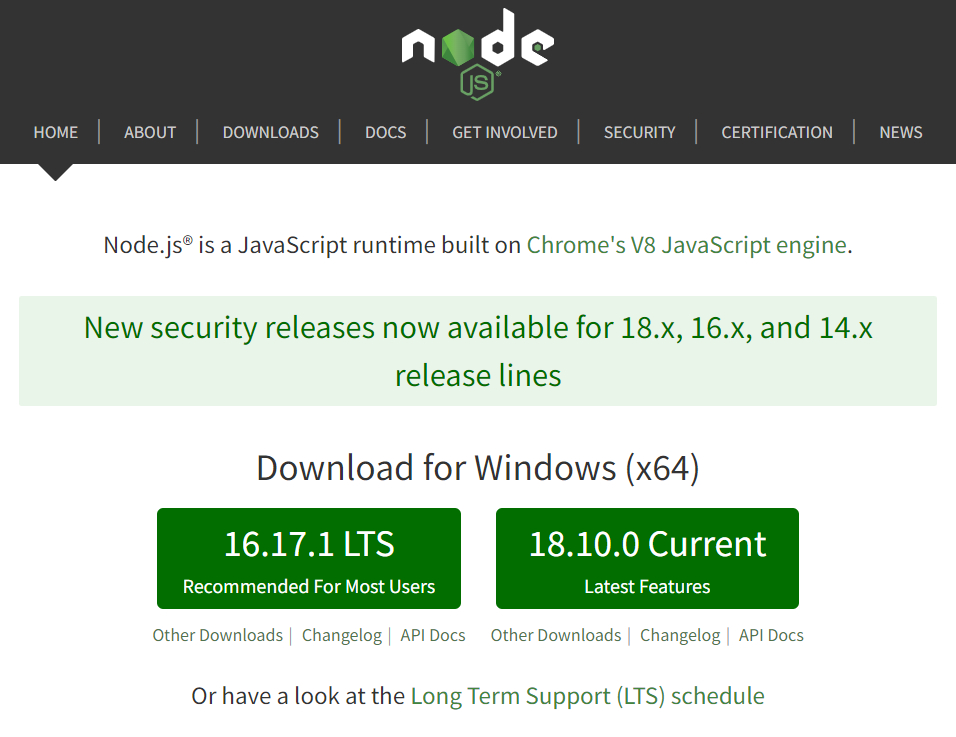

To install NodeJS, visit the official site and download the installation file. At the time of writing, two versions are available:

Version 16.17.1 LTS is the stable release, and 18.9.1 is the latest one. The choice is up to you and will not affect the example in this article.

After downloading and installing the package, check that everything works well. You can do this on the command line using the commands:

node -v

npm -v

To update NPM, you can run the following command:

npm install -g npm

After that, you can start working with NPM packages.

NPM packages or Solutions for All Situations

NPM (Node Package Manager) is the standard package manager for NodeJS. It is used to download packages from the npm registry, and any user can publish their own packages to it. This means that anyone who has written a JavaScript library or tool for their own tasks can upload it to the registry, after which everyone else can download it.

Thanks to this approach, there are many ready-made NPM packages today, and you can find a ready-made solution for almost any problem or task.

To write a simple scraper, we need two of these tools:

- A tool that allows you to execute a request and get the page code.

- A tool that processes the source code and gets specific data.

Let’s try to decide which libraries will be better for us.

Work with Requests

There are two popular ways to send GET and POST requests and get the page code in NodeJS: the Axios library and the Fetch API. Although Axios has its flaws and Fetch is a newer API that avoids some of them, Axios is still more popular, for the following reasons:

- Code using Axios is more concise.

- Axios rejects the promise on HTTP error statuses (such as 404 or 500) by default, while Fetch rejects only on network failures, so the status has to be checked manually.

- Axios can monitor the progress of uploading data, while Fetch has no built-in support for this.
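The difference in error reporting can be sketched as follows. This assumes the global `fetch` that ships with Node.js 18 and later; `getPageText` is a hypothetical helper name, and the `fetchImpl` parameter exists only so that the function can be tried out without a real network call.

```javascript
// Hypothetical helper: download a page as text using the Fetch API.
// fetch() resolves even for 404 or 500 responses, so the status
// has to be checked by hand via `response.ok`.
async function getPageText(url, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url);
  if (!response.ok) {
    throw new Error(`Request to ${url} failed with status ${response.status}`);
  }
  return response.text();
}

// Stand-in for a server that answers 404, used instead of a real request.
const fakeNotFound = async () => ({ ok: false, status: 404, text: async () => '' });

getPageText('https://example.com/missing', fakeNotFound)
  .catch((error) => console.log(error.message));
// prints "Request to https://example.com/missing failed with status 404"
```

With Axios, the equivalent check is not needed for the default configuration, which is part of why Axios code tends to be shorter.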

In addition to these two, Ky (a tiny, well-designed HTTP client based on the Fetch API) and Superagent (a small HTTP client library based on XMLHttpRequest) are also worth mentioning.

For a small project where one of these two libraries covers your needs, it is better to use them.

Parse Library

To date, Cheerio is the most popular parser that creates the DOM tree of the page and allows you to work with it conveniently. Cheerio parses the markup and provides functions to process the received data.

As an alternative to Cheerio, you can use Osmosis, which is similar in functionality but has fewer dependencies.

Creating a project and adding Axios

So, we will use Axios and Cheerio. First, choose a directory for the project and initialize NPM in it: go to the folder where your scraper will live, open the command line there, and enter the command:

npm init -y

This will create a package.json file in the folder that describes our project and lists its dependencies.

Now let's get to the core of the scraper. We will use the example.com page as the target. Axios allows you to make requests and receive data. For this project, we only need HTTP GET requests.

Install the dependency from the command line:

npm install axios

Let's now try to get the page code. To do this, create an index.js file in the same folder. In it, we will import Axios, execute the request, and print the response to the screen:

const axios = require('axios');

const url = 'https://example.com';

axios.get(url)

.then(response => {

console.log(response.data);

})

Finally, we add an error handler at the end of the chain so that, if an error occurs, the program prints it to the screen instead of crashing:

.catch(error => {

console.log(error);

});

To run the project, go to the command line and run the command:

node index.js

The source code of the example.com page will be displayed on the screen:

<!doctype html>

<html>

<head>

<title>Example Domain</title>

<meta charset="utf-8" />

<meta http-equiv="Content-type" content="text/html; charset=utf-8" />

<meta name="viewport" content="width=device-width, initial-scale=1" />

<style type="text/css">

body {

background-color: #f0f0f2;

margin: 0;

padding: 0;

font-family: -apple-system, system-ui, BlinkMacSystemFont, "Segoe UI", "Open Sans", "Helvetica Neue", Helvetica, Arial, sans-serif;

}

div {

width: 600px;

margin: 5em auto;

padding: 2em;

background-color: #fdfdff;

border-radius: 0.5em;

box-shadow: 2px 3px 7px 2px rgba(0,0,0,0.02);

}

a:link, a:visited {

color: #38488f;

text-decoration: none;

}

@media (max-width: 700px) {

div {

margin: 0 auto;

width: auto;

}

}

</style>

</head>

<body>

<div>

<h1>Example Domain</h1>

<p>This domain is for use in illustrative examples in documents. You may use this

domain in literature without prior coordination or asking for permission.</p>

<p><a href="https://www.iana.org/domains/example">More information...</a></p>

</div>

</body>

</html>

Now let's try to get only the title in the <h1> tag.

Processing a request with Cheerio

Cheerio parses HTML and XML markup, so we can use it to get specific data from the source code we just received.

Let's go to the command line and add the dependency:

npm install cheerio

Then include it in index.js:

const cheerio = require('cheerio');

Let's assume that the page can have more than one heading, and we want to get them all:

axios.get(url)

.then(({ data }) => {

const $ = cheerio.load(data);

const title = $('h1')

.map((_, titles) => {

const $titles = $(titles);

return $titles.text()

})

.toArray();

console.log(title)

});

You can set up more complex queries or select specific data using CSS selectors.

Read more about CSS selectors: "The Ultimate CSS Selectors Cheat Sheet for Web Scraping."

Example vs. Reality

Our code worked on a simple HTML page, but most sites are not static: their content is generated dynamically. Moreover, if we scrape a site constantly, we may get banned or be asked to solve a captcha. Such a simple scraper, unfortunately, will not cope with this.

Each of these problems has its own solution: headless browsers for dynamic content, proxies for bans, and captcha-solving services for captchas. But there is an even simpler option: a web scraping API, which handles all of these difficulties.

Let's explore the medium.com home page and get the article titles from it using the web scraping API and NodeJS. To use the API, register at Scrape-It.Cloud and copy the API key from your account. We will use it later.

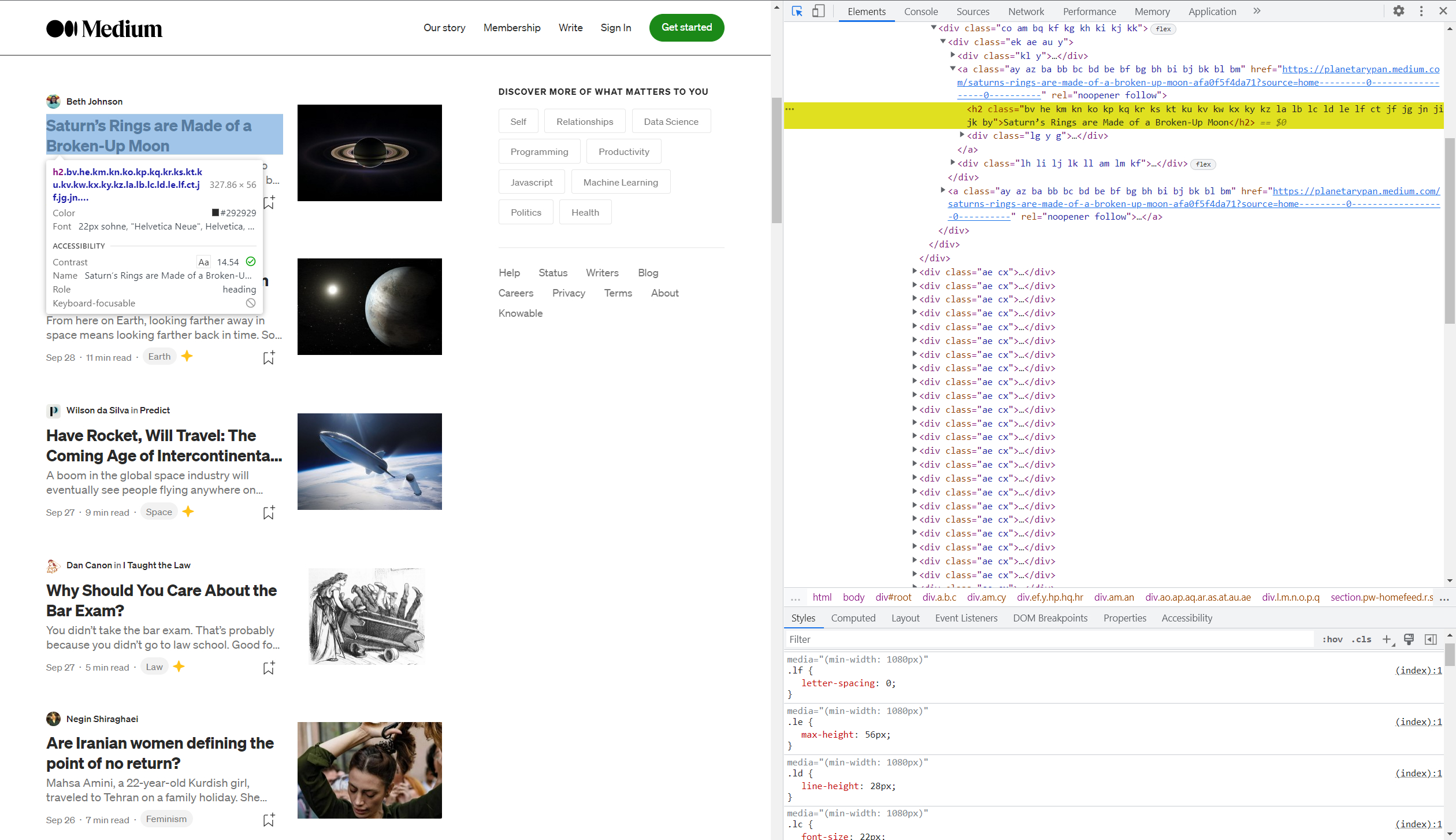

To understand which selectors contain the information we need, open the site and then open the developer tools, or DevTools (F12).

If you haven't worked with DevTools before, we recommend reading our article: «5 Chrome DevTools Tips & Tricks for Scraping».

Once DevTools is open, press Shift+Ctrl+C to be able to select an element on the page and view its code, then click the title of any article.

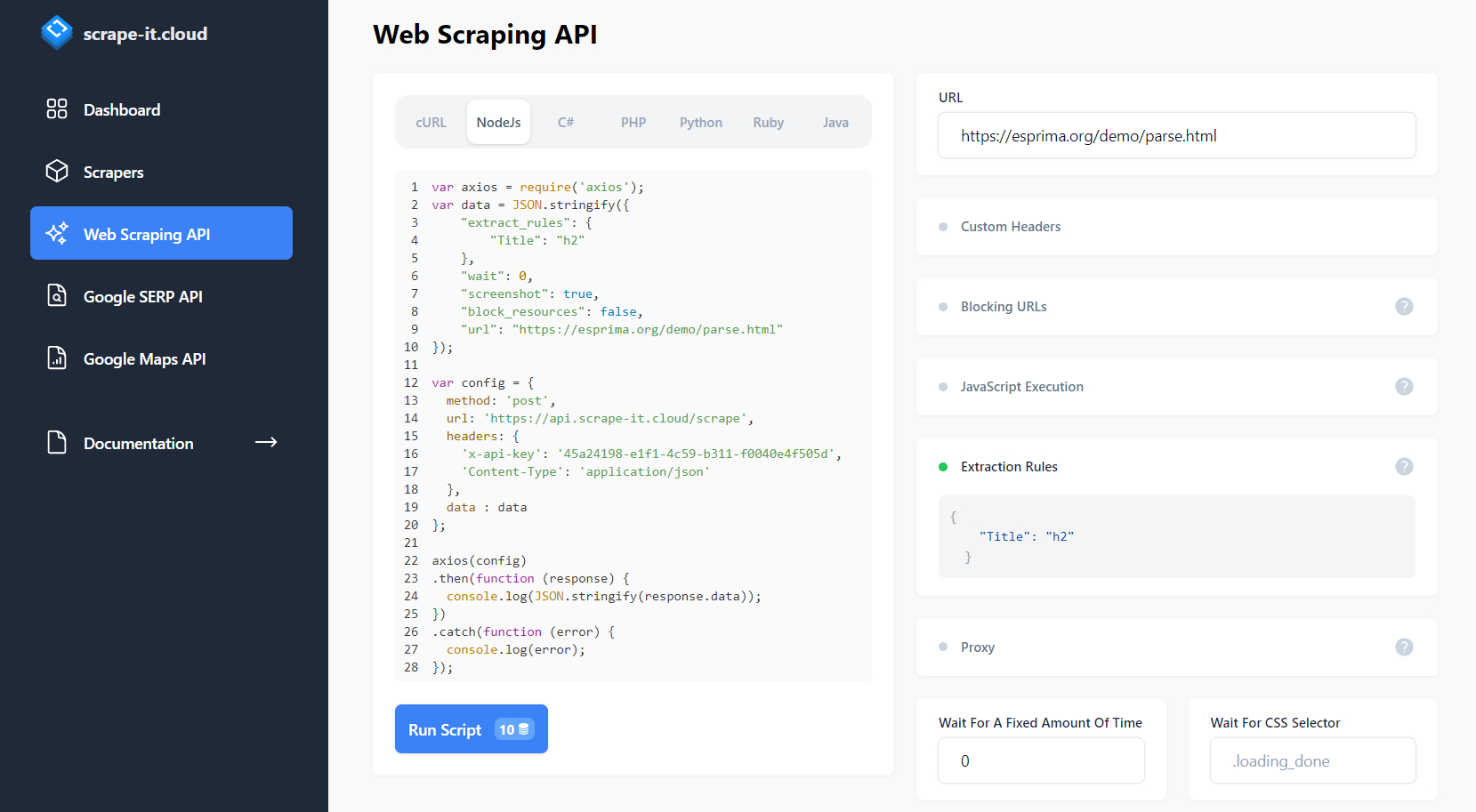

The code of this element is highlighted on the right side of the window. Great, now let's go to the scrape-it.cloud site and open the Web Scraping API tab. In the request builder, select NodeJS and get ready-made code for page scraping.

However, this is not enough. Let's set up our request so that it returns precisely the titles of the articles that are stored in the h2 tag. To do this, we use Extraction Rules.

We can run the resulting script from the site or save it to a file. Let's save it to a file and change the result output line (line 24) to the following code:

console.log(JSON.stringify(response.data.scrapingResult.extractedData.Title));

This makes the script print only the titles of the articles. Running it gives the following list:

[

"Saturn’s Rings are Made of a Broken-Up Moon",

"What does Earth look like from across the Universe?",

"Have Rocket, Will Travel: The Coming Age of Intercontinental Spaceflight",

"Why Should You Care About the Bar Exam?",

"Are Iranian women defining the point of no return?",

"Corn is in Nearly Everything We Eat. Does It Matter?",

"Health Care Roulette",

"America’s Brief Experiment With Universal Healthcare Is Ending",

"There is More than Meets the Eye in Patagonia’s Bold Activism",

"It’s What You See",

"How Civil Rights Groups are Pushing Back Against Amazon’s New Show",

"Why “Microhistories” Rock",

"How to Be a Tourist",

"You Don’t Feel My Pain",

"The Servitude Of Our Times",

"10 Mental Models for Learning Anything",

"The Joy of Repairing Laptops",

"Why Adnan Syed Is Free"

]

Scraping this way is safe and easy: it helps avoid blocks and handles dynamic data.
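The nested path in the `console.log` line above assumes that every level of the response is always present. A more defensive sketch is shown below. The `extractTitles` name is hypothetical, and the response shape is inferred only from the path used in this article, not from the API documentation.

```javascript
// Hypothetical helper: safely read the results of the "Title" extraction rule.
// `responseData` corresponds to `response.data` in the Axios callback.
function extractTitles(responseData) {
  const titles = responseData?.scrapingResult?.extractedData?.Title;
  // Fall back to an empty list if any level of the path is missing.
  return Array.isArray(titles) ? titles : [];
}

// Mock object mimicking the response shape used in this article.
const mockResponseData = {
  scrapingResult: {
    extractedData: { Title: ['How to Be a Tourist', 'Health Care Roulette'] },
  },
};

console.log(extractTitles(mockResponseData)); // [ 'How to Be a Tourist', 'Health Care Roulette' ]
console.log(extractTitles({}));               // []
```

Returning an empty array instead of throwing keeps the rest of the script simple when a page has no matching elements.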

Conclusion and Takeaways

NodeJS is a very flexible and user-friendly data scraping tool that allows you to scrape data from various sites quickly and efficiently.

Full example code without API:

const axios = require('axios');

const cheerio = require('cheerio');

const url = 'https://example.com';

axios.get(url)

.then(({ data }) => {

const $ = cheerio.load(data);

const title = $('h1')

.map((_, titles) => {

const $titles = $(titles);

return $titles.text()

})

.toArray();

console.log(title)

})

.catch(error => {

console.log(error);

});
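The `.catch` handler shown earlier in this article prints the raw error object, which can be noisy. As a sketch, the error can be summarized first. The `describeRequestError` function is a hypothetical helper; it relies on the `response` and `request` properties that Axios attaches to its errors, and it is exercised here with plain mock objects rather than real requests.

```javascript
// Hypothetical helper: turn an Axios error into a short, readable message.
function describeRequestError(error) {
  if (error.response) {
    // The server replied, but with a status outside the 2xx range.
    return `Server responded with status ${error.response.status}`;
  }
  if (error.request) {
    // The request was sent, but no response arrived (DNS failure, timeout, ...).
    return 'No response received from the server';
  }
  // Something went wrong before the request was sent.
  return `Request could not be sent: ${error.message}`;
}

// Mock errors imitating the three cases.
console.log(describeRequestError({ response: { status: 404 } }));
console.log(describeRequestError({ request: {} }));
console.log(describeRequestError(new Error('Invalid URL')));
```

In the scraper, it could be plugged in as `.catch(error => console.log(describeRequestError(error)))`.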

Full example code with API:

var axios = require('axios');

var data = JSON.stringify({

"extract_rules": {

"Title": "h2"

},

"wait": 0,

"screenshot": true,

"block_resources": false,

"url": "https://medium.com/"

});

var config = {

method: 'post',

url: 'https://api.scrape-it.cloud/scrape',

headers: {

'x-api-key': 'YOUR-API-KEY',

'Content-Type': 'application/json'

},

data : data

};

axios(config)

.then(function (response) {

console.log(response.data.scrapingResult.extractedData.Title);

})

.catch(function (error) {

console.log(error);

});

If the topic interests you, you can find out more in the articles “Web Scraping with node.js: How to Leverage the power of JavaScript” and “How to scrape Yelp using NodeJS.”