Imagine a vast shopping mall stocked with products from around the world, from the latest gadgets to fashion items. That is a fair description of Google Shopping.

With scraping, you can extract, analyze and leverage the information in each product listing and gain insights to make smarter business decisions, employ innovative marketing strategies, and empower consumers.

In this article, we'll look at the benefits of scraping Google Shopping and the tools you can use to get the data you need.

Google Shopping Scraping Benefits

Scraping data from Google Shopping can be useful for several reasons, from analyzing a market to finding new business opportunities. The data helps you track market trends and follow competitors' actions, and it is also helpful for market research and price monitoring.

With Google Shopping scraping, you can track and analyze consumer prices and build new marketing strategies on that data. This way, you not only improve your pricing but also spot trends in time.

Understanding Google Shopping Page

To extract data from Google Shopping, we first need to study the page and decide which elements to scrape. To do this, inspect the HTML structure of the page and work out the CSS selectors you need.

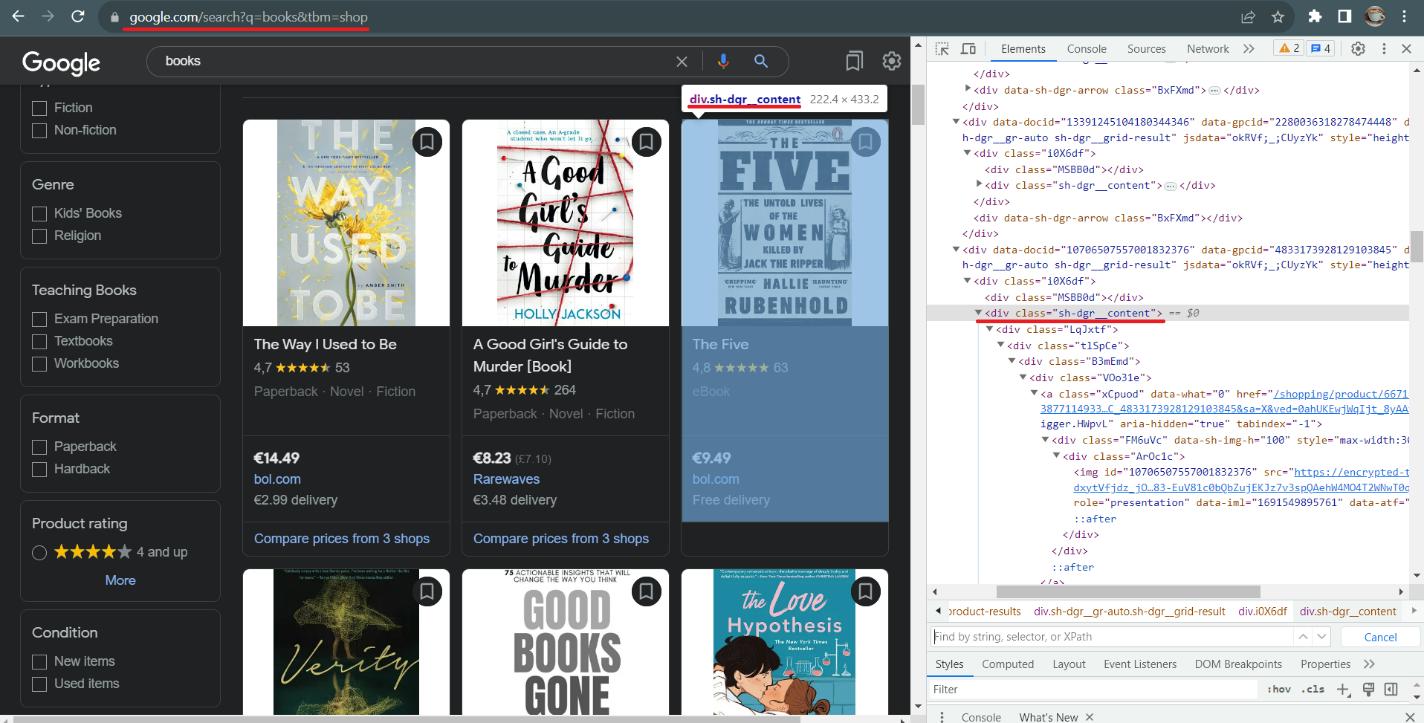

Let's start with the Google Shopping page and then go to the product page to inspect the available data. Pay attention to this page:

The first thing to look at is the link. Its structure is simple enough that you can compose links directly: all you need to do is replace the keyword. Google supports several parameters that can be passed through the link, but only two are required here: the keyword (q) and the search type (tbm=shop), which shows that we are looking for data in the shopping section.
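
As a minimal sketch of composing such a link in Python, the snippet below builds the URL from a keyword using only the standard library. The function name build_shopping_url is a hypothetical helper introduced for this example; the two query parameters are the ones described above.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.google.com/search"


def build_shopping_url(keyword):
    """Return a Google Shopping search URL for the given keyword."""
    # q is the search keyword; tbm=shop selects the shopping section
    query = urlencode({"q": keyword, "tbm": "shop"})
    return f"{BASE_URL}?{query}"


print(build_shopping_url("books"))
print(build_shopping_url("coffee maker"))
```

urlencode also takes care of spaces and special characters, so multi-word keywords remain valid in the link.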

Next, open DevTools (press F12, or right-click on the page and select Inspect) and explore the page. In this case, all products have the class "sh-dgr__content", so we can get the data for each product by addressing it with a CSS selector. Let's look at one product to see which tags hold the information.

The most straightforward data to extract is the image (the src attribute of the img tag), the title (the h3 tag), and the link to the product page (the href attribute of the a tag). The rest of the data sits in div tags whose class names are generated automatically and change after the page is refreshed, so we can't rely on them. Instead, we can reach that data with CSS selectors for child or neighboring elements.

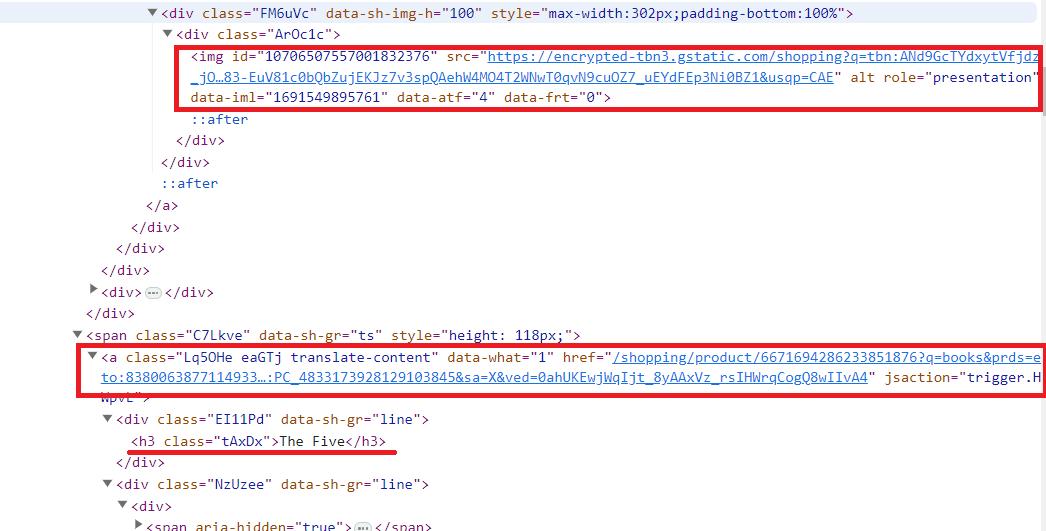

Now go to some product page and explore it in the same fashion as the page before.

From this page, we can get more detailed information about the product:

- Product name.

- Rating and number of reviews.

- Prices in different stores.

- Description.

- Additional product details (in our case, genre, ISBN, author, and number of pages).

- Reviews.

- Related Products.

Typically, the Google Shopping results page is enough to get basic product information. Still, if you want to know everything about a product, you can get that information from the product page.

Get Data with HasData Request Builder

Before scraping data in Python using APIs or libraries, we'd like to show you how you can quickly and easily get JSON with the required data in just a couple of clicks.

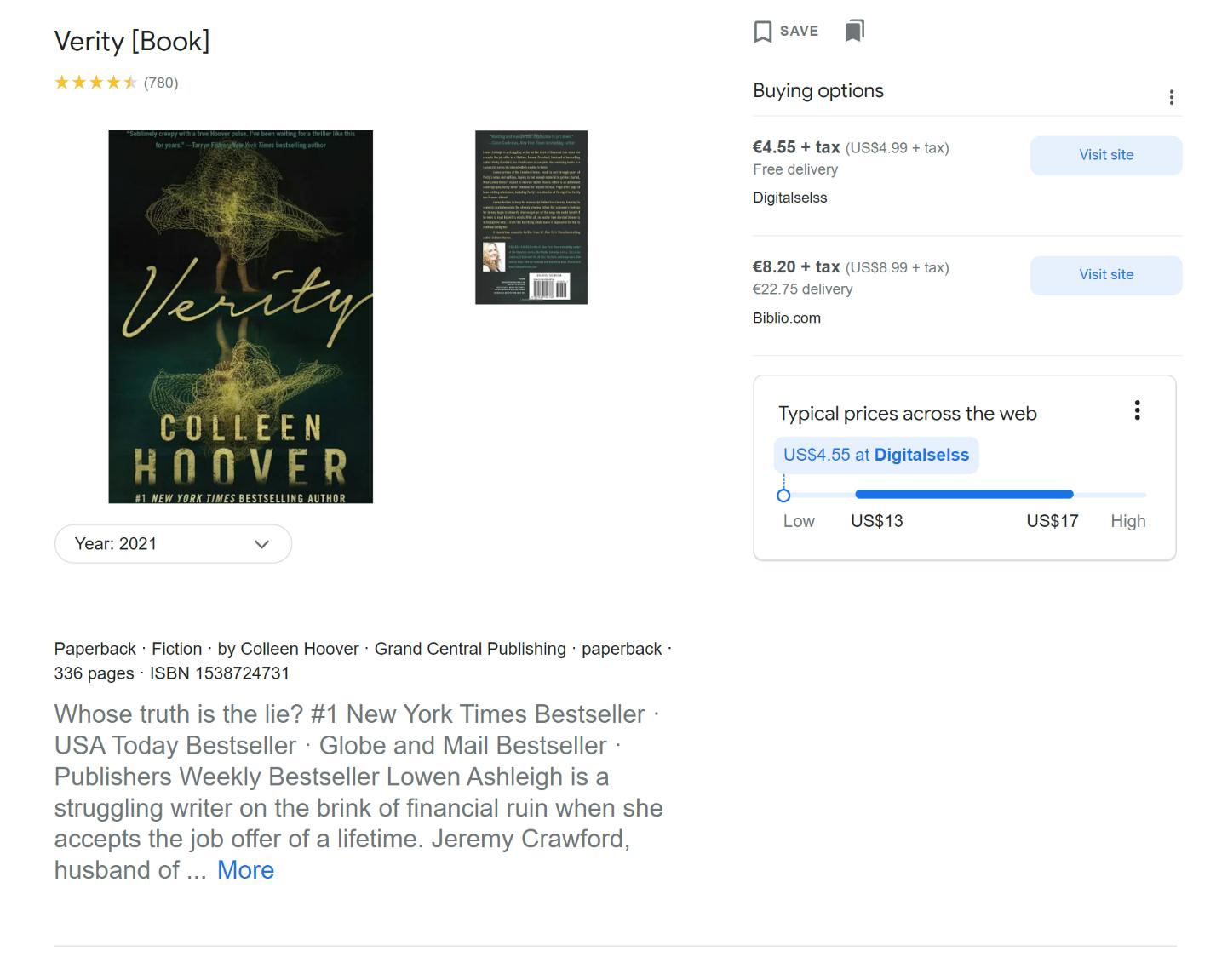

Sign up at HasData and go to the Google SERP API section. When you sign up, you get 1000 free credits, so you can try the tool and decide if it's right for you.

You can find settings on the Google SERP API page that can help to make your query more accurate. There is also a code viewer here, which will allow you to copy the code to execute the generated query in one of the popular programming languages.

Try to create your first query. To do this, customize the location and localization.

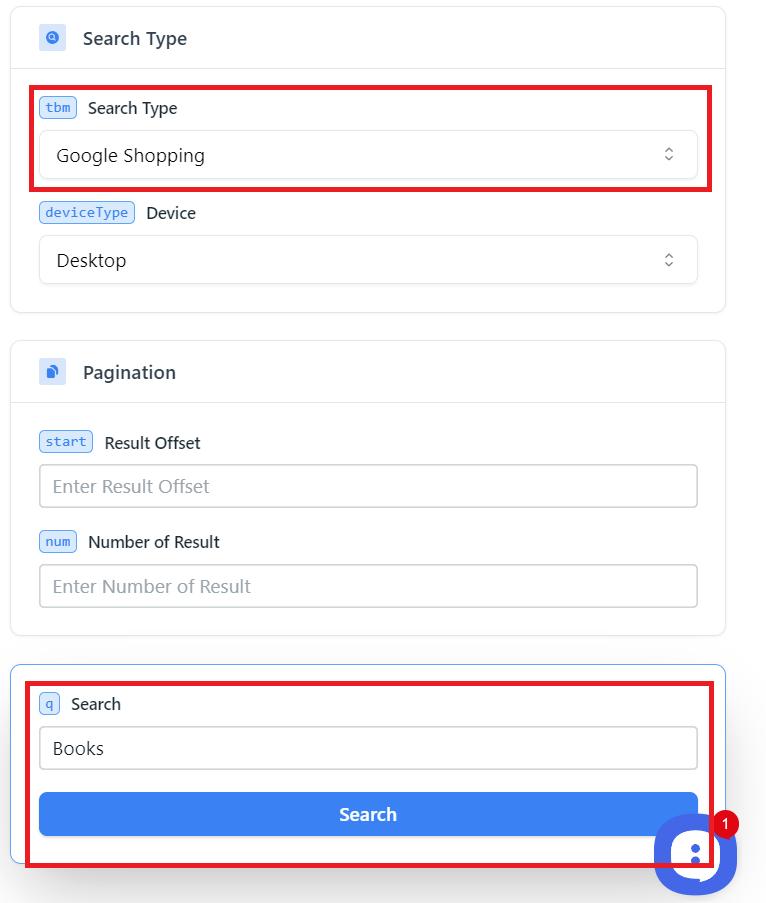

Then choose your query type, Google Shopping, to gather data from there. You can also collect data from Google Images, News, SERP (search engine results pages), Videos, and Locals. The additional settings have intuitive names, so you can adjust them as needed. If you have questions about any parameter, see the descriptions on the documentation page.

When all parameters are filled in, specify the keyword and click the Search button.

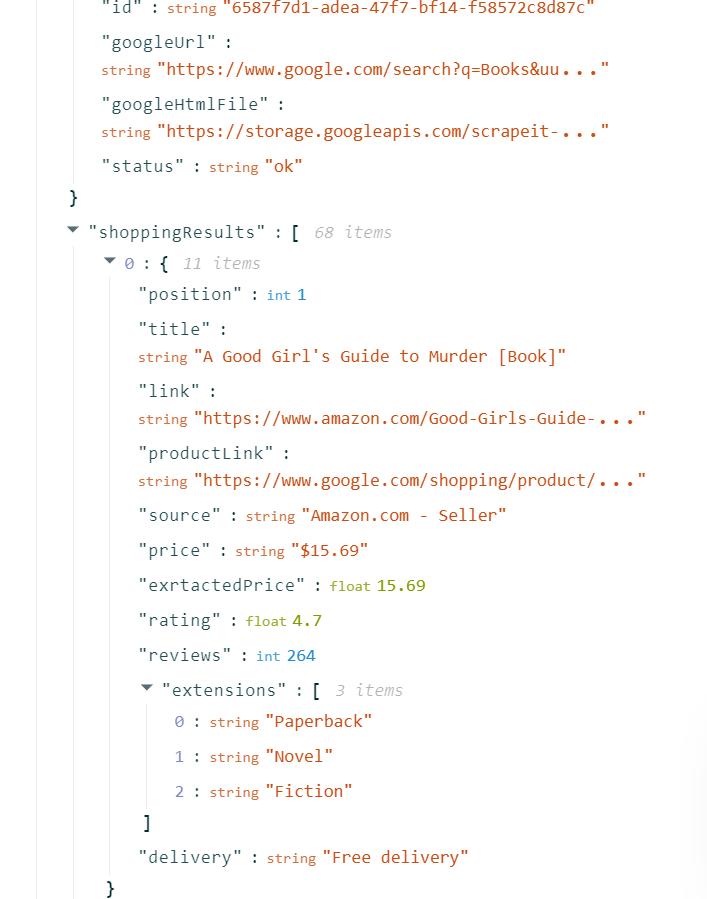

The result is a JSON response that you can use, convert to a convenient format, or import into Excel.

This way, you get the product data you need without writing a single line of code and in the shortest possible time.

Scraping Product Data with Google Shopping API & Python

Now let's use HasData's Google Shopping API, get the required data into a Python script, and then save it in *.xlsx format.

Get your API key

To get your API key, go back to the HasData app and open the dashboard tab in your account.

There you can also see your credit usage statistics, a list of recently used no-code scrapers, and your credit balance.

Use API in your Script

Create a new file with the *.py extension. We will use the requests library to send requests to the API and the pandas library to save the results in *.xlsx or *.csv format. To install them, run these commands in the command prompt (pandas needs the openpyxl package to write *.xlsx files):

    pip install requests
    pip install pandas
    pip install openpyxl

Then import these libraries into your file:

    import requests
    import pandas as pd

Specify the variables you will use. This is handy if you want to reuse the same script to collect data for several keywords, for example.

    keyword = 'Books'
    api_url = 'https://api.hasdata.com/scrape/google'

Now set the header with your API key and form the request's body. You can use these Google Shopping parameters:

| Category | Parameter | Description | Example |
|---|---|---|---|
| Search Query | q | Required. Query you want to search. | q=Coffee |
| Geographic Location | location | Optional. Google canonical location. | location=New York, USA |
| Geographic Location | uule | Optional. Encoded location for search. | uule=SomeEncodedLocation |
| Localization | google_domain | Optional. Google domain to use. | google_domain=google.co.uk |
| Localization | gl | Optional. Country for the search. | gl=us |
| Localization | hl | Optional. Language for the search. | hl=en |
| Localization | lr | Optional. Limit search to a specific language. | lr=lang_fr |
| Advanced Google Params | ludocid | Optional. Google My Business listing ID. | ludocid=SomeID |
| Advanced Google Params | lsig | Optional. Knowledge graph map view ID. | lsig=SomeID |
| Advanced Google Params | kgmid | Optional. Google Knowledge Graph listing ID. | kgmid=SomeID |
| Advanced Google Params | si | Optional. Cached search parameters ID. | si=SomeID |
| Advanced Filters | tbs | Optional. Advanced search parameters. | tbs=SomeParameters |
| Advanced Filters | safe | Optional. Level of filtering for adult content. | safe=active |
| Advanced Filters | nfpr | Optional. Exclude auto-corrected results. | nfpr=1 |
| Advanced Filters | filter | Optional. Enable/disable filters. | filter=0 |
| Search Type | tbm | Optional. Type of search. | tbm=shop |
| Pagination | start | Optional. Result offset. | start=10 |
| Pagination | num | Optional. Max number of results. | num=20 |
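
To illustrate how the parameters from the table can be combined, here is a small sketch that assembles a params dictionary and leaves out any optional value that was not set. The helper name build_params and its argument list are assumptions made for this example, not part of the HasData API.

```python
def build_params(keyword, location=None, gl=None, hl=None, start=None, num=None):
    """Assemble query parameters for a Google Shopping request."""
    params = {
        "q": keyword,    # required: the search query
        "tbm": "shop",   # search type: shopping results
        "location": location,
        "gl": gl,
        "hl": hl,
        "start": start,
        "num": num,
    }
    # Drop optional parameters that were not provided
    return {name: value for name, value in params.items() if value is not None}


print(build_params("Coffee", gl="us", hl="en", num=20))
```

The resulting dictionary can be passed as the params argument of requests.get, so only the values you actually set end up in the request.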

You can specify many more parameters if needed; we only use a few:

    headers = {'x-api-key': 'PUT-YOUR-API-KEY'}
    params = {
        'q': keyword,
        'domain': 'google.com',
        'tbm': 'shop'
    }

Now run the query by collecting all the data together. To avoid interrupting the script because of errors, use the try..except block:

    try:
        response = requests.get(api_url, params=params, headers=headers)
        if response.status_code == 200:
            # Your code for processing the response goes here
            pass
    except Exception as e:
        print('Error:', e)

Inside the above condition, let's extract the required data in JSON format. If you look at the screenshot of the JSON response in the previous example, the API returns the required data in the "shoppingResults" parameter.

    data = response.json()
    shopping = data['shoppingResults']

Finally, we use pandas to save the data in the desired form.

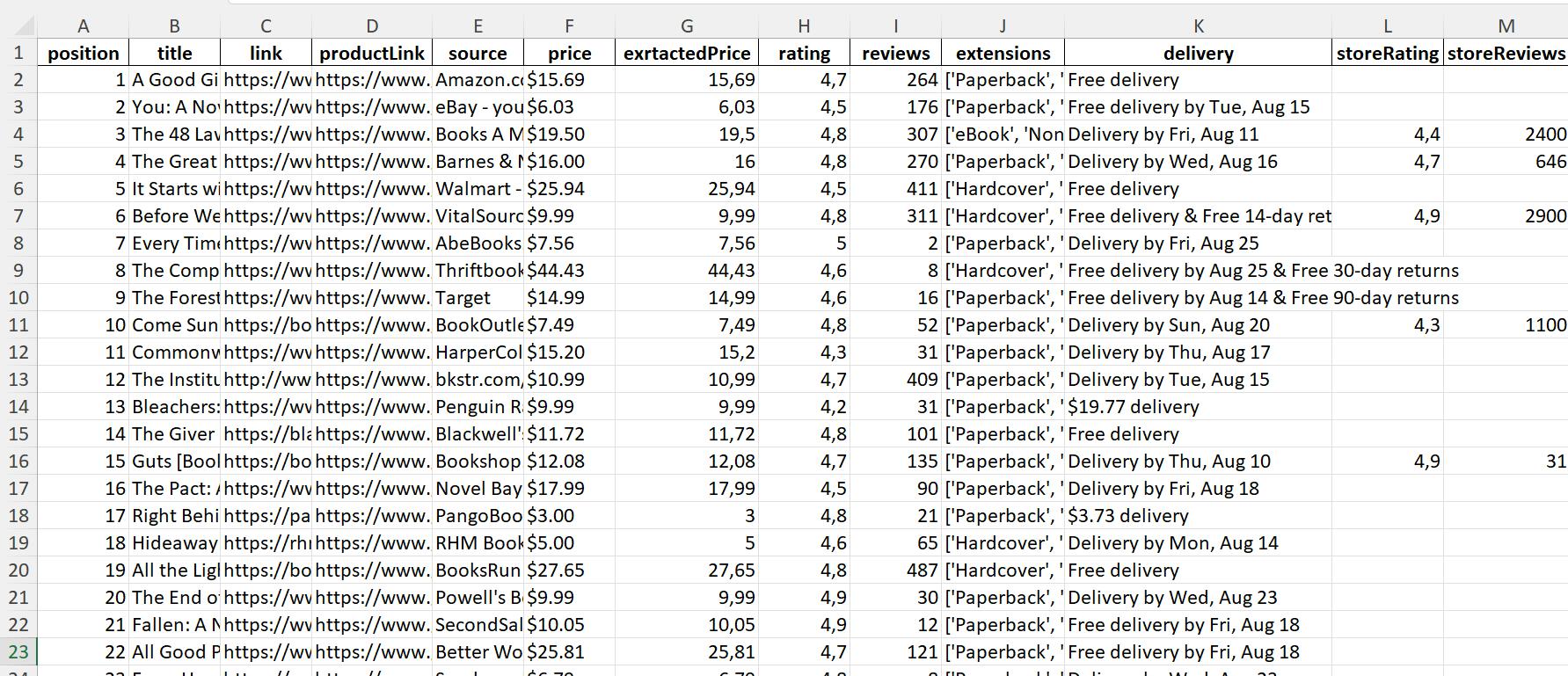

    df = pd.DataFrame(shopping)
    df.to_excel("shopping_result.xlsx", index=False)

As a result, we get this file, with the column headers created automatically and the data saved under them. Even if the content of shoppingResults changes in the future, the column headers will still be taken from the JSON keys. Here we get the product position, title, link, rating, reviews, and other product information.
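
Note that the direct lookup data['shoppingResults'] raises a KeyError if the key is ever missing from a response. A slightly more defensive variant could look like the sketch below. The function name is our own, and the sample dictionary is made up for illustration and only mimics the fields mentioned above.

```python
def extract_shopping_results(data):
    """Return the list of products from a parsed API response, or [] if absent."""
    results = data.get("shoppingResults")
    # Treat a missing key or an unexpected type as "no results"
    return results if isinstance(results, list) else []


# Hypothetical response fragment, for illustration only
sample = {
    "shoppingResults": [
        {"position": 1, "title": "Example Book", "rating": 4.5, "reviews": 120},
    ]
}

print(len(extract_shopping_results(sample)))
print(extract_shopping_results({}))
```

An empty list still produces a valid, empty DataFrame, so the rest of the script keeps working when nothing is returned.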

Full code:

    import requests
    import pandas as pd

    keyword = 'Books'
    api_url = 'https://api.hasdata.com/scrape/google'

    # Replace with the API key from your HasData dashboard
    headers = {'x-api-key': 'YOUR-API-KEY'}

    params = {
        'q': keyword,
        'domain': 'google.com',
        'tbm': 'shop'
    }

    try:
        response = requests.get(api_url, params=params, headers=headers)
        if response.status_code == 200:
            data = response.json()
            shopping = data['shoppingResults']
            # Column headers are taken from the keys of the JSON objects
            df = pd.DataFrame(shopping)
            df.to_excel("shopping_result.xlsx", index=False)
        else:
            print('Request failed with status code:', response.status_code)
    except Exception as e:
        print('Error:', e)

If you don't want to use pandas, you can create the file, name the columns, and save the data line by line.
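
As a rough sketch of that approach, the standard csv module can write the header row and then each product as its own line. The sample products and the column names below are assumptions for illustration; in the real script, the list would come from shoppingResults.

```python
import csv


def save_to_csv(products, path, columns):
    """Write the chosen columns of each product to a CSV file, one row per product."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        # extrasaction="ignore" skips keys that are not listed in columns;
        # restval="" fills in columns that a product does not have
        writer = csv.DictWriter(f, fieldnames=columns, extrasaction="ignore", restval="")
        writer.writeheader()
        for product in products:
            writer.writerow(product)


# Hypothetical data, for illustration only
products = [
    {"position": 1, "title": "Example Book", "link": "https://example.com/1", "rating": 4.5},
    {"position": 2, "title": "Another Book", "link": "https://example.com/2"},
]

save_to_csv(products, "shopping_result.csv", ["position", "title", "link", "rating"])
```

Listing the columns explicitly keeps the file layout stable even when individual products lack some fields.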

Tools and Libraries for Scraping Google Shopping Results

Using an API dramatically simplifies scraping because the hardest part is handled by the service that provides the API. It takes care of JavaScript rendering, bypassing blocks and CAPTCHAs, and much more, and returns well-structured data in a convenient format.

Using an API to scrape data may seem expensive, but it can save you time and money in the long run. Building a custom scraper looks cheaper at first, but managing proxy rotation, HTML parsing, and consistent extraction can be hard. Extra challenges such as CAPTCHAs and blocks can consume so many resources that they cancel out the initial savings of running your own scraper. In these cases, a dedicated scraping service is often more efficient and less of a hassle overall.

Let’s make a Google Shopping data scraper in Python using BeautifulSoup or Selenium libraries.

Scrape Google Shopping using BeautifulSoup on Python

We will use the BeautifulSoup library to parse a page in this example. It is pretty simple and is perfect even for beginners. However, it does not have such features as a headless browser or a simulation of user behavior, such as navigating to a new page or filling in fields.

First, install the BeautifulSoup library. Also, we will use the previously installed Requests library to execute queries.

    pip install bs4

Now create a new file with the *.py extension and import the libraries into it:

    from bs4 import BeautifulSoup
    import requests

Specify the link to your Google Shopping page:

    url = 'https://www.google.com/search?q=books&tbm=shop'

You can use variables to build the links you need. In this case, put the keywords in a file or in a variable, then go through them one by one.
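
For instance, a list of keywords can be turned into a list of links as sketched below. The keyword values are placeholders; quote_plus from the standard library encodes spaces and special characters so that multi-word keywords stay valid in the URL.

```python
from urllib.parse import quote_plus

keywords = ["books", "board games", "usb-c cable"]

# Build one Google Shopping link per keyword
urls = [
    f"https://www.google.com/search?q={quote_plus(keyword)}&tbm=shop"
    for keyword in keywords
]

for url in urls:
    print(url)
```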

Now specify headers to reduce the risk of blocking. It is best to go to your own browser and use your User Agent.

    headers = {
        'User-Agent': 'Mozilla/5.0 (iPhone; CPU iPhone OS 13_2_3 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.0.3 Mobile/15E148 Safari/604.1',
        'Accept-Language': 'en-US,en;q=0.5'
    }

The only thing left is to run the query and process the resulting data. To get the HTML code of the page, we use a GET request:

    response = requests.get(url, headers=headers)

Now, if the request is successful, let's create a BeautifulSoup object and parse the page:

    if response.status_code == 200:
        html_content = response.text
        soup = BeautifulSoup(html_content, 'html.parser')

To go through all the elements individually, let's find all of them first.

        content_elements = soup.find_all('div', class_='sh-dgr__content')

Now let's use a for loop to go through all the items found:

        for content_element in content_elements:

In the loop, let's specify the CSS selectors of the elements we need to find for each product. Let's start with the link to the product:

            link = content_element.find('a')['href']
            print("Link:", link)

Now let's get the title and display it on the screen as well:

            title = content_element.find('h3').get_text()
            print("Title:", title)

Now we get the rating. However, since we can't rely on the class names, we use the ordinal number of the required element:

            rating_element = content_element.find_all('span')[1]
            rating = rating_element.get_text()
            print("Rating:", rating)

Finally, we get a description of the product. It is the next span after the rating, that is, its sibling.

            description_element = rating_element.find_next_sibling('span')
            description = description_element.get_text()
            print("Description:", description)

If you want to learn more about CSS selectors, you can use our cheat sheet.
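
Keep in mind that BeautifulSoup's find and find_next_sibling return None when nothing matches, so calling get_text() on the result fails for products that lack a given element. One way to guard against this is a small helper like the one sketched below. The helper name and the default value are our own choices, and the stand-in class only imitates a BeautifulSoup element so that the example runs on its own.

```python
def text_or_default(element, default="N/A"):
    """Return the element's stripped text, or a default if the element is missing."""
    if element is None:
        return default
    return element.get_text(strip=True)


class FakeElement:
    """Stand-in for a BeautifulSoup tag, used only to demonstrate the helper."""

    def __init__(self, text):
        self._text = text

    def get_text(self, strip=False):
        return self._text.strip() if strip else self._text


print(text_or_default(FakeElement("  4.7  ")))  # element found
print(text_or_default(None))                    # element missing
```

In the loop, you would call it as text_or_default(content_element.find('h3')), so a missing element yields a placeholder instead of stopping the script.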

Full code:

    from bs4 import BeautifulSoup
    import requests

    url = 'https://www.google.com/search?q=books&tbm=shop'

    headers = {
        'User-Agent': 'Mozilla/5.0 (iPhone; CPU iPhone OS 13_2_3 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.0.3 Mobile/15E148 Safari/604.1',
        'Accept-Language': 'en-US,en;q=0.5'
    }

    response = requests.get(url, headers=headers)

    if response.status_code == 200:
        html_content = response.text
        soup = BeautifulSoup(html_content, 'html.parser')

        # Every product card on the page has this class
        content_elements = soup.find_all('div', class_='sh-dgr__content')

        for content_element in content_elements:
            link = content_element.find('a')['href']
            print("Link:", link)

            title = content_element.find('h3').get_text()
            print("Title:", title)

            # Class names are auto-generated, so the rating is taken by position
            rating_element = content_element.find_all('span')[1]
            rating = rating_element.get_text()
            print("Rating:", rating)

            # The description is the next span sibling after the rating
            description_element = rating_element.find_next_sibling('span')
            description = description_element.get_text()
            print("Description:", description)

            print("\n")

If you are not satisfied with printing the data to the console, you can use pandas or other tools to save the data in the format you need.
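
One simple alternative to printing, sketched here with only the standard library, is to collect each product into a dictionary and dump the list to a JSON file. The tuples below are made-up placeholders standing in for the values extracted in the loop, and the file name is arbitrary.

```python
import json

rows = []

# In the real script, these values come from the loop over content_elements
scraped = [
    ("https://example.com/1", "Example Book", "4.5", "Paperback"),
    ("https://example.com/2", "Another Book", "4.1", "Hardcover"),
]

for link, title, rating, description in scraped:
    rows.append({
        "link": link,
        "title": title,
        "rating": rating,
        "description": description,
    })

with open("shopping_result.json", "w", encoding="utf-8") as f:
    # ensure_ascii=False keeps non-English product titles readable
    json.dump(rows, f, ensure_ascii=False, indent=2)
```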

Scrape Google Shopping Results with Selenium

The last tool in this article is the Selenium library. It has bindings for many popular programming languages, a very active community, and extensive documentation. Selenium uses a web driver to simulate user behavior and control browsers, including headless ones.

Earlier, we wrote about how to install Selenium and what else you need to use it. We also gave examples of scraping in Python with Selenium: "Web Scraping Using Selenium Python".

To execute the following script, we need a web driver. We will use Chrome. It is essential to make sure that the version of the web driver matches the version of the installed browser.

Now let's create a new *.py file and import the necessary packages:

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

Now provide the path to the previously downloaded webdriver and a link to the Google Shopping page:

    chromedriver_path = 'C://chromedriver.exe'
    url = 'https://www.google.com/search?q=books&tbm=shop'

Create a webdriver handler:

    service = Service(chromedriver_path)
    driver = webdriver.Chrome(service=service)

Finally, we can launch the web browser and navigate to the target page.

    driver.get(url)

Perform the page parsing using the By module and its various locators. We use the same tags as last time but rely on different features of the module to collect data:

    content_elements = driver.find_elements(By.CLASS_NAME, 'sh-dgr__content')

    for content_element in content_elements:
        link_element = content_element.find_element(By.TAG_NAME, 'a')
        link = link_element.get_attribute('href')
        print("Link:", link)

        title_element = content_element.find_element(By.TAG_NAME, 'h3')
        title = title_element.text
        print("Title:", title)

        rating_element = content_element.find_elements(By.TAG_NAME, 'span')[1]
        rating = rating_element.text
        print("Rating:", rating)

        description_element = rating_element.find_element(By.XPATH, './following-sibling::span')
        description = description_element.text
        print("Description:", description)

        print("\n")

At the end, close the webdriver to finish executing the script.

    driver.quit()

Full code:

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

    chromedriver_path = 'C://chromedriver.exe'
    url = 'https://www.google.com/search?q=books&tbm=shop'

    # Start Chrome through the downloaded webdriver and open the page
    service = Service(chromedriver_path)
    driver = webdriver.Chrome(service=service)
    driver.get(url)

    # Every product card on the page has this class
    content_elements = driver.find_elements(By.CLASS_NAME, 'sh-dgr__content')

    for content_element in content_elements:
        link_element = content_element.find_element(By.TAG_NAME, 'a')
        link = link_element.get_attribute('href')
        print("Link:", link)

        title_element = content_element.find_element(By.TAG_NAME, 'h3')
        title = title_element.text
        print("Title:", title)

        # Class names are auto-generated, so the rating is taken by position
        rating_element = content_element.find_elements(By.TAG_NAME, 'span')[1]
        rating = rating_element.text
        print("Rating:", rating)

        # The description is the next span sibling after the rating
        description_element = rating_element.find_element(By.XPATH, './following-sibling::span')
        description = description_element.text
        print("Description:", description)

        print("\n")

    driver.quit()

As you can see, using Selenium to scrape the Google Shopping page is simple. At the same time, Selenium lets you emulate the behavior of a real user, control various elements on the page, and load content rendered with JavaScript.

Conclusion and Takeaways

In this tutorial, we have looked at different ways to scrape Google Shopping search results, both with and without programming skills. If you are familiar with programming but are not ready to parse complex website structures or build your own protection against CAPTCHAs and blocking, you may be better off using the Google Shopping API.