Headless browsers are a crucial component of both web scraping and automated testing. They perform all the usual browser operations, such as loading web pages, executing JavaScript, and interacting with the DOM (Document Object Model), but without any user interface.

This makes them more resource-efficient than regular browsers, consuming less memory and CPU time. Additionally, headless mode operations can be faster since processing and rendering graphics is unnecessary.

In this article, we will explore the benefits of using headless browsers in Python. We will also provide an overview of popular headless browser libraries, including Selenium, Pyppeteer, and others. We will discuss each library's key features and capabilities and offer guidance on selecting the right library for your specific needs.

Choosing a Headless Browser for Python

Selecting a headless browser for Python is an important step in developing programs that interact with web pages without opening a browser window. First, let's look at which headless browsers we can use in Python and which libraries provide them, then compare their capabilities and the factors to consider when choosing one.

Popular headless browsers for Python

Python has several libraries that provide the ability to work with headless browsers. However, the most popular ones are the following:

- Selenium. This is one of the most popular tools for web browser automation. It lets you control various browsers, including Chrome and Firefox, in headless mode. It has extensive functionality and an active user community.

- Pyppeteer. This is a Python wrapper for the well-known Puppeteer library, originally developed for NodeJS and designed to automate the Chrome and Chromium browsers. Pyppeteer provides a simple and convenient interface for controlling the browser in headless mode, including the ability to interact with the DOM and execute JavaScript.

- Playwright. A relatively new tool compared to the previous ones, developed by Microsoft. It supports automating Chromium, Firefox, and WebKit browsers in headless and regular modes. Playwright provides extensive functionality for testing and web scraping and also supports asynchronous execution of operations.

- MechanicalSoup. Unlike the other tools, it does not drive a browser at all: it is built on the Requests and Beautiful Soup libraries, so it can scrape pages without displaying a browser window, but it cannot execute JavaScript.

In addition to the libraries mentioned above, there are others, such as Ghost.py. However, these libraries are less popular, have less active communities, and offer a narrower range of features. Some people also consider Scrapy to be a web scraping library with a headless browser, but it is more suitable for crawling web pages and does not allow you to control any browser.

Comparison of different headless browsers

To compare these libraries more effectively, let's create a table that will provide a clear and concise overview of their capabilities:

| Feature | Pyppeteer | Selenium | Playwright | MechanicalSoup |
|---|---|---|---|---|
| Headless Browser | Yes (Chrome, Chromium) | Yes (Various browsers) | Yes (Chromium, Firefox, WebKit) | No (does not use a browser) |
| Ease of Use | Moderate | Moderate | Moderate | Easy |
| Documentation | Moderate | Extensive | Extensive | Moderate |
| JavaScript Support | Yes | Yes | Yes | No |
| Asynchronous | Yes | No | Yes | No |

Based on our experience, the best libraries for working with headless browsers are Selenium and Pyppeteer.

If you need to work with multiple browsers and are new to web scraping, we recommend starting with Selenium. It's relatively simple to learn and offers many modules for data parsing, as discussed in our tutorial on scraping with Selenium WebDriver.

If you plan to work only with headless Chrome or Chromium browsers, prefer asynchronous tasks, or have experience with Node.js, Pyppeteer might be a better fit. We have also already covered web scraping with Pyppeteer in another article.

Libraries developed after Selenium and Pyppeteer often have similar functionality or may lack some features. Additionally, Selenium and Pyppeteer have variants for most modern programming languages, leading to larger communities, better documentation, and active development.

Getting Started with a Headless Browser in Python

As mentioned, Selenium and Pyppeteer are two excellent libraries for working with headless browsers. While we have dedicated articles for each, this section briefly covers their installation and a basic script that launches the browser and retrieves a page's title. We hope this comparison will help you choose the best library.

Installing a headless browser in Python

Each library has its own installation specifics, so let's consider them in order, starting with Pyppeteer. Although it is modeled on Puppeteer, Pyppeteer is a pure Python port, so you do not need to install NodeJS to use it. Open the terminal and run the following command to install Pyppeteer via pip:

    pip install pyppeteer

The first time it launches a browser, Pyppeteer will try to download Chromium, but you can use a different browser by passing its path to launch() through the executablePath option.

To install Selenium, open the terminal and use the following command:

    pip install selenium

To work with earlier Selenium versions, you will also need a webdriver for your chosen browser. For example, to work with the Chrome browser, you will need ChromeDriver. Make sure that the driver is on your PATH or specify the path to it in the code. It is also important to note that the web driver version must match the version of the installed browser.
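The version-matching requirement can be checked before a script starts. The following is a minimal sketch, assuming dotted version strings in Chrome's usual four-part form; the helper names and the sample version numbers are illustrative and not part of Selenium.

```python
def major_version(version: str) -> int:
    """Return the leading (major) component of a dotted version string."""
    return int(version.strip().split(".")[0])


def major_versions_match(browser_version: str, driver_version: str) -> bool:
    """Compare only the major component of the browser and driver versions."""
    return major_version(browser_version) == major_version(driver_version)


# Illustrative version strings, not read from a real installation.
print(major_versions_match("123.0.6312.86", "123.0.6312.58"))  # True
print(major_versions_match("123.0.6312.86", "122.0.6261.94"))  # False
```

Comparing only the major component is an assumption made for this sketch; check the driver's release notes if you need a stricter rule.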

Writing your first headless browser script

For example, let's create a small script to navigate to this demo website and retrieve the page title. Let's start with the Pyppeteer library. Create a new file with the .py extension and import the necessary libraries:

import asyncio

from pyppeteer import launchDefine a function that utilizes a headless browser and call it asynchronously using the previously imported asyncio library:

async def main():

# Here will be code

asyncio.get_event_loop().run_until_complete(main())Let's improve the main function. First, we'll create a headless browser object:

browser = await launch(headless=True)To use a specific browser file, you need to specify its path:

browser = await launch(headless=True, executablePath='chrome-win/chrome.exe')Then, create a new browser window and tab:

page = await browser.newPage()Go to the demo website page:

await page.goto('https://demo.opencart.com/')Extract the page title:

title = await page.title()Finally, display the output and close the browser:

print("Page title:", title)

await browser.close()The entire code looks like this:

import asyncio

from pyppeteer import launch

async def main():

browser = await launch(headless=True, executablePath='chrome-win/chrome.exe')

page = await browser.newPage()

await page.goto('https://demo.opencart.com/')

title = await page.title()

print("Page title:", title)

await browser.close()

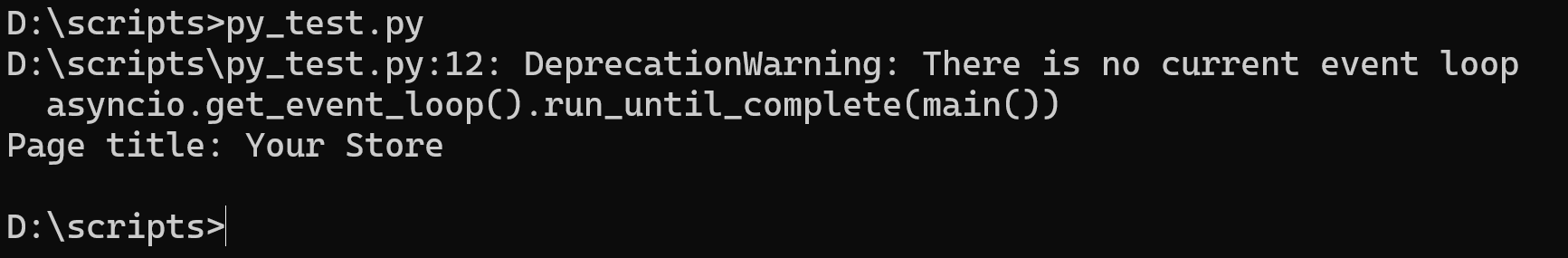

asyncio.get_event_loop().run_until_complete(main())Let's run the script and observe the outcome:

Let's achieve the same using the Selenium library. First, let's import all the required modules:

from selenium import webdriver

from selenium.webdriver.chrome.options import OptionsSpecify headless mode to run the browser in the background without a graphical user interface (GUI):

chrome_options = Options()

chrome_options.add_argument('--headless')Let's instantiate a headless browser object:

driver = webdriver.Chrome(options=chrome_options)This method of creating an object will only work if you are using the latest versions of the library. However, if you have an earlier version of Selenium, you will need to specify the path to the web driver file:

driver = webdriver.Chrome(executable_path='C:\driver\chromedriver.exe', options=chrome_options)Let's navigate to the demo website page and extract its content:

driver.get('https://demo.opencart.com/')Retrieve and display the title:

title = driver.title

print("Page title:", title)Make sure to close the browser at the end:

driver.quit()The full script is as follows:

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

chrome_options = Options()

chrome_options.add_argument('--headless')

driver = webdriver.Chrome(options=chrome_options)

driver.get('https://demo.opencart.com/')

title = driver.title

print("Page title:", title)

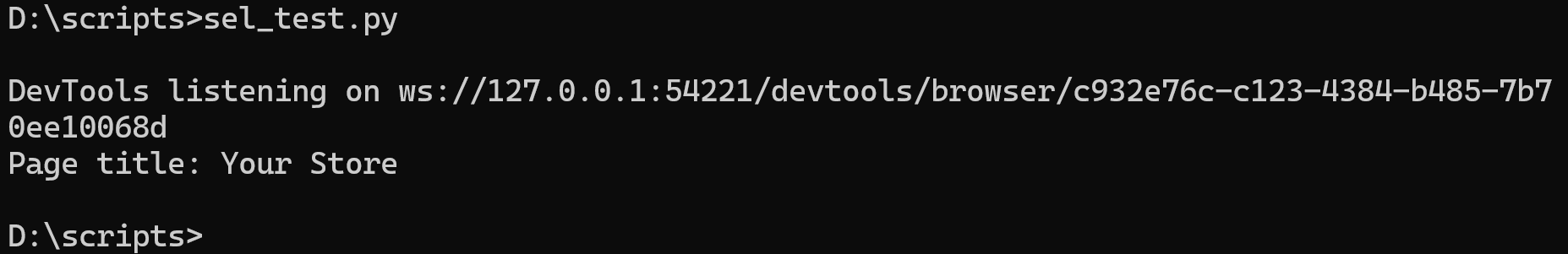

driver.quit()Let's run it and make sure we're getting the same data as we did when using Pyppeteer:

As you can see, both approaches achieved the same result. However, Pyppeteer operates exclusively in asynchronous mode, so using it requires asyncio, which is part of Python's standard library. Selenium, on the other hand, is synchronous and needs no event loop.
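Because Pyppeteer's API is coroutine-based, ordinary synchronous code has to drive it through an event loop. Here is a minimal sketch of that bridge. It uses a stand-in coroutine (fake_get_title, a hypothetical name) in place of a real browser call, so it runs without Pyppeteer installed:

```python
import asyncio


async def fake_get_title(url: str) -> str:
    """Stand-in for a Pyppeteer coroutine that would open a page."""
    await asyncio.sleep(0)  # yield to the event loop, as a real call would
    return f"Title of {url}"


def get_title_sync(url: str) -> str:
    """Run the coroutine to completion from ordinary synchronous code."""
    return asyncio.run(fake_get_title(url))


print(get_title_sync("https://www.example.com"))
```

Replacing fake_get_title with a coroutine that launches a browser gives the rest of a synchronous program a plain function to call.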

Common tasks with headless browsers in Python

These libraries are versatile enough to handle most tasks that call for a headless browser. Let's consider some of them:

- Data scraping. As we showed in the example, headless browsers allow you to automate collecting data from web pages. This can be useful for extracting information for analysis, news aggregation, or price monitoring.

- Testing web applications. Headless browsers are also often used for automated browser testing of web applications, as they allow you to emulate the behavior of real users.

- Taking screenshots. Headless browsers can capture pages automatically, which is useful when you need many screenshots and don't want to take them manually.

These are just some of the common tasks that can be performed using headless browsers in Python. In fact, they can be useful in any task that involves many monotonous actions in the browser. In addition, because such a script drives a real browser, websites are more likely to treat it as a regular user than as a bot, although headless browsers can still be detected.
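As an illustration of the screenshot task, here is a short sketch built on Selenium's save_screenshot() method. The helper names and the screenshots folder are assumptions made for this example, and Selenium is imported inside the function so that the naming helper can be tried on its own.

```python
from pathlib import Path
from urllib.parse import urlparse


def screenshot_name(url: str, folder: str = "screenshots") -> Path:
    """Derive a file name such as screenshots/www.example.com.png from a URL."""
    host = urlparse(url).netloc or "page"
    return Path(folder) / f"{host}.png"


def take_screenshot(url: str) -> Path:
    """Open the page in headless Chrome and save a PNG of the viewport."""
    # Imported here so the helper above works without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    options.add_argument("--headless")
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        target = screenshot_name(url)
        target.parent.mkdir(parents=True, exist_ok=True)
        driver.save_screenshot(str(target))
        return target
    finally:
        # Close the browser even if loading or saving fails.
        driver.quit()
```

Calling take_screenshot('https://demo.opencart.com/') would save the image under the host name of the site.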

Tips and tricks for headless browser development

To help you get more out of headless browsers, we will share some techniques, such as multithreading, asynchronous execution, proxies, and custom profiles, that make your scripts more capable.

Multithreading

Multithreading allows you to execute multiple tasks concurrently in separate threads. In the context of headless browsers, this can be useful if you need to perform several independent actions or tasks on different web pages simultaneously. For example, you can use multithreading to invoke multiple browsers or execute multiple data-scraping scripts in parallel to improve performance.

Pyppeteer does not need multithreading, as its code is inherently asynchronous and can run tasks concurrently. However, multithreading is a great option for Selenium when you want to perform multiple tasks simultaneously.

For example, let's slightly modify the script to get the titles of the demo site and example.com using multithreading. First, let's import all the necessary libraries and modules:

    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    import threading

Create a variable to store a list of websites:

    urls = ['https://www.example.com', 'https://demo.opencart.com/']

Move the previous version of the script into a function that receives the URL as an argument:

    def run_browser(url):
        # Each thread starts its own headless Chrome instance
        chrome_options = Options()
        chrome_options.add_argument('--headless')
        driver = webdriver.Chrome(options=chrome_options)
        driver.get(url)
        title = driver.title
        print("Page title:", title)
        driver.quit()

Create a variable to store threads:

    threads = []

Create and start threads:

    for url in urls:
        thread = threading.Thread(target=run_browser, args=(url,))
        threads.append(thread)
        thread.start()

Now all we have to do is wait for the threads to finish:

    for thread in threads:
        thread.join()

This code waits for all started threads to finish before continuing the execution of the program's main thread.

When threads are created and started, they run concurrently with the program's main thread. To ensure that the main thread does not terminate before all created threads have finished, the join() method is used.
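The same start-then-join pattern can also be written with the standard library's ThreadPoolExecutor, which caps the number of browsers running at once and returns the results instead of printing them. This is a sketch of one possible variant, not the approach used above. The names fetch_title and fetch_all are illustrative, and Selenium is imported lazily so the pool logic can be tried with any function.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def fetch_title(url: str) -> str:
    """Load one page in its own headless Chrome instance and return its title."""
    # Imported lazily so the pool logic below can be tried without Selenium.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    options.add_argument("--headless")
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return driver.title
    finally:
        driver.quit()


def fetch_all(urls: List[str], fetch: Callable[[str], str] = fetch_title,
              max_workers: int = 2) -> Dict[str, str]:
    """Run `fetch` for every URL on a bounded pool and collect the results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so zip pairs each URL with its result.
        return dict(zip(urls, pool.map(fetch, urls)))
```

Calling fetch_all(urls) would open each URL in headless Chrome, at most two at a time. Leaving the with block waits for all workers, which plays the role of join().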

Asynchronicity

Asynchrony enables performing operations without blocking the thread, which is especially useful for tasks that require waiting for long-running operations, such as loading web pages or executing JavaScript.

When working with headless browsers, asynchrony is often used for operations that may take some time, such as waiting for a page to load or making asynchronous requests to the server.

In contrast to multithreading, this is where Pyppeteer excels, while Selenium does not have built-in support for asynchrony.

To scrape data from multiple pages simultaneously using asynchrony, we only need to slightly modify the earlier Pyppeteer script:

    import asyncio

    from pyppeteer import launch

    urls = ['https://www.example.com', 'https://demo.opencart.com/']


    async def run_browser(url):
        # Each task launches its own headless browser
        browser = await launch(headless=True, executablePath='chrome-win/chrome.exe')
        page = await browser.newPage()
        await page.goto(url)
        print(await page.title())
        await browser.close()


    async def main():
        # Create one coroutine per URL and run them concurrently
        tasks = [run_browser(url) for url in urls]
        await asyncio.gather(*tasks)


    asyncio.run(main())

We introduced a variable to store URLs and implemented the creation and launching of asynchronous tasks. Otherwise, the script remains the same.
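With a long list of URLs, starting every task at once would also start that many browsers. One common way to cap this is an asyncio.Semaphore. The sketch below assumes a hypothetical stand-in coroutine (fake_title) instead of a real Pyppeteer call, so it runs on its own. The name gather_limited is also illustrative.

```python
import asyncio
from typing import Awaitable, Callable, List


async def gather_limited(urls: List[str],
                         worker: Callable[[str], Awaitable[str]],
                         limit: int = 2) -> List[str]:
    """Run `worker` for each URL, with at most `limit` running at once."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(url: str) -> str:
        # Only `limit` coroutines can hold the semaphore at the same time.
        async with semaphore:
            return await worker(url)

    # gather returns results in the same order as the input URLs.
    return await asyncio.gather(*(guarded(url) for url in urls))


async def fake_title(url: str) -> str:
    """Hypothetical stand-in for a coroutine that opens the page in Pyppeteer."""
    await asyncio.sleep(0.01)
    return url.upper()


print(asyncio.run(gather_limited(["a", "b", "c"], fake_title)))
```

Passing a coroutine that launches a browser and returns the page title in place of fake_title would apply the same limit to real pages.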

Proxy usage

Proxy servers allow you to hide your real IP address. This can be useful for various reasons, such as concealing your location or bypassing website blocks. Additionally, some websites may block IP addresses that make excessive requests. Using a proxy distributes requests across different IP addresses, reducing the risk of being blocked.

We have previously covered the basics of proxies, including their purpose and where to find free proxies and reliable proxy providers. Therefore, this article will not dwell on those topics. Instead, we will focus on how to use proxies with headless browsers.

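To integrate proxy usage into a Selenium headless browser, add an extra argument to the options before creating the driver object:

    proxy = 'proxy_ip:proxy_port'
    chrome_options = webdriver.ChromeOptions()
    chrome_options.add_argument(f'--proxy-server={proxy}')

With Pyppeteer, pass the same argument to launch():

    proxy = 'proxy_ip:proxy_port'
    browser = await launch(args=[f'--proxy-server={proxy}'], headless=True)

If the proxy requires a username and password, note that Chrome ignores credentials embedded in the --proxy-server value (the username:password@proxy_ip:proxy_port form), so they have to be supplied separately. In Pyppeteer, you can pass them to page.authenticate() after creating the page:

    await page.authenticate({'username': 'username', 'password': 'password'})

Selenium has no equally direct call, so authenticated proxies are usually handled with a helper extension or with a proxy that authorizes your IP address.

Chrome's --proxy-server flag accepts HTTP, HTTPS, and SOCKS proxies. To use a scheme other than HTTP, include it in the value, for example socks5://proxy_ip:proxy_port.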
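Distributing requests across several IP addresses comes down to choosing a different --proxy-server value for each browser launch. A minimal sketch of round-robin rotation follows. The proxy addresses are placeholders from a documentation-only IP range, and the helper name proxy_args is hypothetical.

```python
from itertools import cycle
from typing import Iterator, List


def proxy_args(proxies: List[str]) -> Iterator[List[str]]:
    """Yield Chrome argument lists, cycling through the proxies in order."""
    for proxy in cycle(proxies):
        yield [f"--proxy-server={proxy}"]


# Placeholder addresses, not real proxies.
rotation = proxy_args(["http://203.0.113.10:8080", "socks5://203.0.113.11:1080"])
print(next(rotation))  # ['--proxy-server=http://203.0.113.10:8080']
print(next(rotation))  # ['--proxy-server=socks5://203.0.113.11:1080']
print(next(rotation))  # ['--proxy-server=http://203.0.113.10:8080']
```

Each yielded list can be passed as args to Pyppeteer's launch(), or its single item can be added to Selenium options with add_argument().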

User-Agent management

Managing the User Agent is also an important part of working with headless browsers. It lets you present your script to the web server as a specific browser and operating system. This is useful to mimic user behavior and reduce the chance of being detected as a bot.

Additionally, some web servers may deliver different versions of content depending on the User Agent. You can leverage this feature to download optimized or mobile versions of web pages.

We previously covered the concept of User Agents in detail, including their purpose and a table of automatically updated latest User Agents. Now, let's explore how to utilize them with headless browsers. You can use the following code to set one in Selenium:

    options = webdriver.ChromeOptions()
    options.add_argument("--user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36")

For Pyppeteer:

    browser = await launch(args=['--user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36'])

Before use, replace the User Agent in the example with the latest one.
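When several User Agents are available, one can be picked at random for each launch. The sketch below assumes a small hard-coded list of illustrative strings. In practice the list would come from an up-to-date source, and the helper name user_agent_arg is hypothetical.

```python
import random
from typing import List, Optional

# Illustrative values only; replace them with current User Agent strings.
USER_AGENTS: List[str] = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36",
]


def user_agent_arg(rng: Optional[random.Random] = None) -> str:
    """Return a --user-agent argument built from a randomly chosen string."""
    chooser = rng or random
    return f"--user-agent={chooser.choice(USER_AGENTS)}"


print(user_agent_arg())
```

To confirm that the browser picked it up, you can read navigator.userAgent from the page, for example with driver.execute_script("return navigator.userAgent") in Selenium or await page.evaluate("navigator.userAgent") in Pyppeteer.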

User Profiles

Selenium and Pyppeteer allow you to use user profiles to customize your browser settings, such as bookmarks, browsing history, security preferences, etc. To use them, specify the path to the desired profile in your options.

The profile folder is typically in the user's folder, inside the "AppData" folder for Windows or in the home directory for macOS and Linux. However, you can view the path to the profile on the browser's settings page. To open it, enter the following in the browser's address bar:

- Google Chrome: chrome://version/

- Mozilla Firefox: about:support

- Microsoft Edge: edge://version/

Look for a field labeled "Profile Path" or "Profile Folder," which contains the path to the profile folder. You can also copy the folder and transfer it to another device if needed.

To use a specific profile in Selenium, point Chrome at the "User Data" folder with --user-data-dir and name the profile inside it with --profile-directory:

    user_data_dir = r'C:\Users\Admin\AppData\Local\Google\Chrome\User Data'
    chrome_options = webdriver.ChromeOptions()
    chrome_options.add_argument(f'--user-data-dir={user_data_dir}')
    chrome_options.add_argument('--profile-directory=Profile 1')

Pyppeteer provides a similar way to use user profiles. To do this, pass the userDataDir option to the launch() method, along with the --profile-directory argument:

    user_data_dir = r'C:\Users\Admin\AppData\Local\Google\Chrome\User Data'
    browser = await launch(userDataDir=user_data_dir, args=['--profile-directory=Profile 1'])

Make sure to update the path and profile name to your own before using them.
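Chrome treats the user data directory and the profile folder inside it as two separate settings (--user-data-dir and --profile-directory). Here is a small sketch that derives both from a full profile path, assuming a Windows-style path like the one reported on chrome://version/. The helper name profile_args is hypothetical.

```python
from pathlib import PureWindowsPath
from typing import List


def profile_args(profile_path: str) -> List[str]:
    """Split a Chrome profile path into the two related Chrome flags."""
    path = PureWindowsPath(profile_path)
    return [
        f"--user-data-dir={path.parent}",    # folder that holds all profiles
        f"--profile-directory={path.name}",  # one profile inside that folder
    ]


print(profile_args(r"C:\Users\Admin\AppData\Local\Google\Chrome\User Data\Profile 1"))
```

The resulting strings can be added to Selenium options one by one with add_argument() or passed to Pyppeteer's launch() in args.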

Using Extensions

Extensions can enhance browser functionality by adding extra features or tools. This can be useful for tasks like solving captchas or ad blocking.

To use an extension in a headless browser script, you need to specify it in the browser object parameters. In Selenium, add_extension() takes a packed extension file in *.zip or *.crx format:

    extension_path = 'folder/extension.crx'
    chrome_options = webdriver.ChromeOptions()
    chrome_options.add_extension(extension_path)

For Pyppeteer, the --load-extension flag expects the path to an unpacked extension folder rather than a packed file:

    extension_path = 'folder/extension'
    browser = await launch(headless=False, args=[f'--disable-extensions-except={extension_path}', f'--load-extension={extension_path}'])

The --disable-extensions-except option disables all extensions except the ones you list. Note that the example sets headless=False, because Chrome's original headless mode does not load extensions.
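Both Chrome flags accept a comma-separated list, so several unpacked extensions can be loaded together. A minimal sketch follows. The folder names are hypothetical, and extension_args is an illustrative helper, not part of Pyppeteer.

```python
from typing import List


def extension_args(extension_dirs: List[str]) -> List[str]:
    """Build the Chrome flags that load only the given unpacked extensions."""
    joined = ",".join(extension_dirs)
    return [
        f"--disable-extensions-except={joined}",
        f"--load-extension={joined}",
    ]


# Hypothetical folder names for two unpacked extensions.
print(extension_args(["folder/adblock", "folder/captcha_solver"]))
```

The returned list can be passed directly as the args value of Pyppeteer's launch().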

Conclusion and Takeaways

This article explored the numerous advantages of using headless browsers in Python for web scraping and automation. Launching a browser in headless mode offers an effective solution for tasks like data scraping and web application testing and can significantly simplify the development process.

We examined various libraries and concluded that Pyppeteer and Selenium are the most functional ones, enabling headless browser operation in Python. Furthermore, we discussed key techniques and use cases, including installation, script writing, and advanced features like multithreading and proxy management.

However, if you need to perform web scraping quickly and don't want to configure and maintain the infrastructure yourself, you can turn to ready-made solutions like our Web Scraping API, which provides you with ready-made data right away. In this case, you won't have to worry about connecting proxies, solving captchas, or using a headless browser to get dynamic content, and you can fully concentrate on processing the received data.

Whether you choose to use headless browsers or a ready-made API, it's crucial to select the appropriate tool for your needs and goals. Both approaches offer unique advantages and can be successfully applied in various web development and data analysis scenarios.