Web scraping has become an essential tool for many businesses. It saves employees the time they would otherwise spend collecting information manually, whether that is data on competitors or price changes on a marketplace.

Scrapers are also often used to collect leads, which makes it quick to build new databases or update existing ones.

Ways to Get Data

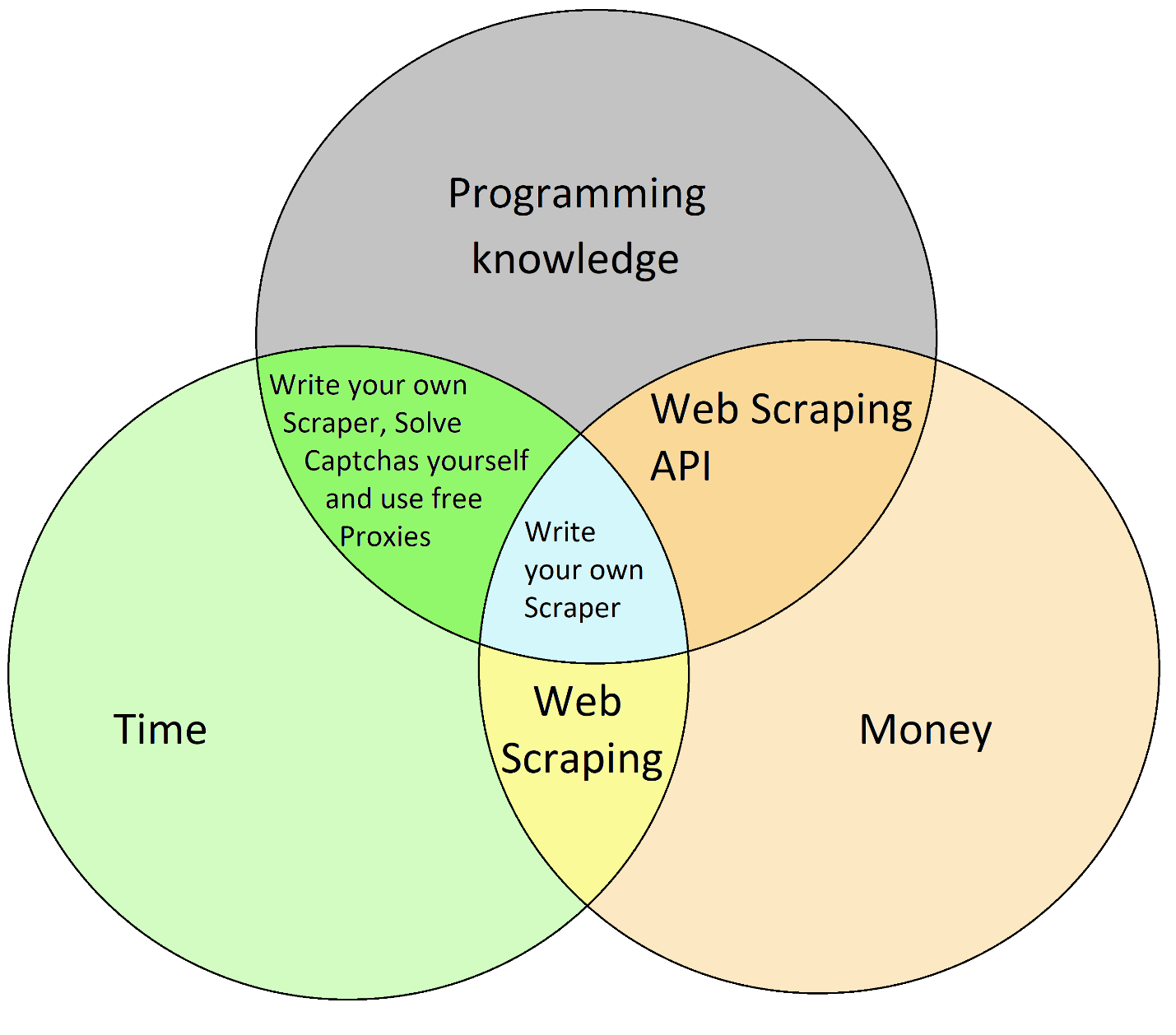

Before you start scraping, decide what you are willing to invest: time, money, or programming skills.


This article covers the Web Scraping API option. You can try it for free, at least for the first 1,000 requests.

If programming seems too complex, there are alternatives. For example, you can use Zapier to set up the integration visually.

Scraping Google Maps Using Web Scraping API

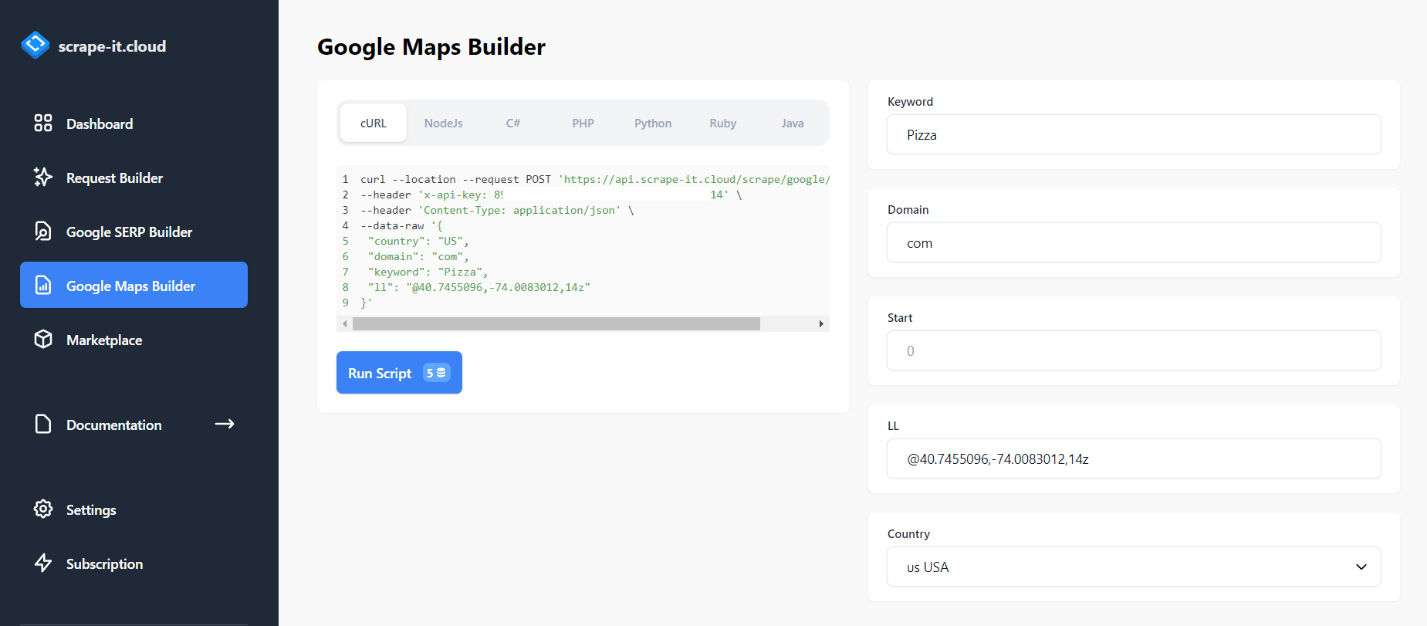

To use the web scraping API, sign up for HasData: click "Try for Free" and fill in your login details. You can then create a simple request to scrape data from Google Maps. Go to the "Google Maps Builder" tab and enter the search keyword in the keyword field.

Run the request from the site by clicking "Run Script". It returns the first 20 rows of results from Google Maps.

How the Scraper Is Built

We will write the entire script in Python using the Google Maps Search API. To run it, you need a Python interpreter and the requests library, which you can install with pip install requests. For more information on getting started, you can refer to an introductory article about web scraping with Python.

To get a clear picture of the final result, here is an outline of the steps that follow:

- Try an example of a simple Google Maps scraper.

- Get a list of keywords from a CSV file.

- Get the start position and the number of pages from the command line.

- Save data to a new document.

Creating a Python file with a simple Google Maps scraper is the easiest part. Open the Google Maps scraper API builder, select Python as the code type, and copy the generated code into a file with the .py extension.

The generated code uses the http.client library. For convenience in the following steps, we will use the requests library instead:

```python
import json

import requests

url = "https://api.hasdata.com/scrape/google/locals"

# Request body: search keyword, country, Google domain, and map coordinates
payload = json.dumps({
    "country": "US",
    "domain": "com",
    "keyword": "Pizza",
    "ll": "@40.7455096,-74.0083012,14z"
})

headers = {
    'x-api-key': 'YOUR-API-KEY',  # replace with your HasData API key
    'Content-Type': 'application/json'
}

response = requests.request("POST", url, headers=headers, data=payload)

print(response.text)
```

This is an example of the simplest scraper, so we can move on to the next step.
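print(response.text) shows the raw JSON returned by the API. The later steps read the results from the locals list inside scrapingResult, so it can help to pull that list out in one place. The sketch below assumes only that key path (taken from the scraper later in this article); the helper name extract_locals is hypothetical. It returns an empty list when a page has no results:

```python
def extract_locals(data):
    """Return the list under data["scrapingResult"]["locals"], or [] if it is absent."""
    # Assumed response shape: {"scrapingResult": {"locals": [...]}}
    result = data.get("scrapingResult") if isinstance(data, dict) else None
    if not isinstance(result, dict):
        return []
    locals_list = result.get("locals")
    return locals_list if isinstance(locals_list, list) else []


# Usage with the request above:
# data = response.json()
# for item in extract_locals(data):
#     print(item.get("title"), item.get("phone"))
```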


Get a List of Keywords from a File

Create a file to store the keywords, one per line, and name it "keywords.csv". The program reads this file line by line and runs a new query for every keyword it finds.

Add code that opens keywords.csv for reading and loops over it line by line:

```python
with open("keywords.csv", "r") as f:
    for keyword in f:
        ...  # the request for each keyword goes here
```

Inside the loop, place the request body, using the keyword variable instead of the fixed keyword:

```python
# Inside the "for keyword in f:" loop: strip() removes the trailing newline
payload = json.dumps({
    "country": "US",
    "domain": "com",
    "keyword": keyword.strip(),
    "ll": "@40.7455096,-74.0083012,14z"
})
```

To make sure everything works, run the file from the command line.
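Each line read from the file keeps its trailing newline, which is why the keyword goes through strip() before it is placed in the request body. A blank line in keywords.csv would still produce an empty keyword, though. A minimal sketch of a hypothetical read_keywords helper that strips every line and skips empty ones; it accepts any iterable of lines, such as an open file:

```python
def read_keywords(lines):
    """Return non-empty, stripped keywords from an iterable of lines (e.g. an open file)."""
    keywords = []
    for line in lines:
        keyword = line.strip()  # drop the trailing newline and surrounding spaces
        if keyword:  # skip blank lines so no request is sent with an empty keyword
            keywords.append(keyword)
    return keywords


# Usage:
# with open("keywords.csv", "r") as f:
#     for keyword in read_keywords(f):
#         ...  # build and send the request for this keyword
```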

Set the Number of Rows

Next, let's ask on the command line for the position to start scraping from and the number of pages to scrape from that position. One page means 20 rows, since the API returns 20 rows per request, starting from the specified position.
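With 20 rows per page, the start value of each request is the starting position plus a multiple of 20. The arithmetic can be checked in isolation with a hypothetical page_offsets helper. Note that the range has to end at start + 20 * pages rather than at 20 * pages; otherwise a non-zero start would fetch fewer pages than requested:

```python
def page_offsets(start, pages, page_size=20):
    """Return the start value for each page, assuming page_size rows per request."""
    # One offset per page: start, start + 20, start + 40, ...
    return list(range(start, start + page_size * pages, page_size))


print(page_offsets(0, 3))   # [0, 20, 40]
print(page_offsets(20, 2))  # [20, 40]
```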

To do this, prompt for the starting position and store it in the variable start:

```python
start = int(input("Start position: "))
```

Then prompt for the number of pages:

```python
pages = int(input("Number of pages: "))
```

With both values available, change the request body and add a loop that scrapes the specified number of pages beginning at position start:

```python
# Inside the "for keyword in f:" loop: one request per page of 20 results
for i in range(start, start + 20 * pages, 20):
    payload = json.dumps({
        "country": "US",
        "domain": "com",
        "keyword": keyword.strip(),
        "ll": "@40.7455096,-74.0083012,14z",
        "start": i  # position of the first result on this page
    })
```

The loop ends at start + 20 * pages, so it sends one request for each requested page even when start is not zero. The loop variable i stays an integer, and json.dumps writes it into the request body as a JSON number.

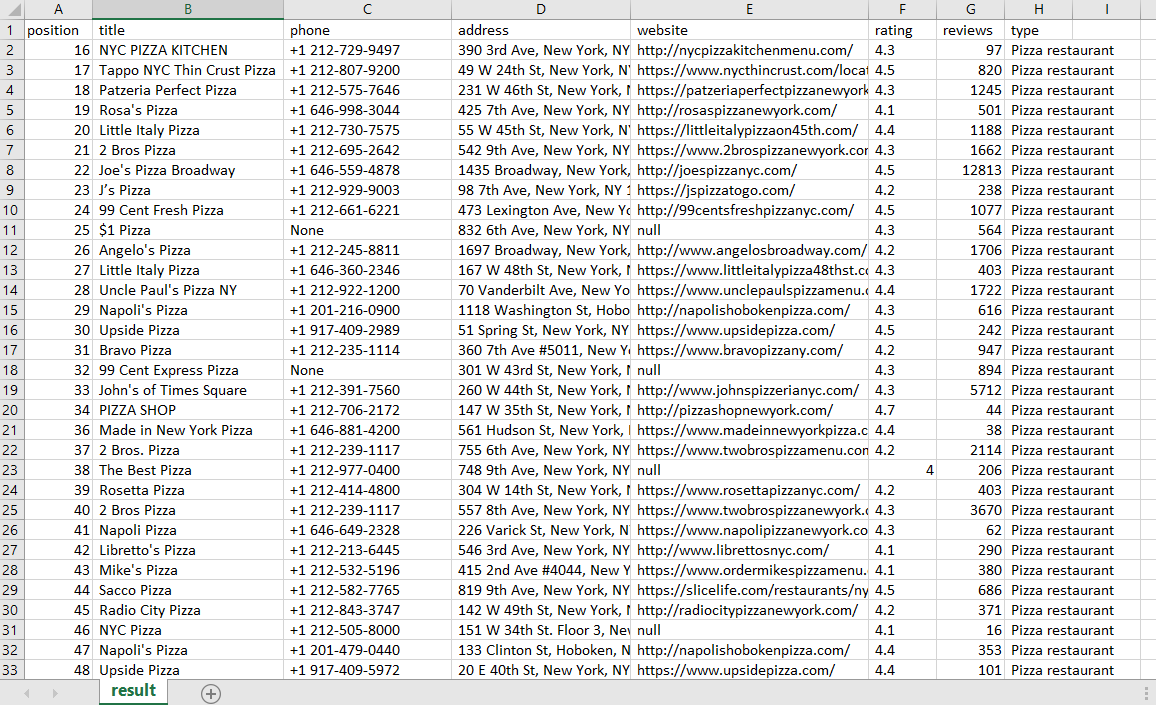
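Building the body from a Python dictionary and serializing it with json.dumps, rather than assembling a JSON string by hand, lets the json module handle quoting: a keyword that contains quotation marks still yields valid JSON, and the integer start is written as a number. A sketch with a hypothetical build_payload helper; its default country, domain, and ll values are the ones used throughout this article:

```python
import json


def build_payload(keyword, start, country="US", domain="com",
                  ll="@40.7455096,-74.0083012,14z"):
    """Serialize one request body for a keyword and start position."""
    # json.dumps escapes quotes and other special characters in the keyword,
    # and writes start as a JSON number.
    return json.dumps({
        "country": country,
        "domain": domain,
        "keyword": keyword,
        "ll": ll,
        "start": start,
    })


body = build_payload('Joe\'s "Best" Pizza', 20)
print(json.loads(body)["keyword"])  # Joe's "Best" Pizza
```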

Save Results to a CSV File

To make the data easy to process, let's save it to a semicolon-separated CSV file that spreadsheet programs such as Excel can import. Each run of the program should replace the old data rather than append new rows to the end of an existing file, so the script overwrites the file at the beginning and writes the column names as the first line:

```python
# Overwrite results from a previous run and write the header row
with open("result.csv", "w", encoding="utf-8") as f:
    f.write("position; title; phone; address; website; rating; reviews; type\n")
```

The code that saves the data goes in a try...except block so that if a request returns an empty response, the script skips that page instead of stopping completely:

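```python
response = requests.request("POST", url, headers=headers, data=payload)
data = response.json()  # parse the JSON response for this page
fields = ["position", "title", "phone", "address", "website", "rating", "reviews", "type"]
try:
    with open("result.csv", "a", encoding="utf-8") as f:
        for item in data["scrapingResult"]["locals"]:
            # .get() writes an empty value instead of raising KeyError for a missing field
            f.write("; ".join(str(item.get(field, "")) for field in fields) + "\n")
except (KeyError, TypeError):
    print("There are no locals")  # the response has no "locals" list
```

Run the resulting script and check the generated result.csv file.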
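Joining fields with "; " by hand breaks the column layout if a title or address itself contains a semicolon. Python's standard csv module quotes such values automatically. A sketch of a hypothetical write_rows helper that keeps this article's semicolon separator and column order (csv.writer does not add a space after the separator):

```python
import csv
import io

FIELDS = ["position", "title", "phone", "address", "website", "rating", "reviews", "type"]


def write_rows(f, items, fields=FIELDS):
    """Write one semicolon-separated row per result; missing fields become empty cells."""
    writer = csv.writer(f, delimiter=";")
    for item in items:
        writer.writerow([item.get(field, "") for field in fields])


# Usage with an in-memory buffer; with a real file, open it with newline="".
buffer = io.StringIO()
write_rows(buffer, [{"position": 1, "title": "Pizza; Pasta", "rating": 4.5}])
print(buffer.getvalue())
```

To write the header with the same settings, call csv.writer(f, delimiter=";").writerow(FIELDS) once after opening the file in "w" mode.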


Full Scraper Code

```python
import json

import requests

url = "https://api.hasdata.com/scrape/google/locals"

headers = {
    'x-api-key': 'YOUR-API-KEY',  # replace with your HasData API key
    'Content-Type': 'application/json'
}

# Columns saved to result.csv, in this order
fields = ["position", "title", "phone", "address", "website", "rating", "reviews", "type"]

# Overwrite results from any previous run and write the header row
with open("result.csv", "w", encoding="utf-8") as result_file:
    result_file.write("; ".join(fields) + "\n")

start = int(input("Start position: "))
pages = int(input("Number of pages: "))

with open("keywords.csv", "r") as keywords_file:
    for line in keywords_file:
        keyword = line.strip()
        if not keyword:
            continue  # skip blank lines

        # One request per page of 20 results, beginning at the start position
        for i in range(start, start + 20 * pages, 20):
            payload = json.dumps({
                "country": "US",
                "domain": "com",
                "keyword": keyword,
                "ll": "@40.7455096,-74.0083012,14z",
                "start": i
            })

            response = requests.request("POST", url, headers=headers, data=payload)
            data = response.json()

            try:
                with open("result.csv", "a", encoding="utf-8") as result_file:
                    for item in data["scrapingResult"]["locals"]:
                        # .get() writes an empty value instead of raising KeyError
                        result_file.write("; ".join(str(item.get(field, "")) for field in fields) + "\n")
            except (KeyError, TypeError):
                # The response has no "locals" list, e.g. an empty page
                print("There are no locals")
```

Conclusion and Takeaways

The web scraping API lets developers stop worrying about proxies and CAPTCHA solving and focus on building their main product. The Google Maps Search API, in turn, saves time when collecting leads or suppliers.

With Python, building a full-fledged scraper is quite simple. In the same way as in this article, you can also change the coordinates or the country you scrape.
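Changing the area to scrape means changing the ll value in the request body. Assuming it keeps the @latitude,longitude,zoomz pattern used in this article's examples (as in Google Maps URLs, the number before z being the zoom level), a hypothetical make_ll helper can build it from numbers. The country, by contrast, is a separate country field in the request body:

```python
def make_ll(latitude, longitude, zoom):
    """Format coordinates in the "@latitude,longitude,zoomz" pattern used in this article."""
    return f"@{latitude},{longitude},{zoom}z"


print(make_ll(40.7455096, -74.0083012, 14))  # @40.7455096,-74.0083012,14z
```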