With over 1.5 billion active listings, eBay ranks as one of the world's largest e-commerce platforms. Scraping publicly available data from eBay offers many benefits, such as price monitoring and tracking product trends.

In this detailed blog post, you'll learn how to scrape eBay with Python, which challenges you'll face while doing so, and how to use the HasData API to extract data from any website with a simple API call, without setting up proxies yourself.

Introduction to eBay Data Scraping

eBay is one of the most popular choices for e-commerce data extraction because it provides rich information for analysis and decision-making. eBay's business model differs from that of a standard retail platform (like Amazon) because of its auction feature: sellers list products at low starting prices and customers bid on them, which leads to dynamic price changes.


Sellers can extract valuable data from eBay to improve their offerings and gain a competitive edge in various ways, such as:

- Price Monitoring: In e-commerce, prices fluctuate constantly, so access to competitor data on eBay is crucial for offering competitive prices. Scraping this data reveals the current price range for your target products, allowing you to make informed pricing decisions.

- Market Research: E-commerce data offers valuable insights into market trends, consumer preferences, and buying patterns. Analyzing your customers' shopping patterns can help you better predict their future purchases and identify broader market trends. Additionally, tracking customer locations can show where your products are performing well.

- Competitor Analysis: By gathering information about your competitors' product prices, discounts, and promotions, you can make data-driven decisions about your product offerings. Consider reducing your prices to attract customers or bidders if many similar products are available.

- Sentiment Analysis: Reviews and ratings offer valuable insights into customer satisfaction and product feedback. By scraping them, you can understand your customers' satisfaction and identify opportunities to enhance your product or service.

Structure of an eBay Page

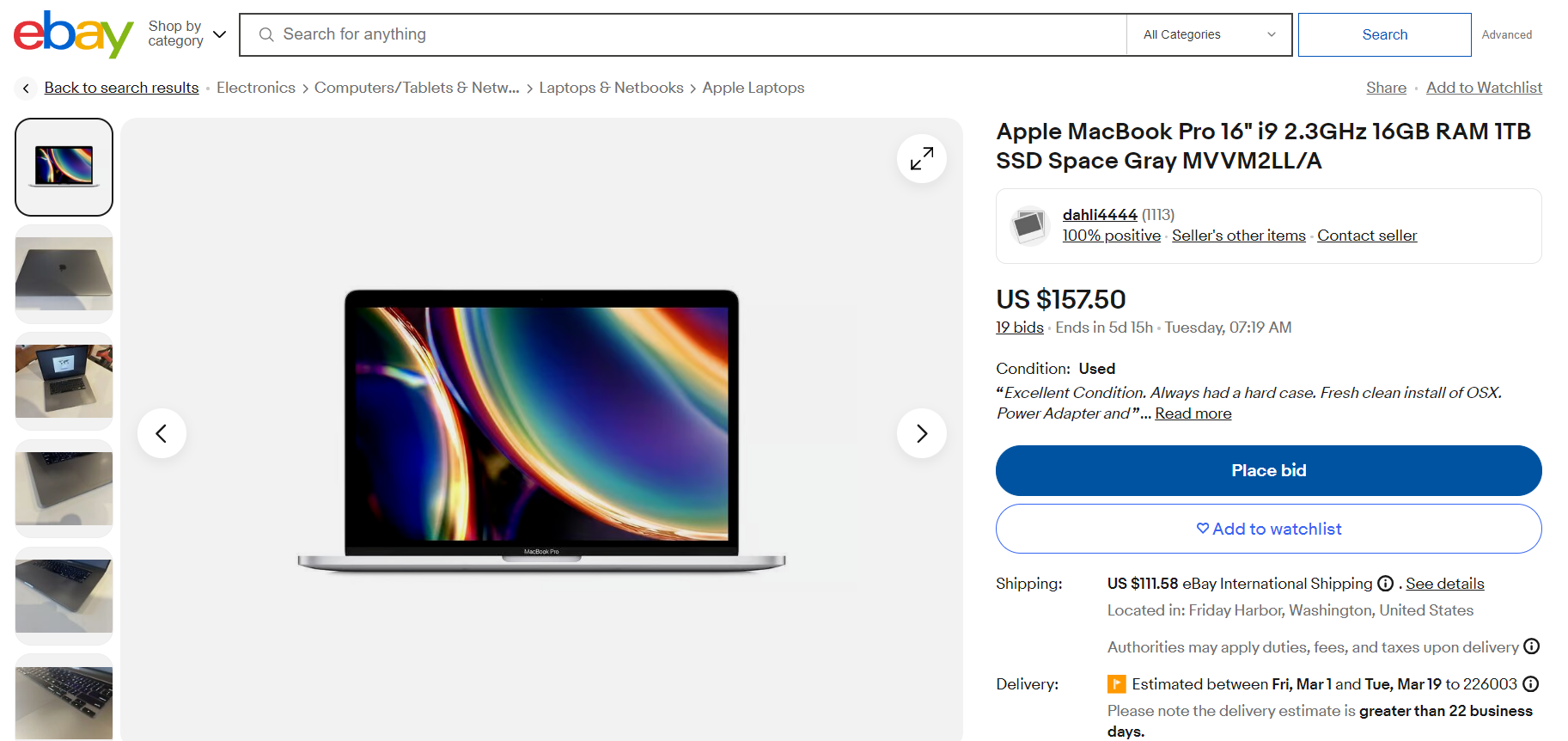

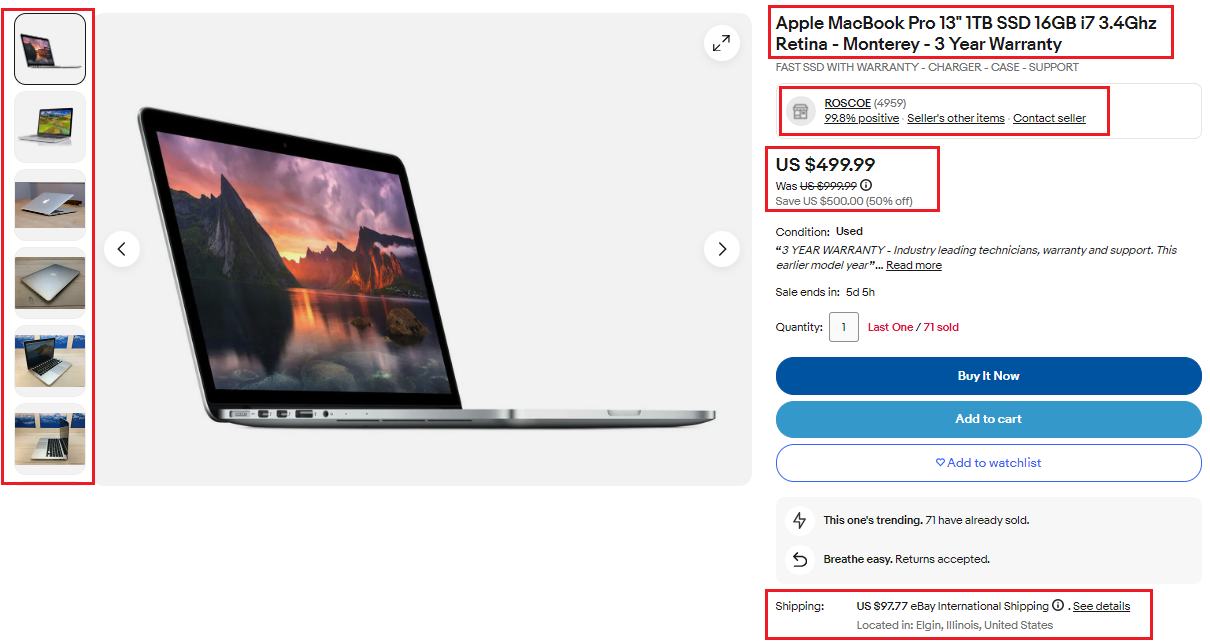

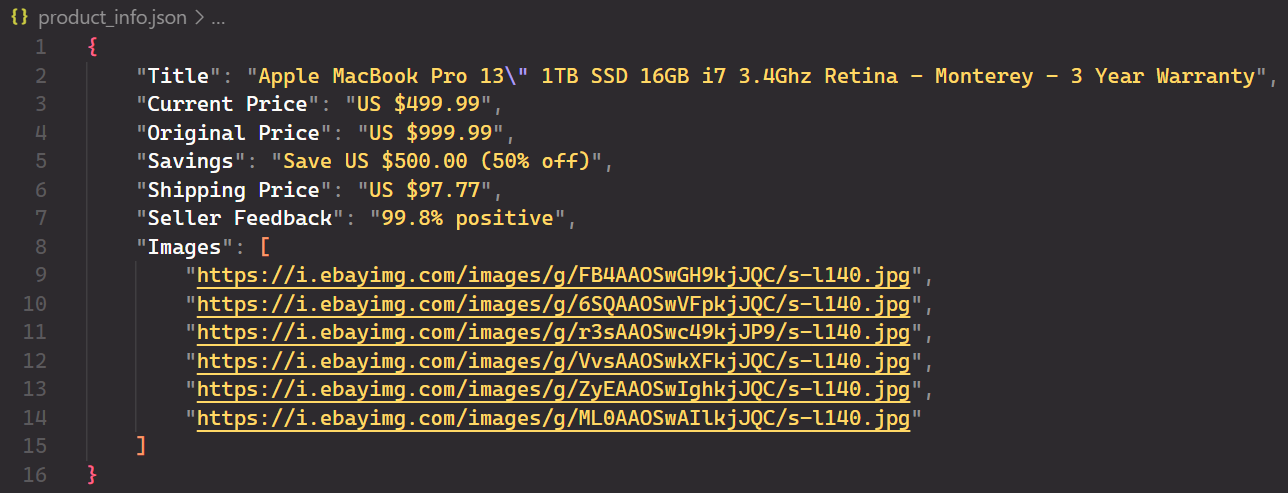

eBay's website includes both product pages and search results pages. A standard eBay product page, such as the Apple MacBook page shown below, contains useful information that can be scraped, including the product title, description, image, rating, price, availability status, customer reviews, shipping cost, and delivery date.

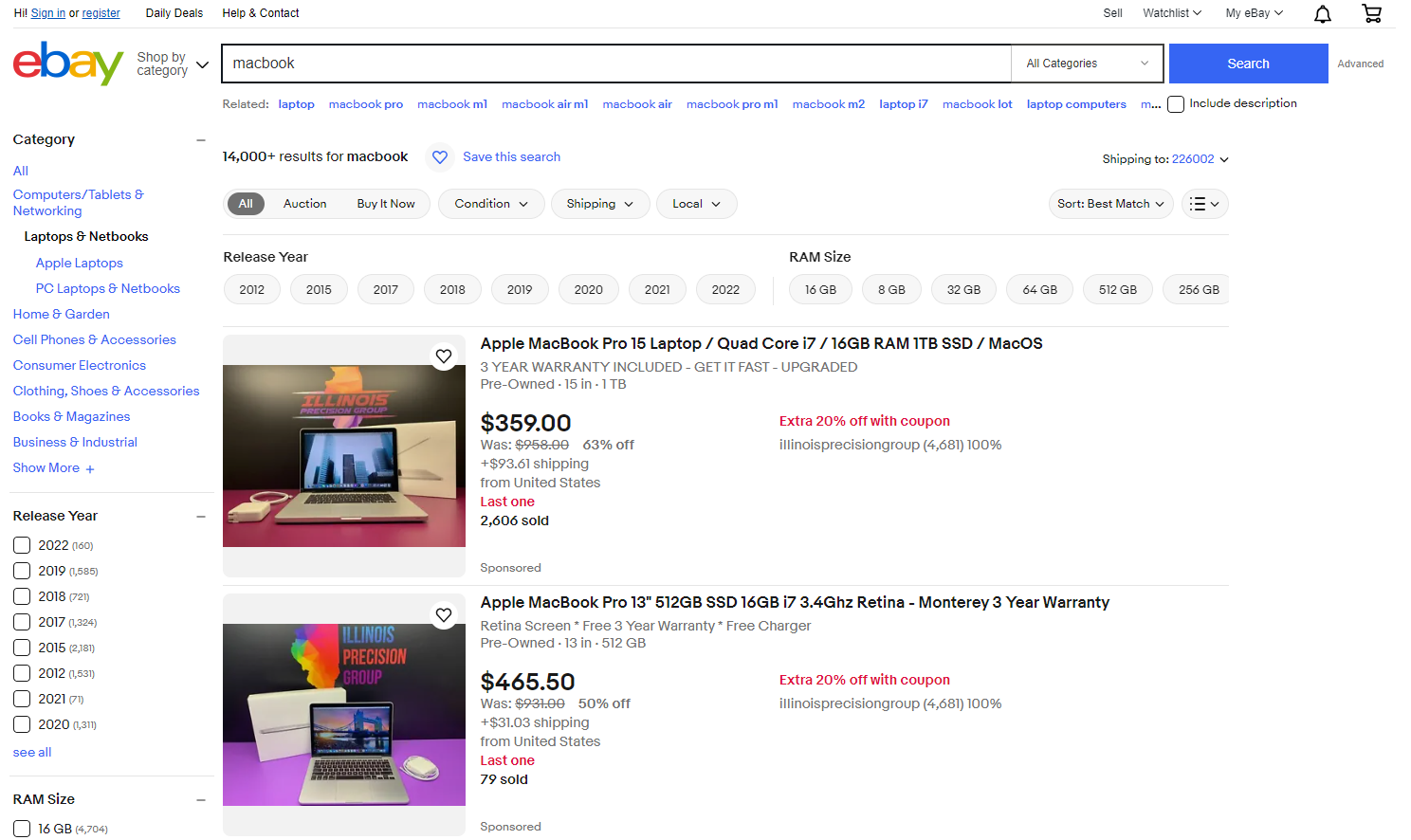

When you search for a keyword like "MacBook" in the search bar, you'll be directed to a search results page like the one below. You can extract all the listed products on this page, including their titles, product images, ratings, reviews, and more.

Here, you can see thousands of products that can be extracted, providing access to a wealth of information.

Now that you understand the significance of the data we can obtain from eBay, let's dive into a step-by-step guide for extracting specific data from eBay using Python.

Setting Up Your Python Environment for Scraping

To set up your Python environment for web scraping, you must meet some system requirements and install the required libraries.

Prerequisites

Before starting, ensure you meet the following requirements:

- Python Installed: Download the latest version from the official Python website. For this blog post, we are using Python 3.9.12.

- Code Editor: Choose a code editor, such as Visual Studio Code, PyCharm, or Jupyter Notebook.

Next, create a Python project called 'ebay-scraper' using the following commands:

```bash
mkdir ebay-scraper
cd ebay-scraper
```

Open this project in your preferred code editor and create a new Python file (scraper.py).

Install Required Libraries

To perform web scraping with Python, you must install some essential libraries: requests, BeautifulSoup, and Pandas.

- Pandas: creates a DataFrame from the extracted data and efficiently writes it to a CSV file. It officially supports Python 3.9, 3.10, 3.11, and 3.12.

- Requests: sends HTTP requests and retrieves the HTML content of web pages. It officially supports Python 3.7+.

- BeautifulSoup: extracts data from the raw HTML content using tags, attributes, classes, and CSS selectors.

```bash
pip install beautifulsoup4 requests pandas
```

Scraping eBay Product Page

Scraping a page involves understanding its structure, downloading the raw HTML content, parsing HTML content to extract product details, and then storing the data for further analysis.

Analyzing the eBay Product Page

A product page contains various data elements that we can extract. We'll scrape seven key attributes from the page:

- Images of the product

- Product Title

- Price

- Original price

- Discount

- Seller feedback rating

- Shipping charges

On eBay, the product page URL follows the format below. This is a dynamic URL that changes based on the item ID, which is a unique identifier for each item.

```
https://www.ebay.com/itm/<ITM_ID>
```

Let's scrape the page https://www.ebay.com/itm/404316395828, whose item ID is 404316395828.
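If you start from a full listing URL rather than a bare ID, a small helper can pull the ID back out. This is a minimal sketch that assumes, as in the example above, that the ID is the run of digits directly after `/itm/`; the names `build_item_url` and `extract_item_id` are hypothetical helpers, not part of any library.

```python
import re
from typing import Optional
from urllib.parse import urlparse


def build_item_url(item_id: str) -> str:
    """Build a product page URL from an eBay item ID."""
    return f"https://www.ebay.com/itm/{item_id}"


def extract_item_id(url: str) -> Optional[str]:
    """Return the numeric ID from a /itm/<ID> URL, or None if there isn't one."""
    # Only the path is inspected, so tracking query strings are ignored
    match = re.search(r"/itm/(\d+)", urlparse(url).path)
    return match.group(1) if match else None


print(extract_item_id("https://www.ebay.com/itm/404316395828?hash=abc123"))
# 404316395828
```

Using `typing.Optional` instead of `str | None` keeps the sketch compatible with Python 3.9, the version used in this post.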

Getting the HTML Page

To get the HTML content of an eBay product page, first identify its unique item ID. Then, build the product URL from that ID and pass it to the requests.get() function, which sends an HTTP GET request to eBay for that specific product page.

If the request succeeds, the server sends back a response containing the entire page's HTML, which is stored in a variable for you to access. If the server instead returns an error status code (4xx or 5xx), which can happen with an invalid ID, the raise_for_status() method raises an HTTPError to alert you. Network problems, such as a failed connection, raise an exception from requests.get() itself.

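```python
import requests

item_id = "404316395828"
url = f"https://www.ebay.com/itm/{item_id}"

response = requests.get(url)
response.raise_for_status()  # Raises requests.HTTPError for 4xx/5xx responses
```

Parsing the HTML Page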

Create a BeautifulSoup object by passing the response.text and the parser name ‘html.parser’ to the BeautifulSoup() constructor. This parsing process breaks down the HTML code into its constituent elements, including tags and attributes, and constructs a tree-like structure known as the Document Object Model (DOM).

Once the DOM is established, you can employ various methods such as find(), find_all(), select_one(), and select() to selectively extract elements. find() and find_all() are used for navigating the parse tree, while select_one() and select() are used for finding elements using CSS selectors.
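To see how the two families of methods compare, here is a small self-contained sketch that parses an inline HTML fragment instead of a live eBay page. The fragment is made up for illustration and only borrows class names used later in this tutorial.

```python
from bs4 import BeautifulSoup

# Hand-written fragment; it imitates eBay class names but is not real eBay markup
html = """
<div class="x-price-primary"><span class="ux-textspans">US $999.00</span></div>
<ul>
  <li class="s-item">First</li>
  <li class="s-item">Second</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

# find(): first tag matching a tag name plus attributes
price_div = soup.find("div", class_="x-price-primary")
# select_one(): first tag matching a CSS selector (here, a descendant selector)
price_span = soup.select_one(".x-price-primary .ux-textspans")

# find_all() and select() both return a list of every match
by_find_all = [li.text for li in soup.find_all("li", class_="s-item")]
by_select = [li.text for li in soup.select("li.s-item")]

print(price_div.span.text, price_span.text)  # US $999.00 US $999.00
print(by_find_all == by_select)  # True
# Both single-result methods return None when nothing matches
print(soup.select_one(".missing"), soup.find("div", class_="missing"))  # None None
```

That `None` result is why every extraction step below checks the element before reading its text.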

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(response.text, "html.parser")
```

Extracting Key Product Data

We'll be extracting seven key attributes from the product: images, title, current price, original price, discount, seller feedback rating, and shipping charges.
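Most of the attributes below follow the same pattern: run a CSS selector, check whether anything matched, and fall back to a placeholder. A small helper can factor that out. This is a sketch: `select_text` is a hypothetical helper, and the sample HTML only imitates the title and price structure described in this section, so eBay's live markup may differ. It uses `get_text(strip=True)`, which also trims surrounding whitespace, unlike the plain `.text` used in the snippets below.

```python
from bs4 import BeautifulSoup


def select_text(soup, selector, default="Not available"):
    """Return the stripped text of the first CSS-selector match, or default."""
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element else default


# Sample markup imitating the title and price structure described below
html = """
<h1 class="x-item-title__mainTitle">
  <span class="ux-textspans ux-textspans--BOLD">Example MacBook listing</span>
</h1>
<div class="x-price-primary"><span class="ux-textspans">US $749.00</span></div>
"""
soup = BeautifulSoup(html, "html.parser")

item_data = {
    "Title": select_text(soup, ".x-item-title__mainTitle .ux-textspans--BOLD"),
    "Current Price": select_text(soup, ".x-price-primary .ux-textspans"),
    # No strikethrough price in the sample, so the default is used
    "Original Price": select_text(soup, ".ux-textspans--STRIKETHROUGH"),
}
print(item_data)
# {'Title': 'Example MacBook listing', 'Current Price': 'US $749.00', 'Original Price': 'Not available'}
```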

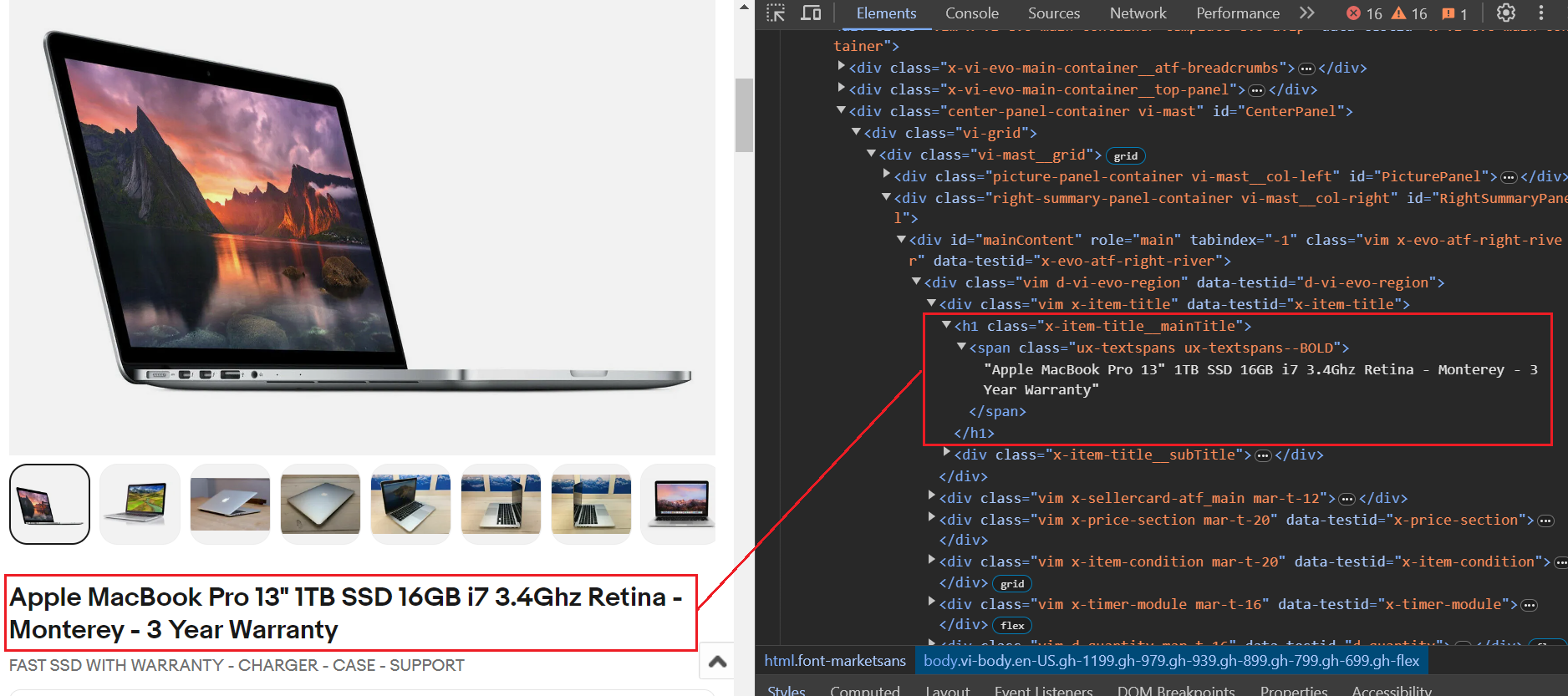

Extract Product Title

The title is stored within the h1 tag with the class x-item-title__mainTitle. Within this h1 tag, there's a span tag with two class names. We'll use the .ux-textspans--BOLD class name to extract the title text. Therefore, the final selector for extracting the title is .x-item-title__mainTitle .ux-textspans--BOLD.

Here's a sample code snippet. The select_one() method returns the first element that matches the specified CSS selector, or None if nothing matches. We extract the title element, check whether it was found, and store its text (or a placeholder) in the item_data dictionary, which will hold all the key attributes.

```python
item_data = {}  # Holds every attribute extracted from the page

item_title_element = soup.select_one(".x-item-title__mainTitle .ux-textspans--BOLD")
item_title = item_title_element.text if item_title_element else "Not available"
item_data["Title"] = item_title
```

Extract Current Price

The price is located within a div tag with the class name x-price-primary. Inside this div tag, a span tag with the class name ux-textspans contains the price. To target this element using CSS accurately, the correct selector would be .x-price-primary .ux-textspans.

Here's the code snippet. The extraction logic is the same as for the title.

current_price_element = soup.select_one(".x-price-primary .ux-textspans")

current_price = current_price_element.text if current_price_element else "Not available"

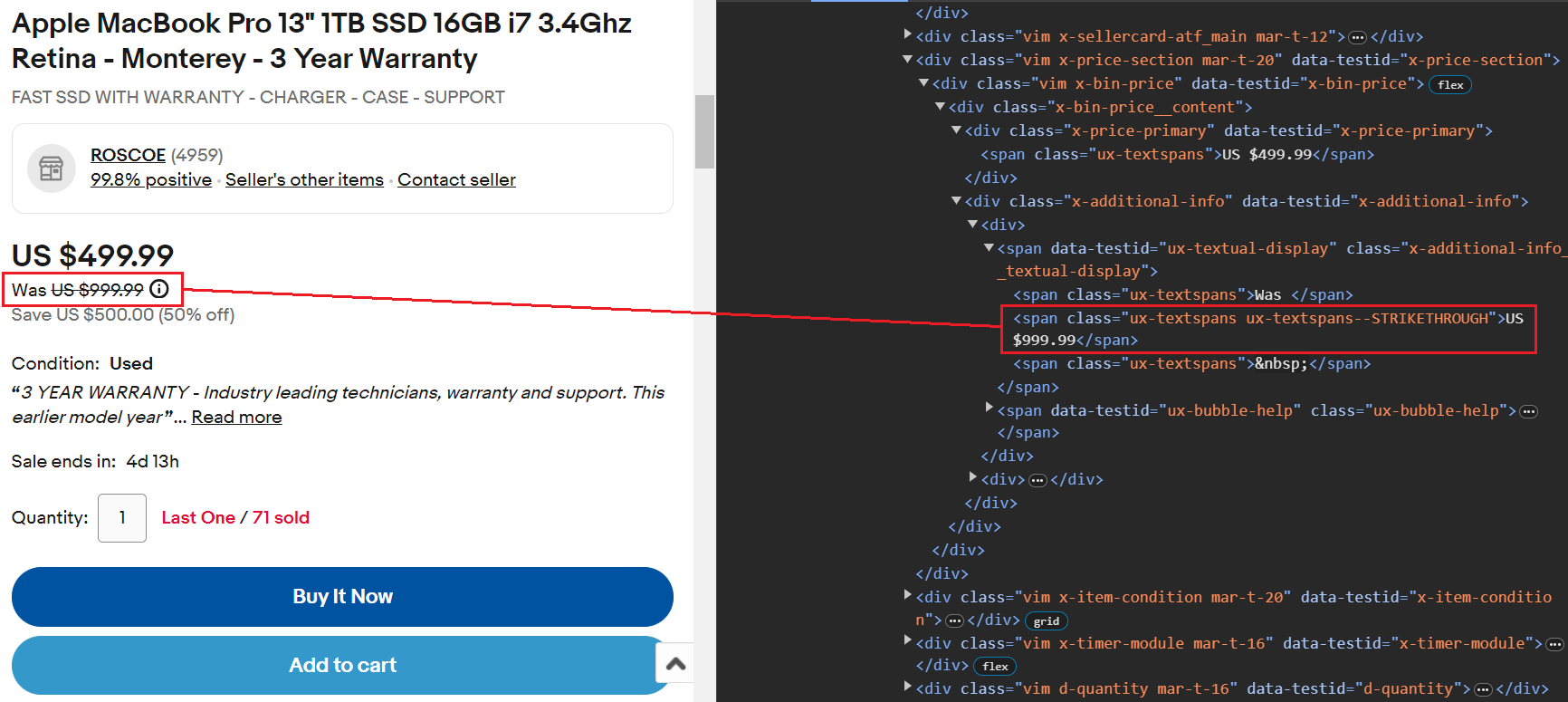

item_data["Current Price"] = current_priceExtract Actual Price

The struck-through value shows the original price (the price before the discount). You can extract it using the span tag's class, ux-textspans--STRIKETHROUGH.

Here’s the code snippet:

```python
original_price_element = soup.select_one(".ux-textspans--STRIKETHROUGH")
original_price = (
    original_price_element.text if original_price_element else "Not available"
)
item_data["Original Price"] = original_price
```

Extract Saving/Discount

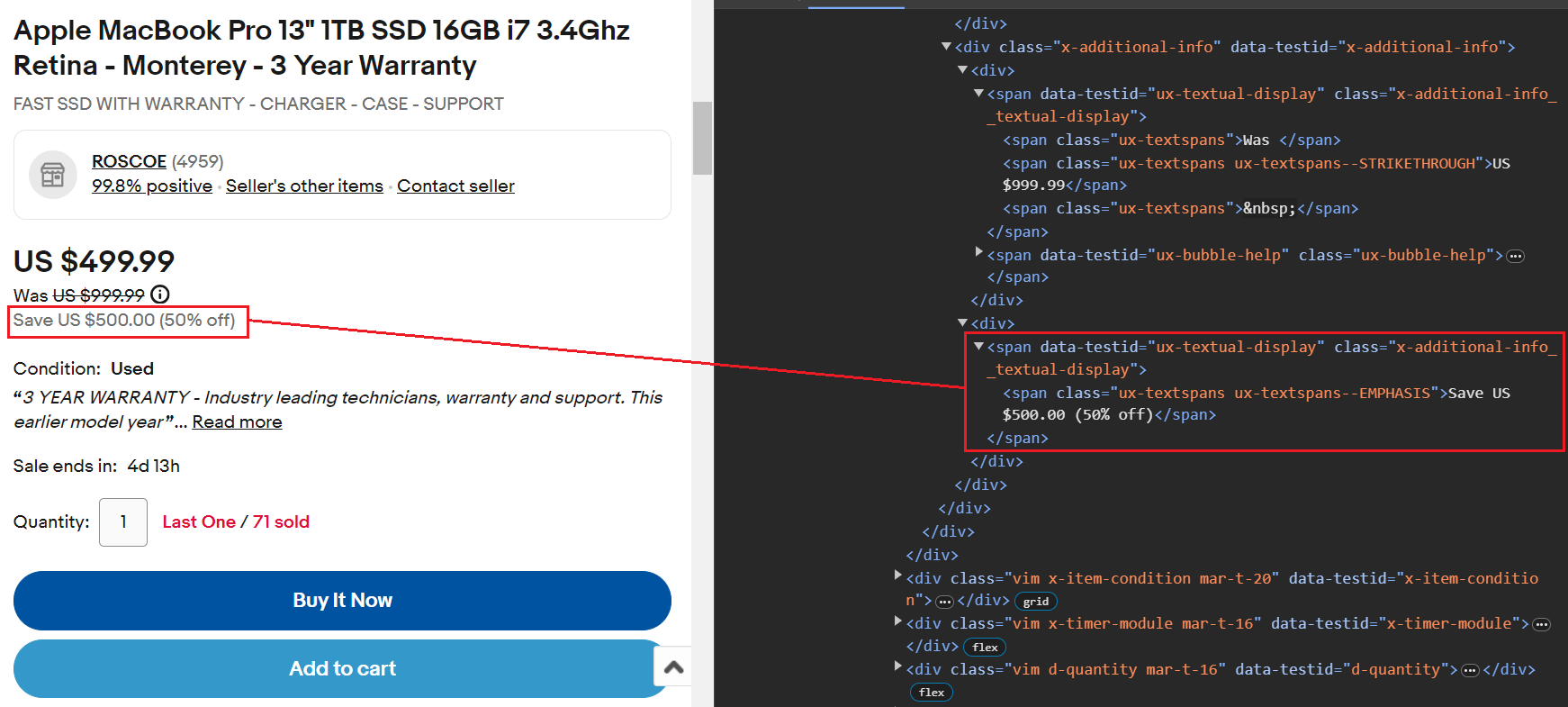

The discount is stored within a span tag with the class ux-textspans--EMPHASIS. This span tag is nested within a parent element that has the class x-additional-info__textual-display. Therefore, the appropriate selector to target this element would be .x-additional-info__textual-display .ux-textspans--EMPHASIS.

Here’s the code snippet:

```python
savings_element = soup.select_one(
    ".x-additional-info__textual-display .ux-textspans--EMPHASIS"
)
savings = savings_element.text if savings_element else "Not available"
item_data["Savings"] = savings
```

Extract Shipping Price

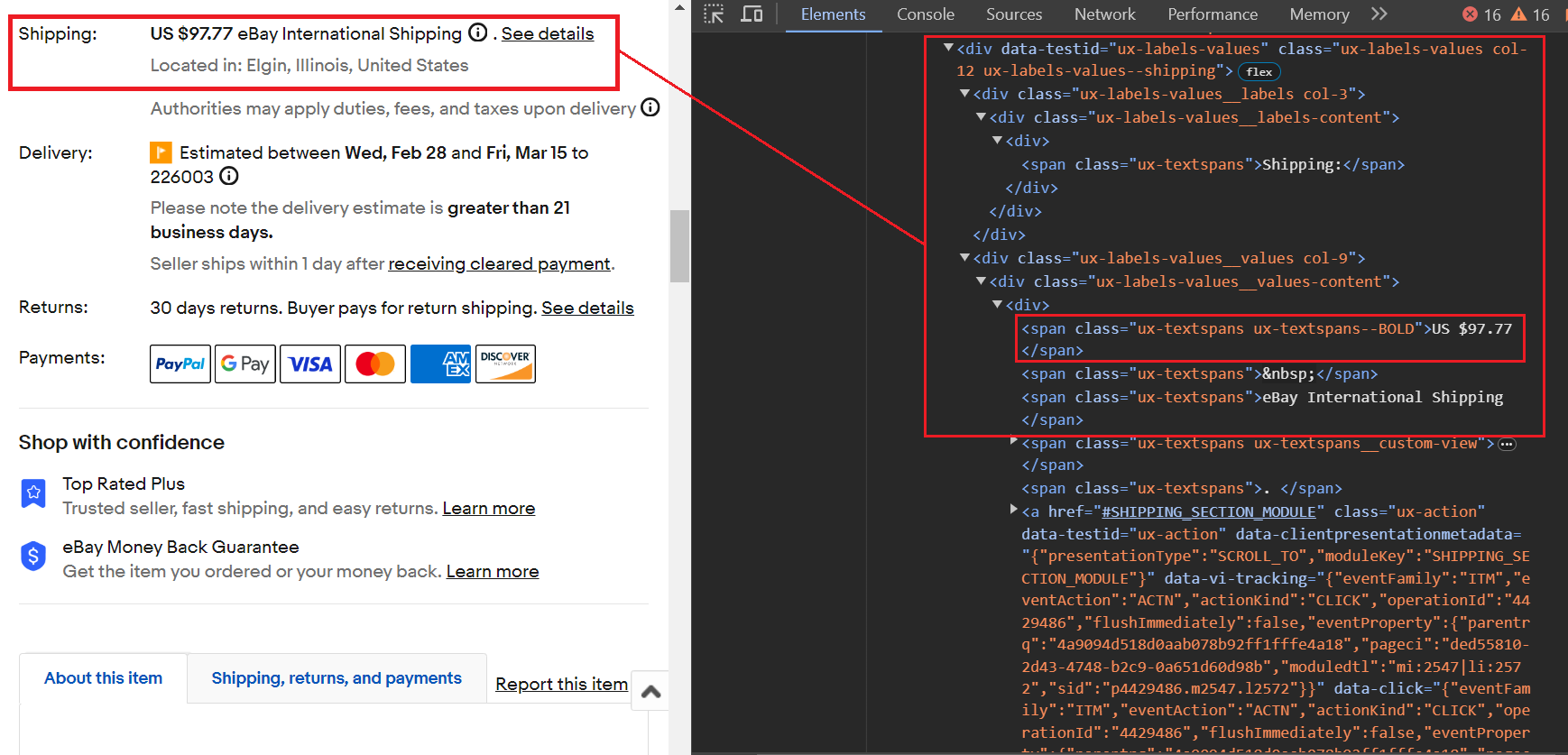

The shipping price is located within a span tag with the class ux-textspans--BOLD. Notably, this span tag is nested within various div tags, but we'll focus on the parent div tag that has the class ux-labels-values--shipping, as this div tag appears to have a unique class name. The scraping process involves these two steps:

- Use `soup.find('div', class_='ux-labels-values--shipping')` to find the div tag with the specified class name.

- Apply `.find('span', class_='ux-textspans--BOLD').text` to locate and extract the text content of the first matching span element with the class ux-textspans--BOLD within the identified div tag.

Here’s the code snippet:

shipping_parent_element = soup.find("div", class_="ux-labels-values--shipping")

shipping_info = (

"Free Shipping"

if not shipping_parent_element

else shipping_parent_element.find("span", class_="ux-textspans--BOLD").text

)

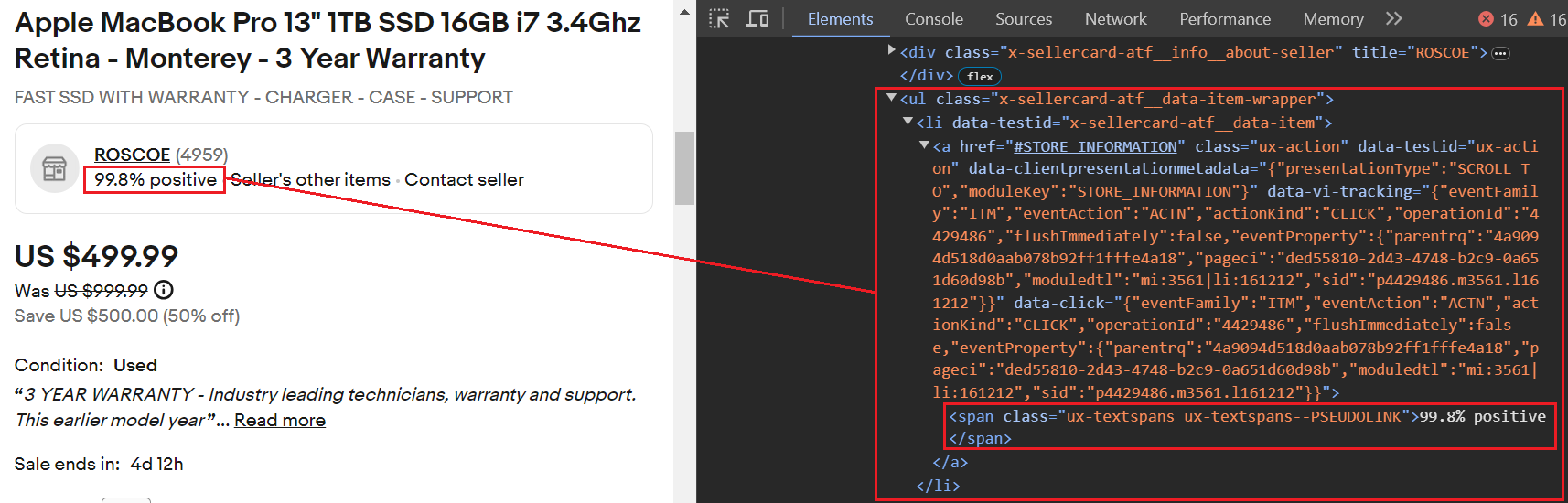

item_data["Shipping Price"] = shipping_infoExtract Seller Feedback

The seller feedback rating is located within an a tag, which is nested within an li tag whose data-testid attribute is set to x-sellercard-atf__data-item. This li tag, in turn, is contained within a ul tag with the class x-sellercard-atf__data-item-wrapper.

Thus, the selector to target this element is .x-sellercard-atf__data-item-wrapper li[data-testid="x-sellercard-atf__data-item"] a.

Here’s the code snippet:

```python
seller_feedback_element = soup.select_one(
    '.x-sellercard-atf__data-item-wrapper li[data-testid="x-sellercard-atf__data-item"] a'
)
seller_feedback = (
    seller_feedback_element.text if seller_feedback_element else "Not available"
)
item_data["Seller Feedback"] = seller_feedback
```

Extract Product Images

All of the images are located within individual button tags that share the common class name ux-image-grid-item. The parent container for these button tags is a div tag with the class ux-image-grid-container.

To access the images, follow these steps:

- Locate the div tag using the find() method.

- Apply the select() method with the CSS selector .ux-image-grid-item img to retrieve a list of all matching image elements.

Here's the code snippet. After extracting all the img tags, we keep only those that have a src attribute, since src holds the image URL.

```python
image_grid_container = soup.find("div", class_="ux-image-grid-container")
image_links = []

if image_grid_container:
    img_elements = image_grid_container.select(".ux-image-grid-item img")
    image_links = [img["src"] for img in img_elements if "src" in img.attrs]

item_data["Images"] = image_links
```

Export Data to JSON

You've now extracted all the data and stored it in the item_data dictionary. To save it to a JSON file, import the json module and use the json.dump() function.

```python
import json

filename = "product_info.json"
with open(filename, "w") as file:
    json.dump(item_data, file, indent=4)
```

Here’s the JSON file:

Complete Code

Below is the complete code for the product data extraction. All you need is the item ID.

```python
import json

import requests
from bs4 import BeautifulSoup


def fetch_ebay_item_info(item_id):
    url = f"https://www.ebay.com/itm/{item_id}"

    try:
        response = requests.get(url)
        response.raise_for_status()  # Raise an exception for bad requests
    except requests.exceptions.RequestException as e:
        print(f"Error: Unable to fetch data from eBay ({e})")
        return None

    soup = BeautifulSoup(response.text, "html.parser")
    item_data = {}

    try:
        current_price_element = soup.select_one(".x-price-primary .ux-textspans")
        current_price = (
            current_price_element.text if current_price_element else "Not available"
        )

        original_price_element = soup.select_one(".ux-textspans--STRIKETHROUGH")
        original_price = (
            original_price_element.text if original_price_element else "Not available"
        )

        savings_element = soup.select_one(
            ".x-additional-info__textual-display .ux-textspans--EMPHASIS"
        )
        savings = savings_element.text if savings_element else "Not available"

        shipping_parent_element = soup.find("div", class_="ux-labels-values--shipping")
        shipping_info = (
            "Free Shipping"
            if not shipping_parent_element
            else shipping_parent_element.find("span", class_="ux-textspans--BOLD").text
        )

        seller_feedback_element = soup.select_one(
            '.x-sellercard-atf__data-item-wrapper li[data-testid="x-sellercard-atf__data-item"] a'
        )
        seller_feedback = (
            seller_feedback_element.text if seller_feedback_element else "Not available"
        )

        item_title_element = soup.select_one(
            ".x-item-title__mainTitle .ux-textspans--BOLD"
        )
        item_title = item_title_element.text if item_title_element else "Not available"

        image_grid_container = soup.find("div", class_="ux-image-grid-container")
        image_links = []
        if image_grid_container:
            img_elements = image_grid_container.select(".ux-image-grid-item img")
            image_links = [img["src"] for img in img_elements if "src" in img.attrs]

        item_data["Title"] = item_title
        item_data["Current Price"] = current_price
        item_data["Original Price"] = original_price
        item_data["Savings"] = savings
        item_data["Shipping Price"] = shipping_info
        item_data["Seller Feedback"] = seller_feedback
        item_data["Images"] = image_links

        return item_data

    except Exception as e:
        print(f"Error: Unable to parse eBay data ({e})")
        return None


def save_to_json(item_data, filename="product_info.json"):
    if item_data:
        with open(filename, "w") as file:
            json.dump(item_data, file, indent=4)
        print(f"Success: eBay item information saved to {filename}")


def main():
    item_id = input("Enter the eBay item ID: ")
    item_info = fetch_ebay_item_info(item_id)
    if item_info:
        save_to_json(item_info)


if __name__ == "__main__":
    main()
```

Scraping eBay Search

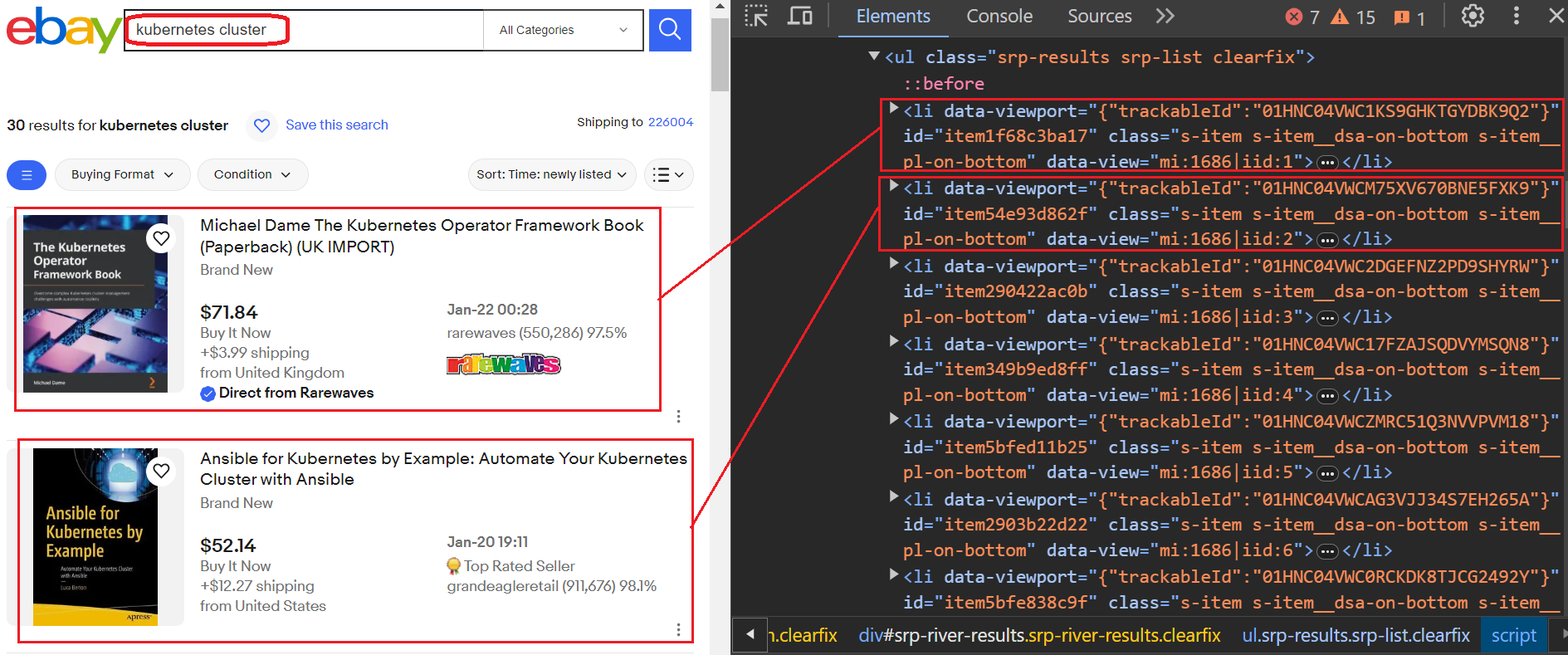

When you search for a keyword, eBay automatically redirects you to a specific URL containing your search results. For example, searching for ‘kubernetes cluster’ takes you to a URL similar to https://www.ebay.com/sch/i.html?_nkw=kubernetes+cluster&_sacat=0.

This URL uses several parameters to define your search query. Here are some of them:

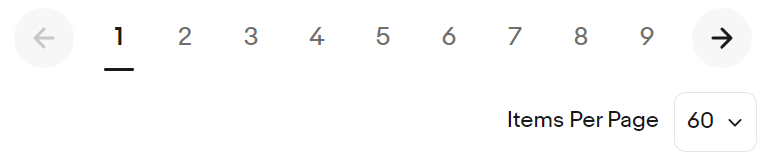

- _nkw: The search keyword itself.

- _sacat: Any category restriction you applied.

- _sop: The chosen sorting type, like "best match" or "newly listed."

- _pgn: The current page number of the search results.

- _ipg: The number of listings displayed per page (defaults to 60).

You’ll get several results for the search keyword. To successfully scrape the data, you have to go through all listings on the page and handle the pagination until you reach the last page.
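To request a specific results page directly, you can assemble the search URL from the parameters above with `urllib.parse.urlencode`. This is a minimal sketch: `build_search_url` is a hypothetical helper, and it uses only the parameter names listed above (the numeric sort code 12 for best match is the one used in the complete script later in this post).

```python
from urllib.parse import urlencode

BASE_URL = "https://www.ebay.com/sch/i.html"


def build_search_url(keyword, page=1, per_page=60, sort=None, category=None):
    """Assemble an eBay search URL from the query parameters described above."""
    params = {"_nkw": keyword, "_pgn": page, "_ipg": per_page}
    if sort is not None:
        params["_sop"] = sort  # numeric sort code, e.g. 12 for best match
    if category is not None:
        params["_sacat"] = category
    # urlencode turns spaces into '+' and percent-escapes other special characters
    return f"{BASE_URL}?{urlencode(params)}"


print(build_search_url("kubernetes cluster", page=2))
# https://www.ebay.com/sch/i.html?_nkw=kubernetes+cluster&_pgn=2&_ipg=60
```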

eBay Listings

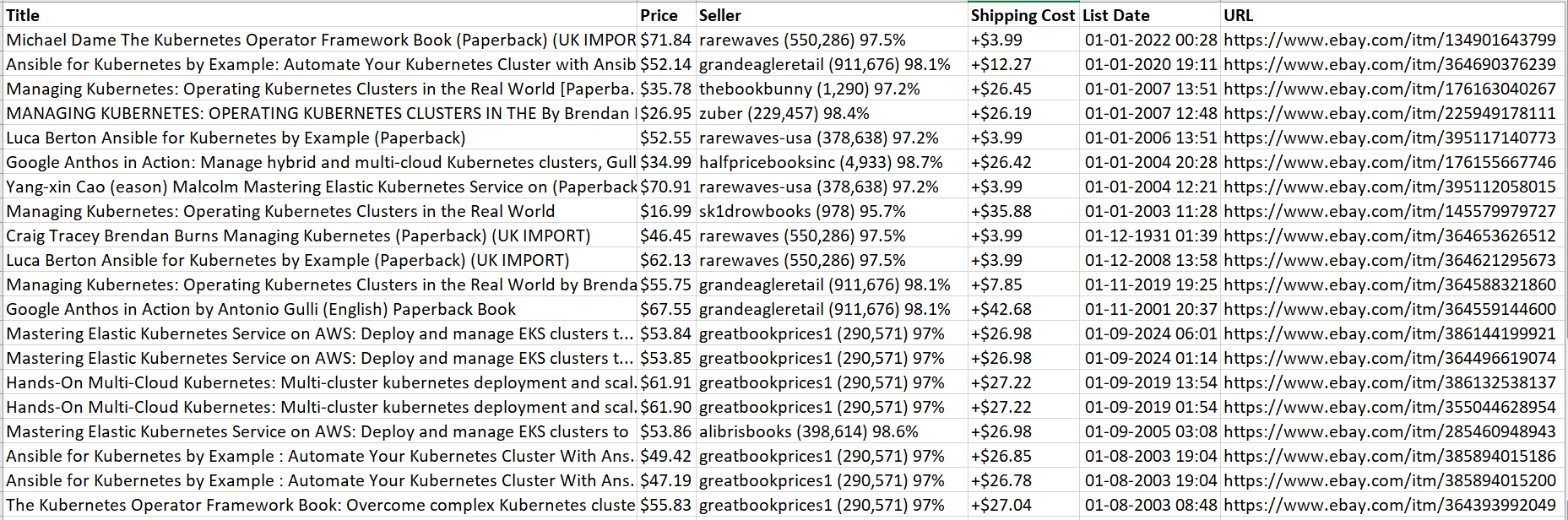

As you can see in the image above, searching for the keyword returns a list of results. Each result is contained within a separate li tag. To extract the desired information, we need to iterate through all these li tags and extract specific data from each. We'll be extracting the title, price, seller, shipping cost, product URL, and listing date.

Let's delve into how we can scrape product data from this list. Specifically, each product is wrapped in a li tag with the class s-item. We can use the find_all function to locate all these elements. This function will find all matching elements in the parse tree and return a list. Then, you can iterate through the list and pass each element individually to the extract_product_details function to extract its information.

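```python
for item in soup.find_all("li", class_="s-item"):
    extract_product_details(item)
```

Now you can extract each data point by passing the tag name and class name to find(). The title is inside the div tag with the class s-item__title. Similarly, the price is inside the span tag with the class s-item__price, the seller info is inside the span tag with the class s-item__seller-info-text, the shipping cost is inside the span tag with the class s-item__logisticsCost, the product link is the a tag with the class s-item__link, and the listing date is inside the span tag with the class s-item__listingDate.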

```python
def extract_product_details(item):
    title = item.find("div", class_="s-item__title")
    price = item.find("span", class_="s-item__price")
    seller = item.find("span", class_="s-item__seller-info-text")
    shipping = item.find("span", class_="s-item__logisticsCost")
    url = item.find("a", class_="s-item__link")
    list_date = item.find("span", class_="s-item__listingDate")
    # Each variable holds a Tag or None; the complete code converts them to text
```

Handling Pagination

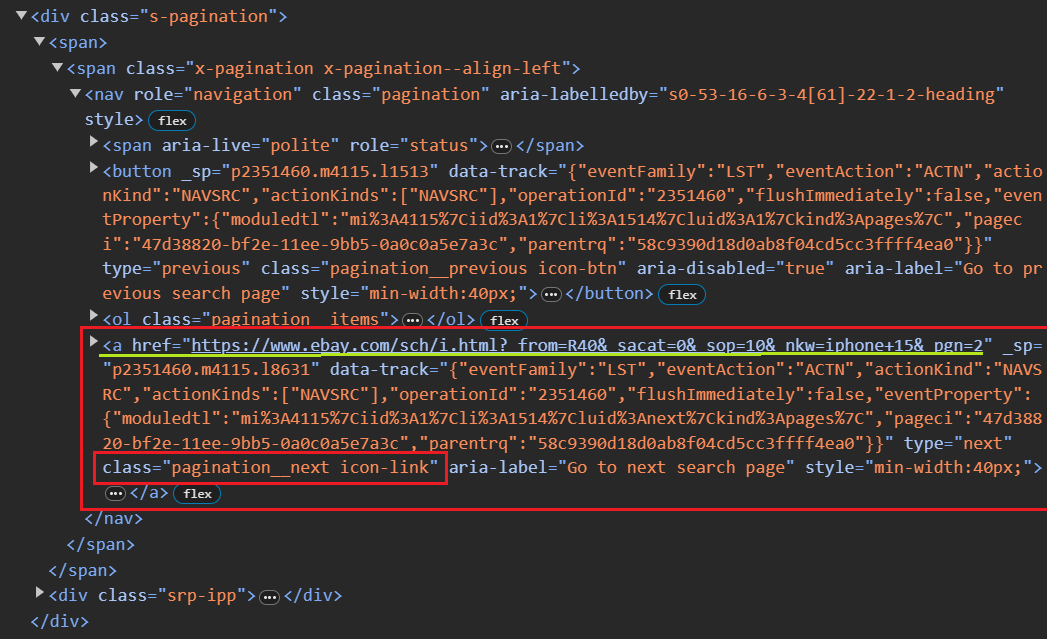

For a single page, scraping would be straightforward. However, eBay uses a numbered pagination system (consecutive page numbers in the URL). You can easily observe how the URL changes by clicking "Next": the _pgn parameter simply increments by one.
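Because only _pgn changes, you could also compute the next page's URL yourself instead of reading it from the page. This sketch (`next_page_url_by_pgn` is a hypothetical name) parses the query string, increments _pgn (treating a missing value as page 1), and rebuilds the URL. Unlike following the "Next" link, it can't tell when you've reached the last page, so you would still need a stop condition such as a page that returns no listings.

```python
from urllib.parse import parse_qs, urlencode, urlparse, urlunparse


def next_page_url_by_pgn(url):
    """Return a copy of url with the _pgn query parameter incremented by one."""
    parts = urlparse(url)
    query = parse_qs(parts.query, keep_blank_values=True)
    # parse_qs returns lists of values; a missing _pgn means page 1
    current_page = int(query.get("_pgn", ["1"])[0])
    query["_pgn"] = [str(current_page + 1)]
    # doseq=True expands the list values back into key=value pairs
    return urlunparse(parts._replace(query=urlencode(query, doseq=True)))


print(next_page_url_by_pgn("https://www.ebay.com/sch/i.html?_nkw=kubernetes+cluster&_sacat=0"))
# https://www.ebay.com/sch/i.html?_nkw=kubernetes+cluster&_sacat=0&_pgn=2
```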

To handle pagination, we can implement a while loop that continues until no next page is available. After scraping each page, you need to extract the URL of the next page. Below is a rough code snippet:

```python
import requests
from bs4 import BeautifulSoup


def extract_next_url(url):
    response = requests.get(url)
    soup = BeautifulSoup(response.text, "html.parser")
    # ... extract the products on this page here ...
    next_page_button = soup.select_one("a.pagination__next")
    return next_page_button["href"] if next_page_button else None


current_url = "https://www.ebay.com/sch/i.html?_nkw=kubernetes+cluster&_sacat=0"
while current_url:
    current_url = extract_next_url(current_url)  # None ends the loop
```

The extract_next_url() function uses a CSS selector to find the first anchor element with the class pagination__next, which typically represents the "next page" button. If such a button is found, it returns the value of its href attribute; otherwise it returns None, which ends the loop.
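A loop that blindly follows "next" links can run forever if a page ever links back to one you've already visited, and an unbounded crawl is more likely to get blocked. A defensive variant tracks visited URLs and caps the page count. This is a sketch: `follow_pages` and its `max_pages` limit are hypothetical, and `fetch_page` stands in for any function returning `(products, next_url)`, such as `extract_page_data` in the complete script below.

```python
def follow_pages(start_url, fetch_page, max_pages=50):
    """Collect products across pages; stop on a missing, repeated, or capped URL."""
    products, visited = [], set()
    url = start_url
    while url and url not in visited and len(visited) < max_pages:
        visited.add(url)
        page_products, url = fetch_page(url)
        products.extend(page_products)
    return products


# Offline check with a fake fetcher: page p2 links back to p1
fake_site = {"p1": (["a", "b"], "p2"), "p2": (["c"], "p1")}
print(follow_pages("p1", lambda u: fake_site[u]))  # ['a', 'b', 'c']
```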

That covers the core scraping logic. The complete script below ties these pieces together and stores everything in a CSV file.

Complete Code

The script lets the user enter a search query and a sorting preference ('best_match', 'ending_soonest', or 'newly_listed'), scrapes the eBay search results, extracts product details, and stores them in a CSV file.

```python
import os
from urllib.parse import urlencode

import pandas as pd
import requests
from bs4 import BeautifulSoup


def extract_product_details(item):
    title = item.find("div", class_="s-item__title")
    price = item.find("span", class_="s-item__price")
    seller = item.find("span", class_="s-item__seller-info-text")
    shipping = item.find("span", class_="s-item__logisticsCost")
    url = item.find("a", class_="s-item__link")
    list_date = item.find("span", class_="s-item__listingDate")

    return {
        "Title": title.text.strip() if title else "Not available",
        "Price": price.text.strip() if price else "Not available",
        "Seller": seller.text.strip() if seller else "Not available",
        "Shipping Cost": (
            shipping.text.strip().replace("shipping", "").strip()
            if shipping
            else "Not available"
        ),
        "List Date": list_date.text.strip() if list_date else "Date not found",
        # Drop the query string (tracking parameters) from the listing URL
        "URL": url["href"].split("?")[0] if url else "Not available",
    }


def extract_page_data(url):
    response = requests.get(url)
    soup = BeautifulSoup(response.text, "html.parser")
    products = [
        extract_product_details(item) for item in soup.find_all("li", class_="s-item")
    ]
    next_page_button = soup.select_one("a.pagination__next")
    next_page_url = next_page_button["href"] if next_page_button else None
    return products, next_page_url


def write_to_csv(products, filename="product_info.csv"):
    df = pd.DataFrame(products)
    # Write a header only when creating the file; append otherwise
    mode = "w" if not os.path.exists(filename) else "a"
    df.to_csv(filename, index=False, header=(mode == "w"), mode=mode)
    print(f"Data has been written to {filename}")


def make_request(query, sort, items_per_page=60):
    base_url = "https://www.ebay.com/sch/i.html?"
    query_params = {
        "_nkw": query,
        "_ipg": items_per_page,
        "_sop": SORTING_MAP[sort],
    }
    return base_url + urlencode(query_params)


def scrape_ebay(search_query, sort):
    current_url = make_request(search_query, sort, 240)
    total_products = []

    while current_url:
        products_on_page, next_page_url = extract_page_data(current_url)
        total_products.extend(products_on_page)
        current_url = next_page_url

    # Drop entries titled "Shop on eBay", which are not real listings
    total_products = [
        product for product in total_products if "Shop on eBay" not in product["Title"]
    ]
    write_to_csv(total_products)


SORTING_MAP = {
    "best_match": 12,
    "ending_soonest": 1,
    "newly_listed": 10,
}

user_search_query = input("Enter eBay search query: ")
sort = input("Choose one ('best_match', 'ending_soonest', 'newly_listed'): ")
scrape_ebay(user_search_query, sort)
```

Challenges with Scraping eBay

To make eBay crawling more efficient and effective, you should also keep in mind that eBay can detect bots, block IP addresses, and ultimately disrupt data flow. Therefore, when scaling up eBay crawling, you must be prepared to face the following challenges:

- CAPTCHAs: Significantly hinder smooth data extraction.

- IP blocking: eBay actively blocks suspicious IP addresses.

- Session timeouts: Continuous scraping requires maintaining active sessions to avoid gaps in data.

- Pagination: Efficiently navigating through paginated content can significantly improve scraping speed.

- Legal and ethical considerations: Respecting eBay's terms of service and user privacy is important.
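Plain requests code can't fully solve these challenges, but you can make a scraper gentler and more resilient. The sketch below uses requests' `HTTPAdapter` with urllib3's `Retry` to back off and retry on rate-limit (429) and server-error responses, sends a browser-like User-Agent, and pauses for a random interval between requests. The function names, the header value, the retry count, and the delay range are illustrative assumptions, not values documented by eBay; this does not solve CAPTCHAs and does not change what eBay's terms of service allow.

```python
import random
import time

import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_session(total_retries=3):
    """Create a Session that retries throttled or failed responses with backoff."""
    retry = Retry(
        total=total_retries,
        backoff_factor=1,  # exponentially growing sleep between retries
        status_forcelist=[429, 500, 502, 503, 504],
    )
    session = requests.Session()
    session.mount("https://", HTTPAdapter(max_retries=retry))
    # Example browser-like User-Agent; the exact value is an assumption
    session.headers.update({"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"})
    return session


def polite_get(session, url, min_delay=1.0, max_delay=3.0):
    """Sleep a random interval, then GET url with a timeout."""
    time.sleep(random.uniform(min_delay, max_delay))
    response = session.get(url, timeout=15)
    response.raise_for_status()
    return response
```

Create the session once and reuse it for every page so connections and cookies persist between requests.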


Scraping eBay with HasData API

Let's see how easily a web scraping API can extract product information from e-commerce websites with a single API call, without you having to set up proxies.

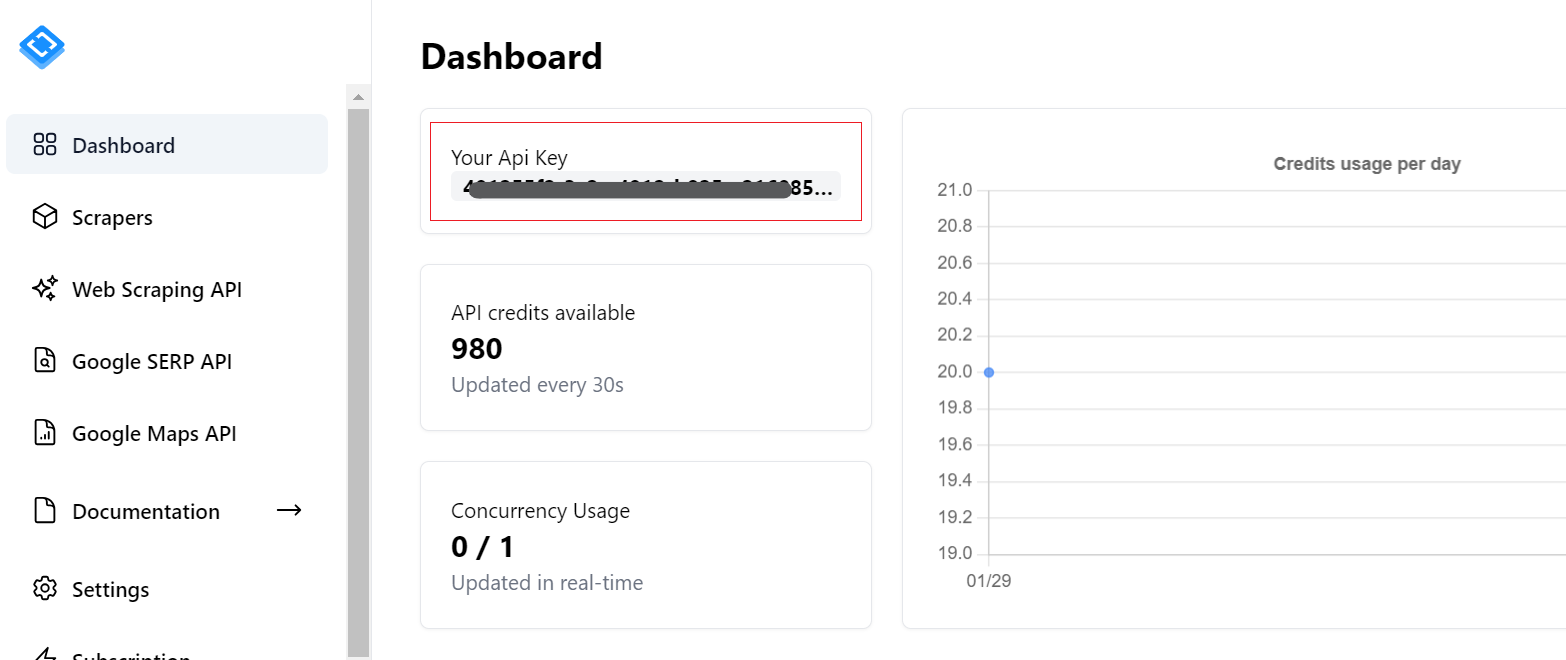

To get started, you need an API key, which you can find in your account after signing up on HasData. You'll also receive 1,000 free credits on registration to test our features.

Advantages of Using HasData for eBay Scraping

eBay, like most online platforms, has a negative view of bot scraping. Scraping large amounts of data in a short period of time can lead to increased website traffic, which can impact performance and availability. This can slow down response times for other users and lead to lost potential buyers. While not all scrapers harm the site during data collection, eBay may take steps to limit such bots if they are detected.

To limit bots, eBay uses measures such as CAPTCHAs and blocking suspicious IP addresses. These measures make scraping significantly more difficult, but they can be worked around. For example, you can integrate a CAPTCHA-solving service into your application and use proxies so that your real IP address doesn't get blocked. However, this requires you to either find free proxies, which are unreliable, or buy expensive proxies and configure them for rotation.
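For reference, here is roughly what rotating your own proxies looks like with requests, which accepts a `proxies` mapping on each request. This is a minimal sketch: the proxy URLs are placeholders you would replace with endpoints you actually have access to, and round-robin via `itertools.cycle` is just one simple rotation strategy.

```python
from itertools import cycle

import requests

# Placeholder endpoints (example.com is reserved); substitute real proxies
PROXY_POOL = cycle([
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
])


def next_proxies():
    """Return a requests-style proxies mapping for the next proxy in the pool."""
    proxy = next(PROXY_POOL)
    return {"http": proxy, "https": proxy}


def get_via_proxy(url):
    # Consecutive calls go out through different proxies, round-robin
    return requests.get(url, proxies=next_proxies(), timeout=15)
```

You would still need to detect and drop dead or banned proxies, which is part of the maintenance burden described above.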

To solve all of these problems at once, rather than working around them one by one (which can be costly), you can use a middleman service that collects the data for you and returns it ready to use. These middlemen are web scraping APIs. In this example, we'll look at how to improve our code with HasData's web scraping API, which handles common anti-scraping measures through block avoidance, CAPTCHA solving, JavaScript rendering, proxy usage, and more.

Implementing HasData API in Your Project

HasData lets users directly obtain targeted data in JSON format, simplifying the data extraction process and eliminating the need for HTML parsing. Here are the steps for implementing HasData API in your project:

- Specify the HasData API URL.

- Define the payload: This contains the request data in JSON format. It includes:

  - Target URL: The website address you want to scrape.

  - Extraction rules: These specify how to extract specific elements using CSS selectors. For example, `{"Title": ".product-title-css-selector"}` extracts the title element matching that CSS selector. You can define rules for various data points like price, seller, shipping cost, etc.

- Headers: These include information like your API key for authentication.

- Send a POST request with the API URL, headers, and payload.

- Store JSON Response: The response contains all the data points you defined in your extraction rules.

Have you noticed anything different in this approach? Yes! You don't need to parse the HTML yourself. Simply choose a name for the data point and provide the corresponding CSS selector for the data you want to extract. HasData handles the parsing and returns the data directly in JSON format.

Here's a simple example of integrating the HasData API into your project:

```python
import json

import pandas as pd
import requests


def extract_page_data(url):
    api_url = "https://api.hasdata.com/scrape"
    payload = json.dumps(
        {
            "url": url,
            "js_rendering": True,
            "extract_rules": {
                "Title": "div.s-item__title",
                "Price": "span.s-item__price",
                "Seller": "span.s-item__seller-info-text",
                "Shipping cost": "span.s-item__logisticsCost",
                "List Date": "span.s-item__listingDate",
                "URL": "a.s-item__link @href",
                "next": "a.pagination__next @href",
            },
            "proxy_type": "datacenter",
            "proxy_country": "US",
        }
    )
    headers = {
        # Put HasData API key here
        "x-api-key": "YOUR_API_KEY",
        "Content-Type": "application/json",
    }

    full_response = requests.request("POST", api_url, headers=headers, data=payload)
    data = json.loads(full_response.text)

    # The code expects each extraction rule's result to be a list of matches
    title_df = pd.DataFrame(
        data["scrapingResult"]["extractedData"].get("Title", []), columns=["Title"]
    )
    price_df = pd.DataFrame(
        data["scrapingResult"]["extractedData"].get("Price", []), columns=["Price"]
    )
    # ...the remaining fields follow the same pattern (see the complete code)
```

Here's the complete code for implementing the HasData API in your project.

```python
import json
import os
from urllib.parse import urlencode

import pandas as pd
import requests


def extract_page_data(url):
    api_url = "https://api.hasdata.com/scrape"
    payload = json.dumps(
        {
            "url": url,
            "js_rendering": True,
            "extract_rules": {
                "Title": "div.s-item__title",
                "Price": "span.s-item__price",
                "Seller": "span.s-item__seller-info-text",
                "Shipping cost": "span.s-item__logisticsCost",
                "List Date": "span.s-item__listingDate",
                "URL": "a.s-item__link @href",
                "next": "a.pagination__next @href",
            },
            "proxy_type": "datacenter",
            "proxy_country": "US",
        }
    )
    headers = {
        # Put HasData API key here
        "x-api-key": "YOUR_API_KEY",
        "Content-Type": "application/json",
    }

    full_response = requests.request("POST", api_url, headers=headers, data=payload)
    data = json.loads(full_response.text)
    extracted = data["scrapingResult"]["extractedData"]

    # One single-column DataFrame per rule, joined side by side by row position
    columns = ["Title", "Price", "Seller", "Shipping cost", "List Date", "URL"]
    products = pd.concat(
        [pd.DataFrame(extracted.get(col, []), columns=[col]) for col in columns],
        axis=1,
    )

    # Strip tracking parameters; missing URLs (NaN after concat) are left as-is
    products["URL_split"] = products["URL"].apply(
        lambda x: x.split("?")[0] if isinstance(x, str) else x
    )
    products = products.drop(columns=["URL"])

    next_page_url = extracted.get("next")
    next_page_url = next_page_url[0] if next_page_url else None
    return products, next_page_url


def make_request(query, sort, items_per_page=60):
    base_url = "https://www.ebay.com/sch/i.html?"
    query_params = {
        "_nkw": query,
        "_ipg": items_per_page,
        "_sop": SORTING_MAP[sort],
    }
    return base_url + urlencode(query_params)


def write_to_csv(products, filename="product_info.csv"):
    mode = "w" if not os.path.exists(filename) else "a"
    products.to_csv(filename, index=False, header=(mode == "w"), mode=mode)
    print(f"Data has been written to {filename}")


def scrape_ebay(search_query, sort):
    current_url = make_request(search_query, sort, 240)
    total_products = pd.DataFrame()

    while current_url:
        products_on_page, next_page_url = extract_page_data(current_url)
        if total_products.empty:
            total_products = pd.DataFrame(products_on_page)
        else:
            total_products = pd.concat(
                [total_products, products_on_page], ignore_index=True
            )
        current_url = next_page_url

    # Write once after the loop so earlier pages aren't appended repeatedly
    write_to_csv(total_products)


SORTING_MAP = {
    "best_match": 12,
    "ending_soonest": 1,
    "newly_listed": 10,
}

user_search_query = input("Enter eBay search query: ")
sort = input("Choose one ('best_match', 'ending_soonest', 'newly_listed'): ")
scrape_ebay(user_search_query, sort)
```

Conclusion

This tutorial guided you through scraping data elements from eBay. We used the requests library to download raw HTML and then parsed it with BeautifulSoup (BS4). We extracted the desired data from the parse tree using built-in methods and saved it to JSON and CSV files. Finally, to reduce the risk of being blocked, we used the HasData API, which handles proxies, CAPTCHA solving, and JavaScript rendering for you.