Constant data collection lets you stay updated on ongoing changes and make informed, timely decisions. As one of the most popular search engines, Google offers up-to-date, relevant information, whether you are searching for hotels or real estate agencies.

There are many ways to collect this data: gathering it manually, ordering a data package from a marketplace, using web scraping APIs, or creating custom scrapers.

What Can We Scrape from Google?

Google has many services where you can find the information you need: the search engine results page (SERP), maps, images, news, and more. By scraping Google, you can track your own and your competitors' rankings on the search results page. You can also gather lead information from Google Maps or use the data for other purposes.

Search results

One of the most requested tasks is extracting information from Google SERP, including titles, URLs, descriptions, and snippets. For example, scraping Google SERP is a good solution if you need to compile a list of resources on a particular topic or a list of service providers.

Google Maps

Google Maps is the second most common scraping target. By scraping maps, you can quickly gather information about businesses in a specific area and collect their contact information, reviews, and ratings.

Google News

News aggregators usually scrape Google News to keep up with current events. Google News provides access to news articles, headlines, and publication details, and scraping it can help you automate data collection.

Other Google services

Besides the ones mentioned before, Google has other services, such as Google Images and Google Shopping. They can also be scraped, although the information they provide may be less interesting for web scraping purposes.

Why Should We Scrape Google?

There are various reasons why people and companies scrape Google data. For example, researchers can use it to access a huge amount of data for academic research and analysis. Similarly, businesses can use the resulting data for market research, competitive intelligence, or attracting potential customers.

In addition, web scraping will also be helpful for SEO specialists. They can better analyze search engine rankings and trends with their collected data. And content creators can even use Google scraping to aggregate information and create valuable content.

How to scrape Google data

As we said, there are several ways to get the required data. We won't delve into manual data collection or buying a ready-made data set. Instead, we would like to discuss obtaining the desired data yourself. So, you have two options here:

- Use specialized scraping tools like programs, no-code scrapers, and plug-ins.

- Create your own scraper. You can build it from scratch, which means handling challenges such as bypassing blocks, solving CAPTCHAs, rendering JavaScript, and using proxies. Alternatively, you can use an API to automate the process and overcome these problems with a single solution.

Let's inspect each of these options.

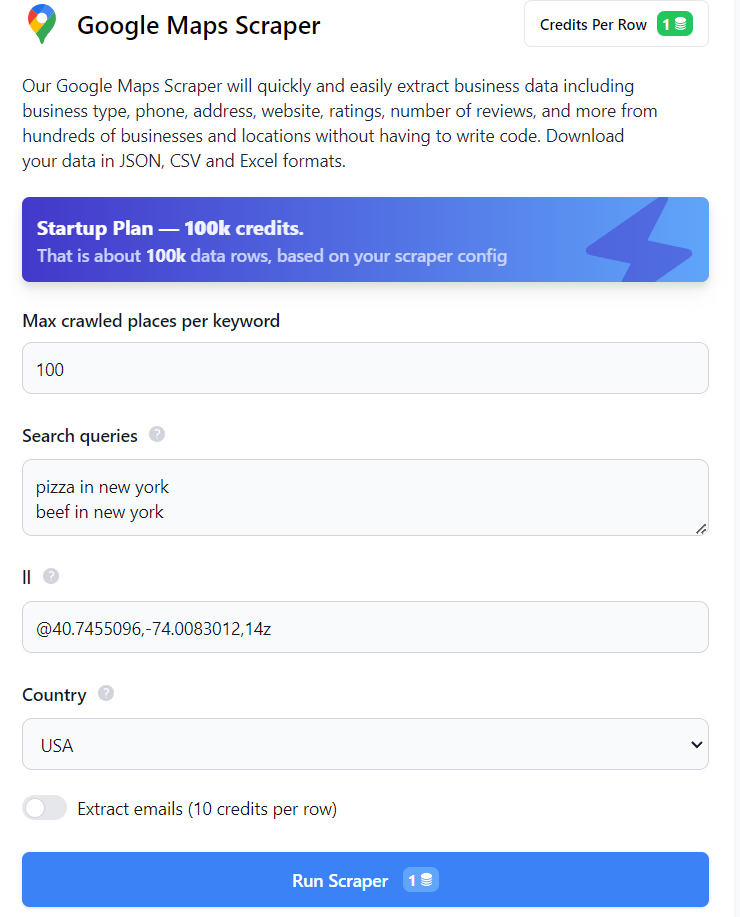

Using no-code Google Scraper

The easiest way to get data is to use Google Maps or Google SERP no-code scrapers. You don't need any programming knowledge or skills for this. To get data from Google SERP or Maps, sign up on our website and open the required no-code scraper. For example, to use Google Maps no-code scraper, just fill in the required fields and click the "Run Scraper" button.

After that, you can download the data in the desired format. In the same way, you can get data from Google SERP. You can also get additional data from Google services using tools like Google Trends or Google Reviews no-code scraper.

Build your own Google web scraping tool

The second option requires programming skills but allows you to create a flexible tool that fits your needs. To build your scraper, you can use a popular language or runtime such as NodeJS, Python, or R.

NodeJS offers several advantages compared to the alternatives. It has a wide range of npm packages that cover most tasks, including web scraping. Additionally, NodeJS is well-suited for dealing with dynamically generated pages like those on Google.

However, this approach also has its disadvantages. For example, if you scrape a website frequently, your actions may be regarded as suspicious, and the service may ask you to solve a CAPTCHA or even block your IP address. In this case, you will need to change your IP address regularly using a proxy.

When creating your scraper, it's essential to consider these potential challenges to create an effective tool.

Preparing for Scraping

Before starting web scraping, it is essential to make the necessary preparations. Let's begin by setting up the environment, installing the required npm packages, and researching the pages we intend to scrape.

Installing the environment

NodeJS is a runtime environment that allows you to execute JavaScript outside a web browser. Its main advantage is an asynchronous, event-driven architecture running on a single thread, which lets an application handle many network requests concurrently without blocking.

To start writing a scraper using NodeJS, let's install it and update npm. Download the latest stable version of NodeJS from the official website and follow the instructions to install it. To be sure that everything was successfully installed, you can run the following command:

node -v

You should get a string displaying the installed version of NodeJS. Now, let's proceed with updating npm.

npm install -g npm

With this done, we can move on to installing the libraries.

Installing the libraries

This tutorial will use Axios, Cheerio, Puppeteer, and HasData NPMs. We've already written about how to use Axios and Cheerio to scrape data, as they are great for beginners. Puppeteer is a more complex but also more functional NPM package. We also provide NPM packages based on our Google Maps and Google SERP APIs, so we will take a look at them too.

First, create a folder to store the project and open the command prompt in this folder (go to the folder, and in the address bar, type "cmd" and press enter). Then initialize npm:

npm init -y

This will create a package.json file where dependencies will be listed. Now you can install the libraries themselves:

npm i axios

npm i cheerio

npm i puppeteer puppeteer-core chromium

npm i @scrapeit-cloud/google-maps-api

npm i @scrapeit-cloud/google-serp-api

Now that the necessary elements are installed, let's examine the web pages we will be scraping. As examples, we have chosen the most popular ones: Google SERP and Google Maps.

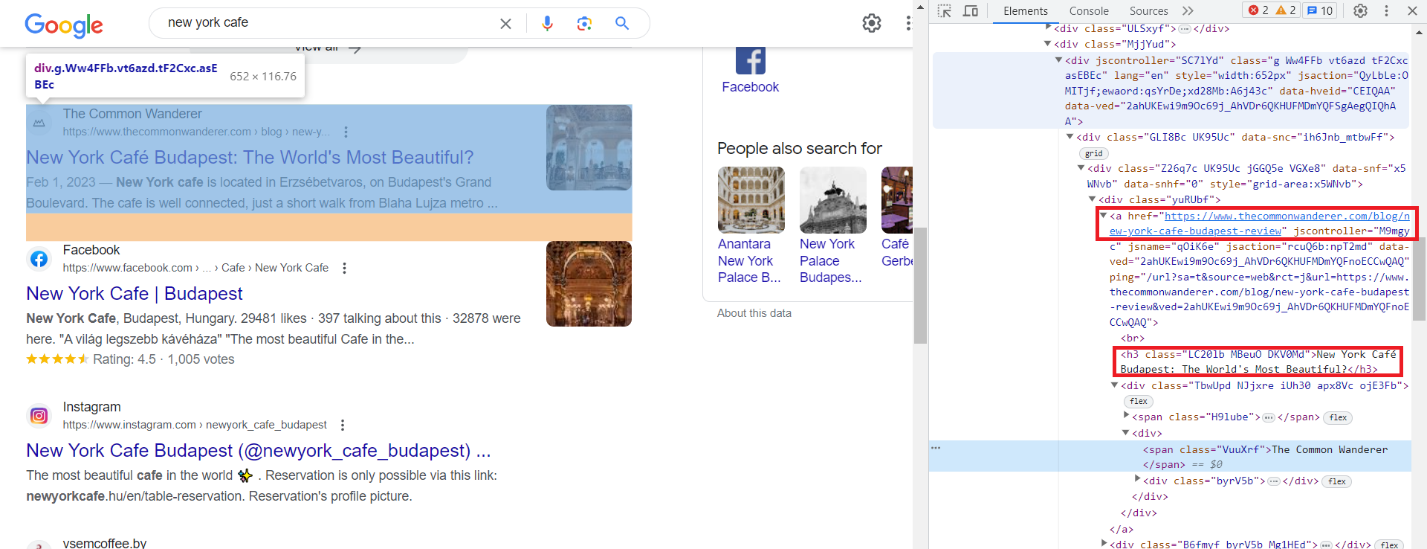

Google SERP page analysis

Let's use Google to search for "cafe new york" and find the results. Then, go to DevTools (F12 or right-click on the screen and select Inspect) and closely examine the items. As we can see, all the elements have automatically generated classes. Still, the overall structure remains constant, and the elements, apart from their changing classes, have a class called "g."

For example, we will retrieve the title and a link to the resource. The remaining elements will be scraped similarly so we won't focus on them.

The title is in the h3 tag, and the link is stored in the 'a' tag within the href attribute.

If you need more data, locate it on the page and in the code, then identify the patterns by which you can select a particular element.

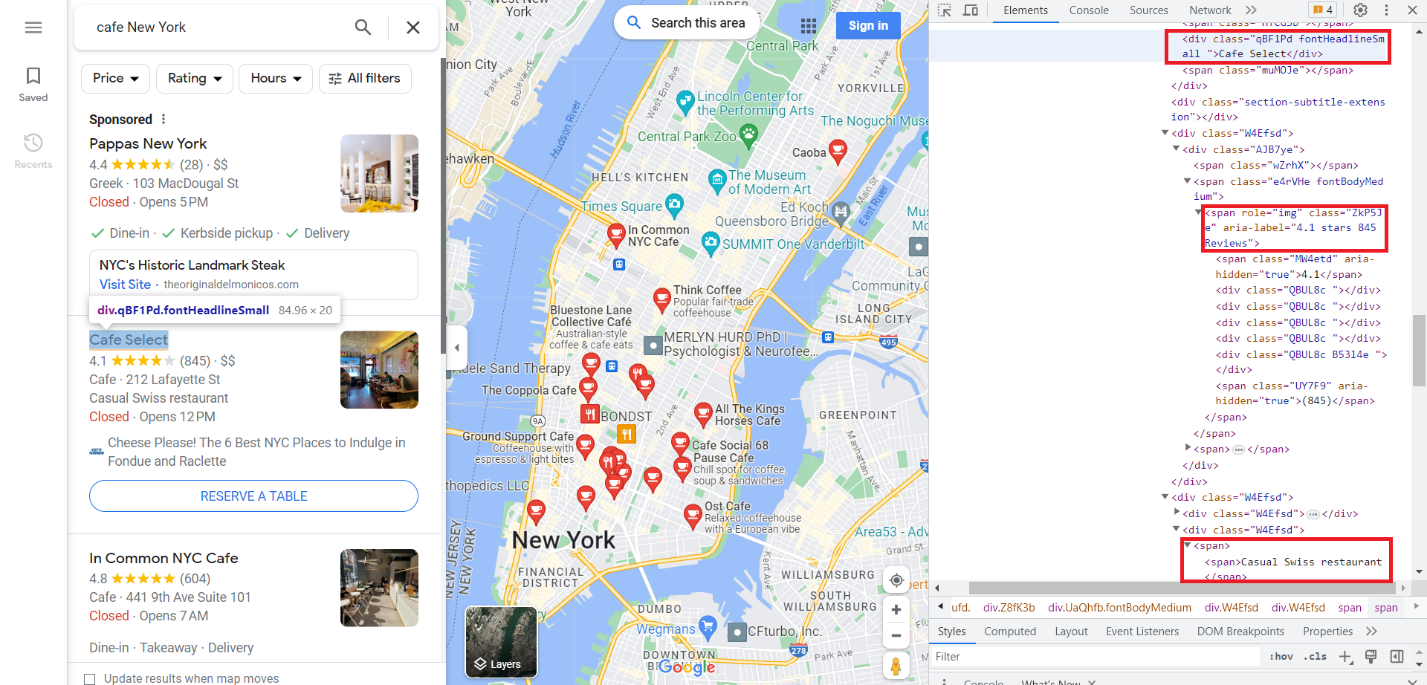

Google Maps page analysis

Now, let's do the same for Google Maps. Go to the Google Maps tab and open DevTools. This time let's get a title, rating, and description. You can get a vast amount of data from Google Maps. We'll show it with the example of scraping with the API. However, if you create a full-fledged scraper to collect all available data from Google Maps, prepare for long and hard work.

As we can see, the title is in the element with the class "fontHeadlineSmall", the rating is in the span tag with the attribute role="img", and the description is in a span tag.

Scraping Google SERP with NodeJS

Let's start by scraping Google SERPs. We analyzed the page earlier, so we just need to import the packages and use them to build the scraper.

Scrape Google SERP Using Axios and Cheerio

Let's import Axios and Cheerio packages. To do this, create a *.js file and write:

const axios = require('axios');

const cheerio = require('cheerio');

Now let's run a query to get the required data:

axios.get('https://www.google.com/search?q=cafe+in+new+york')

Remember, all elements we need have class "g". We have the HTML code of the page, so we need to process it and select all elements with class "g".

.then(response => {

const html = response.data;

const $ = cheerio.load(html);

console.log($.text())

const elements = $('.g');

After that, we only need to go through all the elements and select the title and link. Then we can display them on the screen.

elements.each((index, element) => {

const title = $(element).find('h3').text();

const link = $(element).find('a').attr('href');

console.log('Title:', title);

console.log('Link:', link);

console.log('');

});

})

.catch(error => console.error(error));

The code is ready to go. And it would work if Google's pages weren't dynamically generated. Unfortunately, even if we run this code, we won't get the desired data, because Axios only downloads the HTML and Cheerio only parses it; neither executes JavaScript. All we can do is print the HTML we receive and confirm that it consists of a set of scripts that generate the content when loaded in a browser.
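
One detail worth noting before moving on: the search URL above was written by hand, with `+` between the words. A minimal sketch of building it programmatically with the built-in `URL` class follows. The helper name `buildSearchUrl` and the optional `hl` and `num` parameters are assumptions added for illustration, not part of the original example.

```javascript
// Build a Google search URL from a plain-text query.
// `options` holds extra query parameters; `hl` and `num` below are
// illustrative assumptions, not values used elsewhere in this article.
function buildSearchUrl(query, options = {}) {
  const url = new URL('https://www.google.com/search');
  // searchParams takes care of encoding spaces and special characters
  url.searchParams.set('q', query);
  for (const [key, value] of Object.entries(options)) {
    url.searchParams.set(key, String(value));
  }
  return url.toString();
}

console.log(buildSearchUrl('cafe in new york'));
console.log(buildSearchUrl('cafe in new york', { hl: 'en', num: 20 }));
```

The same helper can feed either `axios.get(...)` or Puppeteer's `page.goto(...)`, so queries with spaces, ampersands, or non-Latin characters do not need manual escaping.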

Scrape Google SERP using Puppeteer

Let's use Puppeteer, which is great for working with dynamic pages. We will write a scraper that launches the browser, navigates to the desired page, and collects the necessary data. If you want to learn more about this npm package, the official Puppeteer documentation includes many usage examples.

Now connect the library and prepare the file for our scraper:

const puppeteer = require('puppeteer');

(async () => {

//Here will be the code of our scraper

})();

Now let's launch the browser and go to the desired page:

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.goto('https://www.google.com/search?q=cafe+in+new+york');

Then wait for the page to load and get all elements with the class "g".

await page.waitForSelector('.g');

const elements = await page.$$('.g');

Now let's go through all the elements one by one and extract a title and a link for each. Then display the data on the screen.

for (const element of elements) {

const title = await element.$eval('h3', node => node.innerText);

const link = await element.$eval('a', node => node.getAttribute('href'));

console.log('Title:', title);

console.log('Link:', link);

console.log('');

}

And at the end, do not forget to close the browser.

await browser.close();

And this time, if we run this script, we get the data we need.

D:\scripts>node serp_pu.js

Title: THE 10 BEST Cafés in New York City (Updated 2023)

Link: https://www.tripadvisor.com/Restaurants-g60763-c8-New_York_City_New_York.html

Title: Cafés In New York You Need To Try - Bucket Listers

Link: https://bucketlisters.com/inspiration/cafes-in-new-york-you-need-to-try

Title: Best Places To Get Coffee in New York - EspressoWorks

Link: https://espresso-works.com/blogs/coffee-life/new-york-coffee

Title: Caffe Reggio - Wikipedia

Link: https://en.wikipedia.org/wiki/Caffe_Reggio

...

Now that you know how to get the needed data, you can easily modify your script by changing the CSS selectors to target other elements.
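
When you adapt the selectors, keep in mind that `element.$eval` throws if nothing matches, and a block with class "g" may not always contain both a heading and an external link. One way to cope, sketched below under that assumption, is to collect raw values first and tidy them afterwards. The helper `cleanResults` is a hypothetical name introduced here, and the sample data is made up for the demonstration.

```javascript
// Tidy raw { title, link } pairs collected by a scraper:
// trim whitespace, drop incomplete entries, and remove duplicate links.
function cleanResults(rawResults) {
  const seen = new Set();
  const cleaned = [];
  for (const { title, link } of rawResults) {
    const cleanTitle = (title || '').trim();
    const cleanLink = (link || '').trim();
    // Skip blocks without a title or without an absolute http(s) link
    if (!cleanTitle || !cleanLink.startsWith('http')) continue;
    // Skip links that were already emitted
    if (seen.has(cleanLink)) continue;
    seen.add(cleanLink);
    cleaned.push({ title: cleanTitle, link: cleanLink });
  }
  return cleaned;
}

// Made-up sample input for illustration
const sample = [
  { title: ' Caffe Reggio - Wikipedia ', link: 'https://en.wikipedia.org/wiki/Caffe_Reggio' },
  { title: '', link: 'https://example.com/no-title' },
  { title: 'Duplicate', link: 'https://en.wikipedia.org/wiki/Caffe_Reggio' },
  { title: 'Relative link', link: '/search?q=cafe' },
];
console.log(cleanResults(sample));
```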

Scrape using Google SERP API

Scraping becomes even easier with the Google SERP API. To do this, just sign up on our website, copy the API key from your dashboard in the account, and make a GET request with the required parameters.

Let's start by specifying the API URL and the header where you need to insert your API key. The request itself uses the fetch function, which is built into NodeJS 18 and later, so no extra library is required.

// fetch is global in NodeJS 18+; on older versions, install node-fetch v2 and require it here

const url = 'https://api.hasdata.com/scrape/google?';

const headers = {

'x-api-key': 'YOUR-API-KEY'

};

Now let's specify the request settings. Only one of them is required, "q"; the rest are optional. You can find more information about the settings in our documentation.

const params = new URLSearchParams({

q: 'Coffee',

location: 'Austin, Texas, United States',

domain: 'google.com',

deviceType: 'desktop',

num: '100'

});

And finally, let's display the results in the console.

fetch(`${url}${params.toString()}`, { headers })

.then(response => response.text())

.then(data => console.log(data))

.catch(error => console.error(error));

The API is easy to use, and as a result, you get the same data without managing proxies or solving CAPTCHAs yourself.
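
The request above prints the raw response text. A small sketch of turning that text into a readable list is shown below. It assumes the body is JSON with an `organic` array whose items carry `position`, `title`, and `url`, mirroring the fields used with the SDK later in this article; check the actual response of your API version, since the field names here are an assumption. `summarizeOrganic` is a hypothetical helper name.

```javascript
// Convert a JSON response body into one line per organic result.
// Assumed shape: { organic: [{ position, title, url }, ...] }
function summarizeOrganic(body) {
  const parsed = JSON.parse(body);
  const organic = Array.isArray(parsed.organic) ? parsed.organic : [];
  return organic.map(item => `${item.position}. ${item.title} (${item.url})`);
}

// Made-up body used only to demonstrate the helper
const sampleBody = JSON.stringify({
  organic: [
    { position: 1, title: 'Coffee - Wikipedia', url: 'https://en.wikipedia.org/wiki/Coffee' },
  ],
});
summarizeOrganic(sampleBody).forEach(line => console.log(line));
```

In the fetch chain, this would replace the plain `console.log(data)` step, for example `.then(data => summarizeOrganic(data).forEach(line => console.log(line)))`.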

Scraping Google Maps using NodeJS

Now that we've covered scraping data from Google SERP, scraping data from Google Maps will be much easier for you since it's not very different.

Scrape Google Maps Using Axios and Cheerio

As we discussed before, it's not possible to scrape data from Google SERP or Maps using Axios and Cheerio. This is because the content is dynamically generated. If we try to scrape the data using these NPM packages, we will get an HTML document with scripts to generate the page content. Therefore, even if we write a script to scrape the required data, it won't work.

So, let's proceed to the Puppeteer library, which enables us to utilize a headless browser and interact with dynamic content. Using Puppeteer, we can overcome limitations and effectively scrape data from Google Maps.

Scrape Google Maps using Puppeteer

Let's use Google Maps this time to get a list of cafes in New York, their ratings, and the number of reviews. We'll use Puppeteer to launch a browser and navigate to the page to do this. This part is like the example of using Google SERP so we won't dwell on it. Also, close the browser and end the function.

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.goto('https://www.google.com/maps/search/cafe+near+New+York,+USA', { timeout: 60000 });

// Here will be information about data to scrape

await browser.close();

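})();

Now, all we need to do is identify the parent tag shared by all the elements and iterate through each one, gathering its title and its rating, which also includes the number of reviews.

// Wait until at least one listing has rendered before querying

await page.waitForSelector('div[role="article"]');

const elements = await page.$$('div[role="article"]');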

for (const element of elements) {

const title = await element.$eval('.fontHeadlineSmall', node => node.innerText);

const rating = await element.$eval('[role="img"][aria-label*=stars]', node => node.getAttribute('aria-label'));

console.log('Title:', title);

console.log('Rating:', rating);

console.log('');

}

As a result, we get a list of cafe names and their ratings.

D:\scripts>node maps_pu.js

Title: Victory Sweet Shop/Victory Garden Cafe

Rating: 4.5 stars 341 Reviews

Title: New York Booze Cruise

Rating: 3.0 stars 4 Reviews

Title: In Common NYC Cafe

Rating: 4.8 stars 606 Reviews

Title: Cafe Select

Rating: 4.1 stars 845 Reviews

Title: Pause Cafe

Rating: 4.6 stars 887 Reviews

...

Now that we have explored the libraries, let's see how easy it is to get all the data using our Google Maps API.
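
Before moving on, note that the rating arrives as a single string such as "4.5 stars 341 Reviews". A minimal sketch of splitting it into numbers follows. The function name `parseRatingLabel` is introduced here for illustration, and the pattern assumes the English label format shown in the output above; other interface languages would need a different pattern.

```javascript
// Split an aria-label like "4.5 stars 341 Reviews" into numeric fields.
// Returns null when the label does not match the expected format.
function parseRatingLabel(label) {
  const match = /([\d.]+)\s*stars?\s+([\d,]+)\s*reviews?/i.exec(label || '');
  if (!match) return null;
  return {
    rating: parseFloat(match[1]),
    // Remove thousands separators such as "1,234" before parsing
    reviews: parseInt(match[2].replace(/,/g, ''), 10),
  };
}

console.log(parseRatingLabel('4.5 stars 341 Reviews'));
console.log(parseRatingLabel('No reviews'));
```

Numeric values make it straightforward to sort or filter the listings, for example keeping only places with a rating above 4.5 and at least 100 reviews.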

Scrape using Google Maps API

To use the web scraping API, you will need your unique API key, which you can get for free after signing up on our website, along with a certain amount of free credits.

Now let's connect the library to our project.

const ScrapeitSDK = require('@scrapeit-cloud/google-serp-api');

Next, we will create a function to perform the HTTP request and add our API key. To avoid simply repeating the earlier examples, let's also add a try...catch block to catch errors that may occur during script execution.

(async() => {

const scrapeit = new ScrapeitSDK('YOUR-API-KEY');

try {

//Here will be a request

} catch(e) {

console.log(e.message);

}

})();

Now all that's left for us is to make a request to the HasData API and display the data on the screen.

const response = await scrapeit.scrape({

"keyword": "pizza",

"country": "US",

"num_results": 100,

"domain": "com"

});

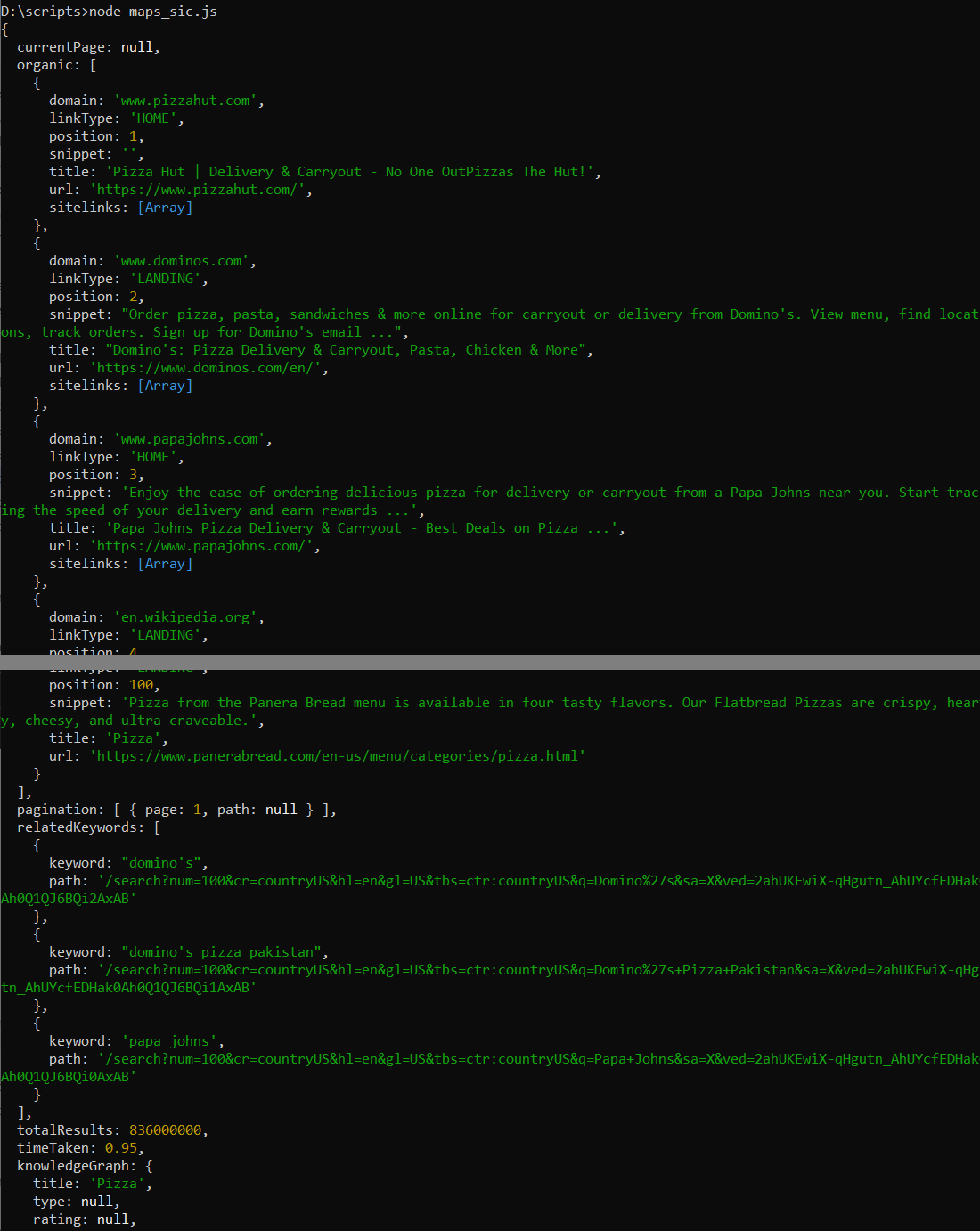

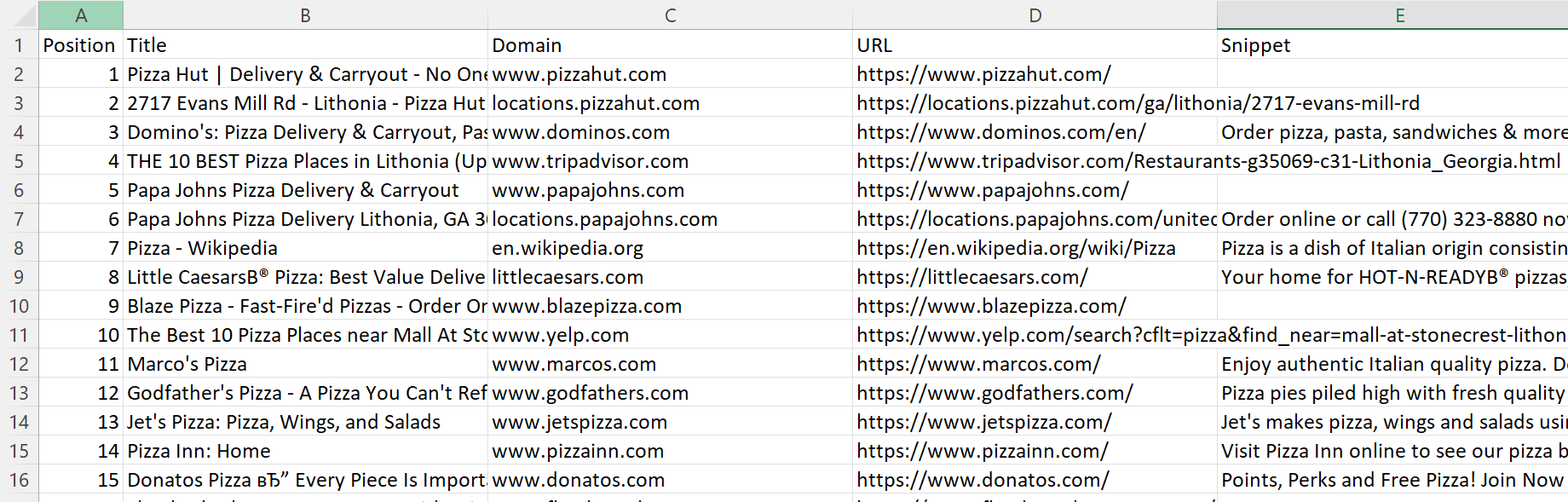

console.log(response);As a result, we will get a JSON response, which will display information on the first hundred items and some other information:

Since the previous process was too easy, and we have a large amount of data, let's additionally save it in a CSV file. To do this, we'll need to install "csv-writer" NPM package that we haven't installed before.

npm i csv-writer

Now, let's add the import of the npm package to the script.

const createCsvWriter = require('csv-writer').createObjectCsvWriter;

Now all we need to do is extend the try block. First, after the response is received, define the CSV writer with a semicolon delimiter.

const csvWriter = createCsvWriter({

path: 'scraped_data.csv',

header: [

{ id: 'position', title: 'Position' },

{ id: 'title', title: 'Title' },

{ id: 'domain', title: 'Domain' },

{ id: 'url', title: 'URL' },

{ id: 'snippet', title: 'Snippet' }

],

fieldDelimiter: ';'

});

Then extract the relevant data from the response:

const data = response.organic.map(item => ({

position: item.position,

title: item.title,

domain: item.domain,

url: item.url,

snippet: item.snippet

}));

And at the end, write the data to the CSV file and display the message about successful saving:

await csvWriter.writeRecords(data);

console.log('Data saved to CSV file.');

After running this script, we get a CSV file that contains detailed information about the first hundred positions returned for the keyword of interest.

Full code:

const ScrapeitSDK = require('@scrapeit-cloud/google-serp-api');

const createCsvWriter = require('csv-writer').createObjectCsvWriter;

(async() => {

const scrapeit = new ScrapeitSDK('YOUR-API-KEY');

try {

const response = await scrapeit.scrape({

"keyword": "pizza",

"country": "US",

"num_results": 100,

"domain": "com"

});

const csvWriter = createCsvWriter({

path: 'scraped_data.csv',

header: [

{ id: 'position', title: 'Position' },

{ id: 'title', title: 'Title' },

{ id: 'domain', title: 'Domain' },

{ id: 'url', title: 'URL' },

{ id: 'snippet', title: 'Snippet' }

],

fieldDelimiter: ';'

});

const data = response.organic.map(item => ({

position: item.position,

title: item.title,

domain: item.domain,

url: item.url,

snippet: item.snippet

}));

await csvWriter.writeRecords(data);

console.log('Data saved to CSV file.');

} catch(e) {

console.log(e.message);

}

})();

So, we can see that using the web scraping API makes extracting data from Google very easy.
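
The csv-writer package takes care of quoting for you. To make it clearer why that matters with a semicolon delimiter, here is a dependency-free sketch of how a single CSV line could be produced. `toCsvLine` is a hypothetical helper written for this illustration and is not part of csv-writer.

```javascript
// Join values into one CSV line, quoting any field that contains
// the delimiter, a double quote, or a line break.
function toCsvLine(fields, delimiter = ';') {
  return fields
    .map(value => {
      const text = value === null || value === undefined ? '' : String(value);
      const needsQuotes = text.includes(delimiter) || /["\r\n]/.test(text);
      // Inside a quoted field, a double quote is escaped by doubling it
      return needsQuotes ? `"${text.replace(/"/g, '""')}"` : text;
    })
    .join(delimiter);
}

console.log(toCsvLine(['Position', 'Title', 'Snippet']));
console.log(toCsvLine([1, 'Best pizza; ever', 'He said "hi"']));
```

Snippets scraped from search results often contain semicolons and quotes, so unquoted output would shift columns when the file is opened in a spreadsheet.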

Challenges in Web Scraping Google

Creating a scraper that gathers all the required data is not enough. It is also essential to ensure that it avoids difficulties during scraping. You should consider solving some challenges in advance and make provisions to bypass them if they arise.

Anti-Scraping Mechanisms

Many sites use different techniques to protect against bots, including scrapers. A constant stream of requests puts a heavy load on a site, so it starts responding with delays and may eventually fail. Therefore, sites take measures to limit frequent requests made by bots. One such measure is a trap (often called a honeypot): an element that is invisible on the page but present in its code. Following such an element can get you blocked or send you into a loop of non-existent pages.

CAPTCHAs

Another common way to protect against bots is to use a captcha. To get around this problem, you can use special services to solve a captcha or an API that returns ready-made data.
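
Whichever approach you choose, the scraper first has to notice that it has been challenged. The sketch below shows one possible heuristic. The signals it checks (HTTP status 429 or 403, a redirect to a URL containing `/sorry/`, and the phrase "unusual traffic" in the page) are assumptions based on commonly observed behavior and may change, and `looksBlocked` is a hypothetical helper name.

```javascript
// Heuristic check for a CAPTCHA or block page.
// All three signals are assumptions, not a documented contract.
function looksBlocked(status, finalUrl, html = '') {
  // Too Many Requests / Forbidden
  if (status === 429 || status === 403) return true;
  // Redirect to an interstitial page
  if (finalUrl.includes('/sorry/')) return true;
  // Wording typically shown on the challenge page
  return /unusual traffic/i.test(html);
}

console.log(looksBlocked(200, 'https://www.google.com/search?q=cafe', '<html>results</html>'));
console.log(looksBlocked(429, 'https://www.google.com/search?q=cafe'));
```

When the check returns true, a scraper would typically pause, switch to another proxy, or hand the request over to a CAPTCHA-solving service or an API.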

Rate Limiting

As we've said, too many queries can hurt the target site. In addition, bots send queries much faster than humans do, so services can relatively easily detect that a bot is at work.

For the safety of your scraper, and to avoid harming the target resource, it is worth limiting the scraping speed to no more than 30 requests per minute.
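
Thirty requests per minute corresponds to a pause of two seconds between requests. A minimal sketch of enforcing such a pause is shown below; the helper names `delayForRate` and `runThrottled` are introduced here for illustration, and each task stands for any function that performs one request.

```javascript
// Milliseconds to wait between requests for a given rate
function delayForRate(requestsPerMinute) {
  return Math.ceil(60000 / requestsPerMinute);
}

const sleep = ms => new Promise(resolve => setTimeout(resolve, ms));

// Run tasks one after another, pausing between them.
// Each task is a function that returns a value or a promise.
async function runThrottled(tasks, requestsPerMinute) {
  const delay = delayForRate(requestsPerMinute);
  const results = [];
  for (let i = 0; i < tasks.length; i++) {
    if (i > 0) await sleep(delay); // no pause before the first task
    results.push(await tasks[i]());
  }
  return results;
}

console.log(delayForRate(30));
```

With Puppeteer, a task could be `() => page.goto(url)` for each URL in a list. Adding a small random jitter to the delay is a common refinement, since perfectly regular intervals are themselves a bot signal.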

Changing HTML Structure

One more challenge during web scraping is the changing structure of the site. You saw it earlier with the example of Google. The class names and elements are generated automatically, making it more difficult to get the data. However, the structure of the site remains unchanged. The key is to analyze it accurately and use it effectively.
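
One way to make a scraper more tolerant of such changes is to try several selectors in order and take the first one that yields a value. The sketch below illustrates the idea. `firstMatch` is a hypothetical helper, the fallback selector `[role="heading"]` is an example rather than a confirmed Google selector, and a lookup table stands in for a real page so the snippet runs on its own.

```javascript
// Try each selector in order and return the first non-empty value.
// `query` is any function that maps a selector to a string (or null),
// so the helper works with Cheerio, Puppeteer, or a plain lookup table.
function firstMatch(selectors, query) {
  for (const selector of selectors) {
    const value = query(selector);
    if (typeof value === 'string' && value.trim() !== '') {
      return value.trim();
    }
  }
  return null;
}

// Demonstration with a stand-in lookup table instead of a real page
const fakePage = { '[role="heading"]': '  Caffe Reggio  ' };
const title = firstMatch(['h3', '[role="heading"]'], s => fakePage[s] || null);
console.log(title);
```

With Cheerio, the query function could be `s => $(element).find(s).first().text()`, which returns an empty string when nothing matches and therefore falls through to the next selector.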

IP Blocking

IP blocking is another way to protect against bots and spam. If your actions appear suspicious to a website, you may be blocked. That's why you'll likely need to use a proxy. A proxy acts as an intermediary between you and the destination website. It allows you to access even those resources blocked in your country or specifically for your IP address.
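
If you have several proxies available, a simple strategy is to rotate through them in turn. The sketch below shows round-robin rotation. The proxy addresses are placeholders, and `createProxyRotator` is a hypothetical helper introduced for this example.

```javascript
// Return a function that hands out proxies in round-robin order
function createProxyRotator(proxies) {
  if (!Array.isArray(proxies) || proxies.length === 0) {
    throw new Error('At least one proxy is required');
  }
  let index = 0;
  return function nextProxy() {
    const proxy = proxies[index];
    index = (index + 1) % proxies.length; // wrap around at the end
    return proxy;
  };
}

// Placeholder proxy addresses, for illustration only
const nextProxy = createProxyRotator([
  'http://proxy-a.example.com:8080',
  'http://proxy-b.example.com:8080',
]);

console.log(nextProxy());
console.log(nextProxy());
console.log(nextProxy());
```

With Puppeteer, the selected address can be passed to the browser through Chromium's `--proxy-server` flag, for example `puppeteer.launch({ args: ['--proxy-server=' + nextProxy()] })`.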

Conclusion and takeaways

Scraping data from Google can be complex or easy, depending on the tools chosen. Due to challenges such as JavaScript rendering and strong bot protection, developing a custom scraper can be quite difficult.

Furthermore, today's examples demonstrated that not all libraries are suitable for scraping Google SERP and Google Maps. Despite this, we also showed how to overcome these challenges. For instance, to handle dynamically generated content, we used the Puppeteer npm package. Additionally, for beginners or those who prefer to save the time and effort of handling CAPTCHAs and proxies, we showed how to use a web scraping API to extract the desired data.