How Can Recruiters Benefit from Web Scraping?

Finding the talent your company needs is essential to success, but it can be time-consuming and tedious: there are many agencies and candidates to check and many databases to search through. Luckily, scrapers can automate some of this work.

The data scraping technique is a time-saving and cost-effective way to find the most relevant job postings. It is also a good way for individual job seekers to get all the information they need about a company or institution.

Companies and institutions also use this method to determine which jobs are in demand on the job market, what skills are needed, what salary to offer potential candidates, and so on.

A web scraping service helps extract information from different sources such as social media, job boards, and other websites. The extracted data can then be analyzed with various analytical tools to determine what type of person would be best suited for a position. Continuous data analysis is a fundamental process that helps companies make decisions and improve their efficiency.
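As a small illustration of that kind of analysis, here is a sketch that turns scraped salary snippets into a median annual figure, which speaks to the question of what salary to offer. The snippet format ("$60,000 - $80,000 a year") and the sample values are assumptions for illustration; real job boards format salaries in many ways, and this sketch simply ignores anything that is not an annual amount.

```python
import re
import statistics
from typing import List, Optional

# Matches dollar amounts such as "$60,000" or "$85,500.50" (assumed snippet format).
AMOUNT = re.compile(r"\$([\d,]+(?:\.\d+)?)")


def annual_midpoint(snippet: str) -> Optional[float]:
    """Return the midpoint of an annual salary snippet, or None if it is not annual."""
    if "a year" not in snippet:
        return None  # hourly/monthly snippets are skipped in this simplified sketch
    amounts = [float(a.replace(",", "")) for a in AMOUNT.findall(snippet)]
    if not amounts:
        return None
    # A single value is its own midpoint; a range uses (low + high) / 2.
    return (min(amounts) + max(amounts)) / 2


def median_annual_salary(snippets: List[str]) -> Optional[float]:
    """Median of the annual midpoints across all parseable snippets."""
    values = [v for s in snippets if (v := annual_midpoint(s)) is not None]
    return statistics.median(values) if values else None


# Hypothetical scraped snippets.
snippets = [
    "$60,000 - $80,000 a year",
    "$95,000 a year",
    "$30 an hour",
    "$70,000 - $90,000 a year",
]
print(median_annual_salary(snippets))  # 80000.0
```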

With web scraping tools, you can quickly and efficiently search:

- New employees;

- Companies operating in target industries;

- Specific profiles that match your criteria.

In the last case, the criteria can be very specific. For example, web scraping makes it easy to compile a list of all potential employees who live in New York, have more than five years of experience, and are currently looking for a job.
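Once the profiles are scraped, the filtering step in that example takes only a few lines. This is a minimal sketch: the `Candidate` fields and sample records are hypothetical, and a real scraper would have to map each site's profile data onto them.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    # Hypothetical fields for a record scraped from a job board profile.
    name: str
    city: str
    years_experience: float
    open_to_work: bool


def shortlist(candidates, city="New York", min_years=5):
    """Keep candidates in `city` with more than `min_years` of experience who are looking for a job."""
    return [
        c for c in candidates
        if c.city == city and c.years_experience > min_years and c.open_to_work
    ]


candidates = [
    Candidate("Smith", "New York", 7, True),
    Candidate("Jones", "New York", 3, True),   # too little experience
    Candidate("Brown", "Boston", 10, True),    # wrong city
    Candidate("Lee", "New York", 6, False),    # not looking for a job
]
print([c.name for c in shortlist(candidates)])  # ['Smith']
```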

Let's say you need to find a C++ developer with ten years of experience, a portfolio, and several projects on GitHub who is actively looking for a job.

To do this, you first need to find all potential employees who are currently looking for a job. Monster, Indeed, and LinkedIn are suitable for this. Search results can be crawled with a scraper and summarized in tables that are convenient to analyze later. Let's say we are only interested in the last name, profile link, work experience, current job availability, and GitHub link.
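A minimal sketch of the "summarize in a table" step, using Python's standard `csv` module. The column names and the sample record are hypothetical; they simply mirror the five fields listed above. Any extra fields a scraper returns (such as a profile headline) are dropped.

```python
import csv
import io

# Hypothetical column names mirroring the fields we care about.
FIELDS = ["last_name", "profile_url", "years_experience", "open_to_work", "github_url"]


def records_to_csv(records, stream):
    """Write scraped candidate records (dicts) as a CSV table to a text stream."""
    # extrasaction="ignore" silently drops keys that are not in FIELDS.
    writer = csv.DictWriter(stream, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for record in records:
        writer.writerow(record)


records = [
    {
        "last_name": "Smith",
        "profile_url": "https://example.com/in/smith",
        "years_experience": 11,
        "open_to_work": True,
        "github_url": "https://github.com/smith-example",
        "headline": "C++ Developer",  # extra field, not written to the table
    },
]
buffer = io.StringIO()
records_to_csv(records, buffer)
print(buffer.getvalue())
```

To save the table to disk instead, pass a file opened with `open("candidates.csv", "w", newline="")`.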

Once the table is filtered by work experience, job availability, and GitHub link, you can move on to analyzing the candidates' projects. To do this, follow each candidate's GitHub link and scrape data about their projects. This is enough to understand what a person is interested in, their level of knowledge and skills, and which programming languages they write in.
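For GitHub specifically, project data is also available through GitHub's public REST API, so a sketch of this step can skip HTML scraping. It assumes the candidate's GitHub username has already been extracted from the profile link. `fetch_repos` calls the `GET /users/{username}/repos` endpoint (unauthenticated requests are rate-limited, and only the first 100 repositories are fetched here). `language_profile` is a hypothetical helper that counts each repository's primary language and skips forks. A repository's primary language is a rough signal, not a measure of skill.

```python
import json
import urllib.request
from collections import Counter


def fetch_repos(username):
    """Fetch up to 100 public repositories of a user via GitHub's REST API."""
    url = f"https://api.github.com/users/{username}/repos?per_page=100"
    request = urllib.request.Request(url, headers={"Accept": "application/vnd.github+json"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def language_profile(repos):
    """Count the primary language of each non-fork repository."""
    return Counter(
        repo["language"]
        for repo in repos
        if not repo.get("fork") and repo.get("language")
    )


# Sample data in the shape the API returns (only the fields used here).
sample = [
    {"name": "engine", "language": "C++", "fork": False},
    {"name": "tools", "language": "Python", "fork": False},
    {"name": "renderer", "language": "C++", "fork": False},
    {"name": "forked-lib", "language": "Rust", "fork": True},   # skipped: fork
    {"name": "notes", "language": None, "fork": False},         # skipped: no language
]
print(language_profile(sample).most_common())  # [('C++', 2), ('Python', 1)]
```

With network access, `language_profile(fetch_repos("some-username"))` runs the same analysis on live data.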

And the best part is that all of this information can be obtained automatically. With such data in hand, you can learn a lot about a candidate.

Search For The Most Qualified Candidates

Before collecting this information, you need to decide how to obtain it. There are several ways to get such data automatically:

- Buying ready-made databases compiled to specific criteria. However, such databases usually have to be paid for, and there is no guarantee that they are high-quality or complete.

- Using ready-made scraping tools. This option is cheaper than the previous one and lets you decide what data to scrape, but it is not flexible, so such tools may not work on sites with a complex structure.

- Developing your own data collection application from scratch. In that case, however, you must take measures against IP blocks, find proxies, connect CAPTCHA-solving services, and much more.

- Creating an internal data collection infrastructure with web scraping APIs, which handle all the typical web scraping problems listed in the previous item.

To decide which option suits you, it is worth understanding their advantages and disadvantages. Buying ready-made databases is the easiest and most expensive option. In addition, you are not the only one with access to the purchased data: the seller can resell the same database many times. This also calls its relevance into question.

Ready-made scraping tools, as mentioned above, are cheaper but less flexible. As a rule, you can only obtain data in the format chosen by their developers, which is not always convenient.

Developing your own application is a good solution, but only for those with extensive programming knowledge or a staff of programmers and analysts. Its advantage is that the application does not depend on any third party. The downside is that you then have to deal with bypassing blocks yourself.

The last option is to create an internal job scraping infrastructure. It is the optimal choice and lets you get up-to-date data at low cost without worrying about proxies and blocking.

The first two options don’t need full explanations. Regarding the third one, we have some articles:

- An Introduction to Scraping Websites with Axios and Cheerio

- Web Scraping with C#

- Web Scraping with Python: from Fundamentals to Practice

- Web Scraping Using Selenium Python

Therefore, in this article, we will focus on the last option.

Collecting HR Data with Web Scraping API

To use the web scraping API, register for our service. After that, you will receive 1,000 free API credits to test the service for a month.

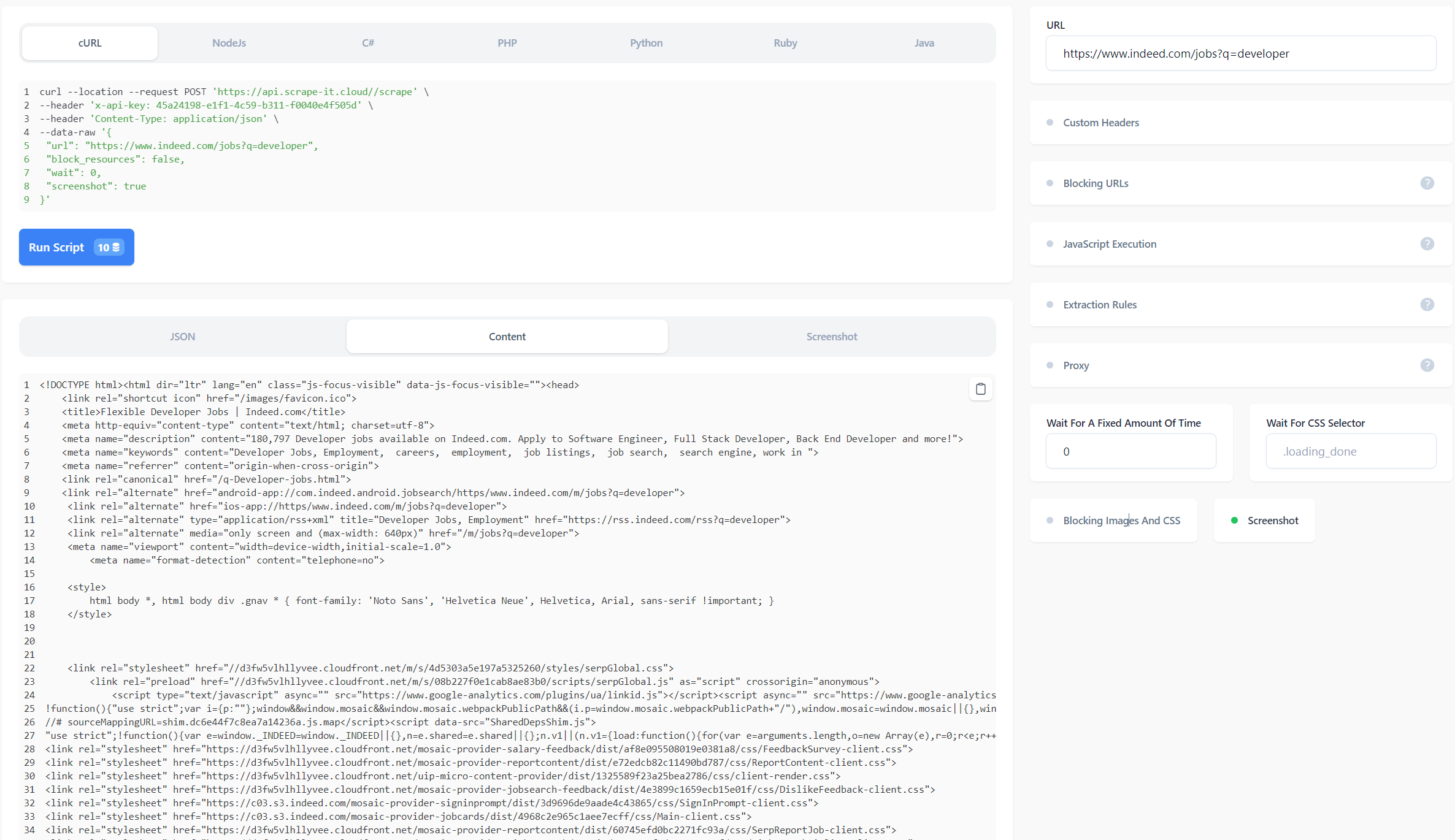

To understand how requests work and how to compose them, we will use the request builder. Use the fields on the right side of the window to customize the request, then run it. Let's get data from the Indeed "Find jobs" search.

For example, let's search for "developer". Paste a link to the search results page into the "URL" field. If you run the scraper now, you'll get the page's source code, which needs further processing to extract the data you want.
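If you generate many searches, it helps to build the search results URL in code rather than copying it by hand. This sketch assumes Indeed's US site uses the `q` (query) and `l` (location) parameters seen in its search URLs; treat that as an assumption, since the site may change its URL scheme. `indeed_search_url` is a hypothetical helper name.

```python
from urllib.parse import urlencode


def indeed_search_url(query, location=None):
    """Build an Indeed search results URL (assumes the q/l query parameters)."""
    params = {"q": query}
    if location:
        params["l"] = location
    # urlencode escapes spaces as "+" and commas as "%2C".
    return "https://www.indeed.com/jobs?" + urlencode(params)


print(indeed_search_url("developer"))
# https://www.indeed.com/jobs?q=developer
print(indeed_search_url("developer", "New York, NY"))
# https://www.indeed.com/jobs?q=developer&l=New+York%2C+NY
```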

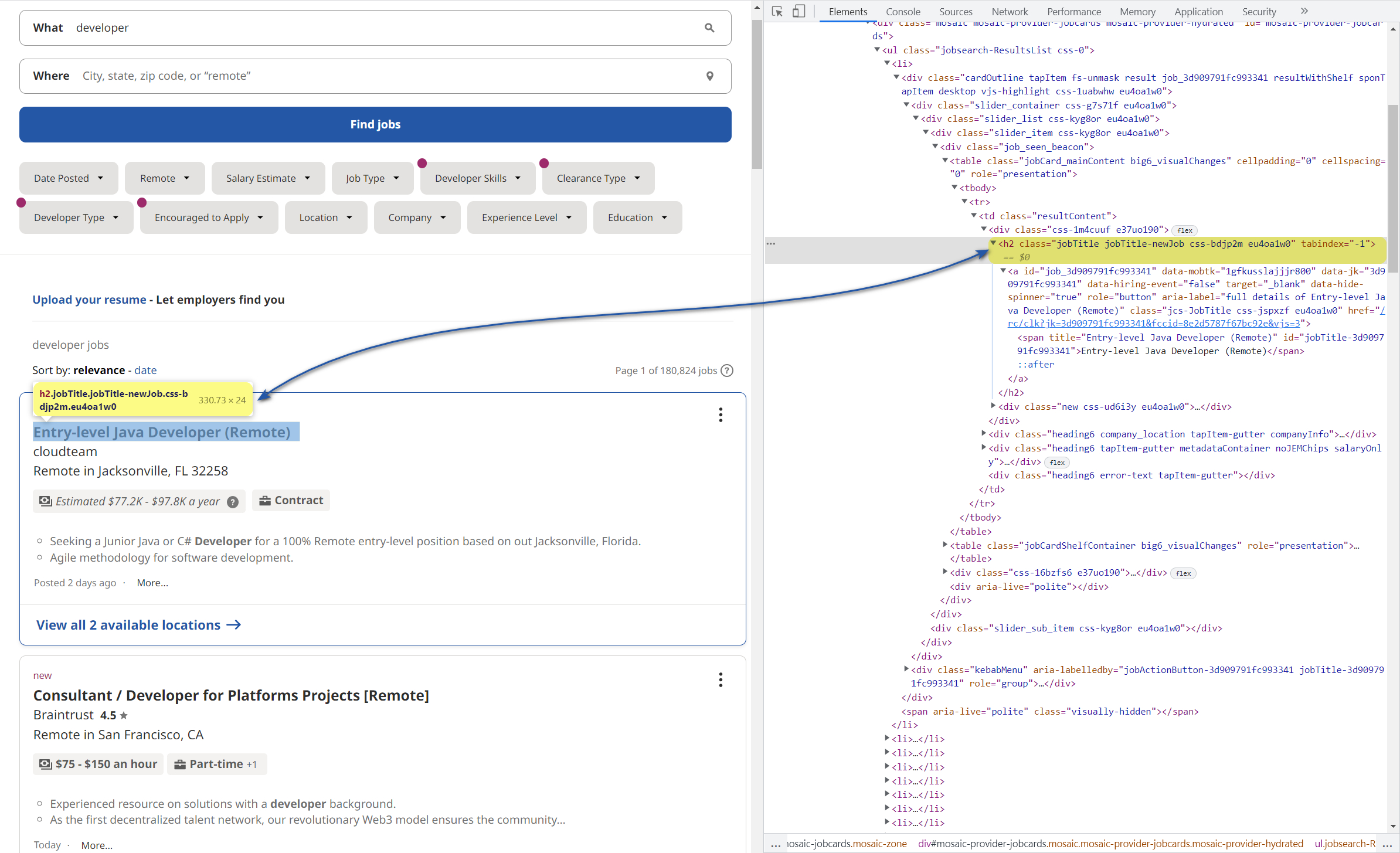

To get the required data directly in JSON format, use "Extraction Rules". To set them up, open the Indeed results page and inspect it: open DevTools (F12) and select any job title to see its code.

After that, right-click the element in the Elements panel and copy its selector. In our case, the selector for job titles looks like this:

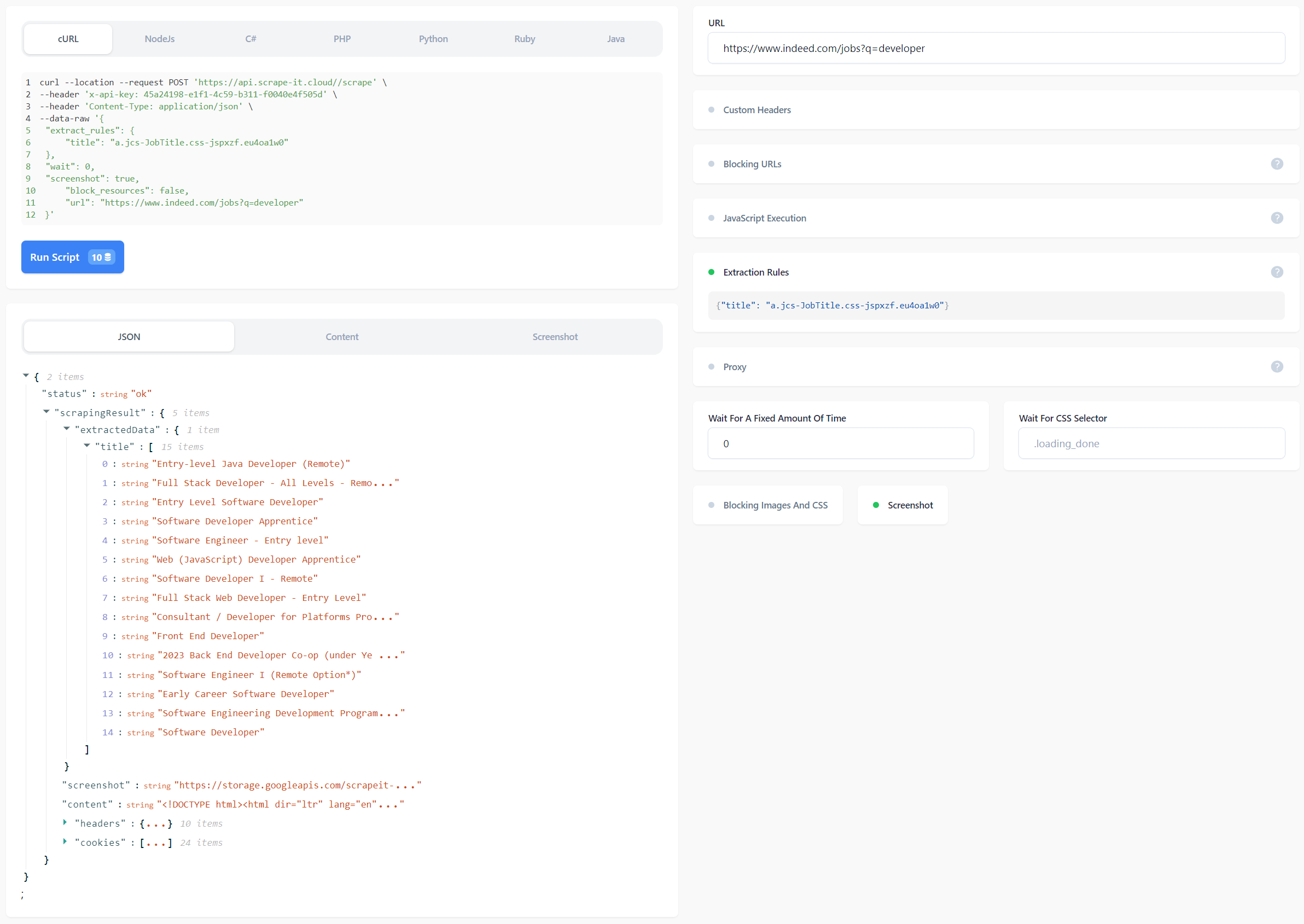

```
a.jcs-JobTitle.css-jspxzf.eu4oa1w0
```

Now, if you insert the resulting selector into Extraction Rules, the result of the query will be much better:

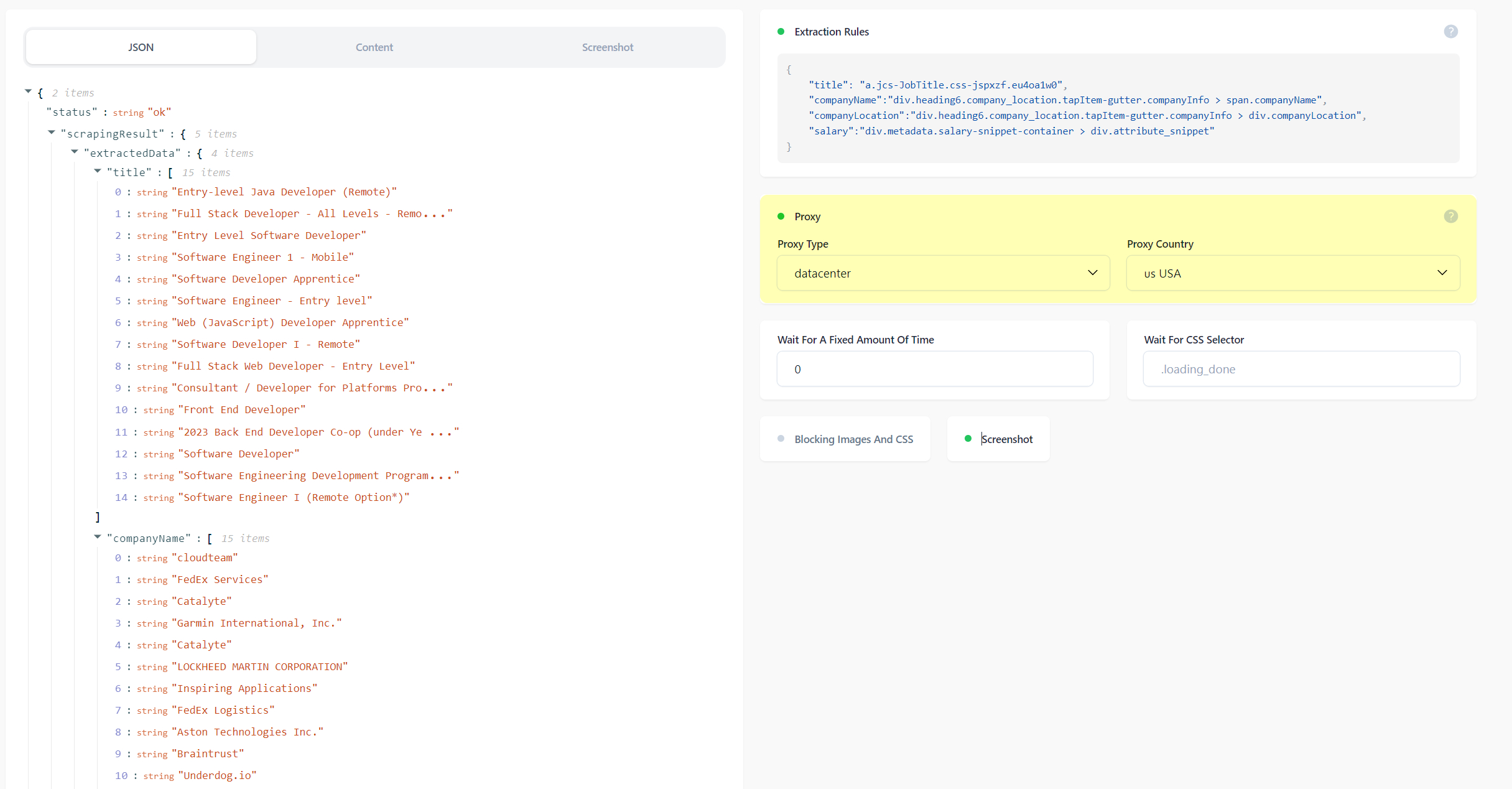
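For comparison, this is roughly what processing the raw page source yourself would involve without Extraction Rules. The sketch uses only the standard library's `html.parser` and matches on the `jcs-JobTitle` class alone, because the other classes in the selector (`css-jspxzf`, `eu4oa1w0`) look auto-generated and may change between page builds. The HTML sample is a simplified, hypothetical stand-in for Indeed's markup.

```python
from html.parser import HTMLParser


class JobTitleParser(HTMLParser):
    """Collect the text of <a> elements whose class list contains `jcs-JobTitle`."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False  # True while inside a matching <a> element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "a" and "jcs-JobTitle" in classes:
            self._in_title = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_title:
            self._buffer.append(data)  # includes text of nested tags like <span>

    def handle_endtag(self, tag):
        if tag == "a" and self._in_title:
            self.titles.append("".join(self._buffer).strip())
            self._in_title = False


html = """
<a class="jcs-JobTitle css-jspxzf eu4oa1w0" href="#"><span>Python Developer</span></a>
<a class="jcs-JobTitle css-jspxzf eu4oa1w0" href="#"><span>Senior C++ Developer</span></a>
<a class="other-link" href="#">Post your resume</a>
"""
parser = JobTitleParser()
parser.feed(html)
print(parser.titles)  # ['Python Developer', 'Senior C++ Developer']
```

Even this simple case needs custom code per field, which is the work Extraction Rules take over.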

Similarly, you can extract almost any useful data from recruiting websites, which makes the search for potential employees easier. For example, the rule below extracts job titles, company names, locations, and salaries.

```json
{
  "title": "a.jcs-JobTitle.css-jspxzf.eu4oa1w0",
  "companyName": "div.heading6.company_location.tapItem-gutter.companyInfo > span.companyName",
  "companyLocation": "div.heading6.company_location.tapItem-gutter.companyInfo > div.companyLocation",
  "salary": "div.metadata.salary-snippet-container > div.attribute_snippet"
}
```

Make Requests Through Proxies and Rotate them as Needed

When building your own scraper, you have to collect a pool of proxy IP addresses and configure their rotation yourself. With the API, it is enough to enable the corresponding option.

There are two main types of proxies: residential and datacenter. The main difference is where their IP addresses come from: residential proxies use addresses that internet service providers assign to home users, while datacenter proxies use addresses of servers hosted in data centers. Datacenter proxies are in high demand because they are usually cheaper and faster, although residential proxies are harder for websites to detect and block.

In general, a proxy makes the site see not your computer's IP address but another one, located in the country you specify.
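If you build the scraper yourself, proxy rotation can start as simple round-robin over a pool. This is a minimal sketch with the standard library: `ProxyRotator` is a hypothetical class, and the addresses come from the 203.0.113.0/24 documentation range, so they are placeholders, not working proxies. Real setups usually also drop proxies that keep failing or getting blocked.

```python
import itertools
import urllib.request


class ProxyRotator:
    """Round-robin rotation over a pool of HTTP proxies."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._pool = itertools.cycle(proxies)

    def next_proxy(self):
        return next(self._pool)

    def opener(self):
        """Return a urllib opener that routes HTTP and HTTPS through the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


# Placeholder addresses from a documentation IP range, not real proxies.
rotator = ProxyRotator(["http://203.0.113.10:8080", "http://203.0.113.11:8080"])
print([rotator.next_proxy() for _ in range(3)])
# ['http://203.0.113.10:8080', 'http://203.0.113.11:8080', 'http://203.0.113.10:8080']
```

Each request would then go through a fresh opener, for example `rotator.opener().open(url, timeout=10)`, so consecutive requests leave from different IP addresses.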

Conclusion and Takeaways

Web scraping is the ultimate tool for recruiters who want to find the best employees quickly and efficiently. It helps with everything from finding qualified candidates in minutes to narrowing a shortlist down to the right person. Of course, buying a ready-made database will save time, but building your own API-based infrastructure is a simple, flexible, and feature-rich way to collect HR data with web scraping.

In addition, you only need to set everything up once, after which you receive similar data automatically. To see this for yourself, try it once; you can do so free of charge.