Airbnb is an online platform for booking accommodation, allowing people to rent out or lease their houses, apartments, rooms, or other types of accommodation for short-term stays. It enables travelers to find various accommodation options in different parts of the world, including hotels, apartments, and holiday homes.

Users can browse available options, read reviews from other guests, book accommodation, and contact hosts directly through the platform. You can obtain information about properties, reviews and ratings, hosts, bookings, and availability using scrapers.

Such information about available properties is useful not only to avid travelers and frequent business travelers but also to real estate agents and private investors, who can study comparable properties in the area to see which amenities and prices competitors offer and shape their own proposals accordingly.

In this article, we will discuss methods of collecting data from Airbnb, both for those who simply want to get the data and for those who want to automate data collection and build Python-based solutions using different approaches.


Scraping vs. Using an API: Understanding the Options

When collecting data from a website, the first option to consider is the official API. This method usually provides structured, reliable access to data without requiring workarounds. Although an API may not expose everything that is visible on the website, developers generally prefer to use one when it is available.

Scrapers are used when an official API is unavailable or impractical. In this case, a developer can create a scraper in any programming language to collect the required data automatically. This section will discuss the pros and cons of creating a Python scraper, the Airbnb API, and its alternatives.

Pros and Cons of Airbnb Scraping

Before diving into script development, it's crucial to understand the benefits and drawbacks of scraping Airbnb. For your convenience, we've compiled a comprehensive table summarizing the key considerations:

| Pros of Airbnb Scraping | Cons of Airbnb Scraping |
|---|---|
| 1. Flexibility: Can extract any data from Airbnb listings. | 1. Instability: Website changes can break scraping scripts. |
| 2. Customization: Able to tailor data extraction to specific needs. | 2. Stability: Scraping may be less stable compared to using an API, as it can be affected by website changes and updates. |
| 3. Independence: No reliance on Airbnb's API availability or limitations. | 3. Technical Complexity: Scraping requires coding skills and maintenance. |
| 4. Cost-effectiveness: Scraping can be cost-efficient compared to API access fees. | 4. Rate Limitations: Scraping may be subject to rate limitations imposed by Airbnb, affecting data retrieval speed. |
| 5. Access to Hidden Data: Can access data not exposed through Airbnb's API. | 5. Risk of Blocking: Airbnb might restrict access or IP addresses. |
| 6. Real-time Data: Can retrieve the latest data directly from the website. | 6. Captcha and Bot Detection: Airbnb may deploy measures to detect scraping activities. |

This table gives a balanced view of the advantages and disadvantages of scraping data from Airbnb listings. Consider these factors carefully when deciding whether to scrape Airbnb or explore alternative ways of accessing the data.

Exploring Alternatives: Airbnb API and Third-Party Solutions

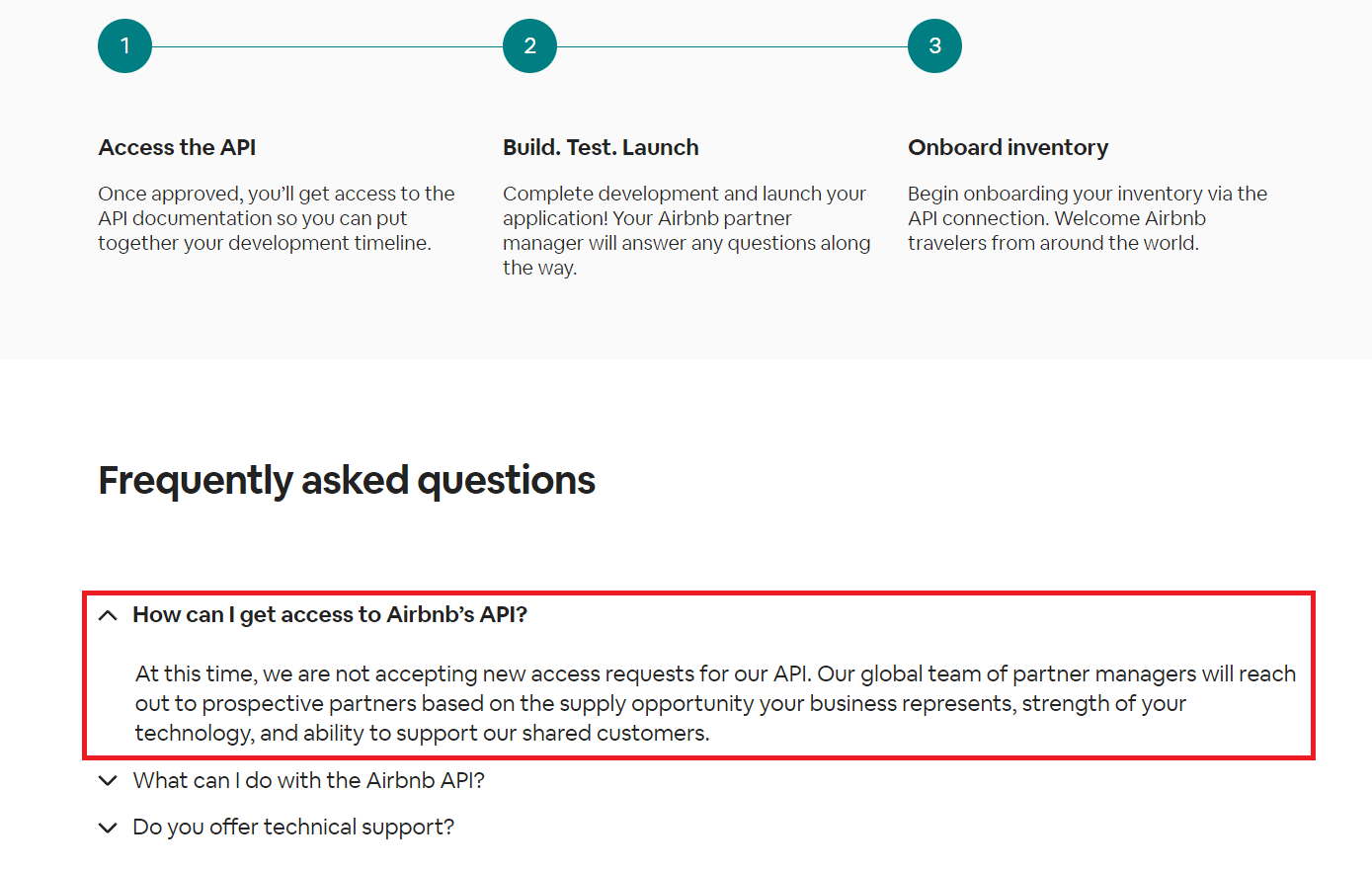

As we mentioned before, the most straightforward way to retrieve data from a service is through its official API, and Airbnb does have one. However, you are unlikely to get access to it: as the FAQ section of the official Airbnb API page explains, access is restricted.

So, the official API is not an option, but we have an alternative solution. To simplify the process of getting data from Airbnb, you can use third-party services to collect the data. If you just need a ready-made dataset, you don't need to write any scripts. Instead, you can use our Airbnb no-code scraper.

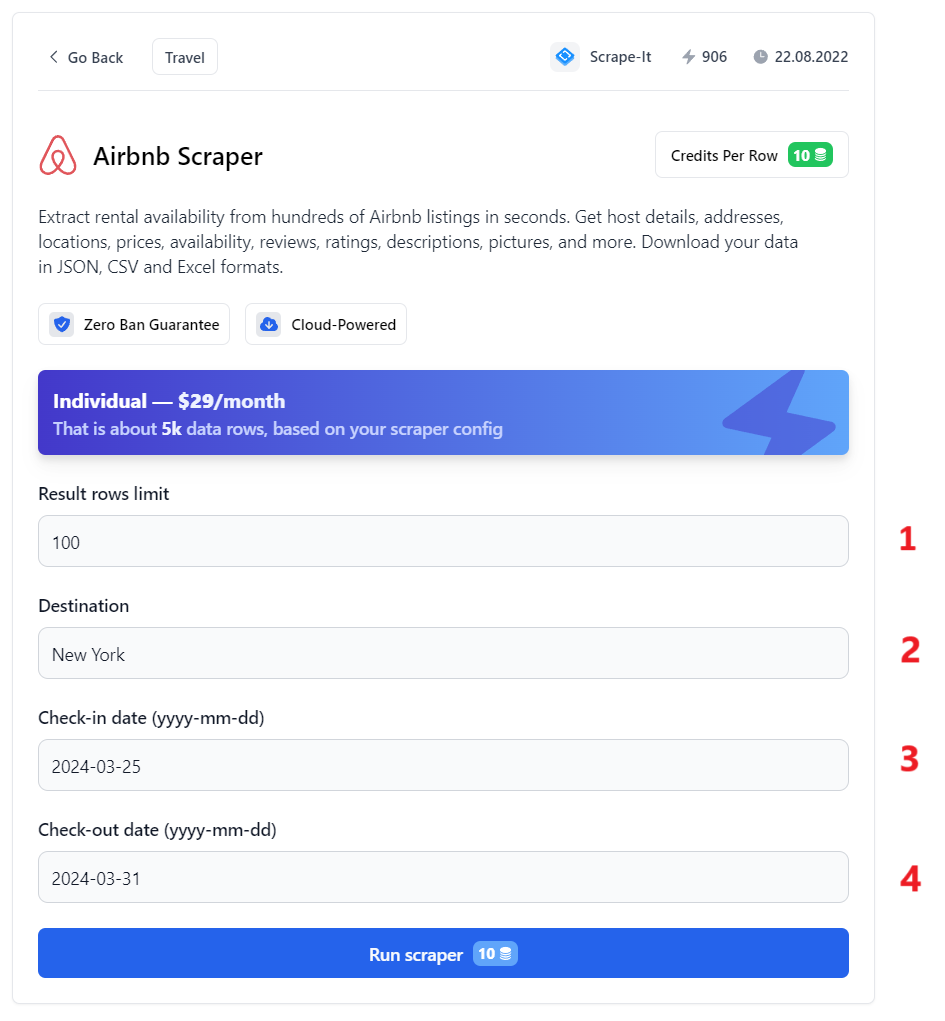

To obtain data, you only need to specify the essential parameters:

- Result Rows Limit. Enter the number of result rows you want to receive.

- Destination. Enter the city or town where you want to find offers.

- Check-in date. Enter the check-in date in the format yyyy-mm-dd.

- Check-out date. Enter the check-out date in the same format.

After you have specified all the necessary parameters, click the Run Scraper button to start the data collection process. When it is finished, you can download the data in JSON, CSV, or XLSX format on the right side of the screen.

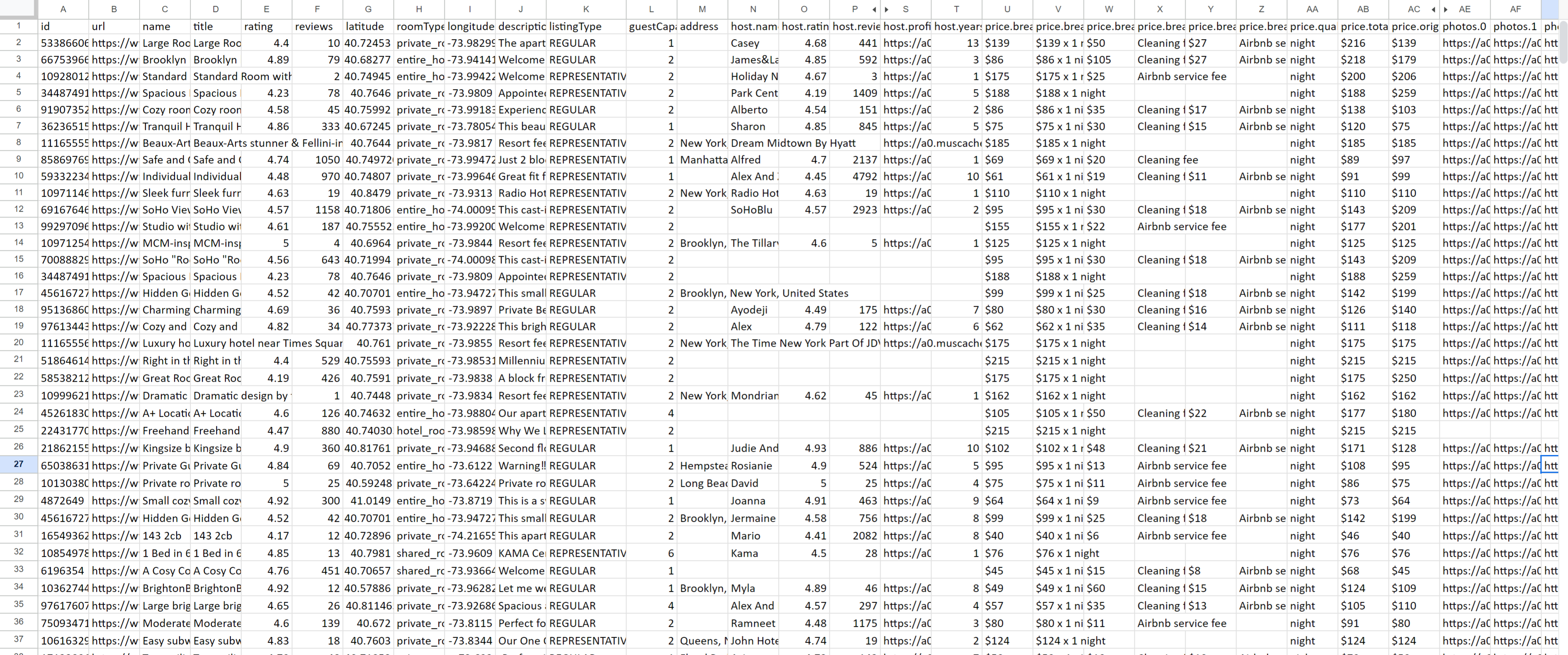

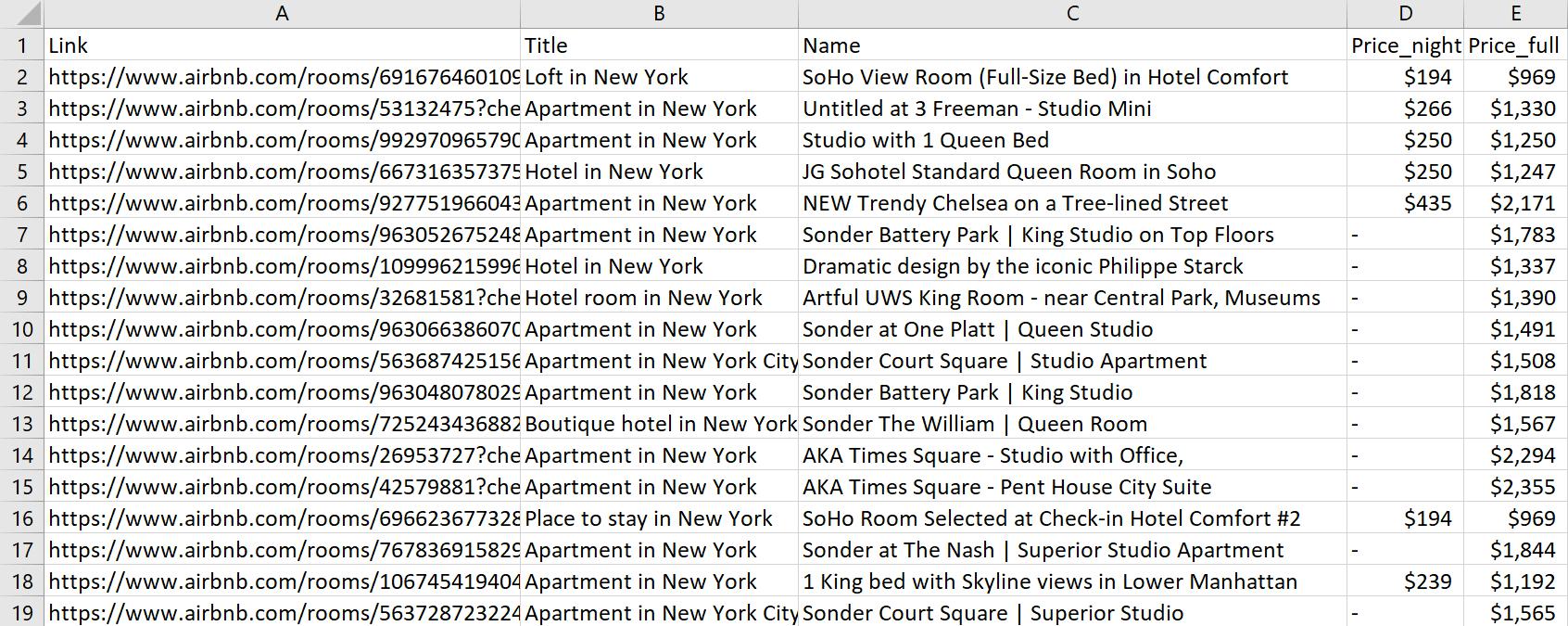

Example of the data you will receive:

This option may be more convenient if you just need to get the data. However, if you want to create your own application that constantly receives up-to-date data, we will tell you how to do it below.

Scrape Listings using Unofficial Airbnb API

Let's start with the simpler option of using HasData's Airbnb API to get listing data and parse it using Python. This API returns a JSON response in the following format:

- requestMetadata

- id

- status

- url

- properties (array of objects)

- id

- url

- title

- name

- roomType

- listingType

- latitude

- longitude

- photos (array of URLs)

- rating

- reviews

- badges

- price

- originalPrice

- discountedPrice

- qualifier

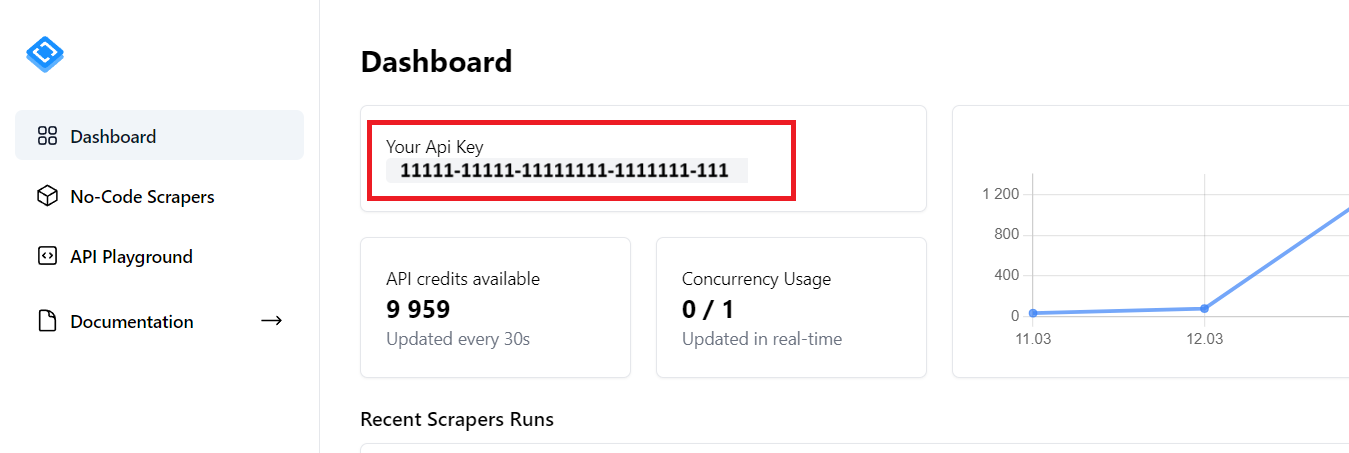

- breakdown To use it, sign up and copy your personal API key:

Let's proceed with building the scraper. The finished script is available in Google Colaboratory.

Before we begin, make sure you have Python 3.10 or above installed. This is a requirement for all the scripts in this article. To install the necessary libraries, open a command prompt or terminal and run the following command:

```shell
pip install requests pandas
```

Create a new project and import the libraries:

```python
import requests
import json
```

Set your search parameters to scrape listings:

```python
location = "New York"
check_in = "2024-03-28"  # yyyy-mm-dd
check_out = "2024-03-30"
```

Provide a request header with your HasData API key:

```python
headers = {
    'x-api-key': 'YOUR-API-KEY'
}
```

Create a URL with the previously specified parameters:

```python
# Build the request URL from the search parameters
url = f"https://api.hasdata.com/scrape/airbnb/listing?location={location}&checkIn={check_in}&checkOut={check_out}"
```

Make a request:

```python
response = requests.get(url, headers=headers)
```

Let's check whether the request succeeded. If it did, we will save the data in JSON format; otherwise, we will display an error message:

```python
if response.status_code == 200:
    data = response.json()
    # Write the full API response to a local JSON file
    with open('airbnb_listing.json', 'w') as json_file:
        json.dump(data, json_file)
    print("Saved to 'airbnb_listing.json'")
else:
    print(f"Error: {response.status_code}")
```

As a result, we will obtain a JSON object:

```json
{
  "requestMetadata": {
    "id": "a9b4c918-0d73-447d-bc29-009035e4efcc",
    "status": "ok",
    "url": "https://www.airbnb.com/s/New%20York/homes?checkin=2024-03-28&checkout=2024-03-30"
  },
  "properties": [
    {
      "id": "691676460109271194",
      "url": "https://www.airbnb.com/rooms/691676460109271194",
      "title": "Loft in New York",
      "name": "SoHo View Room (Full-Size Bed) in Hotel Comfort",
      "roomType": "entire_home",
      "listingType": "REPRESENTATIVE",
      "latitude": 40.71806,
      "longitude": -74.00095,
      "photos": [
        "https://a0.muscache.com/im/pictures/prohost-api/Hosting-691676460109271194/original/82824443-8970-4061-94bd-4524d6caee3c.jpeg?im_w=720",
        "https://a0.muscache.com/im/pictures/miso/Hosting-691676460109271194/original/1818d3a5-8578-4a59-a9b7-63786541f7d5.jpeg?im_w=720"
      ],
      "rating": 4.56,
      "reviews": 1175,
      "badges": [],
      "price": {
        "originalPrice": "$210",
        "discountedPrice": "$157",
        "qualifier": "night",
        "breakdown": []
      }
    },
    ... (other listings)
  ]
}
```

Using the Airbnb API will not only allow you to get all the necessary data quickly, but it will also help you avoid possible blocking, simplify your code, and allow you to focus on data analysis rather than data collection.
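Since pandas was installed at the start but the script above only saves raw JSON, here is a minimal sketch of flattening the `properties` array into one row per listing, ready for analysis. It assumes the response shape shown above, in particular that prices arrive as strings such as `"$157"`; the helper names `parse_price` and `listings_to_rows` are hypothetical, and the price parsing assumes a dot as the decimal separator, so prices formatted with other conventions would need a different rule.

```python
import re


def parse_price(value):
    """Convert a price string such as "$1,157" into a float; return None if empty."""
    if not value:
        return None
    digits = re.sub(r"[^\d.]", "", value)  # strip currency symbols and thousands separators
    return float(digits) if digits else None


def listings_to_rows(data):
    """Flatten the 'properties' array of a listing response into flat dicts."""
    rows = []
    for prop in data.get("properties", []):
        price = prop.get("price") or {}
        rows.append({
            "id": prop.get("id"),
            "name": prop.get("name"),
            "room_type": prop.get("roomType"),
            "rating": prop.get("rating"),  # may be absent for some listings
            "reviews": prop.get("reviews"),
            "original_price": parse_price(price.get("originalPrice")),
            "discounted_price": parse_price(price.get("discountedPrice")),
            "qualifier": price.get("qualifier"),
            "url": prop.get("url"),
        })
    return rows
```

With the `data` variable from the previous step, `pd.DataFrame(listings_to_rows(data))` gives a table with one row per listing and numeric price columns.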

Scrape Airbnb Property Data with API

You can also use the Airbnb API to retrieve data not only through search but also for specific properties. This will provide you with more detailed information.

Let's use a different endpoint and slightly modify our script to iterate through an array of predefined properties. You can find a ready-made script in Google Colaboratory.

The beginning of the script remains the same:

```python
import requests
import json
```

Then, create a variable to store data:

```python
data_list = []
```

This is necessary to save all the data to a single file at the end. You also need to specify your HasData API key and the links to the properties you want to scrape:

```python
properties = ["https://www.airbnb.com/rooms/946842435422127304", "https://www.airbnb.com/rooms/49662898"]
headers = {
    'x-api-key': 'YOUR-API-KEY'
}
```

Loop through the links:

```python
for property in properties:
```

Send a request to the API for each property:

```python
    url = f"https://api.hasdata.com/scrape/airbnb/property?url={property}"
    response = requests.get(url, headers=headers)
```

Process the response if the request was successful:

```python
    if response.status_code == 200:
        data = response.json()
```

At this point, we can either:

- Save the data for the current property to a separate file.

- Add it to a variable so that after iterating through all the links, we can save all the data to a single JSON file.

To save the data to a separate file, use the following code:

```python
        with open(f'airbnb_property_{data["property"]["id"]}.json', 'w') as json_file:
            json.dump(data, json_file)
```

To save the data for later use instead, store it in a variable:

```python
        data_list.append(data['property'])
```

This way, your script iterates through all the links and collects detailed data for each listing. To write all the data to a single file, add the following code after the loop:

```python
with open('airbnb_properties.json', 'w') as json_file:
    json.dump(data_list, json_file)
```

The code is now functional, but it can be improved: for example, you could save the data in a more convenient format than JSON, or read the list of URLs from a file instead of a hard-coded variable.
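Below is a minimal sketch of the two improvements suggested above, assuming a plain-text file with one Airbnb URL per line. The helper names `load_urls`, `build_property_url`, and `save_properties_csv` are hypothetical. As an extra precaution not in the original script, it percent-encodes the property URL with `urllib.parse.urlencode`, which matters if a URL carries its own query string. Because the fields of the property response are not listed in this article, the CSV writer takes its columns from whatever keys the records contain and stores nested values as JSON text.

```python
import csv
import json
from urllib.parse import urlencode

API_URL = "https://api.hasdata.com/scrape/airbnb/property"


def load_urls(path):
    """Read one URL per line, skipping blank lines and '#' comments."""
    urls = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            stripped = line.strip()
            if stripped and not stripped.startswith("#"):
                urls.append(stripped)
    return urls


def build_property_url(property_url):
    """Return the API request URL with the property URL percent-encoded."""
    return f"{API_URL}?{urlencode({'url': property_url})}"


def save_properties_csv(records, path):
    """Write a list of property dicts to CSV; nested values are stored as JSON text."""
    fieldnames = sorted({key for record in records for key in record})
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)  # missing keys become ""
        writer.writeheader()
        for record in records:
            writer.writerow({
                key: json.dumps(value) if isinstance(value, (dict, list)) else value
                for key, value in record.items()
            })
```

In the main script, you could then use `properties = load_urls('urls.txt')`, call `requests.get(build_property_url(property), headers=headers)` inside the loop, and run `save_properties_csv(data_list, 'airbnb_properties.csv')` after it.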

Scrape Airbnb Using Headless Browser

If you want to take the more challenging route and write your own scraper, you will need a headless browser. This will mimic a user's behavior and allow you to navigate the page and collect data.

Scraping without an API is difficult because Airbnb's content is generated dynamically, so fetching the page with simple HTTP requests won't return the listing data. If you want to try it yourself, we have provided a script in Google Colaboratory that scrapes the page with Requests, BeautifulSoup, or Scrapy and saves the resulting HTML code to a file. The result makes the problem obvious:

Therefore, we will not consider this option and instead move on to a more effective solution: using a headless browser and the Selenium library.

Installing Necessary Libraries

We only need two libraries to create a web scraper: Selenium and Pandas. Let's install them using the package manager:

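```shell
pip install selenium pandas
```

For Selenium, you may also need to download and install a WebDriver separately. However, this is not necessary in recent versions: since Selenium 4.6, Selenium Manager locates or downloads a matching driver automatically. If you plan to export results to Excel, also install openpyxl (or XlsxWriter), which pandas needs to write .xlsx files. If you haven't used headless browsers before, you can find an article on scraping with Selenium in our blog.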

Understanding Airbnb's Website Structure

Before we begin, note that the ready-made script is also available in Google Colaboratory. Download it and run it on your own PC, since Google doesn't allow running a WebDriver in Colab.

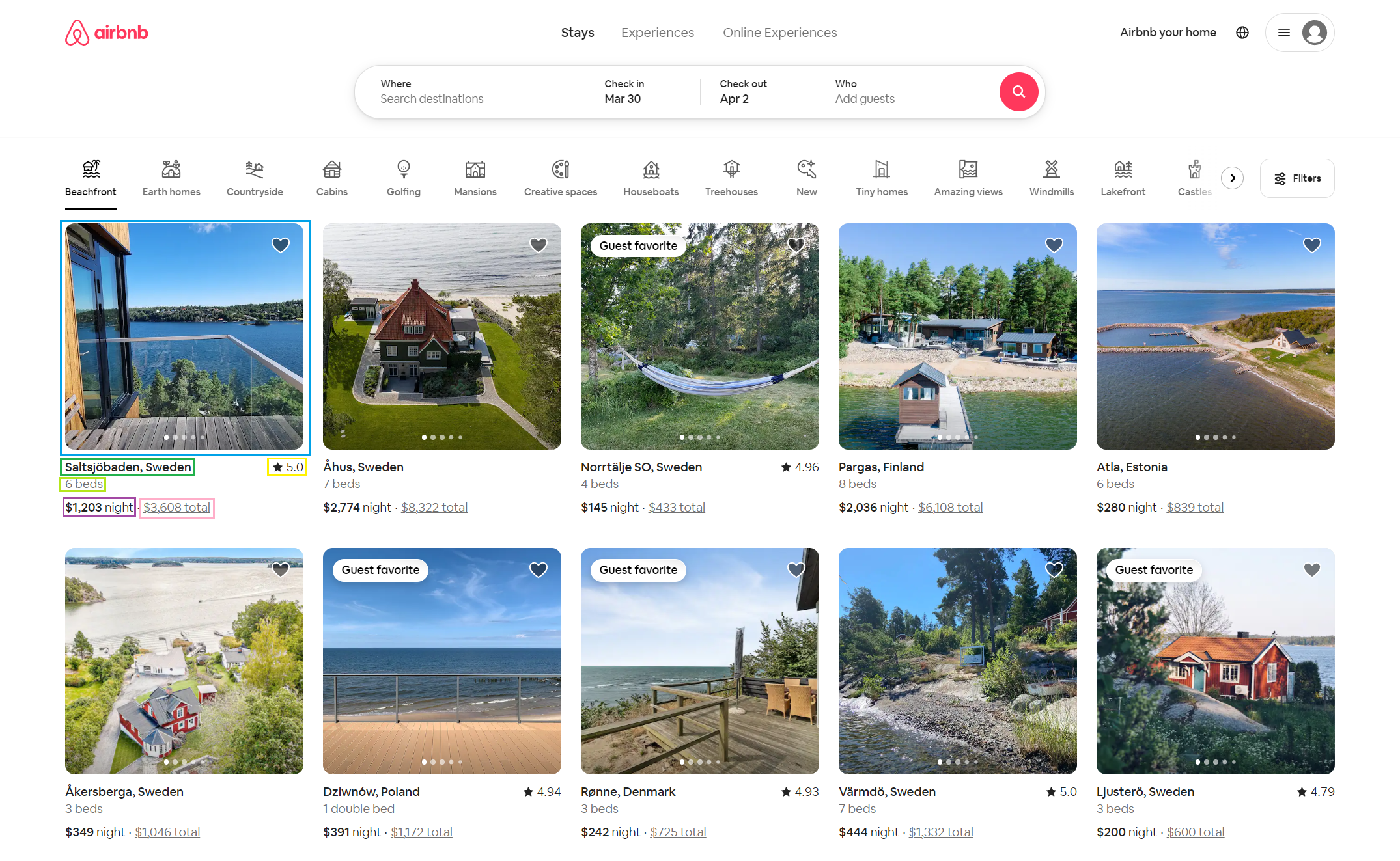

Now let's go to the Airbnb website, specify the housing search parameters, and see what data we can extract:

So, if we extract data from the Airbnb search page, we will get the following information:

- Property title.

- Number of rooms.

- Price. Airbnb shows both the price for one night and the total for the selected dates.

- Rating. This parameter is not available for all listings, and this should be considered during scraping.
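Because the search page shows both a nightly price and a total for the stay, it can help to compute the number of nights from the check-in and check-out dates, for example to sanity-check the total or derive an average nightly rate. This is a minimal sketch with hypothetical helper names; since the total may include cleaning or service fees, the average it produces is not necessarily the listed nightly price.

```python
from datetime import date


def count_nights(checkin, checkout):
    """Return the number of nights between two yyyy-mm-dd dates."""
    nights = (date.fromisoformat(checkout) - date.fromisoformat(checkin)).days
    if nights <= 0:
        raise ValueError("checkout must be later than checkin")
    return nights


def average_nightly_rate(total_price, checkin, checkout):
    """Spread a total stay price evenly over the nights of the stay."""
    return total_price / count_nights(checkin, checkout)
```

For example, `count_nights("2024-03-28", "2024-04-02")` returns 5, so a total of 1000 corresponds to an average of 200.0 per night.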

The next thing to pay attention to is the link. In general, for the request we made earlier, it will have the following form:

```
https://www.airbnb.com/?tab_id=home_tab&refinement_paths%5B%5D=%2Fhomes&search_mode=flex_destinations_search&flexible_trip_lengths%5B%5D=one_week&monthly_start_date=2024-04-01&monthly_length=3&monthly_end_date=2024-07-01&category_tag=Tag%3A789&price_filter_input_type=0&channel=EXPLORE&date_picker_type=calendar&checkin=2024-03-30&checkout=2024-04-02&source=structured_search_input_header&search_type=filter_change
```

Or, for a specified location, this form:

```
https://www.airbnb.com/s/New-York--United-States/homes?tab_id=home_tab&refinement_paths%5B%5D=%2Fhomes&flexible_trip_lengths%5B%5D=one_week&monthly_start_date=2024-04-01&monthly_length=3&monthly_end_date=2024-07-01&price_filter_input_type=0&channel=EXPLORE&date_picker_type=calendar&checkin=2024-03-30&checkout=2024-04-02&source=structured_search_input_header&search_type=autocomplete_click&price_filter_num_nights=3&query=New%20York%2C%20United%20States&place_id=ChIJOwE7_GTtwokRFq0uOwLSE9g
```

Let's identify the main settings we'll be changing later on:

- Location. This is where you want to search, like "New-York--United-States".

- search_mode. The search mode: "flex_destinations_search" means a flexible search for destinations.

- checkin. Check-in date. For example, "2024-03-28" means March 28, 2024.

- checkout. Check-out date. For example, "2024-04-02" means April 2, 2024.

- source. Source of the search: "structured_search_input_header" indicates searching from the structured input header.

These settings are enough to create a working link for scraping.
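As a minimal sketch, such a link can be assembled from these settings with the standard library: `urllib.parse.quote` escapes the location path and `urlencode` builds the query string. The function name `build_search_url` is hypothetical, and it only reproduces the URL shape shown above; Airbnb may change which parameters it accepts.

```python
from urllib.parse import quote, urlencode


def build_search_url(city, country, checkin, checkout):
    """Build an Airbnb search URL in the /s/<City>--<Country>/homes form."""
    location = f"{city.replace(' ', '-')}--{country.replace(' ', '-')}"
    params = {
        "checkin": checkin,    # yyyy-mm-dd
        "checkout": checkout,  # yyyy-mm-dd
        "source": "structured_search_input_header",
    }
    # quote() escapes unusual characters in the path; urlencode() builds the query string
    return f"https://www.airbnb.com/s/{quote(location)}/homes?{urlencode(params)}"
```

Calling `build_search_url("New York", "United States", "2024-03-28", "2024-04-02")` returns `https://www.airbnb.com/s/New-York--United-States/homes?checkin=2024-03-28&checkout=2024-04-02&source=structured_search_input_header`.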

Extracting Listing Details

Now, open your preferred text editor or IDE and create a new file with a .py extension. Remember that you need Python 3.10 or later for scripts in this tutorial.

At the top of your script, add import statements for the libraries you'll be using:

```python
import pandas as pd
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
```

Then set the search parameters:

```python
# Airbnb uses hyphens instead of spaces in location paths
city = "New York".replace(' ', '-')
country = "United States".replace(' ', '-')
checkin = "2024-03-28"  # yyyy-mm-dd
checkout = "2024-04-02"
```

Configure the WebDriver settings and options, and then launch it:

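```python
# Path to a local chromedriver; recent Selenium versions also accept Service() without a path
service = Service(r'C:\driver\chromedriver.exe')

options = Options()
options.add_argument('--headless=new')  # run Chrome without a visible window

# webdriver.Chrome starts the driver service itself, so no service.start() call is needed
driver = webdriver.Chrome(service=service, options=options)
```

Wrap the scraping logic in a try block with a finally clause, so that the browser is closed even if an error occurs: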

```python
try:
```

Then construct the request URL:

```python
    base_url = 'https://www.airbnb.com/s/'
    query = f"{city}--{country}/homes"
    url = f"{base_url}{query}?checkin={checkin}&checkout={checkout}&source=structured_search_input_header"
```

Open the page:

```python
    driver.get(url)
```

Then wait up to 10 seconds for the first listing card to appear:

```python
    WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CSS_SELECTOR, '[data-testid="card-container"]')))
```

Get all the listings on the page:

```python
    listings = driver.find_elements(By.CSS_SELECTOR, '[data-testid="card-container"]')
```

Before writing to a file, we need to store the data somewhere. Let's create a variable for this:

```python
    data = []
```

Iterate through all the listings on the page and collect the necessary data:

```python
    for listing in listings:
        href = listing.find_element(By.TAG_NAME, 'a').get_attribute('href')
        title = listing.find_element(By.CSS_SELECTOR, '[data-testid="listing-card-title"]').text.strip()
        name = listing.find_element(By.CSS_SELECTOR, '[data-testid="listing-card-name"]').text.strip()
        subtitle = listing.find_element(By.CSS_SELECTOR, '[data-testid="listing-card-subtitle"]>span').text.strip()
        # Price elements use generated class names that may change; fall back to '-' if missing.
        # Prices are kept as plain strings so the columns can later be written to SQL or Excel.
        night = listing.find_elements(By.CSS_SELECTOR, 'span._1y74zjx')
        price_night = night[0].text.strip() if night else '-'
        full = listing.find_elements(By.CSS_SELECTOR, 'div._10d7v0r')
        price_full = full[0].text.strip() if full else '-'
        data.append({'Link': href, 'Title': title, 'Name': name, 'Subtitle': subtitle, 'Price_night': price_night, 'Price_full': price_full})
```

Finally, close the WebDriver:

```python
finally:
    driver.quit()
```

This is crucial: if you forget to do this, the browser and the WebDriver will not close automatically and will keep running in the background.
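The search-results overview noted that the rating is not available for every listing, and the price lookups in the loop already guard against missing elements by using `find_elements`, which returns an empty list instead of raising `NoSuchElementException`. A small helper can apply the same guard to any optional field. This is a minimal sketch: the name `text_or_default` is hypothetical, and this article gives no selector for the rating, so you would need to take one from the live page.

```python
def text_or_default(parent, by, selector, default="-"):
    """Return the stripped text of the first element matching (by, selector), or default.

    parent can be the WebDriver or a WebElement, and by is a locator strategy
    such as By.CSS_SELECTOR. find_elements returns an empty list when nothing
    matches, so a missing field yields default instead of an exception.
    """
    matches = parent.find_elements(by, selector)
    return matches[0].text.strip() if matches else default
```

For example, `price_night = text_or_default(listing, By.CSS_SELECTOR, 'span._1y74zjx')` could replace the conditional price lookup inside the loop.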

Save Airbnb Data

Previously, we imported the Pandas library, which provides a convenient way to work with tabular data stored in DataFrames. We chose this library because it supports saving data to most popular formats, including CSV, JSON, XLSX, and even databases.

To use it, let's create a DataFrame from our collected data:

```python
df = pd.DataFrame(data)
```

Then save the data in any convenient format:

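```python
import sqlite3
df.to_csv(f'airbnb_{city}_{country}_listings.csv', index=False)
df.to_json(f'airbnb_{city}_{country}_listings.json', orient='records')
conn = sqlite3.connect(f'airbnb_{city}_{country}_listings.db')  # to_sql needs an open DB connection
df.to_sql('listings', conn, index=False, if_exists='replace')
conn.close()
df.to_excel(f'airbnb_{city}_{country}_listings.xlsx', index=False)  # requires openpyxl or XlsxWriter
```

The result: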

In addition to the options discussed above, Pandas supports many others that you can find in the official documentation.

Airbnb Scraping Challenges

When scraping Airbnb, remember that its Terms of Service prohibit scraping the website without Airbnb's explicit permission. Using automated scraping tools without permission may get your IP address or account blocked. To reduce this risk, you can use a scraping API or integrate a proxy into your script.
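If you integrate a proxy, both scripts in this article need the same address in different forms: `requests` takes a `proxies` dictionary, while Chrome takes a `--proxy-server` command-line argument. This minimal sketch derives both from one proxy URL; the function name `proxy_settings` is hypothetical, and the address in the example is a placeholder, not a real endpoint. It assumes Chrome's `--proxy-server` flag does not accept embedded credentials, so only the scheme, host, and port are passed to it; authenticating to a proxy in Chrome needs extra setup that this sketch does not cover.

```python
from urllib.parse import urlsplit


def proxy_settings(proxy_url):
    """Derive proxy settings for requests and for Chrome from one proxy URL.

    proxy_url is expected to look like "http://user:pass@host:port".
    Returns (proxies_for_requests, chrome_argument).
    """
    parts = urlsplit(proxy_url)
    if parts.scheme not in ("http", "https") or not parts.hostname or parts.port is None:
        raise ValueError(f"expected http(s)://host:port, got {proxy_url!r}")
    # requests accepts credentials inside the proxy URL
    proxies = {"http": proxy_url, "https": proxy_url}
    # Keep only scheme, host and port for Chrome's --proxy-server argument
    chrome_arg = f"--proxy-server={parts.scheme}://{parts.hostname}:{parts.port}"
    return proxies, chrome_arg
```

You could then pass `proxies=proxies` to `requests.get` in the API scripts, or call `options.add_argument(chrome_arg)` before creating the driver in the Selenium script.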

Another challenge is that Airbnb periodically updates its website structure. When the HTML or CSS markup changes, your scripts may stop working, and you will need to update them to match the new structure.

Here are some additional points to consider:

- Use a scraping API. This is the safest and most reliable way to scrape Airbnb listings.

- Use a proxy. This can help to hide your IP address and avoid detection by Airbnb.

- Be prepared to update your scripts regularly. Airbnb frequently updates its website, so your selectors and parsing logic will need ongoing maintenance.

By following these tips, you can scrape Airbnb data safely and effectively.
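The pros-and-cons table also lists rate limits among the drawbacks of scraping, and the same can apply to API calls. A common mitigation is to retry when the server answers HTTP 429 (Too Many Requests) or a temporary 5xx error, waiting longer after each attempt. This is a minimal sketch: the function name, status set, retry count, and delays are illustrative choices, not values documented by Airbnb or HasData. The `client` argument can be the `requests` module or a `requests.Session`, and `sleep` is a parameter only so the function can be tested without actually waiting.

```python
import time

# HTTP statuses that usually mean "slow down" or a temporary server problem
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def get_with_backoff(client, url, headers=None, retries=3, base_delay=2.0,
                     timeout=30, sleep=time.sleep):
    """Send GET requests via client, retrying on 429/5xx with exponential backoff.

    Waits base_delay * 2**attempt seconds between attempts, or the number of
    seconds given in a numeric Retry-After header. Returns the last response.
    """
    for attempt in range(retries + 1):
        response = client.get(url, headers=headers, timeout=timeout)
        if response.status_code not in RETRYABLE_STATUS or attempt == retries:
            return response
        retry_after = response.headers.get("Retry-After", "")
        delay = float(retry_after) if retry_after.isdigit() else base_delay * 2 ** attempt
        sleep(delay)
```

For example, `response = get_with_backoff(requests.Session(), url, headers=headers)` could replace the plain `requests.get` call in the API scripts; the status check that follows stays the same.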


Conclusion and Takeaways

With the data you gather from Airbnb through web scraping, you can analyze the real estate market more effectively. You can create your own price comparison services or recommendation systems and use this data in your marketing strategies and advertisements. For example, businesses and entrepreneurs can use this data for targeted marketing and advertising campaigns aimed at audiences interested in renting properties.

In this article, we discussed the process of web scraping data from the popular online accommodation service Airbnb and provided examples of ready-made scripts. We discussed the advantages and disadvantages of data scraping compared to using APIs. We also explored alternative methods of obtaining data, including using the Airbnb API and third-party solutions.

If you prefer not to gather this data using Python, other programming languages are suitable for web scraping. For instance, JavaScript (using the Puppeteer library), Ruby (using the Nokogiri library), and Go (using the Colly library) can be good alternatives. Additionally, you can use a ready-made Airbnb no-code scraper, which provides a user interface for scraping data without coding.