Web scraping involves more than just retrieving HTML code. You should also process the code, and Python offers several libraries for this task. One of the most popular and beginner-friendly options is Beautiful Soup.

This library's simplicity makes it a popular choice for newcomers. This article will provide a detailed guide on using it for page parsing, along with potential challenges and workarounds.

Getting Started with Beautiful Soup

Before you start, make sure you have Python 3.9 or higher installed and a development environment set up. We also recommend working in a virtual environment so that the project's dependencies stay isolated from other projects and from the system-wide Python installation.

If you're new to Python programming, encounter installation issues, or need help setting up a virtual environment, refer to our introductory Python article for detailed instructions.

Install Beautiful Soup

Beautiful Soup is a popular Python library for web scraping. It is published on PyPI as beautifulsoup4 and imported in code as bs4. It allows you to easily parse HTML pages and extract various elements from their structure.

To install it, run the following pip command in the terminal or command line, depending on your operating system:

pip install beautifulsoup4

Once installed, import bs4 into your Python scripts and start web scraping.

The Basic Structure of HTML Pages

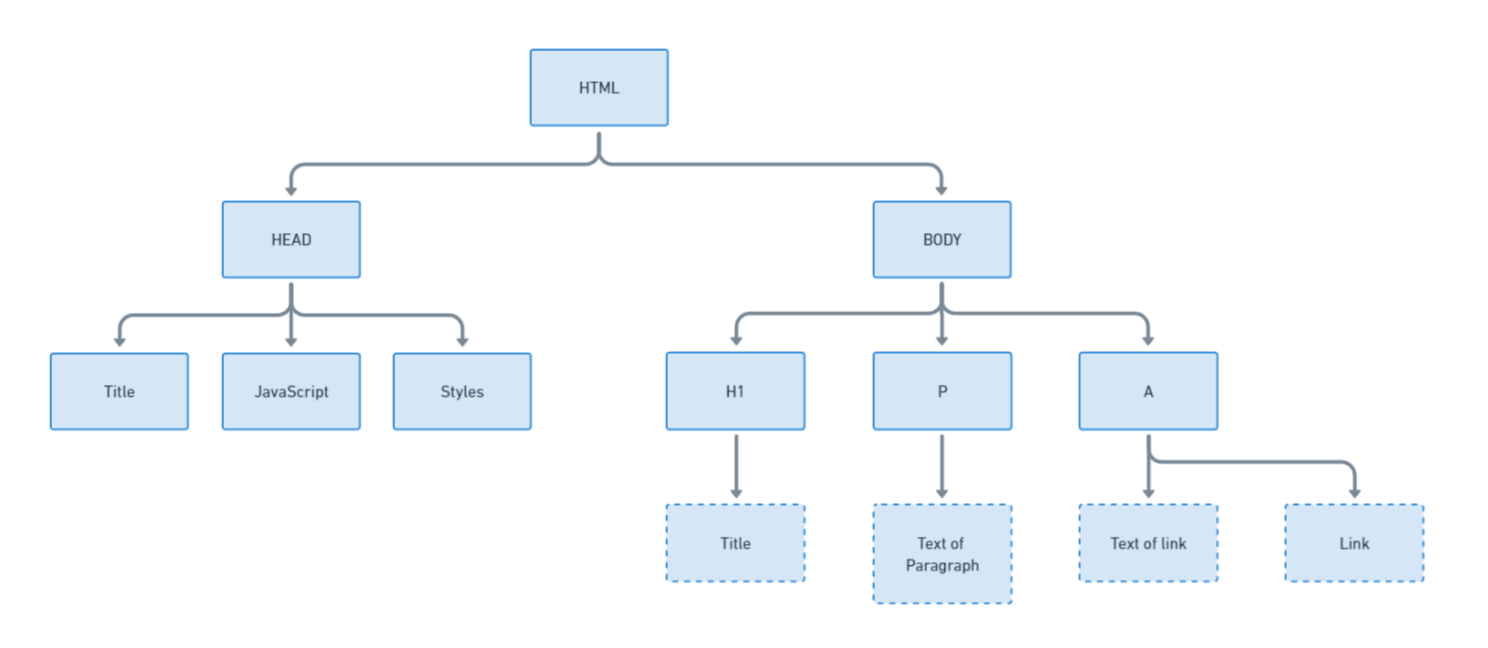

Before parsing data from an HTML page, it's crucial to understand its basic structure. HTML consists of tags, each with a specific role. For example, every page has a <head> containing the page title, styles, and scripts and a <body> containing the visible content.

To better understand the structure, let's visualize it:

Understanding the structure helps identify the data you want to extract. For example, links are typically stored in <a> tags, while plain text is in <p> tags. Let's consider the HTML code from example.com with some styles removed for brevity:

<html><head>
<title>Example Domain</title>
<meta charset="utf-8">
<meta http-equiv="Content-type" content="text/html; charset=utf-8">
<meta name="viewport" content="width=device-width, initial-scale=1">
<style type="text/css">
body {
    background-color: #f0f0f2;
    margin: 0;
    padding: 0;
    font-family: -apple-system, system-ui, BlinkMacSystemFont, "Segoe UI", "Open Sans", "Helvetica Neue", Helvetica, Arial, sans-serif;
}
div {
    width: 600px;
    margin: 5em auto;
    padding: 2em;
    background-color: #fdfdff;
    border-radius: 0.5em;
    box-shadow: 2px 3px 7px 2px rgba(0,0,0,0.02);
}
a:link, a:visited {
    color: #38488f;
    text-decoration: none;
}
@media (max-width: 700px) {
    div {
        margin: 0 auto;
        width: auto;
    }
}
</style>
<style>.reset-css {
    border: 0;
    line-height: 1.5;
    margin: 0;
    padding: 0;
    width: auto;
    height: auto;
    font-size: 100%;
    border-collapse: separate;
    border-spacing: 0;
    text-align: left;
    vertical-align: middle;
}
</style></head>
<body>
<div>
<h1>Example Domain</h1>
<p>This domain is for use in illustrative examples in documents. You may use this
domain in literature without prior coordination or asking for permission.</p>
<p><a href="https://www.iana.org/domains/example">More information...</a></p>
</div>
<div id="wt-sky-root"></div></body></html>

Every page is unique, with its own set of HTML tags. This means there's no one-size-fits-all script for parsing data from HTML pages. Instead, you need to identify the specific tags on each page containing your desired data.

To do this, you can use the DevTools in your browser. Open DevTools by pressing F12 or right-clicking the page and selecting "Inspect." Then, navigate to the "Elements" tab to view the HTML code.

Get HTML from a File or Website

As we mentioned, Beautiful Soup is a Python library for parsing HTML documents. It does not make HTTP requests or download pages itself; it only parses HTML that you have already obtained.

Before using BeautifulSoup, you need to obtain the HTML code you want to parse. Here are two common ways to do this: from an HTML file and a web page. Let's assume that we have a file index.html in the same folder as our script, which stores the HTML code of a page. Then, we can use the following code to get it:

# Read the saved page; the encoding is stated explicitly to avoid platform defaults
with open('index.html', 'r', encoding='utf-8') as file:
    html_code = file.read()

If you want to get data from a live page rather than an HTML file, you need an additional library that can make requests. You can use any library you are familiar with, such as requests, urllib, or http.client. Let's consider the Requests library as an example:

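import requests

# A timeout keeps the script from hanging on an unresponsive server
response = requests.get('https://example.com', timeout=10)
response.raise_for_status()  # stop early on 4xx/5xx responses
html_code = response.text

Now, the variable html_code contains the entire HTML code of the page, and we can parse it using the bs4 library.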

Parse HTML Code With Beautiful Soup

Now, we need to create a soup object, which will represent the HTML document we can interact with. We import the library into our project and create a soup object with the parser specified. In our case, it is the HTML parser:

from bs4 import BeautifulSoup

soup = BeautifulSoup(html_code, 'html.parser')

After that, we can access this object to extract the necessary data.
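To see the soup object in action without a file or a network request, here is a minimal sketch. The inline HTML string is a shortened, hypothetical stand-in for the html_code variable used in this article.

```python
from bs4 import BeautifulSoup

# Hypothetical inline HTML standing in for html_code read from a file or a response
html_code = """
<html>
  <head><title>Example Domain</title></head>
  <body>
    <h1>Example Domain</h1>
    <p><a href="https://www.iana.org/domains/example">More information...</a></p>
  </body>
</html>
"""

soup = BeautifulSoup(html_code, "html.parser")

page_title = soup.title.string   # text inside the <title> tag
heading = soup.h1.get_text()     # text inside the first <h1> tag

print(page_title)
print(heading)
```

Both values come straight from the parsed tree, which is the same object the rest of this article queries.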

Find HTML elements

Beautiful Soup provides various methods for searching and manipulating elements in an HTML document. Below are the main methods:

| Method | Description |
|---|---|
| find(name, attrs, recursive, string, **kwargs) | Find the first element that matches the specified parameters. |
| find_all(name, attrs, recursive, string, limit, **kwargs) | Find all elements that match the specified parameters. |
| find_next(name, attrs, string, **kwargs) | Find the next matching element after the specified element. |
| find_all_next(name, attrs, string, limit, **kwargs) | Find all matching elements after the specified element. |
| find_previous(name, attrs, string, **kwargs) | Find the previous matching element before the specified element. |
| find_all_previous(name, attrs, string, limit, **kwargs) | Find all matching elements before the specified element. |
| find_parent(name, attrs, string, **kwargs) | Find the closest matching parent of the specified element. |
| find_parents(name, attrs, string, limit, **kwargs) | Find all matching parents (ancestors) of the specified element. |
| find_next_sibling(name, attrs, string, **kwargs) | Find the next matching sibling of the specified element. |
| find_next_siblings(name, attrs, string, limit, **kwargs) | Find all matching siblings after the specified element. |
| find_previous_sibling(name, attrs, string, **kwargs) | Find the previous matching sibling of the specified element. |
| find_previous_siblings(name, attrs, string, limit, **kwargs) | Find all matching siblings before the specified element. |
| select(selector, namespaces=None, limit=None, **kwargs) | Find elements using CSS selectors. |

You can find the full list of methods in the official documentation. In this section, we will discuss several examples of using these methods to search for the required elements by various parameters.
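As a quick illustration of how several of these methods relate to each other, here is a small sketch. The HTML snippet and variable names are invented for the example.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet: one heading followed by sibling elements
html = """
<div id="box">
  <h2>Title</h2>
  <p>First</p>
  <p>Second</p>
  <span>Note</span>
</div>
"""
soup = BeautifulSoup(html, "html.parser")
heading = soup.find("h2")

# Closest enclosing <div> of the heading
parent_id = heading.find_parent("div")["id"]

# First <p> that appears after the heading in the document
next_p = heading.find_next("p").get_text()

# All <p> siblings that follow the heading
following = [p.get_text() for p in heading.find_next_siblings("p")]

# Nearest <p> sibling before the <span>
before_span = soup.find("span").find_previous_sibling("p").get_text()

print(parent_id, next_p, following, before_span)
```

The singular methods return one element (or None), while the plural ones return a list, mirroring the difference between find() and find_all().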

By HTML Tag Name

Beautiful Soup makes it easy to find elements on a page by their HTML tag. For example, if we know that all links are wrapped in <a> tags, we can use the find_all() method to find all <a> tags on a page:

all_a_tags = soup.find_all('a')

This will return a list of all <a> tags found. We can also use find() to get only the first matching HTML tag:

first_a_tag = soup.find('a')

Note that the search covers the entire HTML document, not just the part visible on the screen. The first <a> tag in the source may therefore sit in a hidden menu or a page header rather than in the main content, so check the page source before relying on the first match.
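The find_all() method also accepts a limit and a list of tag names, which can be handy on large pages. A short sketch on a made-up snippet:

```python
from bs4 import BeautifulSoup

# Hypothetical snippet with one heading and three links
html = "<body><h1>Heading</h1><a href='/a'>A</a><a href='/b'>B</a><a href='/c'>C</a></body>"
soup = BeautifulSoup(html, "html.parser")

all_links = soup.find_all("a")              # every <a> tag
first_two = soup.find_all("a", limit=2)     # stop after two matches
mixed = soup.find_all(["h1", "a"])          # match any of several tag names

mixed_names = [tag.name for tag in mixed]   # tags come back in document order
print(len(all_links), len(first_two), mixed_names)
```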

By ID

Some elements carry an id attribute. In valid HTML, an ID is unique within the page, so searching by ID is quite reliable.

element_by_id = soup.find(id='example_id')

The ID is an attribute of the HTML tag, which is why it is passed to find() as a keyword argument.
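Here is a minimal sketch of an ID lookup, including what happens when the ID does not exist. The IDs "intro" and "no_such_id" are invented for the example.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet; the id values are made up
html = '<div id="main"><p id="intro">Hello</p></div>'
soup = BeautifulSoup(html, "html.parser")

intro = soup.find(id="intro")              # search by ID alone
same_intro = soup.find("p", id="intro")    # tag name and ID combined
missing = soup.find(id="no_such_id")       # no match: find() returns None

print(intro.get_text(), missing)
```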

By Class Name

Classes are another convenient way to target elements. Website developers define a set of styles per class and assign that class to every element that should share them, so elements of the same kind usually share a class name. That makes classes useful for parsing, too.

elements_by_class = soup.find_all(class_='example_class')

Because class is a reserved word in Python, Beautiful Soup uses the keyword argument class_ instead. Sometimes, searching by class is the most convenient and even the only way to find elements on a page.
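One detail worth knowing is that an element can have several classes at once, and a class search matches if any one of them fits. A small sketch with invented class names:

```python
from bs4 import BeautifulSoup

# Hypothetical list; the class names are made up
html = """
<ul>
  <li class="item featured">One</li>
  <li class="item">Two</li>
  <li class="other">Three</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

# Matches every element whose class list contains "item"
items = [li.get_text() for li in soup.find_all(class_="item")]

# Tag name and class combined
featured = [li.get_text() for li in soup.find_all("li", class_="featured")]

# The class attribute itself is returned as a list of class names
first_item_classes = soup.find("li")["class"]

print(items, featured, first_item_classes)
```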

Using CSS selectors

Beautiful Soup also supports element search using CSS selectors, providing a fairly flexible approach. For example, let's consider finding all elements with the <a> tag located inside an element with the "container" class:

selected_elements = soup.select('.container a')

Using CSS selectors makes Beautiful Soup a really convenient tool. If you haven't used them before, you can learn more about CSS selectors and how to use them in our other article.
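To make the difference between a few common selector forms concrete, here is a sketch on a made-up snippet. It also uses select_one(), which returns only the first match.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet; the "container" class mirrors the example above
html = """
<div class="container">
  <a href="/one">One</a>
  <p><a href="/two">Two</a></p>
</div>
<a href="/outside">Outside</a>
"""
soup = BeautifulSoup(html, "html.parser")

# Descendant selector: any <a> nested anywhere inside .container
inside = [a["href"] for a in soup.select(".container a")]

# Child selector: only <a> tags that are direct children of .container
direct = [a["href"] for a in soup.select(".container > a")]

# Attribute selector, first match only
outside = soup.select_one("a[href='/outside']").get_text()

print(inside, direct, outside)
```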

Extract data

Selecting the appropriate element is not enough to obtain the required data. First, you need to determine whether you need the element's text or the content of its attribute. Data extraction will differ depending on this. In this section, we will consider both options.

Extract Text Content

As we mentioned earlier, the text on a page is usually located in the <p> tag. Therefore, as an example, let's extract the text from such an element:

paragraph_text = soup.find('p').text

If you want to extract the text from all elements with the <p> tag, it is important to remember that the find_all method returns a list, not an element. Therefore, to extract the contents of the tag, you need to iterate through all the elements of the list and extract the text one by one:

all_paragraphs = soup.find_all('p')
all_paragraph_texts = [paragraph.text for paragraph in all_paragraphs]

This code will allow you to extract the contents of all elements with the <p> tag on the page.
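Real pages often contain stray whitespace and nested tags inside paragraphs. As a sketch, the get_text() method can clean this up with a separator and strip=True; the snippet below is invented for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet with extra whitespace and a nested <b> tag
html = "<div><p>  First paragraph. </p><p>Second <b>bold</b> one.</p></div>"
soup = BeautifulSoup(html, "html.parser")

# Strip each text fragment and join the fragments with a single space
clean_texts = [p.get_text(" ", strip=True) for p in soup.find_all("p")]

print(clean_texts)
```

Passing a separator matters here: with strip=True alone, the fragments around the nested tag would be joined without spaces.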

Extract Attribute Values

Another common use case is extracting links. A link's URL is not stored in the tag's text but in one of its attributes, for example:

<a href="https://www.iana.org/domains/example">More information...</a>

To read an attribute, index the found element with the attribute name:

link_href = soup.find('a')['href']

Now, let's extract all the links on the page:

all_links = soup.find_all('a')
all_link_hrefs = [link['href'] for link in all_links]

This way, you can extract information from any tag or its attributes. The main thing is to pay attention to whether you are getting an element or a list, because the processing differs.
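Not every <a> tag has an href attribute, and indexing a missing attribute raises a KeyError. The sketch below shows two ways around that, plus converting relative links to absolute ones with the standard library. The snippet and the base_url value are assumptions made for the example.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

# Hypothetical snippet: the second <a> has no href attribute
html = """
<a href="https://www.iana.org/domains/example">More information...</a>
<a name="anchor-only">No href here</a>
<a href="/relative/path">Relative</a>
"""
soup = BeautifulSoup(html, "html.parser")

# .get() returns None instead of raising KeyError when the attribute is missing
hrefs_or_none = [link.get("href") for link in soup.find_all("a")]

# href=True keeps only the tags that actually have an href attribute
hrefs = [link["href"] for link in soup.find_all("a", href=True)]

# Resolve relative links against the page URL (assumed here)
base_url = "https://example.com/"
absolute_links = [urljoin(base_url, href) for href in hrefs]

print(hrefs_or_none)
print(absolute_links)
```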

Advanced Techniques

At this stage, we have already covered the main methods of the bs4 library, and they will be enough to solve most problems. However, we would also like to share some additional tricks that may be useful when working on certain projects.

Navigate between Elements

Sometimes, it's easier to find an element by its siblings or parents than by itself. For example, suppose you can't find the element you need directly, but you can easily identify its previous sibling:

current_element = soup.find(id='example_id')

Then find the next <p> element after current_element:

next_element = current_element.find_next_sibling('p')

You can also find the previous sibling:

prev_element = current_element.find_previous_sibling('p')

You can use similar methods to find parent and child elements.
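For parents and children, a possible sketch looks like this. The "card" markup is invented, and the id 'example_id' is reused from the example above.

```python
from bs4 import BeautifulSoup

# Hypothetical product card; only the heading has a convenient ID
html = """
<div id="card">
  <h2 id="example_id">Name</h2>
  <p>Price: 10</p>
  <p>In stock</p>
</div>
"""
soup = BeautifulSoup(html, "html.parser")
current_element = soup.find(id="example_id")

# Sibling lookup skips the whitespace between tags
next_text = current_element.find_next_sibling("p").get_text()

# .parent gives the enclosing element
parent_id = current_element.parent["id"]

# recursive=False restricts the search to direct children
child_names = [child.name for child in current_element.parent.find_all(recursive=False)]

print(next_text, parent_id, child_names)
```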

Handling Nested Elements

Beautiful Soup also offers a more concise way to reach nested elements: dot notation. It lets you access the first nested tag with a given name directly from a parent element.

For example, let's find a parent div element:

outer_div = soup.find('div')

Then access a nested element directly:

inner_paragraph = outer_div.p

This can be more convenient for working with nested structures.
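Two properties of dot notation are easy to miss: it returns only the first matching tag at any depth, and it returns None when nothing matches. A sketch on an invented snippet:

```python
from bs4 import BeautifulSoup

# Hypothetical nested structure
html = "<div><span>skip</span><p>First <b>bold</b></p><p>Second</p></div>"
soup = BeautifulSoup(html, "html.parser")

outer_div = soup.find("div")

inner_paragraph = outer_div.p    # first <p> inside the div, same as outer_div.find("p")
bold = outer_div.p.b             # dot notation can be chained
missing = outer_div.table        # no <table> inside the div, so this is None

print(inner_paragraph.get_text(), bold.get_text(), missing)
```

To reach the second paragraph, you would fall back to find_all('p') or a sibling method.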

Filter Elements

You can also use Beautiful Soup to filter the elements it finds. This is useful when you need specific information, want to skip unwanted elements, or want to organize the data. For example, let's find all <p> tags and filter them by text content:

filtered_elements = soup.find_all('p', string='desired_text')

This returns only the <p> tags whose text is exactly 'desired_text'. Older versions of Beautiful Soup call this argument text. Using the same principle, you can filter the content of any tags and elements.
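Exact matches are often too strict. As a sketch, the string argument also accepts a compiled regular expression, and find_all() accepts a function that decides whether each tag matches. The paragraphs below are made up.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical paragraphs to filter
html = """
<p>Price: 10 USD</p>
<p>Out of stock</p>
<p>Price: 25 USD</p>
"""
soup = BeautifulSoup(html, "html.parser")

# Exact text match
exact = soup.find_all("p", string="Out of stock")

# Regular expression match on the paragraph text
prices = [p.get_text() for p in soup.find_all("p", string=re.compile(r"^Price:"))]

# Custom function: called for every tag, keeps those it returns True for
stock_notes = soup.find_all(lambda tag: tag.name == "p" and "stock" in tag.get_text())

print(len(exact), prices, len(stock_notes))
```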

Extract all Text

While most text content is stored in <p> tags, there are cases where you need to extract all text from a page regardless of its container tag. To do this, you can use the get_text() method on the entire BeautifulSoup object:

all_text = soup.get_text()

This code will return all text from the parsed page, regardless of its tag structure.
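By default, get_text() glues text fragments together exactly as they appear in the source, which can merge words from neighboring tags. The sketch below, on an invented snippet, shows the separator and strip arguments as well as the stripped_strings generator.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet without whitespace between tags
html = "<div><h1>Title</h1><p>First <b>bold</b> text.</p><p>Second.</p></div>"
soup = BeautifulSoup(html, "html.parser")

raw_text = soup.get_text()                     # fragments joined with no separator
spaced_text = soup.get_text(" ", strip=True)   # stripped fragments joined by a space
fragments = list(soup.stripped_strings)        # the individual stripped fragments

print(raw_text)
print(spaced_text)
print(fragments)
```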

Make HTML Formatting Pretty

Although this task is rarely encountered, there may be times when you need a way to make your HTML code pretty. You can do this using the following method:

pretty_html = soup.prettify()

This will add the necessary indentation and line breaks to your HTML code.
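A minimal sketch of prettify() on a one-line, made-up snippet. The exact indentation may vary between Beautiful Soup versions, so the example only prints the result.

```python
from bs4 import BeautifulSoup

# Hypothetical one-line HTML
compact_html = "<div><p>Hello</p></div>"
soup = BeautifulSoup(compact_html, "html.parser")

pretty_html = soup.prettify()   # each tag and text node goes on its own line
print(pretty_html)

# The prettified markup still parses to the same content
reparsed_text = BeautifulSoup(pretty_html, "html.parser").p.get_text(strip=True)
```

Keep in mind that prettify() is meant for reading and debugging; the added whitespace becomes part of the text if you parse the output again, which is why the sketch strips it.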

Handling Challenges

You may encounter some difficulties when processing HTML code with Beautiful Soup. This section covers the most common ones and how to overcome them.

Missing or Broken HTML

Websites may contain incomplete or malformed HTML. BeautifulSoup is designed to handle such "dirty" HTML and will try its best to interpret it and provide access to the data. For example, the following incomplete HTML will be parsed correctly:

incomplete_html = '<p>This is a paragraph'
soup = BeautifulSoup(incomplete_html, 'html.parser')

As practice shows, Beautiful Soup copes with such problems quite well.
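To check what the parser actually does with unclosed tags, you can print the repaired tree. A small sketch with the built-in html.parser; other parsers such as lxml or html5lib may repair the same markup differently.

```python
from bs4 import BeautifulSoup

# Both <p> and <b> are left unclosed on purpose
incomplete_html = "<p>This is a <b>paragraph"
soup = BeautifulSoup(incomplete_html, "html.parser")

repaired_html = str(soup)           # open tags are closed at the end of the document
paragraph_text = soup.p.get_text()  # the text is still accessible as usual

print(repaired_html)
print(paragraph_text)
```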

Error Conditions

Like any code, scripts that use Beautiful Soup can fail at runtime. For example, if find() does not match anything, it returns None (find_all() returns an empty list), and calling a method or reading an attribute on that None raises an AttributeError. To avoid this, use try/except blocks or conditional checks to handle missing data.
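Both approaches can be sketched as follows. The "price" class and the snippet are invented; the point is only how a missing element behaves.

```python
from bs4 import BeautifulSoup

# Hypothetical page that has no <span class="price"> element
html = "<div><h1>Title</h1></div>"
soup = BeautifulSoup(html, "html.parser")

# Option 1: conditional check before using the result of find()
price_tag = soup.find("span", class_="price")
price = price_tag.get_text(strip=True) if price_tag is not None else None

# Option 2: try/except around the access
try:
    price_from_try = soup.find("span", class_="price").get_text(strip=True)
except AttributeError:
    # find() returned None, so .get_text() is not available
    price_from_try = None

# find_all() never returns None; with no matches it returns an empty list
all_prices = soup.find_all("span", class_="price")

print(price, price_from_try, len(all_prices))
```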

Dynamic Content

Beautiful Soup only parses the HTML it is given; it does not fetch pages or execute JavaScript. For dynamic pages, where content is rendered by scripts in the browser, you need a separate tool that loads the page and runs its JavaScript. Then, you can use Beautiful Soup or the chosen tool to extract the required elements.

Popular tools for handling dynamic pages include Selenium and Pyppeteer. You can use them to get the HTML code:

from selenium import webdriver
from bs4 import BeautifulSoup

driver = webdriver.Chrome()
try:
    driver.get('https://example.com')
    # page_source holds the HTML after the browser has loaded the page
    dynamic_content = driver.page_source
finally:
    driver.quit()  # always close the browser, even if loading fails

soup = BeautifulSoup(dynamic_content, 'html.parser')

However, since these tools provide their own parsing methods, using Beautiful Soup may be redundant.

Conclusion

Beautiful Soup is one of the most popular and user-friendly web scraping libraries. It's easy to learn, even for users without extensive web technologies or programming knowledge. It provides a variety of methods for searching, navigating, and extracting data from HTML. Its flexible filtering and navigation capabilities suit various web scraping scenarios.

However, as with any other tool, it has some limitations. It makes no requests of its own, asynchronous or otherwise, so fetching many pages efficiently is up to the HTTP client you pair it with. Additionally, it doesn't execute JavaScript, so other tools like Selenium may be required for web pages where content is generated using JavaScript.

As mentioned in the article, this library was developed for parsing and doesn't perform HTTP requests independently. Therefore, additional libraries like Requests are necessary for downloading web pages.

Overall, BS4 is a powerful and convenient tool for web scraping. However, like any technology, it has its own strengths and limitations. The choice of using it depends on the specific requirements of the task and the developer's preferences.