In this tutorial, we will use Python to scrape product data from Walmart's website. We will explore different ways to parse and extract the data we need and discuss some of the potential challenges associated with web scraping.

Walmart offers a vast selection of products in its online store, making it an ideal candidate for web scraping projects. By leveraging web scraping techniques, you can automate the collection of product data such as prices, availability, images, descriptions, rating, reviews, and more. This data can then be used in your applications or analysis projects.

Introduction to Walmart Scraping

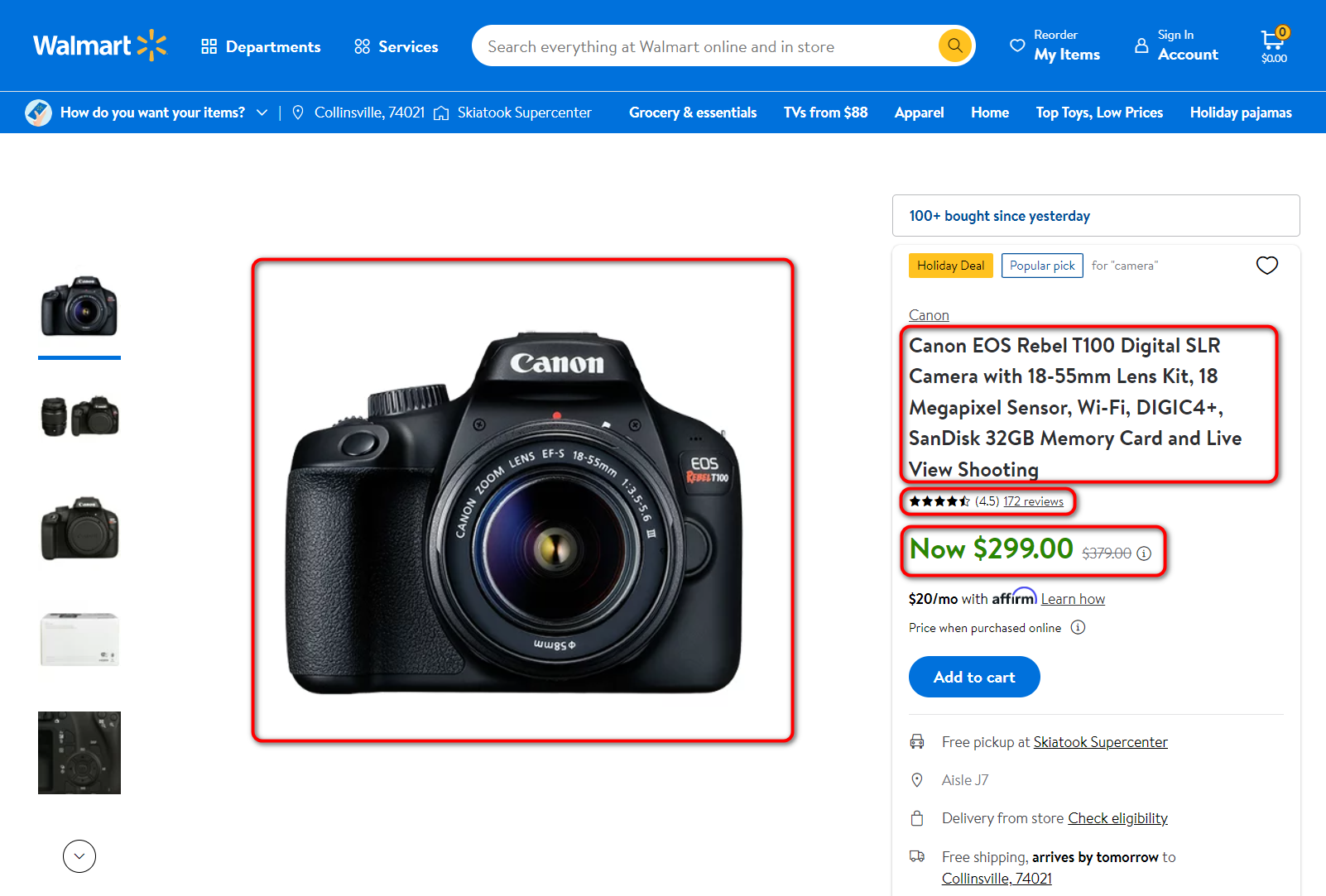

To scrape product data, you first need to analyze the page it lives on. Let's look at a product page: all product pages share the same layout, so after analyzing one, you can extract the needed data from the others with the same script.

We are interested in the following fields for scraping:

- Product name

- Product price

- The number of reviews

- Rating

- Main image

Of course, you may need other fields for specific purposes, such as the description or brand, but we will only consider the primary product data in this example. The other details can be extracted in the same way. So, as an example, let's build a scraper that will collect links from a .csv file, follow each of them and save the resulting data to another file.

You can also collect product URLs from pages and categories using a script to collect all the products, but we will not discuss it in this guide. We previously published an article about scraping data from Amazon and looked at how you can organize the transition to the search results page and collect all product links. For Walmart, this can be done similarly.


Walmart is a difficult site to extract data from: it does not welcome product scraping and uses anti-bot protection. Because it tracks and blocks IP addresses, most scrapers trying to access the site will likely be blocked. So before creating a Walmart scraper in Python, note that if you write your own scraper, you will also need to take care of bypassing blocks.

If there is no opportunity or time to organize a system for bypassing blocks, you can scrape data using a web scraping API, which solves these problems. How to scrape Walmart using our API is covered in detail at the end of the article.


Installing Python Libraries for Scraping

Before searching for elements to scrape, let's create a Python file and include the necessary libraries. We will use:

- The Requests library for executing requests.

- The BeautifulSoup library for simplifying the parsing of the web page.

If you don't have these libraries installed, use the following command on the command line. Neither Requests nor BeautifulSoup ships with Python, and the lxml package provides the parser used later in this tutorial:

    pip install requests beautifulsoup4 lxml

Include these libraries in a Python file:

    from bs4 import BeautifulSoup
    import requests

Let's create or clear the file (overwrite) in which we will store the data from Walmart. Let's name this file result.csv and define the columns that will be in it:

    with open("result.csv", "w") as f:
        f.write("title; price; reviews; rating; image\n")

After that, open the links.csv file, which stores links to the product pages that we will scrape. We will go through all of them in turn and process the data for each of them. The following code will do it:

    with open("links.csv", "r") as links:
        for link in links:

Now we get the entire code of the page with which we will work further and parse it using BeautifulSoup:

            # strip() removes the trailing newline that each line of the file carries
            html_text = requests.get(link.strip()).text
            soup = BeautifulSoup(html_text, 'lxml')

At this stage, we already have the product page code, and we can display it, for example, using print(soup).
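Lines read from a file keep their trailing newline, and a links file often contains blank lines. A minimal sketch of cleaning the list before requesting anything is shown here. The helper name `read_links` is hypothetical, the second URL is a made-up sample, and `io.StringIO` stands in for the real links.csv:

```python
import io


def read_links(lines):
    """Return stripped, non-empty links from an iterable of text lines."""
    links = []
    for line in lines:
        link = line.strip()
        if link:  # skip blank lines
            links.append(link)
    return links


# io.StringIO imitates an open links.csv file
sample_file = io.StringIO(
    "https://www.walmart.com/ip/771229626\n"
    "\n"
    "  https://www.walmart.com/ip/123456789  \n"
)
links = read_links(sample_file)
print(links)
```

With a real file, the same function can be called as `read_links(open("links.csv"))`.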

How To Build A List Of Walmart Product URLs

Before scraping individual products, let's assume we don't have a file with product page links and want to collect them. To avoid manual work, we'll create a script that automates this process and saves the links to a file. While saving to a file is optional (since we'll use the links directly later), we'll implement it for better clarity.

First, we'll create search page links. We'll use a list of keywords to find products and save them in a keywords.txt file. Then, we'll import the necessary libraries into our script. In addition to the previously mentioned ones, we'll also need the urllib library for convenient link manipulation and construction.

    import urllib.parse
    import requests
    from bs4 import BeautifulSoup

Next, define variables where you specify the path and filename of the keyword file for requests and the file where you want to store links to specific products.

    keywords_filename = "keywords.txt"
    output_filename = "output_links.txt"

Define another variable to store the base URL we will use as a starting point when composing search page links.

    base_url = "https://www.walmart.com/search"

Next, extract a list of keywords from the file and create links to search pages.

    with open(keywords_filename, 'r') as file:
        keywords = [line.strip() for line in file]

    search_links = [f"{base_url}?q={urllib.parse.quote(keyword)}" for keyword in keywords]

Set the request headers. A list of latest User Agents, as well as information about what they are and why they are needed, can be found in our other article.

    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
    }

Create a list variable to store ready-made product links:

    all_absolute_links = []

Make a loop to iterate through all previously generated search page links:

    for link in search_links:

In this loop, you can decide whether to collect links from only one page or try to iterate through all of them. The code you write in this loop will be executed for all search pages. However, we will only get products from the first search page and won't go any further. To do this, we will request the current link and parse the response containing the page's HTML code.

        response = requests.get(link, headers=headers)
        soup = BeautifulSoup(response.text, 'html.parser')

Extract all product links from a web page:

        absolute_links = [a['href'] for a in soup.find_all('a', class_='absolute', href=True)]

Some of these links may be relative, so convert them to absolute URLs; otherwise they would be saved without the site address:

        absolute_links = [urllib.parse.urljoin("https://www.walmart.com", url) for url in absolute_links]

Add the collected links to the list:

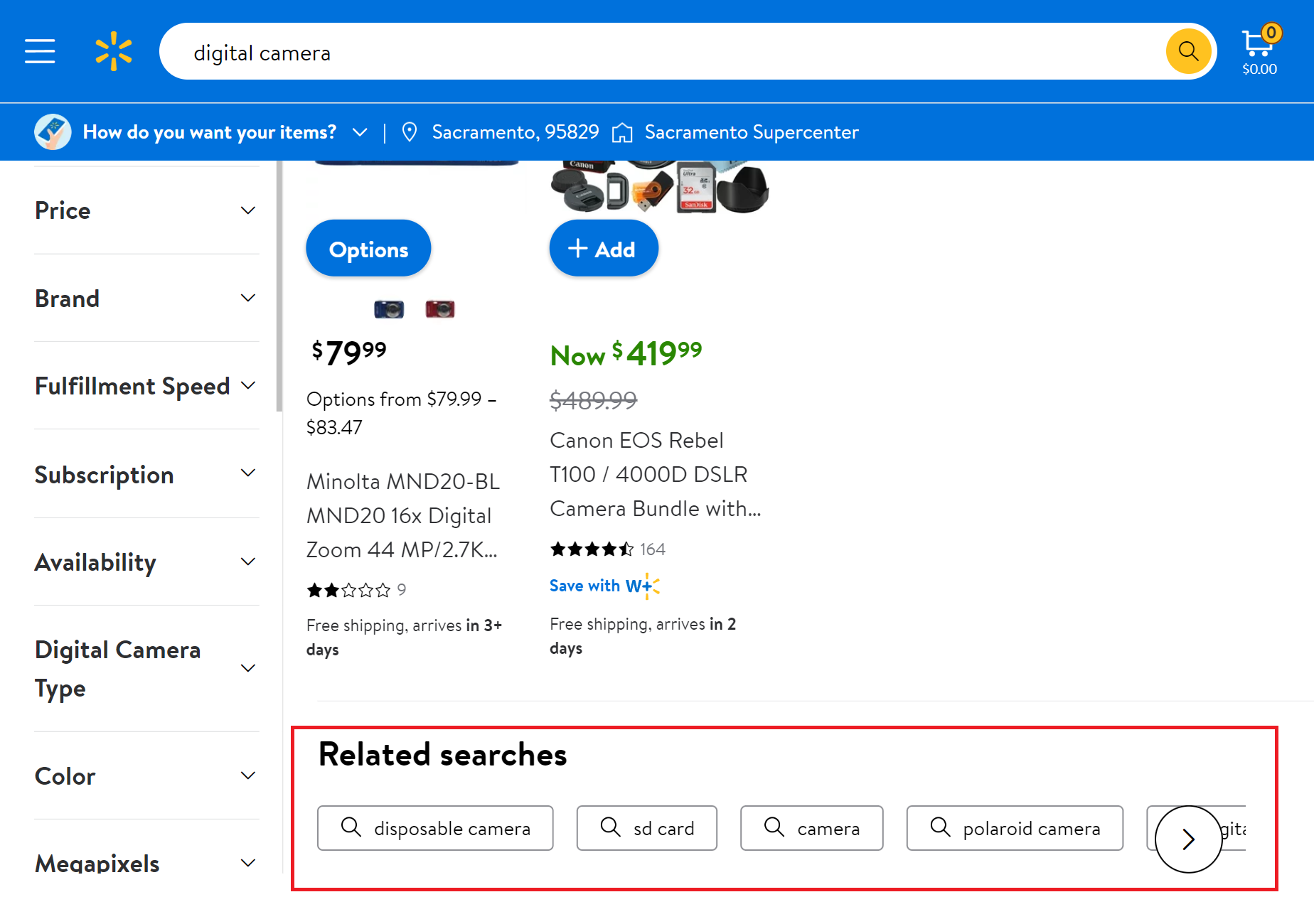

        all_absolute_links.extend(absolute_links)

You can also find related searches on the search results page that can be potential keywords for you.

Let's scrape them as well and save them in a separate file. To do this, create another list somewhere at the beginning where we can store the related searches:

    related_searches = []

Let's refine our loop and store the contents of the "related searches" container in a variable:

        carousel_container = soup.find("ul", {"data-testid": "carousel-container"})

If the variable is not empty, extract its elements and add their text to the "related searches" list:

        if carousel_container:
            searches = carousel_container.find_all("li")
            for search in searches:
                related_searches.append(search.get_text())

Having collected the product links and related searches, we simply need to save them to files. The code below writes the product links; the related searches can be written to a separate file in the same way.

    with open(output_filename, 'w') as output_file:
        for absolute_link in all_absolute_links:
            output_file.write(absolute_link + '\n')

After successfully scraping links, you can display a notification or continue processing the extracted product page links.
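The two URL-handling steps in this section, composing search links and turning hrefs into absolute links, can be tried without any network access. The sketch below uses only `urllib.parse`; the helper names `build_search_url` and `normalize_links` and the sample hrefs are hypothetical, and removing duplicates is an extra step that the walkthrough above does not perform:

```python
from urllib.parse import urlencode, urljoin

BASE_URL = "https://www.walmart.com"


def build_search_url(keyword):
    """Build a search page URL; urlencode escapes spaces and special characters."""
    return f"{BASE_URL}/search?{urlencode({'q': keyword})}"


def normalize_links(hrefs):
    """Make every href absolute and drop duplicates, keeping first-seen order."""
    seen = set()
    result = []
    for href in hrefs:
        absolute = urljoin(BASE_URL, href)  # absolute hrefs pass through unchanged
        if absolute not in seen:
            seen.add(absolute)
            result.append(absolute)
    return result


search_url = build_search_url("dslr camera")
product_links = normalize_links(
    ["/ip/A/1", "https://www.walmart.com/ip/A/1", "/ip/B/2"]
)
print(search_url)
print(product_links)
```

`urljoin` is used instead of string concatenation because it handles both relative and already-absolute hrefs with the same call.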

Extracting Data from Product Pages

Way 1. Parsing the Page Body

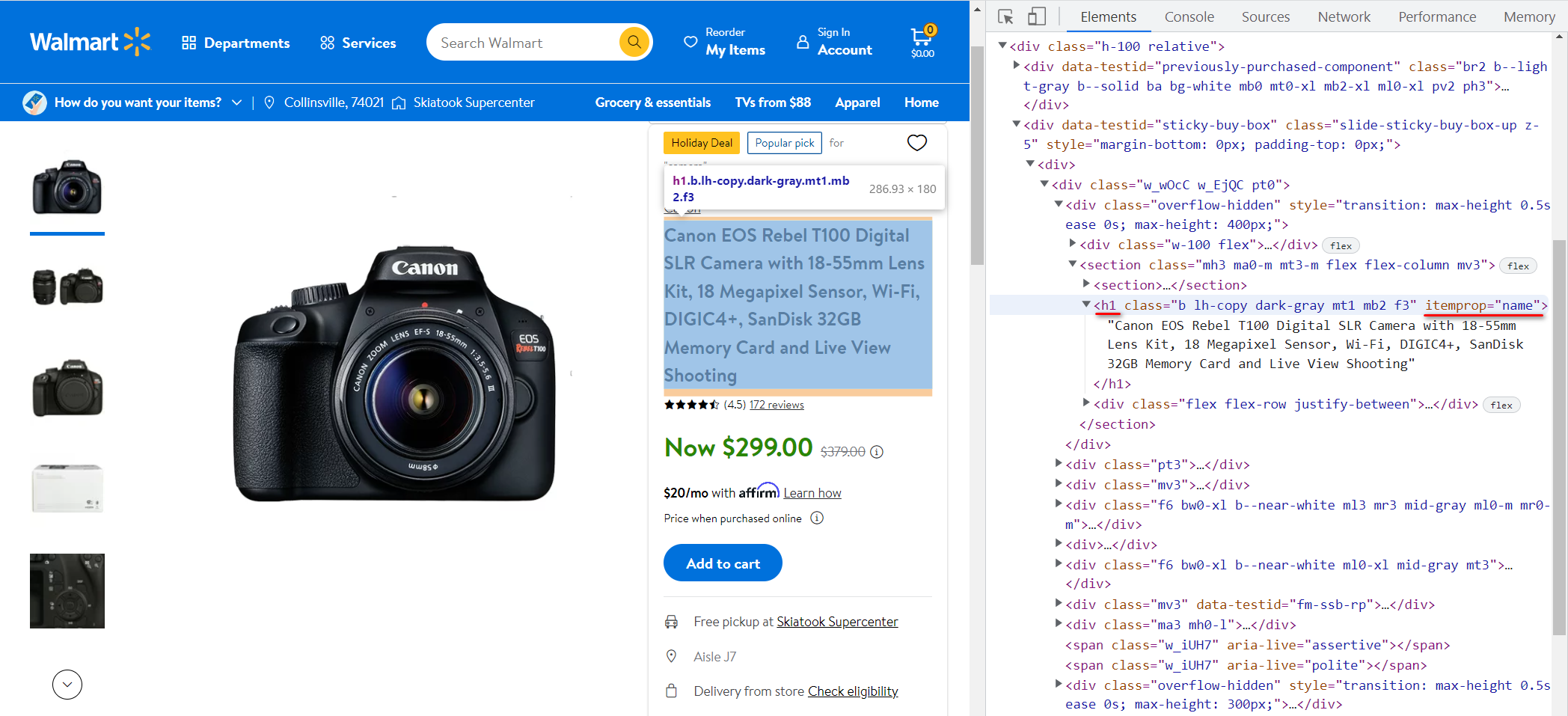

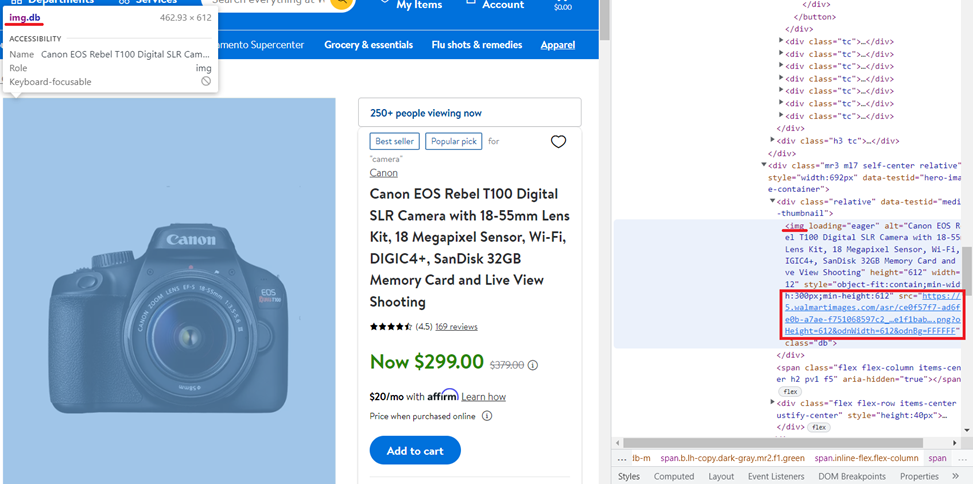

To select only the necessary information, let's analyze the page to find selectors and attributes that uniquely describe the essential data.

Let's go to the Walmart website and look at the product page again. Let's find the product title first. To do this, go to DevTools (F12) and find the element code (Ctrl + Shift + C and select the element):

In this case, it is in the <h1> tag, and its itemprop attribute has the value "name". Let's enter the value of the text stored in this tag in the variable title:

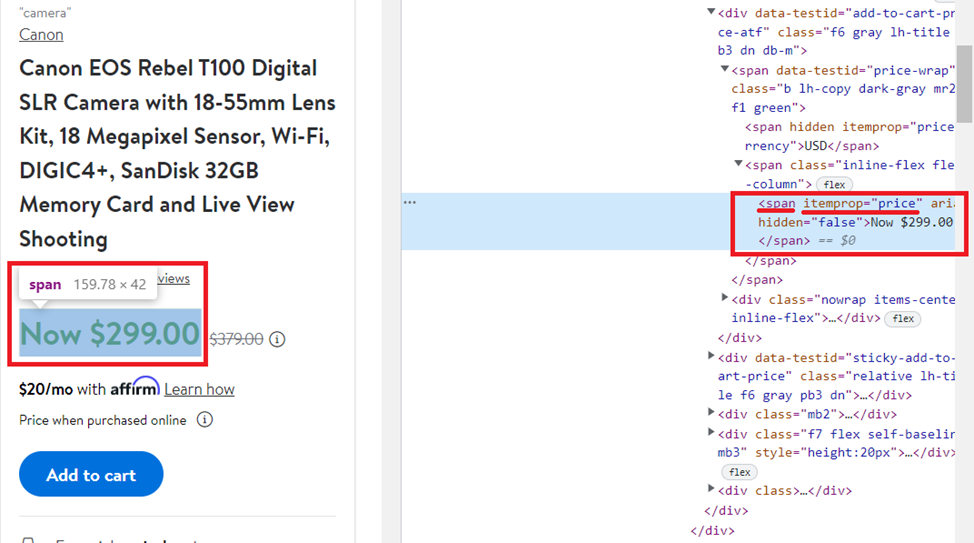

    title = soup.find('h1', attrs={'itemprop': 'name'}).text

Let's do the same, but now for the price:

Get the content of the <span> tag with the value of the itemprop attribute equal to "price":

    price = soup.find('span', attrs={'itemprop': 'price'}).text

The analysis for the review count and rating is performed similarly, so we give the ready-made result:

    reviews = soup.find('a', attrs={'itemprop': 'ratingCount'}).text
    rating = soup.find('span', attrs={'class': 'rating-number'}).text

With the image, everything is a little different. Let's look at the product page and the item code:

This time, you need to get the src attribute's value, which is stored in the <img> tag with the "db" class and the loading attribute equal to "eager". Thanks to the BeautifulSoup library, this is quite easy to do:

    image = soup.find('img', attrs={'class': 'db','loading': 'eager'})["src"]

We should remember that in this case, not every page will return the image. So, it is better to use information from the meta tag:

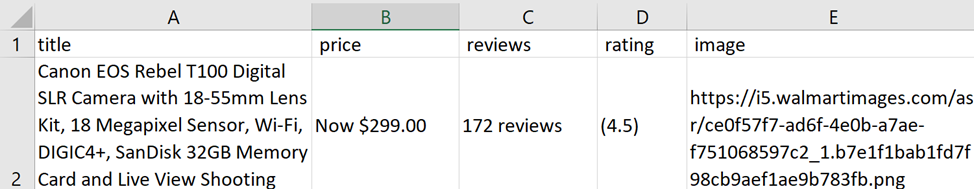

    image = soup.find('meta', property="og:image")["content"]

If we start scraping with the above variables, we will get the following product details:

    Canon EOS Rebel T100 Digital SLR Camera with 18-55mm Lens Kit, 18 Megapixel Sensor, Wi-Fi, DIGIC4+, SanDisk 32GB Memory Card and Live View Shooting
    Now $299.00
    172 reviews
    (4.5)
    https://i5.walmartimages.com/asr/ce0f57f7-ad6f-4e0b-a7ae-f751068597c2_1.b7e1f1bab1fd7f98cb9aef1ae9b783fb.png

Let's save the received data to the previously created/cleared file:

    try:
        with open("result.csv", "a") as f:
            f.write(str(title)+"; "+str(price)+"; "+str(reviews)+"; "+str(rating)+"; "+str(image)+"\n")
    except Exception as e:
        print("There is no data")

It is advisable to do this in a try…except block because if there is no data or the link is not specified correctly, the program execution will be interrupted. The try...except block allows you to "catch" such an error, report it and continue the program. Keep in mind that the block only catches errors raised inside it, so for a missing element to be caught, the extraction lines must also sit inside the try.
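To illustrate the "catch, report and continue" idea, here is a minimal sketch in which the whole per-link step sits inside the try block. The names `scrape_all` and `fake_scrape` are hypothetical; `fake_scrape` stands in for the real request-and-parse code so that the example runs without network access:

```python
def scrape_all(links, scrape_one):
    """Call scrape_one for every link; collect results and failed links separately."""
    results, failed = [], []
    for link in links:
        try:
            results.append(scrape_one(link))
        except Exception as error:  # broad on purpose: one bad page must not stop the run
            print(f"Skipping {link}: {error!r}")
            failed.append(link)
    return results, failed


def fake_scrape(link):
    """Hypothetical stand-in for the real request-and-parse step."""
    if "broken" in link:
        # the kind of error soup.find(...) raises when the element is missing
        raise AttributeError("'NoneType' object has no attribute 'text'")
    return {"link": link, "title": "Sample product"}


results, failed = scrape_all(
    ["https://example.com/ok", "https://example.com/broken"], fake_scrape
)
print(len(results), "scraped,", len(failed), "failed")
```

Keeping the failed links in a separate list makes it possible to retry them later instead of losing them silently.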

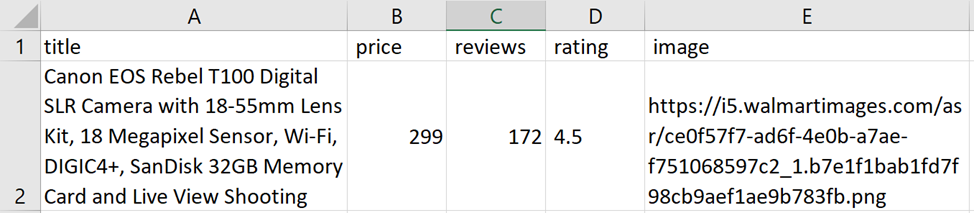

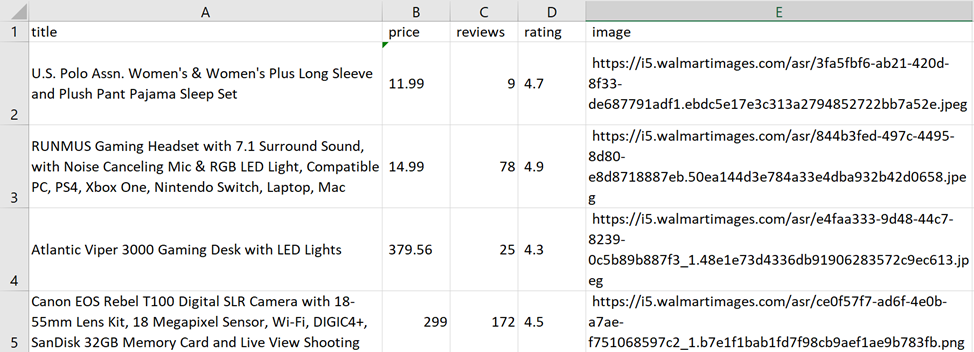

If you run this program, the scraped data will be saved in the file result.csv:

Here we see several problems. First, there is extra text in the price, reviews, and rating columns. Secondly, if there is a discount, the price column may display incorrect information or 2 prices.

Of course, it's not a problem to leave only numeric values with regular expressions, but why complicate the task if there is an easier way?
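For reference, the regular-expression cleanup mentioned above could look roughly like this. It is a sketch under the assumption that each scraped string contains at most one number of interest; the helper name `extract_number` is hypothetical:

```python
import re


def extract_number(text):
    """Return the first number found in text as a float, or None if there is none."""
    match = re.search(r"\d+(?:[.,]\d+)*", text)
    if match is None:
        return None
    # drop thousands separators such as the comma in "1,299.00"
    return float(match.group().replace(",", ""))


price = extract_number("Now $299.00")
reviews = extract_number("172 reviews")
rating = extract_number("(4.5)")
print(price, reviews, rating)
```

This handles the extra text, but it does not solve the case where the price column holds two prices, because only the first number is returned.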

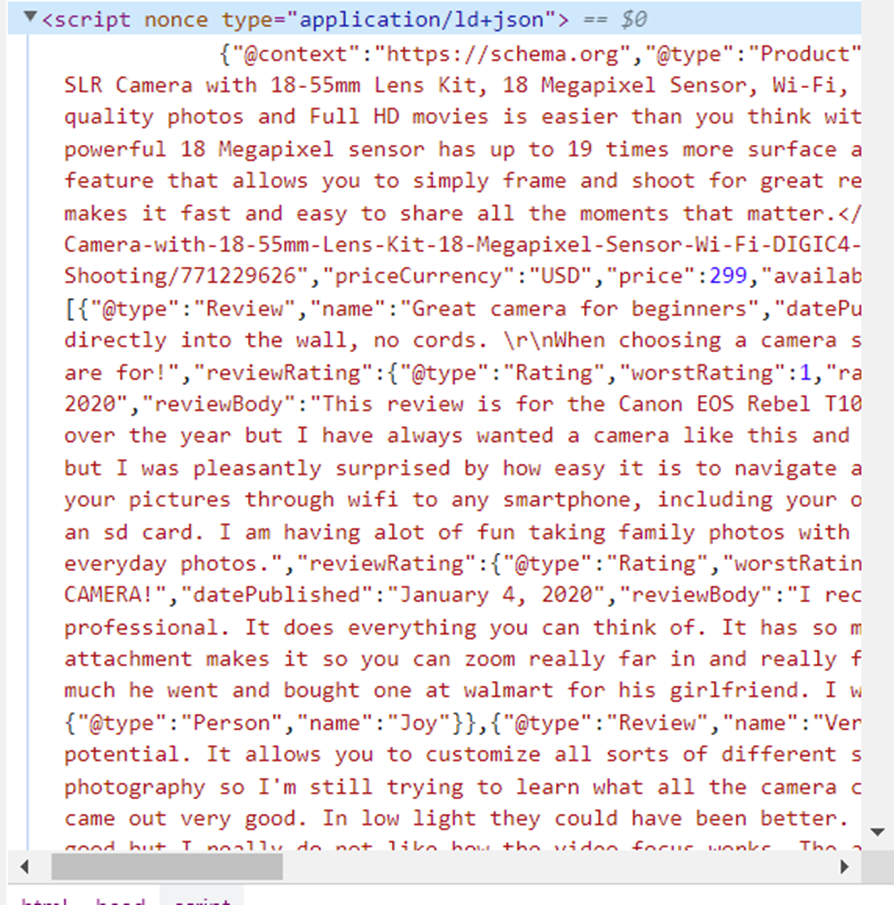

Way 2. Parsing the JSON-LD Structured Data

If you carefully look at the page code, you will notice that all the necessary information is stored not only in the page body. Let's pay attention to the <head>…</head> tag, more precisely to its <script nonce type="application/ld+json">…</script> tag.

This is the “Product” Schema Markup in JSON format. The product schema allows adding specific product attributes to product listings that can appear as rich results on the search engine results page (SERP). Let's copy it and format it into a convenient form for research:

{

"@context": "https://schema.org",

"@type": "Product",

"image": "https://i5.walmartimages.com/asr/ce0f57f7-ad6f-4e0b-a7ae-f751068597c2_1.b7e1f1bab1fd7f98cb9aef1ae9b783fb.png",

"name": "Canon EOS Rebel T100 Digital SLR Camera with 18-55mm Lens Kit, 18 Megapixel Sensor, Wi-Fi, DIGIC4+, SanDisk 32GB Memory Card and Live View Shooting",

"sku": "771229626",

"gtin13": "013803300550",

"description": "<p>Creating distinctive stories with DSLR quality photos and Full HD movies is easier than you think with the 18 Megapixel Canon EOS Rebel T100. Share instantly and shoot remotely via your compatible smartphone with Wi-Fi and the Canon Camera Connect app. The powerful 18 Megapixel sensor has up to 19 times more surface area than many smartphones, and you can instantly transfer photos and movies to your smart device. The Canon EOS Rebel T100 has a Scene Intelligent Auto feature that allows you to simply frame and shoot for great results. It also features Guided Live View shooting with Creative Auto mode, and you can add unique finishes with Creative Filters. The Canon EOS Rebel T100 makes it fast and easy to share all the moments that matter.</p>",

"model": "T100",

"brand": {

"@type": "Brand",

"name": "Canon"

},

"offers": {

"@type": "Offer",

"url": "https://www.walmart.com/ip/Canon-EOS-Rebel-T100-Digital-SLR-Camera-with-18-55mm-Lens-Kit-18-Megapixel-Sensor-Wi-Fi-DIGIC4-SanDisk-32GB-Memory-Card-and-Live-View-Shooting/771229626",

"priceCurrency": "USD",

"price": 299,

"availability": "https://schema.org/InStock",

"itemCondition": "https://schema.org/NewCondition",

"availableDeliveryMethod": "https://schema.org/OnSitePickup"

},

"review": [

{

"@type": "Review",

"name": "Great camera for beginners",

"datePublished": "January 4, 2020",

"reviewBody": "Love this camera....",

"reviewRating": {

"@type": "Rating",

"worstRating": 1,

"ratingValue": 5,

"bestRating": 5

},

"author": {

"@type": "Person",

"name": "Sparkles"

}

},

{

"@type": "Review",

"name": "Perfect for beginners",

"datePublished": "January 7, 2020",

"reviewBody": "I am so in love with this camera!...",

"reviewRating": {

"@type": "Rating",

"worstRating": 1,

"ratingValue": 5,

"bestRating": 5

},

"author": {

"@type": "Person",

"name": "Brazilchick32"

}

},

{

"@type": "Review",

"name": "Great camera",

"datePublished": "January 17, 2020",

"reviewBody": "I really love all the features this camera has. Every time I use it, I'm discovering a new one. I'm pretty technologically challenged, but this hasn't hindered me. The zoom and focus give very detailed and sharp images. I cannot wait to take it on my next trip as right now I've only photographed the dog a million times",

"reviewRating": {

"@type": "Rating",

"worstRating": 1,

"ratingValue": 5,

"bestRating": 5

},

"author": {

"@type": "Person",

"name": "userfriendly"

}

}

],

"aggregateRating": {

"@type": "AggregateRating",

"ratingValue": 4.5,

"bestRating": 5,

"reviewCount": 172

}

}

Now it is obvious that this information is enough and it is stored in a more convenient form. That is, it is necessary to read the contents of the <head>…</head> tag and select from it a tag that stores data in JSON. Then set the variables to the following values:

- Title: the value of the [name] attribute.

- Price: the value of the [offers][price] attribute.

- Reviews: the value of the [aggregateRating][reviewCount] attribute.

- Rating: the value of the [aggregateRating][ratingValue] attribute.

- Image: the value of the [image] attribute.
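The mapping in the list above can be sketched with Python's standard json module on a shortened copy of the markup. The helper name `product_from_json_ld` is hypothetical, and the use of `.get()` rests on the assumption that some products may lack a block such as aggregateRating, in which case the field becomes None instead of raising an error:

```python
import json


def product_from_json_ld(raw_json):
    """Map a schema.org Product document to the five fields we need."""
    data = json.loads(raw_json)
    offers = data.get("offers", {})
    aggregate = data.get("aggregateRating", {})
    return {
        "title": data.get("name"),
        "price": offers.get("price"),
        "reviews": aggregate.get("reviewCount"),
        "rating": aggregate.get("ratingValue"),
        "image": data.get("image"),
    }


# Shortened version of the markup shown above
sample = """
{
  "@type": "Product",
  "image": "https://i5.walmartimages.com/asr/ce0f57f7-ad6f-4e0b-a7ae-f751068597c2_1.b7e1f1bab1fd7f98cb9aef1ae9b783fb.png",
  "name": "Canon EOS Rebel T100",
  "offers": {"@type": "Offer", "priceCurrency": "USD", "price": 299},
  "aggregateRating": {"@type": "AggregateRating", "ratingValue": 4.5, "reviewCount": 172}
}
"""
product = product_from_json_ld(sample)
print(product)
```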

To be able to work with JSON, it will be enough to include the built-in library:

    import json

Let's create a data variable in which we put the JSON data:

    data = (json.loads(soup.find('script', attrs={'type': 'application/ld+json'}).text))

After that, we will enter the data into the appropriate variables:

    title = data['name']
    price = data['offers']['price']
    reviews = data['aggregateRating']['reviewCount']
    rating = data['aggregateRating']['ratingValue']
    image = data['image']

The rest will remain the same. Check the script execution:

Full script:

    from bs4 import BeautifulSoup
    import requests
    import json

    with open("result.csv", "w") as f:
        f.write("title; price; reviews; rating; image\n")

    with open("links.csv", "r") as links:
        for link in links:
            try:
                # strip() drops the newline at the end of each line of the file
                html_text = requests.get(link.strip()).text
                soup = BeautifulSoup(html_text, 'lxml')
                data = json.loads(soup.find('script', attrs={'type': 'application/ld+json'}).text)
                title = data['name']
                price = data['offers']['price']
                reviews = data['aggregateRating']['reviewCount']
                rating = data['aggregateRating']['ratingValue']
                image = data['image']
                with open("result.csv", "a") as f:
                    f.write(str(title)+"; "+str(price)+"; "+str(reviews)+"; "+str(rating)+"; "+str(image)+"\n")
            except Exception as e:
                # a missing script tag or field ends up here, and the loop continues
                print("There is no data")

Walmart Anti-Bot Protection

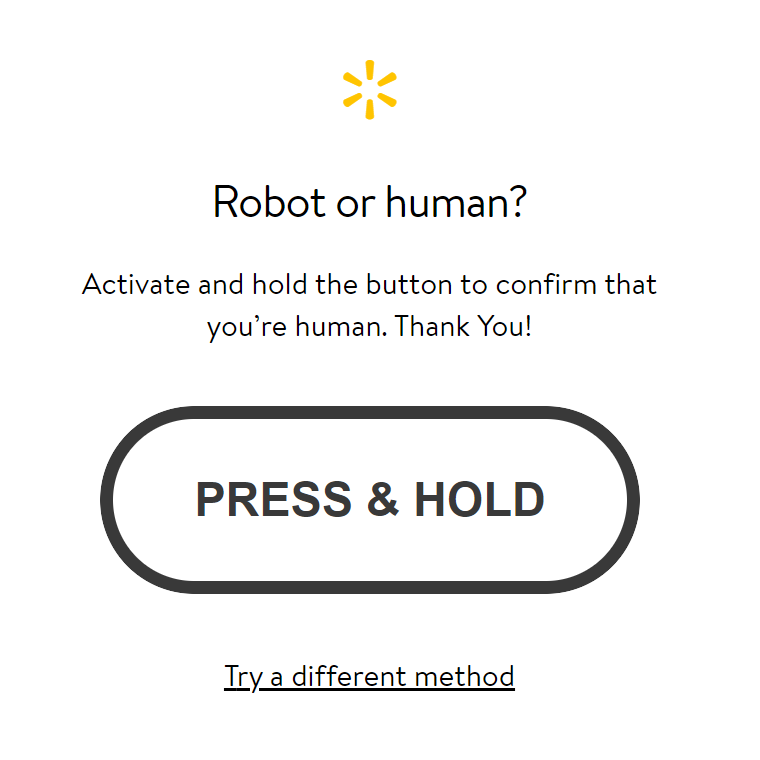

However, as mentioned at the beginning of the article, the service monitors activity that looks like a bot and blocks it, offering to solve a captcha. So at some point, instead of the page with data, a different page will be returned:

This is a prompt to solve a captcha. To avoid it, you need to take care of bypassing blocks, as described earlier.

You can also add headers to the code, which will slightly reduce the chance of blocking:

    headers = {"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/106.0.0.0 Safari/537.36"}

Then the request will look a little different:

    html_text = requests.get(link, headers=headers).text

The result of the scraper:

Unfortunately, this also doesn’t always help to avoid blocking. This is where the web scraping API comes in.
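Before moving on, it can help to at least detect a blocked response instead of failing on it. The sketch below is one possible approach, not a way around the protection: it assumes that a real product page always contains JSON-LD markup and treats any page without it as blocked, then retries with a growing pause. The names `looks_blocked` and `fetch_with_retries` are hypothetical, and the `fetch` and `sleep` arguments are injected so the example runs without network access or real waiting:

```python
import time


def looks_blocked(html_text):
    """Heuristic (an assumption): a page without JSON-LD markup is a block page."""
    return "application/ld+json" not in html_text


def fetch_with_retries(fetch, link, attempts=3, delay=2.0, sleep=time.sleep):
    """Call fetch(link) until the page does not look blocked or attempts run out."""
    for attempt in range(1, attempts + 1):
        html_text = fetch(link)
        if not looks_blocked(html_text):
            return html_text
        if attempt < attempts:
            sleep(delay * attempt)  # wait a little longer after each failure
    return None


# Simulated responses: two captcha pages, then a real product page
pages = iter([
    "<html>captcha</html>",
    "<html>captcha</html>",
    '<script type="application/ld+json">{}</script>',
])
waits = []  # records the pauses instead of actually sleeping
html_text = fetch_with_retries(
    lambda link: next(pages),
    "https://www.walmart.com/ip/771229626",
    sleep=waits.append,
)
print(html_text, waits)
```

In a real scraper, `fetch` would be a small function wrapping `requests.get(link, headers=headers).text`.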

Scrape Walmart Product Data Using the Web Scraping API

Let's look at a use case for the web scraping API, which will take care of blocking avoidance. Most of the script described earlier stays the same; we only change how the page is requested.

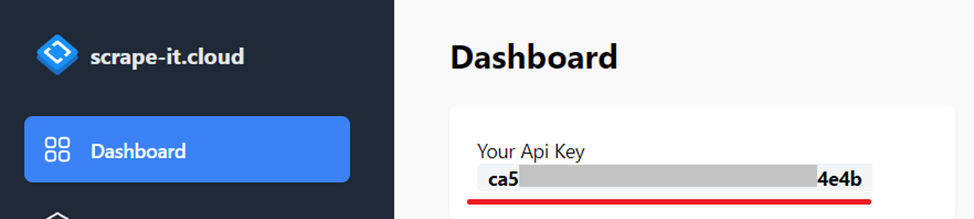

Create a scrape-it.cloud account. After that, you will receive 1,000 credits. You need an API key, which can be found in your account in the Dashboard section:

We will also need the JSON library, so let's add it to the project:

    import json

Now let's assign the API endpoint to the url variable, and put the API key and content type in the headers:

    url = "https://api.scrape-it.cloud/scrape"
    headers = {
        'x-api-key': 'YOUR-API-KEY',
        'Content-Type': 'application/json'
    }

To build the request body dynamically for each link:

    # a dictionary passed to json.dumps yields valid JSON (false/true instead of False/True)
    payload = json.dumps({
        "url": link.strip(),
        "block_resources": False,
        "wait": 0,
        "screenshot": True,
        "proxy_country": "US",
        "proxy_type": "datacenter"
    })
    response = requests.request("POST", url, headers=headers, data=payload)

Get the data in "content", which stores the code of the Walmart page:

    html_text = json.loads(response.text)["scrapingResult"]["content"]

Let's look at the complete code:

from bs4 import BeautifulSoup

import requests

import json

url = "https://api.scrape-it.cloud/scrape"

headers = {

'x-api-key': 'YOUR-API-KEY',

'Content-Type': 'application/json'

}

with open("result.csv", "w") as f:

f.write("title; price; rating; reviews; image\n")

with open("links.csv", "r+") as links:

for link in links:

html_text = requests.get(link).text

soup = BeautifulSoup(html_text, 'lxml')

data = (json.loads(soup.find('script', attrs={'type': 'application/ld+json'}).text))

title = data['name']

price = data['offers']['price']

reviews = data['aggregateRating']['reviewCount']

rating = data['aggregateRating']['ratingValue']

image = data['image']

try:

with open("result.csv", "a") as f:

f.write(str(title)+"; "+str(price)+"; "+str(reviews)+"; "+str(rating)+"; "+str(image)+"\n")

except Exception as e:

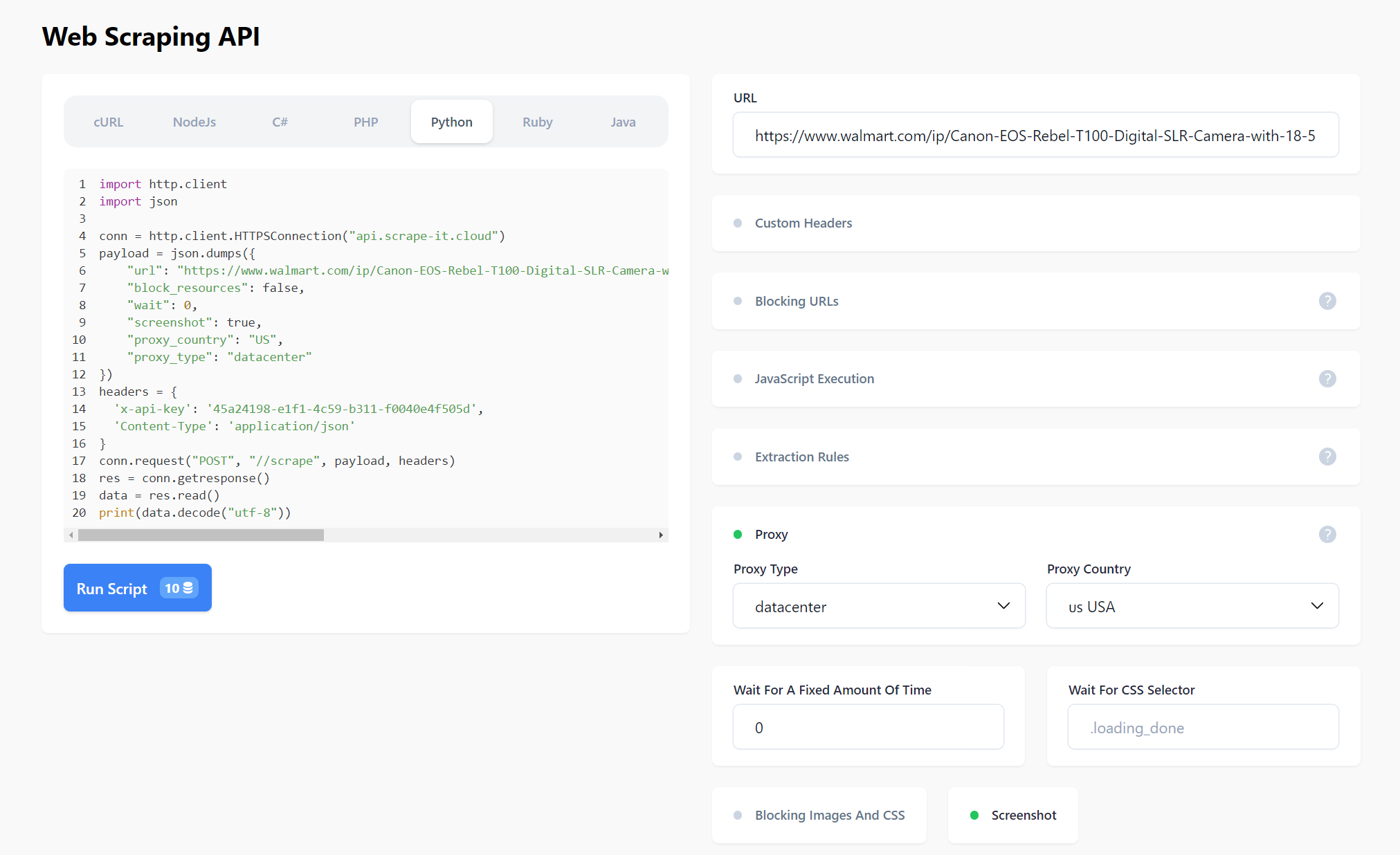

print("There is no data")In addition to the specified attributes, others can be set in the request body. You can read more in the documentation or try it in your account on the web scraping API tab.

You can make the request visually and then get the code in one of the supported programming languages.
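When composing the request body in Python, it is safer to serialize a dictionary than to assemble the JSON text by hand, because `json.dumps` converts Python's `False`/`True` into JSON's `false`/`true` and escapes the URL correctly. The sketch below shows this together with a defensive way to read the response. The helper names `build_payload` and `extract_content` are hypothetical, the parameters are the ones used in this article, and the sample response only imitates the `scrapingResult` → `content` structure referenced above:

```python
import json


def build_payload(link):
    """Serialize the request body for one product link."""
    return json.dumps({
        "url": link.strip(),  # remove the newline left over from the links file
        "block_resources": False,
        "wait": 0,
        "screenshot": True,
        "proxy_country": "US",
        "proxy_type": "datacenter",
    })


def extract_content(response_text):
    """Return the page HTML stored under scrapingResult.content, or None if absent."""
    body = json.loads(response_text)
    return body.get("scrapingResult", {}).get("content")


payload = build_payload("https://www.walmart.com/ip/771229626\n")
sample_response = '{"scrapingResult": {"content": "<html>...</html>"}}'
content = extract_content(sample_response)
print(payload)
print(content)
```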

Conclusion and Takeaways

Finding and scraping data to be used for e-commerce is a time-consuming task, especially when done manually. It's also hard to know whether it's accurate, objective data that will help you make the right business decisions.

Without high-quality data, it's hard to develop and implement the marketing strategies that your business needs to grow, succeed, and meet clients' needs. It can also affect your reputation, as you may be perceived as unreliable if your customers or partners find out your data is unreliable.


Scraping Walmart product data with Python is a great way to quickly gather large amounts of valuable information about products available on their website. By using libraries like Requests and Beautiful Soup, together with the JSON-LD data embedded in each product page, developers can extract specific pieces of information and turn them into structured datasets ready for use in their applications or analysis projects. And using the web scraping API to automate the data collection process will allow you not to worry about blocking, captcha, proxying, using headers, and much more.