Web scraping and web crawling are two distinct yet related processes for gathering data from across the internet. Both methods involve navigating websites and identifying patterns in HTML documents to extract information, but they have fundamental differences.

This article explores the differences between these approaches and discusses when each one is best suited to a particular situation. We also look at examples of how web scraping and crawling are implemented in real-life applications. Finally, we offer tips on choosing the approach that suits your project or business needs.

What is web crawling?

Web crawling, also known as web spidering, is the process of automatically retrieving content from websites. It uses software bots to systematically visit web pages, index their contents, and follow links to other pages and sites. Unlike web scraping, which focuses on extracting specific data from a website, web crawling is an automated way of exploring the web broadly by visiting every page the crawler can reach.

Web crawlers, also known as spiders, robots, or bots, are computer programs that traverse a series of linked documents on the internet to locate relevant information or data for particular users or applications. A typical example is Googlebot, Google's search engine crawler, which visits pages across the web and indexes them by the keywords they contain, so that searches return more comprehensive results for users who include those terms in their queries.

How web crawling works

At its core, a web crawler sends HTTP or HTTPS requests for specific URLs, analyzes the returned HTML for links to other URLs, and then requests those in turn. This process repeats until no new unique URLs are found or until the crawler reaches a user-specified limit, such as a maximum number of hops away from the initial URL (which also guards against infinite loops).
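The loop described above can be sketched in Python with only the standard library. This is a minimal, hypothetical sketch rather than a production crawler: `crawl`, `fetch_html`, and `LinkExtractor` are illustrative names, `max_depth` plays the role of the maximum number of hops, and politeness rules such as robots.txt and request delays are left out. The fetcher is passed in as a parameter so the logic can be tried without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkExtractor(HTMLParser):
    """Collects the absolute URL of every <a href> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links and drop the #fragment so duplicates match.
        absolute, _ = urldefrag(urljoin(self.base_url, href))
        if urlparse(absolute).scheme in ("http", "https"):  # skip mailto:, javascript:
            self.links.append(absolute)


def fetch_html(url):
    """Default fetcher: a plain HTTP(S) GET that returns the body as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(seed_urls, fetch=fetch_html, max_depth=2):
    """Breadth-first crawl that stops following links max_depth hops from the seeds."""
    visited = set()
    queue = deque((url, 0) for url in seed_urls)
    while queue:
        url, depth = queue.popleft()
        if url in visited:
            continue  # already crawled via another path
        visited.add(url)
        try:
            html = fetch(url)
        except OSError:  # network errors (urllib's URLError is an OSError)
            continue
        if depth < max_depth:
            parser = LinkExtractor(url)
            parser.feed(html)
            queue.extend((link, depth + 1) for link in parser.links if link not in visited)
    return visited
```

For example, `crawl(["https://example.com/"], max_depth=1)` would fetch the seed page plus the pages it links to directly, and stop there. Breadth-first order means pages closest to the seeds are visited first, which is why a hop limit maps naturally onto it.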

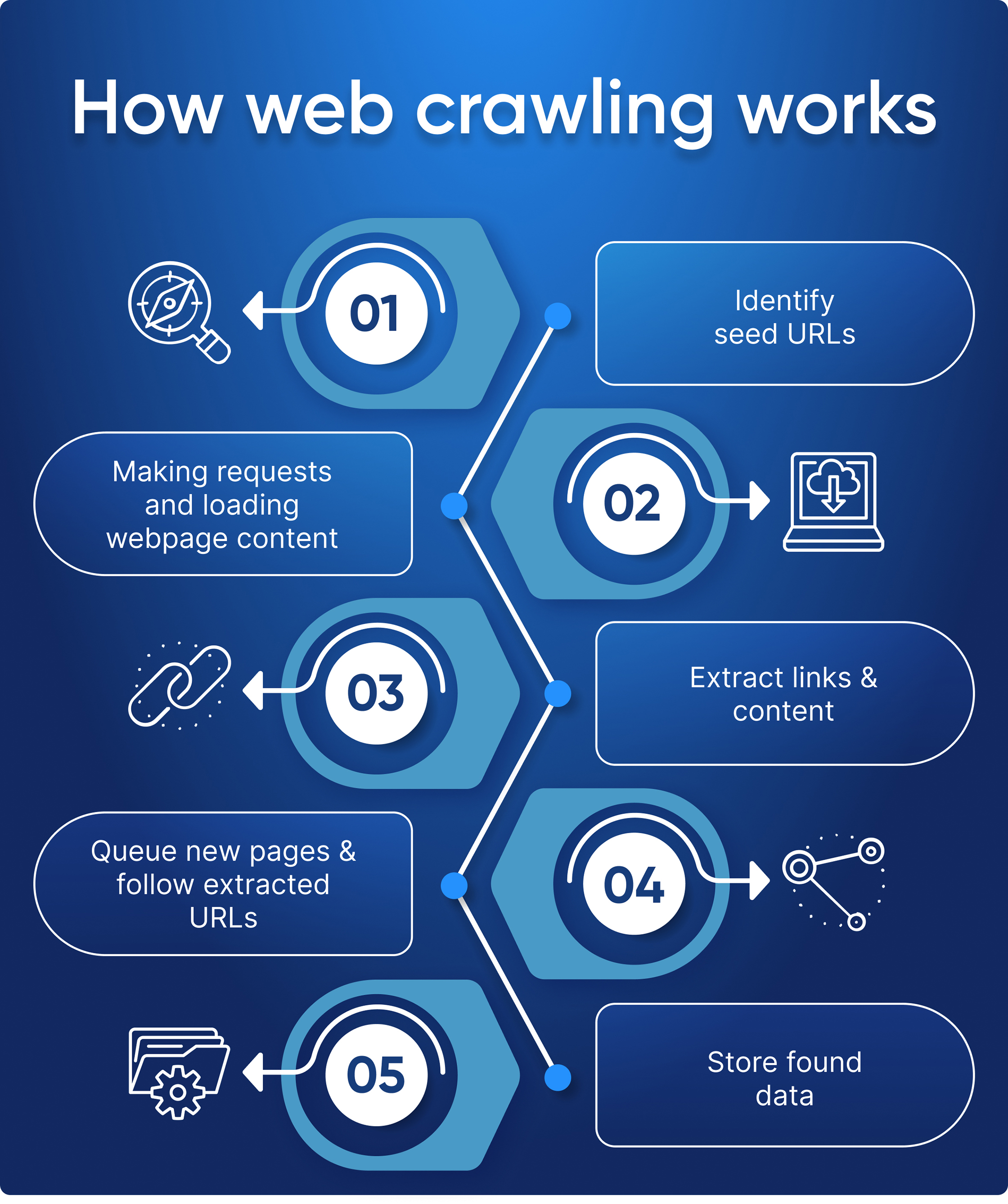

Here is a step-by-step overview of how a web crawler works:

- Identify seed URLs: This is where a web crawler will start its search. Seed URLs are the initial set of websites the user chooses to begin crawling.

- Request pages: Once the seed list has been identified, the crawler sends an HTTP request for each URL to retrieve the page contents for further analysis.

- Extract links and content: After receiving an HTML document, the crawler extracts all relevant links from the page, along with any other pertinent content such as text, images, or metadata.

- Queue new pages and follow extracted URLs: Newly discovered links are added to a queue (often a priority queue) if they have not been crawled yet, or discarded if they have already been visited, so the crawler explores efficiently without revisiting the same pages. Depending on how sophisticated the crawl algorithm is, it can also prioritize links that match specific patterns or keywords, judging relevance during parsing (for example, with natural language processing techniques).

- Store found data: Finally, the crawler stores the data it has collected, for example in a database or a file.
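The queueing and storing steps can be illustrated with a small frontier class. This is a sketch under assumptions: `Frontier`, `store`, and the keyword-count `score` are hypothetical stand-ins (real crawlers use far richer relevance signals than counting keywords), and JSON Lines is just one possible storage format.

```python
import heapq
import json


class Frontier:
    """Priority queue of URLs to crawl that never hands out the same URL twice."""

    def __init__(self, keywords):
        self.keywords = [k.lower() for k in keywords]
        self.seen = set()   # every URL ever queued, crawled or not
        self._heap = []
        self._counter = 0   # tie-breaker: equal scores come out in insertion order

    def score(self, url, anchor_text=""):
        """Hypothetical relevance score: keyword occurrences in the URL and link text."""
        text = f"{url} {anchor_text}".lower()
        return sum(text.count(k) for k in self.keywords)

    def add(self, url, anchor_text=""):
        """Queue a URL unless it was seen before; return True if it was queued."""
        if url in self.seen:
            return False
        self.seen.add(url)
        # heapq is a min-heap, so the score is negated to pop the best URL first.
        heapq.heappush(self._heap, (-self.score(url, anchor_text), self._counter, url))
        self._counter += 1
        return True

    def pop(self):
        """Return the most relevant queued URL, or None when the frontier is empty."""
        return heapq.heappop(self._heap)[2] if self._heap else None


def store(records, path):
    """Final step: write collected records to a JSON Lines file (one object per line)."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
```

Keeping a `seen` set separate from the heap means a URL is discarded the moment it is rediscovered, whether or not it has been crawled yet.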

A web crawler is like an enthusiastic tourist visiting a new city: it starts with a few seed URLs (like the city's top attractions), follows any interesting links it finds during its exploration, and gathers as much information as possible along the way. Eventually, like any tourist heading home, it stores all its findings in some sort of database or file for later use.

What is web scraping?

Web scraping, on the other hand, assumes that the URLs of the target pages are already known, so there is no need to crawl through sites to collect links. It involves writing or using web scraping tools that collect information such as product prices, images, or contact details for further analysis or manipulation.

How web scraping works

The web scraping process involves sending out requests to a target website, extracting the data from the returned HTML code, and then reformatting it into usable information.

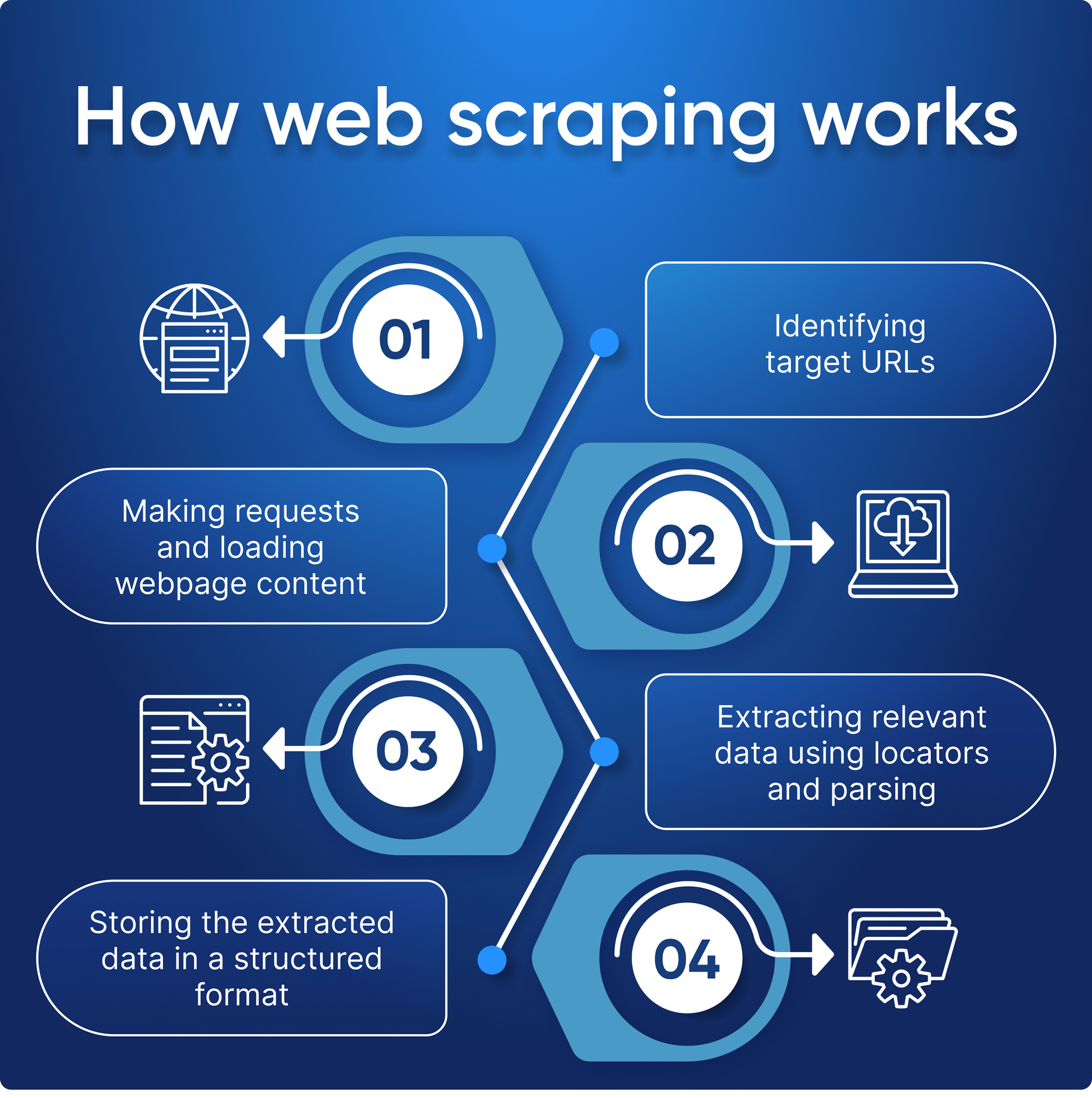

Here is a step-by-step overview of how web scraping works:

- Define the target site and its structure.

- Take steps to avoid being blocked:

  - Connect a proxy.

  - Connect CAPTCHA-solving services.

  - Set up headers and the User-Agent.

  - Consider using a headless browser.

- The scraper makes a request and receives the page's HTML code.

- It extracts data from the page using locators (such as CSS selectors or XPath) and parsing.

- It saves the data in a convenient format, such as JSON, Excel, or CSV.
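The extract-and-save part of this sequence can be sketched with the standard library's `html.parser` and `csv` modules. The page layout is an assumption: a hypothetical product listing in which each item is a `div` with class `product` containing elements with classes `name` and `price`, which act as the locators here. The request step is left out, so the HTML is passed in as a string; `ProductParser` and `to_csv` are illustrative names.

```python
import csv
import io
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Turns hypothetical product cards into a list of {name, price} records."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # which field the next text node belongs to

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.products.append({})  # each product card starts a new record
        elif self.products:
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_endtag(self, tag):
        self._field = None  # text after a closing tag belongs to no field

    def handle_data(self, data):
        if self._field and data.strip():
            self.products[-1][self._field] = data.strip()


def to_csv(products):
    """Reformat the extracted records as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(products)
    return buffer.getvalue()


if __name__ == "__main__":
    sample = ('<div class="product"><span class="name">Lamp</span>'
              '<span class="price">$19.99</span></div>')
    parser = ProductParser()
    parser.feed(sample)
    print(to_csv(parser.products))
```

On real pages, a dedicated parsing library with CSS selector or XPath support is usually more convenient than hand-written `HTMLParser` callbacks, but the flow (parse, pick out fields by locator, write rows) stays the same.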

Multiple URLs can also be scraped, but they usually come from the same site, so the pages share a similar structure.

Unlike our "tourist crawler", a web scraper is like a savvy shopper. They know exactly what they're looking for and don't waste time browsing the entire store. They quickly jump from shop to shop, grabbing all the deals and discounts available in each location before moving on to the next one.

Main differences between web scraping and web crawling

As mentioned earlier, data crawling is for collecting links, and data scraping is for collecting data. Usually, they are used together: first, the crawler collects links, then the scraper processes them and collects the necessary data.

Let's compare web crawling and web scraping:

| Category | Web Scraping | Web Crawling |
|---|---|---|
| Definition | Extracting data from websites using software or web scraping tools that simulate human browsing behavior | Automated process of browsing the internet, indexing and cataloging information, and organizing it in a database |
| Purpose | Extract specific data from web pages for analysis and use | Explore and index web pages for search engines and data analysis |
| Data extraction method | Pulls data from a website's HTML structure | Crawls web pages and follows hyperlinks to find new pages to index |
| Scope | Limited to specific websites or pages | Can cover a broader range of websites or pages |
| Targeted sites | Generally targeted at specific websites or pages | Can cover a wide range of websites or pages |
| Data analysis | Data can be extracted in an easily analyzed format and used for purposes such as price comparison or market research | The crawler itself does not analyze data |
| Scalability | Done on both small and large scales | Mostly employed on a large scale |
| Process requirements | Requires a component that requests and retrieves pages plus a parser that extracts the relevant data from them | Mainly requires a crawler that requests pages and follows the links it finds |

Thus, the key difference between web crawling and web scraping is that web crawling automatically visits every page it can reach, while web scraping focuses on extracting specific data from a website. Web crawlers explore the web by following links between pages, whereas web scrapers only target the information related to their queries.

Use cases of web crawling and web scraping

Web scraping and web crawling are two distinct processes that can be used in tandem to significant effect. However, they can also be used independently, depending on the task.

Web crawling use cases

As mentioned earlier, web crawling is great for projects that need to collect links, may not have predefined target resources, and need to retrieve entire page code without further parsing and processing. Here are some of the most common use cases:

Search engine indexing

Search engines like Google, Bing, and Yahoo use fleets of crawlers to find new pages and updated content. The search engines then store what they find in an index, a massive database of all the content they consider good enough to show to users.

Improving the performance of your own site

Web crawling is a valuable tool for analyzing your website and improving its performance. By running a web crawler, you can detect broken links or images, duplicate content or meta tags, and other issues that could negatively affect your site's SEO performance. Web crawling also enables you to identify opportunities for optimization of the site's structure as a whole.

Analyzing competitors' websites for SEO purposes

You can also monitor changes not only on your own website but also on your competitors' sites. This keeps you aware of your competitors' changes so you can react to them quickly and effectively.

Data mining

Web crawlers can be used to collect and analyze large amounts of data from various sources on the internet. This makes it easier for researchers, businesses, or any other interested parties to gain valuable insights into a particular topic, allowing them to make informed decisions based on those findings.

Finding broken links on external sites

In addition to checking your own pages, it is important to keep all links to external sites up to date. While problems on your own pages can usually be found in the admin panel, broken links to external sites are much harder to find. To keep them up to date, you must either check them manually or use crawlers.
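A basic link checker can send lightweight `HEAD` requests and flag error responses. This is a sketch with assumptions: `head_status` and `find_broken_links` are hypothetical helpers, the list of URLs is assumed to come from a crawl of your pages, and some servers reject `HEAD` (for example with a 405 status), so a real checker might retry such links with `GET`. The status lookup is a parameter so the logic can be tested offline.

```python
import urllib.error
import urllib.request


def head_status(url, timeout=10):
    """Return the HTTP status of a HEAD request, or None if the host is unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # urllib raises 4xx/5xx responses as HTTPError
    except OSError:
        return None  # DNS failure, refused connection, timeout, other network errors


def find_broken_links(urls, status_of=head_status):
    """Return (url, status) pairs for links that fail or answer with an error code."""
    broken = []
    for url in urls:
        status = status_of(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```

`HTTPError` must be caught before `OSError` because it is a subclass of `URLError`, which is itself an `OSError`.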

Content curation

Crawlers are also good at quickly finding content on related topics, allowing businesses or individuals to curate it into collections based on specific criteria such as keywords or tags.


Web scraping use cases

On the other hand, web scraping is great when you already know what specific information you want to extract from a website. Some of its most common applications are:

- Price Monitoring

- Content Aggregation

- Lead Generation

- Competitor Analysis

- Social Media Analysis

- Online Reputation Management

For more information on web scraping and its use cases, check out our article, which details some of the most common applications.

| Use Case | Web Crawling | Web Scraping |
|---|---|---|
| Price Monitoring | ✔️ Can collect prices from multiple websites and track changes over time | ✔️ Can extract prices from specific web pages to compare and analyze |
| Job Aggregation | ✔️ Can collect job listings from various sites and present them in a centralized manner | ✔️ Can extract job listings from specific websites and present them in a centralized manner |
| Content Aggregation | ✔️ Can collect content from multiple websites to create a centralized repository | ✔️ Can extract articles or posts from known pages into a centralized repository |
| SEO Optimization | ✔️ Can analyze website structure and backlinks for search engine optimization | ❌ Not suitable on its own, as structure and backlink analysis requires following links across many pages |
| News Monitoring | ✔️ Can monitor news outlets and RSS feeds for updates | ❌ Not suitable for discovering new articles, as it only processes pages whose URLs are already known |
| Product Review Aggregation | ✔️ Can collect reviews from multiple websites and analyze sentiment | ✔️ Can extract reviews from specific websites and analyze sentiment |
| Data Mining | ✔️ Can collect large amounts of data for analysis and modeling | ✔️ Can extract specific data points for analysis and modeling |
| Finding Broken Links on External Sites | ✔️ Can crawl external sites to identify broken links | ❌ Not suitable, as finding broken links requires following links rather than extracting data from known pages |
| Lead Generation | ✔️ Can collect contact information from multiple websites | ✔️ Can extract contact information from specific websites |
| Competitor Analysis | ✔️ Can gather data on competitor websites for analysis | ✔️ Can extract specific data points on competitor websites for analysis |
| Online Reputation Management | ✔️ Can monitor online mentions and reviews for reputation management | ✔️ Can extract mentions and reviews from known review sites and pages |

Challenges of web crawling and web scraping

Web crawling and web scraping come with challenges that need to be considered. Depending on the size of the project, these can range from simple technical issues, such as slow loading times or requests blocked by anti-scraping measures, all the way up to complex legal questions about data privacy laws.

Blocking crawls in robots.txt

Before crawling a resource, make sure the site allows it. If the site's robots.txt file disallows crawling some or all of its pages, be polite and comply with those rules.
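Python's standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`, which can answer "may this bot fetch this URL?" before each request. In the sketch below, the robots.txt content and the bot name `MyCrawler` are hypothetical, and the file is inlined so the example runs offline; `load_robot_rules` is an illustrative helper.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt, inlined so the example runs offline.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_robot_rules(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


# For a live site, the parser can download the file itself:
#   rules = RobotFileParser("https://example.com/robots.txt"); rules.read()
rules = load_robot_rules(EXAMPLE_ROBOTS_TXT)
USER_AGENT = "MyCrawler"  # hypothetical bot name

for url in ("https://example.com/products/1", "https://example.com/private/report"):
    verdict = "allowed" if rules.can_fetch(USER_AGENT, url) else "disallowed"
    print(f"{url}: {verdict}")
print("Crawl-delay:", rules.crawl_delay(USER_AGENT))
```

`crawl_delay` returns the `Crawl-delay` value for the given agent (or `None` if the file does not set one), which is a natural input for the delay between requests.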

IP blocking

While crawling, remember that a person cannot click on links every millisecond. Websites consider such behavior suspicious and may block the IP address it comes from. Therefore, it makes sense to add at least a short delay between requests and to use proxies that hide your real IP. You can't use the same proxy indefinitely either, so for crawling, just as for scraping, you need several proxies (a proxy pool) and should keep rotating between them.
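A proxy pool with round-robin rotation and randomized delays might look like the following sketch. The proxy addresses are placeholders (`.example` hosts do not exist), and `ProxyRotator`, `polite_pause`, and `fetch_via_pool` are hypothetical names; a real pool would also need to retire proxies that stop working. It relies only on `urllib.request.ProxyHandler` and `build_opener` from the standard library.

```python
import itertools
import random
import time
import urllib.request

# Hypothetical proxy endpoints; replace them with the addresses of your own pool.
PROXIES = [
    "http://proxy1.example:8080",
    "http://proxy2.example:8080",
    "http://proxy3.example:8080",
]


class ProxyRotator:
    """Hands out proxies round-robin and builds a urllib opener for each request."""

    def __init__(self, proxies):
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self):
        return next(self._cycle)

    def opener(self):
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


def polite_pause(min_seconds=1.0, max_seconds=3.0):
    """Sleep for a random interval so requests are not fired back to back."""
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay


def fetch_via_pool(rotator, url):
    """Wait a little, then fetch url through the next proxy in the pool."""
    polite_pause()
    with rotator.opener().open(url, timeout=10) as response:
        return response.read()
```

A randomized delay looks less mechanical than a fixed one, and rotating proxies spreads the requests across several IP addresses.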

To ensure successful crawling, also check your bot's settings. Specify the headers and User-Agent manually so that requests look like they come from a real user's browser. A good idea is to use real user agents and headers, such as the ones your own browser sends.
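With Python's standard `urllib.request`, custom headers are set per request; without them, urllib identifies itself with a default `Python-urllib/3.x` User-Agent. The header values below are placeholders modeled on a desktop browser, and `build_request` is a hypothetical helper; copy current values from your own browser's developer tools instead.

```python
import urllib.request

# Placeholder browser-like headers; replace them with the values your browser sends.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url, headers=BROWSER_HEADERS):
    """Create a GET request that carries the given headers (copied, not shared)."""
    return urllib.request.Request(url, headers=dict(headers))


# Sending it: urllib.request.urlopen(build_request("https://example.com/"), timeout=10)
```

Note that `Request` stores header names with only the first letter capitalized, so they are read back as `req.get_header("User-agent")`.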

CAPTCHAs

Try to avoid CAPTCHAs by following all the guidelines above. If CAPTCHAs cannot be avoided, you can use CAPTCHA-solving services.

Spider trap

Some resources set crawler traps called honeypots: additional links hidden in the code that regular users cannot see in the browser. If a crawler follows such a link, the resource detects that it is a bot and blocks it.
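A crawler can skip the most obvious honeypot links by ignoring anchors that are hidden with inline markup. The sketch below is a heuristic, not a complete defense: `VisibleLinkExtractor` is a hypothetical name, and links hidden through external CSS, a hidden parent element, or JavaScript will not be detected this way.

```python
from html.parser import HTMLParser

# Inline style fragments that make an element invisible (compared without spaces).
HIDDEN_STYLES = ("display:none", "visibility:hidden")


class VisibleLinkExtractor(HTMLParser):
    """Collects hrefs, setting aside links hidden via the `hidden` attribute or inline style."""

    def __init__(self):
        super().__init__()
        self.visible = []
        self.skipped = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        style = (attrs.get("style") or "").replace(" ", "").lower()
        hidden = "hidden" in attrs or any(s in style for s in HIDDEN_STYLES)
        (self.skipped if hidden else self.visible).append(href)
```

Only the `visible` list would be passed on to the crawl queue; the `skipped` list is kept so suspicious links can be logged or reviewed.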

Overcrawling

Sometimes a bot can get stuck in an infinite loop if it is not programmed properly, or it can crawl too much and overwhelm the target website with excessive requests, taking resources away from other users who are trying to access it at the same time.
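Two common safeguards against loops are normalizing URLs before the "already visited" check, so that trivially different spellings of one page are not crawled twice, and capping the total number of pages. The sketch below shows both; `normalize_url` and `CrawlBudget` are hypothetical names, and the normalization rules chosen here (lowercase scheme and host, sorted query parameters, no fragment) are an assumption that may need adjusting for particular sites.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def normalize_url(url):
    """Reduce equivalent spellings of a URL to one key for the 'seen' check."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    netloc = parts.netloc.lower()
    path = parts.path or "/"
    # Sort query parameters so ?a=1&b=2 and ?b=2&a=1 count as the same page.
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    # Drop the #fragment: browsers never send it to the server.
    return urlunsplit((scheme, netloc, path, query, ""))


class CrawlBudget:
    """Admits each normalized URL once and stops after max_pages pages in total."""

    def __init__(self, max_pages):
        self.max_pages = max_pages
        self.seen = set()

    def admit(self, url):
        key = normalize_url(url)
        if key in self.seen or len(self.seen) >= self.max_pages:
            return False
        self.seen.add(key)
        return True
```

The page cap matters even with perfect deduplication, because some sites generate an endless supply of genuinely distinct URLs (calendars and filter combinations are typical examples).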

Web crawling and web scraping best practices

When crawling and scraping, there are some rules to follow that will make crawling easier for both you and the websites.

Be polite

If you have the option, crawl only the pages the resource allows in robots.txt, and limit the frequency of your requests. Otherwise, a heavy load can make the resource respond extremely slowly to all users, or even fail.
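Limiting request frequency can be as simple as enforcing a minimum gap between requests to the same host. The sketch below is hypothetical: `HostRateLimiter` and `min_interval` are illustrative names, and a sensible interval (for example, a `Crawl-delay` from robots.txt when the site provides one) depends on the site. The clock and sleep functions are injectable so the logic can be tested without actually waiting.

```python
import time
from urllib.parse import urlsplit


class HostRateLimiter:
    """Enforces a minimum gap, in seconds, between two requests to the same host."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self._last_request = {}  # host -> time the previous request was allowed

    def wait(self, url):
        """Block until a request to url's host is allowed; return the seconds waited."""
        host = urlsplit(url).netloc
        now = self.clock()
        last = self._last_request.get(host)
        waited = 0.0
        if last is not None:
            waited = max(0.0, last + self.min_interval - now)
            if waited:
                self.sleep(waited)
        self._last_request[host] = now + waited
        return waited
```

Calling `limiter.wait(url)` right before each request keeps the per-host pace steady while still letting the crawler move on to other hosts without delay.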

Crawl at low-use hours

You should also crawl when there is less load on the resource, for example, at night. This lets you get the data you need while putting the least strain on the resource.

Use caching strategies

Implement caching strategies to reduce the number of requests made for each page, and store previously crawled data so you can refer to it when deciding which content needs to be scraped next.
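One common caching strategy combines a time-to-live with HTTP conditional requests: a recent copy is reused outright, and an older copy is revalidated by sending `If-None-Match` (with the page's `ETag`) or `If-Modified-Since`, so an unchanged page costs only a `304 Not Modified` reply. The `PageCache` class below is a hypothetical, in-memory sketch of that idea; the HTTP calls themselves are left to whichever client you use.

```python
import time


class PageCache:
    """In-memory page cache: reuse fresh copies, revalidate stale ones."""

    def __init__(self, ttl, clock=time.time):
        self.ttl = ttl  # seconds a stored copy is reused without asking the server
        self.clock = clock
        self._entries = {}  # url -> {"body", "etag", "last_modified", "fetched_at"}

    def fresh_body(self, url):
        """Return the cached body if it is younger than ttl, else None."""
        entry = self._entries.get(url)
        if entry and self.clock() - entry["fetched_at"] < self.ttl:
            return entry["body"]
        return None

    def conditional_headers(self, url):
        """Headers that let the server answer 304 if the page is unchanged."""
        entry = self._entries.get(url, {})
        headers = {}
        if entry.get("etag"):
            headers["If-None-Match"] = entry["etag"]
        if entry.get("last_modified"):
            headers["If-Modified-Since"] = entry["last_modified"]
        return headers

    def store(self, url, body, etag=None, last_modified=None):
        """Save a freshly downloaded page along with its validators."""
        self._entries[url] = {
            "body": body,
            "etag": etag,
            "last_modified": last_modified,
            "fetched_at": self.clock(),
        }

    def mark_not_modified(self, url):
        """Call on a 304 response: reuse the cached body and restart its TTL."""
        entry = self._entries[url]
        entry["fetched_at"] = self.clock()
        return entry["body"]
```

A typical flow is: check `fresh_body`; if it returns `None`, send the request with `conditional_headers`, then call `mark_not_modified` on a 304 or `store` on a 200.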

Conclusion and takeaways

Web scraping and web crawling are important tools used to collect data from the web. Web scraping is used to extract data from websites and can be done manually or through automated scripts. Web crawling uses bots to explore websites, gather information, and index content for search engines.

Here are some tips for choosing the approach that suits your project or business needs:

- Define the Purpose: The first step is understanding why you want to collect data through web scraping or crawling. This will help you choose the right method that fits your business needs.

- Identify the Data: You should identify the type of data you need and its location. This will help you determine if web scraping or crawling is the best data retrieval method.

- Analyze Data Complexity: It's important to evaluate the complexity of the data you want to collect. If it's a complex dataset, web crawling might be more suitable since it can efficiently gather more data in less time.

- Consider Data Update Frequency: If the data you require is frequently updated, web crawling may be the better option since it can be automated to keep the data up-to-date.

Both methods are essential for extracting data from the internet; which technique suits you best depends on your requirements.