Scrapy is one of the most popular web scraping frameworks in Python. Earlier, there was a review of similar tools. Unlike BeautifulSoup or Selenium, Scrapy is a full framework rather than a library. A big advantage is that the tool is completely free and open source.

Despite being free, it is feature-rich and can handle most of the tasks that come up when scraping data. For example, it:

- Sends many requests concurrently (it is asynchronous, built on the Twisted networking library, rather than multithreaded).

- Can follow links from page to page.

- Extracts data with XPath and CSS selectors.

- Allows data validation.

- Saves results to most common formats and, through item pipelines, to databases.

Scrapy uses Spiders, which are standalone crawlers that each follow a specific set of instructions. This makes it easy to scale to projects of any size while keeping the code well structured, so even new developers can understand what is going on. Scraped data can be saved in CSV format for further processing by data science professionals.
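As a rough illustration of the data validation mentioned above: in Scrapy, checks like this usually live in an item pipeline, a plain class whose `process_item(self, item, spider)` method receives every item a spider yields. The sketch below is only an example under assumptions: the class name `TitleValidationPipeline` and the `titles` field are hypothetical, and it raises a plain `ValueError` so that it runs without Scrapy installed. Inside a real project one would normally raise `scrapy.exceptions.DropItem` instead.

```python
class TitleValidationPipeline:
    """Hypothetical item pipeline that cleans and validates a 'titles' field."""

    def process_item(self, item, spider):
        # Strip whitespace and discard empty strings
        titles = [t.strip() for t in item.get("titles", []) if t and t.strip()]
        if not titles:
            # In a real Scrapy project, raise scrapy.exceptions.DropItem here
            raise ValueError("item has no usable titles")
        item["titles"] = titles
        return item


if __name__ == "__main__":
    pipeline = TitleValidationPipeline()
    # The spider argument is unused in this sketch, so None is passed
    print(pipeline.process_item({"titles": ["  Example Domain ", ""]}, spider=None))
```

To take effect in a project, a pipeline like this would have to be listed in the `ITEM_PIPELINES` setting in the project's settings.py.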


How To Install Scrapy In Python

Before looking at the practical use of Scrapy, it has to be installed. This is done on the command line:

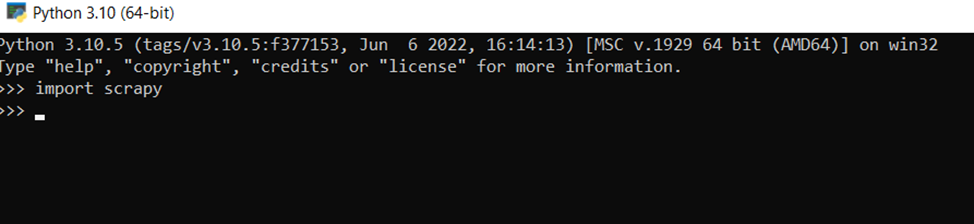

    pip install scrapy

To make sure that everything went well, import Scrapy in the Python interpreter:

    >>> import scrapy

If there are no error messages, the installation succeeded.
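A slightly more informative check is to ask Python which version is installed. The sketch below uses the standard library's `importlib.metadata` (available since Python 3.8); the helper name `installed_version` is made up for this example.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("scrapy")
    print(f"Scrapy {found}" if found else "Scrapy is not installed")
```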


How to use XPath with Scrapy

Scrapy works equally well with XPath and CSS selectors. However, XPath has a number of advantages, so it is used more often. To keep the example simple, all XPath code will be executed on the command line. To do this, open the interactive Scrapy shell:

    scrapy shell

Then select the site to crawl and fetch it with the following command:

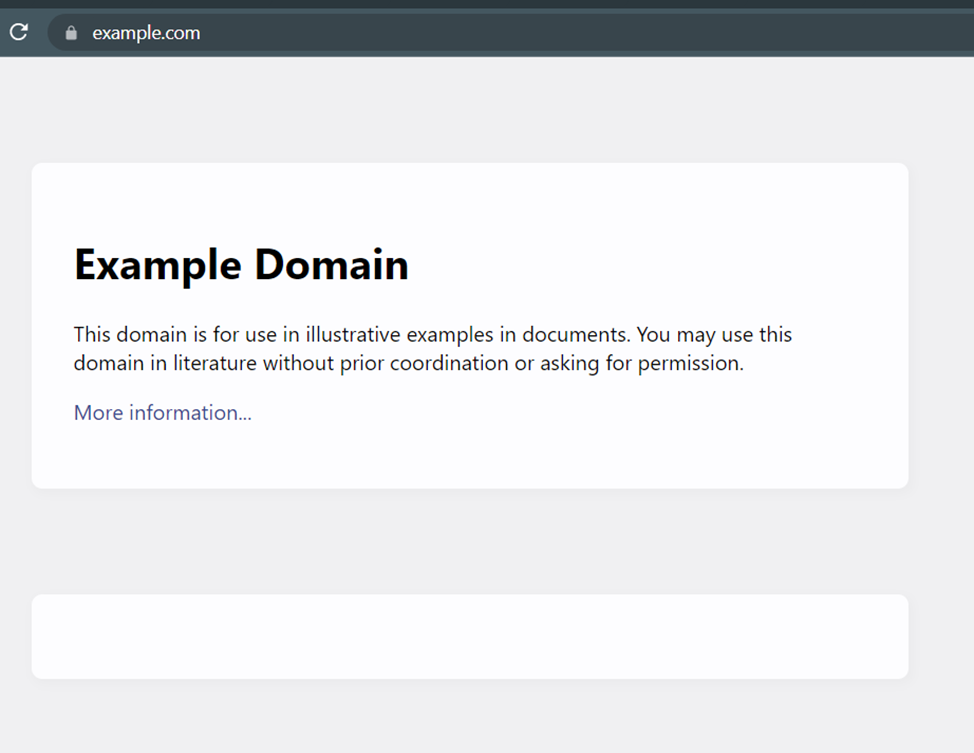

    fetch("http://example.com/")

The execution result will be the following:

    2022-07-20 14:50:33 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://example.com/> (referer: None)

Only a log line is printed, which is normal, because it wasn't yet specified what data should be extracted. To select everything stored in the <html>…</html> tag, use the following command:

    response.xpath("/html").extract()

Execution result:

    ['<html>\n<head>\n <title>Example Domain</title>\n\n <meta charset="utf-8">\n <meta http-equiv="Content-type" content="text/html; charset=utf-8">\n <meta name="viewport" content="width=device-width, initial-scale=1">\n <style type="text/css">\n body {\n background-color: #f0f0f2;\n margin: 0;\n padding: 0;\n font-family: -apple-system, system-ui, BlinkMacSystemFont, "Segoe UI", "Open Sans", "Helvetica Neue", Helvetica, Arial, sans-serif;\n \n }\n div {\n width: 600px;\n margin: 5em auto;\n padding: 2em;\n background-color: #fdfdff;\n border-radius: 0.5em;\n box-shadow: 2px 3px 7px 2px rgba(0,0,0,0.02);\n }\na:link, a:visited {\n color: #38488f; \n text-decoration: none;\n }\n @media (max-width: 700px) {\n div {\n margin: 0 auto;\n width: auto;\n }\n }\n </style> \n</head>\n\n<body>\n<div>\n <h1>Example Domain</h1>\n <p>This domain is for use in illustrative examples in documents. You may use this\n domain in literature without prior coordination or asking for permission.</p>\n <p><a href="https://www.iana.org/domains/example">More information...</a></p>\n</div>\n</body>\n</html>']

XPath usage in Scrapy is standard, so there is no need to go deep into it, but as an example, let's return only the <h1> tag:

    >>> response.xpath("//h1").extract()
    ['<h1>Example Domain</h1>']

Or get only the text:

    >>> response.xpath("//h1/text()").extract()
    ['Example Domain']

Thus, XPath is a flexible and simple tool for finding elements.

Scrapy and CSS Selectors

CSS selectors, unlike XPath, are less flexible: for example, they don't allow working with parent elements. Although this is rarely needed, the lack of such an option can be frustrating.

In general, a CSS selector query is made in the same way:

    >>> response.css("h1").extract()
    ['<h1>Example Domain</h1>']

The choice between XPath and CSS selectors is up to each developer, as the difference is mostly a matter of personal convenience.


Web Pages Scraping with Scrapy

Now let's start building a simple scraper using Scrapy. To create the first scraper, use the built-in features of the framework. Let's use the following command:

    scrapy startproject scrapy_example

This will automatically create a Scrapy project; the name can be anything. If everything went well, the command line should return something similar to:

    New Scrapy project 'scrapy_example', using template directory 'C:\Users\Admin\AppData\Local\Programs\Python\Python310\lib\site-packages\scrapy\templates\project', created in:
        C:\Users\Admin\scrapy_example

    You can start your first spider with:
        cd scrapy_example
        scrapy genspider example example.com

The cd command is used to go to the project directory, in this case scrapy_example. The genspider command automatically creates a scraper with basic settings. Let's go to the project directory and create a scraper to collect data from the example.com site:

    cd scrapy_example
    scrapy genspider titles_scrapy_example example.com

If everything went well, it will return:

    Created spider 'titles_scrapy_example' using template 'basic'

Open the created scraper (a Python file); it will look like this:

    import scrapy


    class TitlesScrapyExampleSpider(scrapy.Spider):
        # The name is what "scrapy crawl" uses to find this spider
        name = 'titles_scrapy_example'
        allowed_domains = ['example.com']
        start_urls = ['http://example.com/']

        def parse(self, response):
            pass

Let's change the content of def parse(self, response) so that the scraper collects the text of all h1 tags (using the XPath example above):

        def parse(self, response):
            # Collect the text of every h1 tag on the page
            titles = response.xpath("//h1/text()").extract()
            yield {'titles': titles}

And finally, to start the scraper, use the command:

    scrapy crawl titles_scrapy_example

The results of the scraper will be displayed in the terminal output. Of course, this is a very simple scraper; however, as a rule, more complex ones differ only in the amount of detail. Therefore, once the basics are clear, creating full-fledged web scrapers will not be difficult.
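Terminal output is fine for a quick check, but the results can also be written to a file. One way, sketched below, is Scrapy's `FEEDS` setting (available in Scrapy 2.1 and later) in the project's settings.py. The file name `titles.csv` is only an example.

```python
# settings.py (fragment): export every scraped item to a CSV file
FEEDS = {
    "titles.csv": {
        "format": "csv",
        "encoding": "utf8",
    },
}
```

A similar result can be obtained for a single run from the command line with `scrapy crawl titles_scrapy_example -o titles.csv`.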


Using a Proxy in the Scrapy Web Scraping Tool

When creating a project, Scrapy also creates a settings.py file and a middlewares.py file, where one can store spider middleware and also proxy settings, for example:

    class ProxyMiddleware(object):
        # Override process_request, which Scrapy calls for every outgoing request
        def process_request(self, request, spider):
            # Set the location of the proxy
            request.meta['proxy'] = 'http://YOUR_PROXY_IP:PORT'

Don't forget to make changes to the settings.py file:

    DOWNLOADER_MIDDLEWARES = {
        'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 110,
        'scrapy_example.middlewares.ProxyMiddleware': 100,
    }

Using a proxy is very important for increasing security during scraping and avoiding blocks. Keep in mind that free proxies are unreliable and significantly slower. It is advisable to use residential proxies.
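If several proxies are available, the same idea extends to picking one per request. The following is a minimal sketch, not a production setup: the class name `RotatingProxyMiddleware`, the `PROXIES` list, and the proxy addresses are placeholders invented for this example. It relies only on the fact, shown above, that Scrapy reads the proxy from `request.meta['proxy']`.

```python
import random

# Placeholder addresses; replace them with real proxy URLs
PROXIES = [
    "http://YOUR_PROXY_IP_1:PORT",
    "http://YOUR_PROXY_IP_2:PORT",
]


class RotatingProxyMiddleware:
    """Hypothetical downloader middleware that assigns a random proxy per request."""

    def __init__(self, proxies=None):
        self.proxies = list(proxies or PROXIES)

    def process_request(self, request, spider):
        # Leave requests alone if a proxy was already chosen for them
        if "proxy" not in request.meta:
            request.meta["proxy"] = random.choice(self.proxies)
        # Returning None tells Scrapy to continue processing the request
        return None
```

Such a class would be registered in `DOWNLOADER_MIDDLEWARES` in the same way as `ProxyMiddleware`.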

If you don't want to, or can't, configure and maintain proxies yourself, there are ready-made APIs that act as an intermediary between the user and the site and help avoid blocking. For example, the simplest scraper using our service would look like this:

    import http.client
    import json

    conn = http.client.HTTPSConnection("api.scrape-it.cloud")
    payload = json.dumps({
        "url": "https://example.com"
    })
    headers = {
        'x-api-key': '<your api client>',
        'Content-Type': 'application/json'
    }
    conn.request("POST", "/scrape", payload, headers)
    res = conn.getresponse()
    data = res.read()
    print(data.decode("utf-8"))

This provides IP blocking bypass, JavaScript rendering, residential and data center proxies, and custom HTTP headers and cookies.


Scrapy vs BeautifulSoup for Extracting Data

Before finally deciding to use Scrapy in a project, it's worth comparing it to other popular Python scraping libraries. One of these is Beautiful Soup. It is quite simple and suitable even for beginners.

However, unlike Scrapy, it lacks flexibility and scalability. Also, the built-in parser is rather slow.

Beautiful Soup easily extracts data from HTML and XML files; however, it cannot download pages itself, so an additional library is needed to send requests. Requests or urllib are suitable for this. The parsing itself can be done with html.parser or lxml.

For example, let's get the names of all goods, assuming they are stored in h3 tags:

    from bs4 import BeautifulSoup

    # Sample markup for illustration; in practice html_doc holds the HTML of a downloaded page
    html_doc = "<div><h3>Product A</h3><h3>Product B</h3></div>"
    soup = BeautifulSoup(html_doc, 'html.parser')
    # Print the text of every h3 tag
    for title in soup.find_all('h3'):
        print(title.get_text())

Because the library is popular, especially among beginners, a fairly active community has developed around it, so answers to most questions can be found quickly.

What's more, Beautiful Soup has complete and detailed documentation, which makes it very easy to learn. It includes a detailed description of the methods as well as usage examples.

So, let's look at the comparison table:

| Feature | Scrapy | BeautifulSoup |
|---|---|---|
| Ease Of Learning | Medium | High |
| Scraping Speed | High | Low |
| Flexibility And Scalability | High | Low |
| Completeness Of Documentation | Medium | High |
| PC Resource Consumption | Low | Medium |

Although the tool should be chosen depending on the goals, objectives, and possibilities, it is worth noting that BeautifulSoup is more suitable for beginners or small projects, while Scrapy should be used by those who are already somewhat familiar with scraping in Python and plan to create a flexible and easily scalable project.


Scrapy vs Selenium

Selenium is a more advanced tool that can crawl websites with dynamic as well as static data. That is, Selenium handles JavaScript because it drives a real browser, which can also run in headless mode.

Selenium was originally developed for test automation, so it can simulate the behavior of a real user. Therefore, using this library also helps to reduce the risk of blocking.

Another big advantage is that Selenium can easily work with AJAX and PJAX requests. In addition, unlike Scrapy, Selenium can be used from C#, Java, Python, Ruby, and other programming languages.

Let's look at an example similar to the BeautifulSoup one, only using Selenium and a headless browser:

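    from selenium import webdriver
    from selenium.webdriver.common.by import By

    options = webdriver.ChromeOptions()
    options.add_argument("--headless")  # run Chrome without a visible window
    driver = webdriver.Chrome(options=options)
    driver.get("http://www.example.com")
    # Print the text of every h3 tag (the list is empty if the page has none)
    for title in driver.find_elements(By.TAG_NAME, "h3"):
        print(title.text)
    driver.quit()

The code didn't become much more complicated; however, it became possible to imitate the behavior of a real user. Given that this library is quite popular, it also has an active community and good documentation.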

So let's compare Selenium and Scrapy to finally determine the pros and cons:

| Feature | Scrapy | Selenium |
|---|---|---|
| Ease Of Learning | Medium | Medium |
| Scraping Speed | High | Medium |
| Flexibility And Scalability | High | Medium |
| Completeness Of Documentation | Medium | High |
| PC Resource Consumption | Low | High |
| Ability To Work With Javascript | No | Yes |

Thus, it is better to use Selenium for complex projects in which it is necessary to work with dynamic data, as well as imitate the behavior of a real user. At the same time, Scrapy is better for flexible and scalable projects, where low consumption of PC resources is important and parsing of dynamic data is not important.


Conclusion and Takeaways

One of the biggest advantages of Scrapy is that an existing project is very easy to reuse as the basis for another one. Therefore, for large or complex projects, Scrapy will be the best choice.

Also, if the project needs a proxy or a data pipeline, then it is a good idea to use Scrapy. In addition, in terms of resource consumption and execution speed, Scrapy comes out ahead of the other Python tools compared here.