Amazon, one of the biggest online marketplaces, holds a wealth of information valuable to researchers, business analysts, and entrepreneurs. But, as with many other websites, getting that data out of Amazon can be challenging: you need to analyze the site and find the best way to scrape it.

In this article, we will explore different methods of gathering data from the Amazon website using Python. We'll start by examining how Amazon's product pages are structured and finish by building automated tools for web data extraction.

We'll also discuss the challenges you might face when collecting data from Amazon and give practical tips on overcoming them. We'll look at techniques for avoiding IP address blocks and for using an API to collect data, and provide code examples and tools to help you gather the data you need efficiently.


Analyzing Amazon Product Page

Amazon is a vast and well-known marketplace. Collecting data from it by hand is time-consuming, but the process can be automated. Before we start developing our Amazon scraper in Python, let's study the structure of its product pages.

Page Elements

Each Amazon product page contains a lot of information, some of which is specific to a particular category. However, we will only extract information from the elements that are common to all product pages.

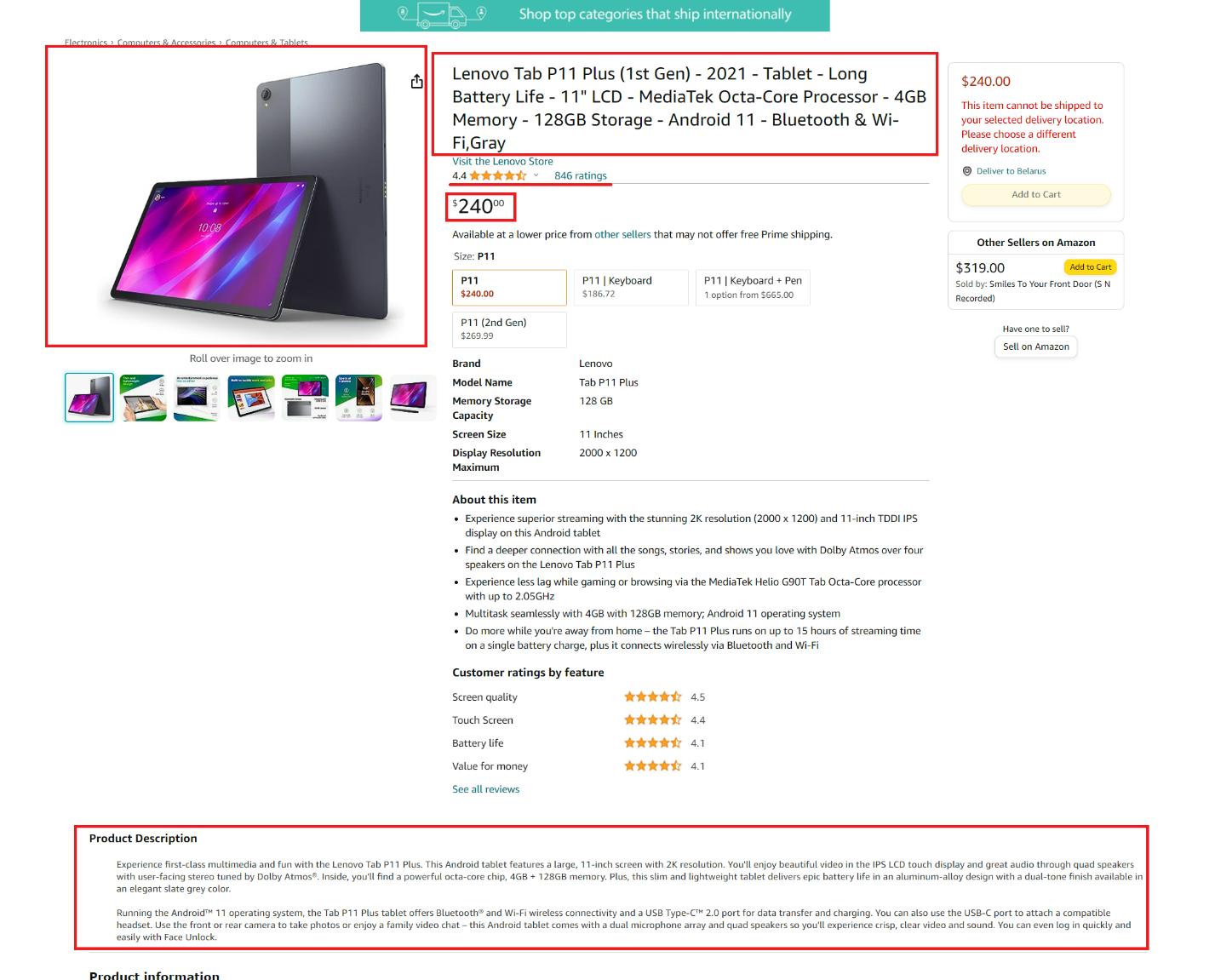

Let's look at a product page and identify the elements we want to scrape. Here we can highlight the following:

- The title or product name.

- The price of the product.

- The general description of the product.

- A link to the product image.

- Product rating.

- The number of reviews for the product.

These elements are common to all product categories and can be extracted using the scraper. If there are additional category-specific elements on the page, you can add code later to extract them yourself.
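To keep these fields together and leave room for category-specific ones, you can store each product in a small record type. The sketch below is our own illustration, not part of the script built in this article. The class name ProductRecord, its attribute names, and the screen_size extra are hypothetical. The defaults reuse the placeholder strings the script uses when an element is missing.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class ProductRecord:
    """Hypothetical container for the elements common to all product pages."""
    title: str = 'Title not found'
    price: str = 'Price not found'
    description: str = 'Description not found'
    image_url: str = 'Image link not found'
    rating: str = 'Rating not found'
    reviews: str = 'Number of reviews not found'
    # Category-specific fields, e.g. {'screen_size': '11 inches'} for tablets
    extra: dict = field(default_factory=dict)

    def to_row(self) -> dict:
        """Flatten the record into one dict; extras become top-level keys.

        An extra whose name matches a standard field would overwrite it.
        """
        row = asdict(self)  # asdict() copies, so the record itself is unchanged
        row.update(row.pop('extra'))
        return row


record = ProductRecord(title='Example tablet')
record.extra['screen_size'] = '11 inches'
print(record.to_row())
```

With this structure, adding a category-specific element only means filling in `extra`. The common columns stay the same for every product.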

Retrieving Page HTML

Before we move on to processing the page and extracting specific data, let's write a basic request that retrieves the entire page and prints it. To do this, create a new file with the .py extension and import the Requests library:

```python
import requests
```

To make it easier to reuse later, let's store the link to the page in a variable:

```python
url = 'https://www.amazon.com/Lenovo-Tab-P11-Plus-1st/dp/B09B17DVYR/ref=sr_1_2?qid=1695021332&rnid=16225007011&s=computers-intl-ship&sr=1-2&th=1'
```

Now we need to set headers for our request. Headers are not required to send the request itself, but Amazon will often refuse to return the page content without them. Amazon is unwilling to serve data to bots and programs, so your script needs to look more like a regular browser. Setting headers is one way to do this:

```python
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36'
}
```

Now we can execute the request and print the page if it succeeds, or an error if it fails:

```python
response = requests.get(url, headers=headers)

if response.status_code == 200:
    print(response.text)
else:
    print(str(response.status_code) + ' - Error loading the page')
```

You can use this straightforward method to gather data from almost any website. Headers make the script look more human-like, but this approach is not always reliable. To truly mimic human actions, you would need a headless browser and libraries like Pyppeteer or Selenium.

However, these tools can be difficult for beginners, so we will not cover them here. Instead, later in the article we will discuss how to avoid all the hassle by using a web scraping API.
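If you plan to fetch more than one page, it helps to reuse a single `requests.Session`. The session keeps the headers in one place and reuses connections between requests. The sketch below assumes the same User-Agent string as above. The Accept-Language header, the 30-second timeout, and the helper names `make_session` and `fetch_html` are our own choices, not requirements.

```python
from typing import Optional

import requests

# Same browser-like User-Agent string as in the snippet above
USER_AGENT = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36')


def make_session() -> requests.Session:
    """Create a session that sends the same headers with every request."""
    session = requests.Session()
    session.headers.update({
        'User-Agent': USER_AGENT,
        # Assumption: an explicit language header, as a regular browser sends
        'Accept-Language': 'en-US,en;q=0.9',
    })
    return session


def fetch_html(session: requests.Session, url: str,
               timeout: float = 30.0) -> Optional[str]:
    """Return the page HTML, or None on a network error or non-200 status."""
    try:
        # requests has no default timeout, so set one to avoid hanging forever
        response = session.get(url, timeout=timeout)
    except requests.RequestException as exc:
        print(f'Request failed: {exc}')
        return None
    if response.status_code != 200:
        print(f'{response.status_code} - Error loading the page')
        return None
    return response.text
```

A typical use is `session = make_session()` once, then `html = fetch_html(session, url)` for each page you need.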


Scraping Amazon Product Details Using Python Libraries

Now that we have the page's content, we can parse it and get the specific elements we discussed earlier. We'll need the BeautifulSoup library for this. We'll import it, parse the entire page, and then locate specific page elements by their tags, IDs, and classes.

```python
import requests
from bs4 import BeautifulSoup

url = 'https://www.amazon.com/Lenovo-Tab-P11-Plus-1st/dp/B09B17DVYR/ref=sr_1_2?qid=1695021332&rnid=16225007011&s=computers-intl-ship&sr=1-2&th=1'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36'
}

response = requests.get(url, headers=headers)

if response.status_code == 200:
    soup = BeautifulSoup(response.text, 'html.parser')
    # Extract data here
else:
    print(str(response.status_code) + ' - Error loading the page')
```

Now, let's go through each element we need and add its extraction to our code.

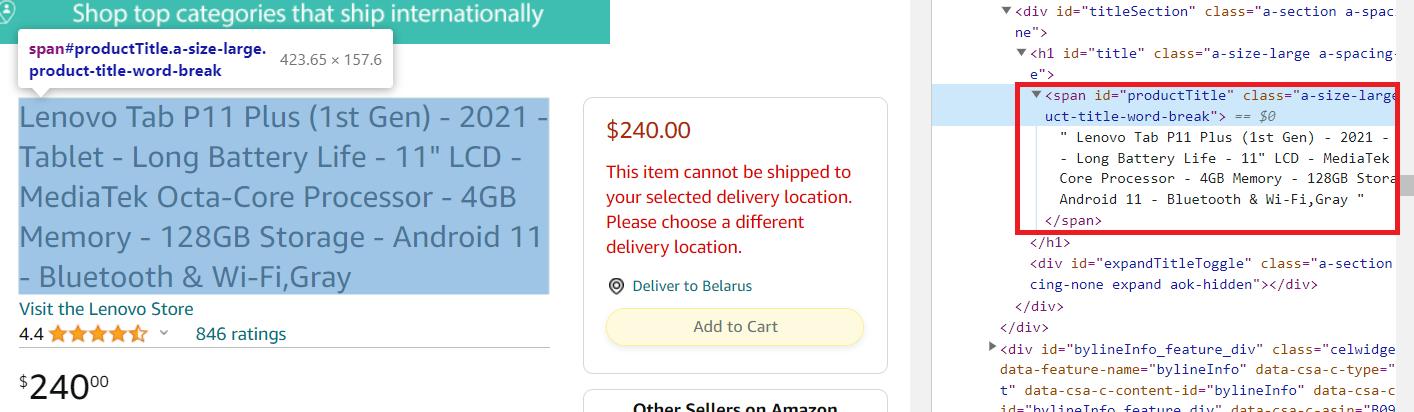

Product Title

First, let's get the product title. Open the product page and launch DevTools (press F12, or right-click the page and select Inspect). Then select the product name on the page to see which part of the code describes it.

As we can see, this element is inside the span tag with the unique identifier productTitle. So, we can use the following code to extract it:

```python
title_element = soup.find('span', id='productTitle')
title = title_element.get_text()
```

However, the page may load with an error or without this element. In that case, `soup.find()` returns None, calling `get_text()` on None raises an AttributeError, and the script stops. Let's change the second line to assign a value depending on whether the element exists:

```python
title = title_element.get_text(strip=True) if title_element else 'Title not found'
```

Now you can print the extracted data:

```python
print(f'Title: {title}')
```

Now let's move on to the next element.

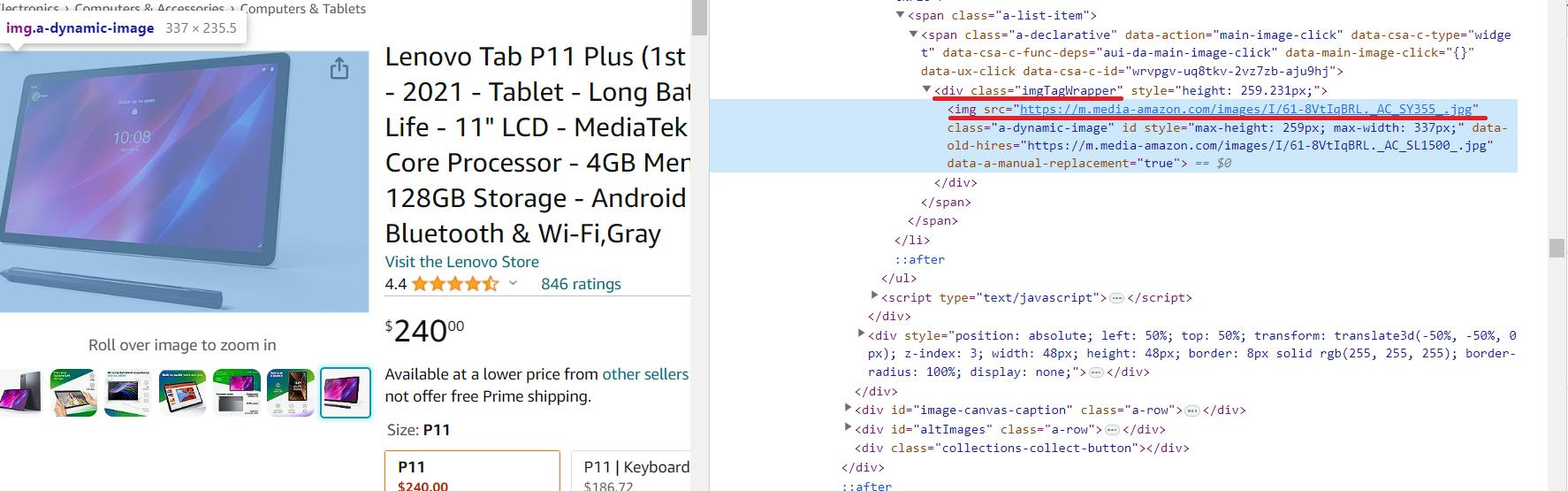

Product Image

We will scrape the link to the preview image shown on the page. It is not the highest-resolution version, but it keeps the code simple.

The link to the product image is in the img tag nested inside the div element with id=imgTagWrapperId. Unlike the other elements, the image link is stored as an attribute of the tag (src) rather than as text inside it. Therefore, the code for scraping it is slightly different:

```python
image_element = soup.find('div', id='imgTagWrapperId')
# An img tag has no children, so compare with None instead of relying on truthiness
image_tag = image_element.find('img') if image_element is not None else None

if image_tag is not None and image_tag.get('src'):
    image_url = image_tag['src']
else:
    image_url = 'Image link not found'
```

And then print it:

```python
print(f'Image link: {image_url}')
```

Although getting the image link works slightly differently, it is still quite simple.
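If you need a larger image, one option is the `data-a-dynamic-image` attribute. This relies on an assumption about Amazon's current markup that you should check in DevTools: the attribute sits on the same img tag and holds a JSON object that maps image URLs to [width, height] pairs. The hypothetical helper below picks the URL with the largest area. You would pass it the attribute value, for example `image_element.find('img').get('data-a-dynamic-image')`.

```python
import json


def pick_largest_image(dynamic_image_attr, fallback='Image link not found'):
    """Return the URL with the largest width x height from a JSON attribute.

    Assumes the value looks like {"https://...jpg": [width, height], ...}.
    """
    if not dynamic_image_attr:
        return fallback
    try:
        sizes = json.loads(dynamic_image_attr)
    except json.JSONDecodeError:
        return fallback
    if not isinstance(sizes, dict) or not sizes:
        return fallback

    def area(item):
        # item is a (url, [width, height]) pair
        width, height = item[1]
        return width * height

    try:
        best_url, _ = max(sizes.items(), key=area)
    except (TypeError, ValueError):
        # Sizes were not [width, height] number pairs
        return fallback
    return best_url


sample = ('{"https://example.com/small.jpg": [200, 150], '
          '"https://example.com/large.jpg": [1000, 750]}')
print(pick_largest_image(sample))  # https://example.com/large.jpg
```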

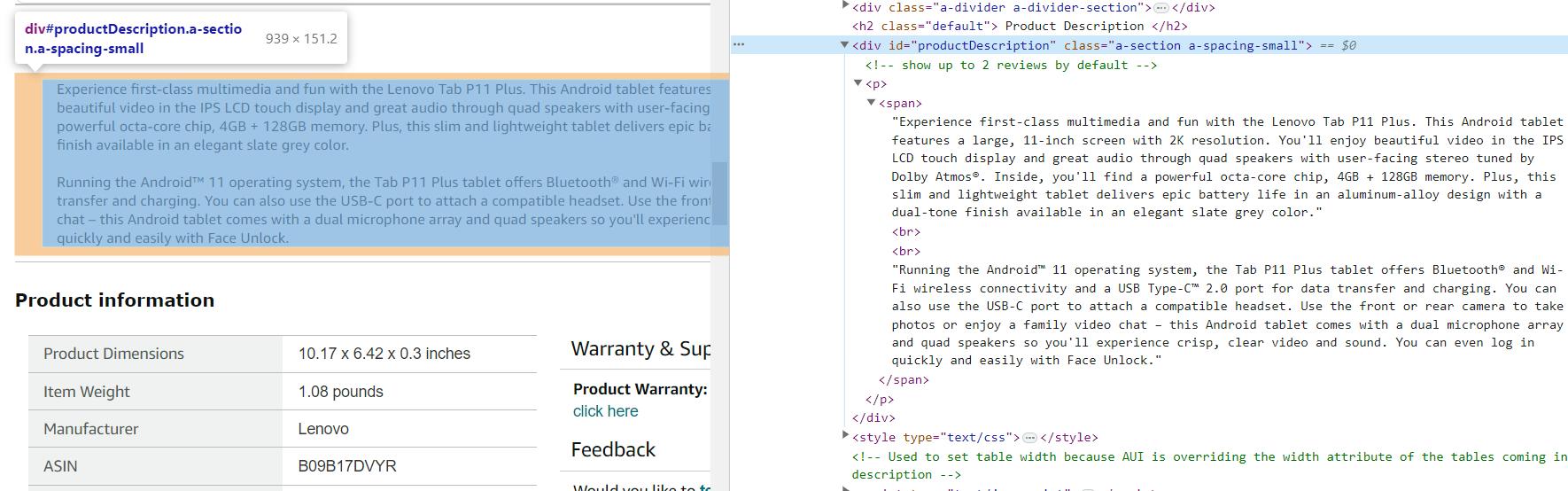

Product Description

The product description is an important and useful piece of information. Some product categories have additional descriptions and feature lists, but these vary from category to category and are often loaded dynamically. Therefore, we will only get the general product description, which is present on all product pages.

As we can see, the product description is in the div element with the unique identifier productDescription:

```python
description_element = soup.find('div', id='productDescription')
# Join the text pieces with spaces and trim the surrounding whitespace
description = description_element.get_text(' ', strip=True) if description_element else 'Description not found'
```

Printing it works the same way:

```python
print(f'Description: {description}')
```

On some pages, the description may use different tags. It may also be missing from the HTML entirely, because it is rendered dynamically after the page has loaded. Keep this in mind when scraping.
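Because the description can live in different elements, one defensive option is to try several CSS selectors in order and keep the first non-empty match. The minimal sketch below uses BeautifulSoup's `select_one()`. The helper name `first_text` is hypothetical. Every selector except `div#productDescription` is an assumption about Amazon's markup that you should verify in DevTools.

```python
from bs4 import BeautifulSoup

# Ordered from most to least preferred; only the first comes from this article
DESCRIPTION_SELECTORS = [
    'div#productDescription',
    'div#feature-bullets',  # assumption: the "About this item" bullet list
]


def first_text(soup, selectors, default='Description not found'):
    """Return the cleaned text of the first selector that yields non-empty text."""
    for selector in selectors:
        element = soup.select_one(selector)
        if element is not None:
            # Join text pieces with spaces, then collapse runs of whitespace
            text = ' '.join(element.get_text(' ', strip=True).split())
            if text:
                return text
    return default


html = '<div id="feature-bullets"><ul><li> Light </li><li>Fast</li></ul></div>'
soup = BeautifulSoup(html, 'html.parser')
print(first_text(soup, DESCRIPTION_SELECTORS))  # Light Fast
```

If the description is rendered by JavaScript, no selector will find it in the raw HTML. In that case, you need a tool that renders the page first.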

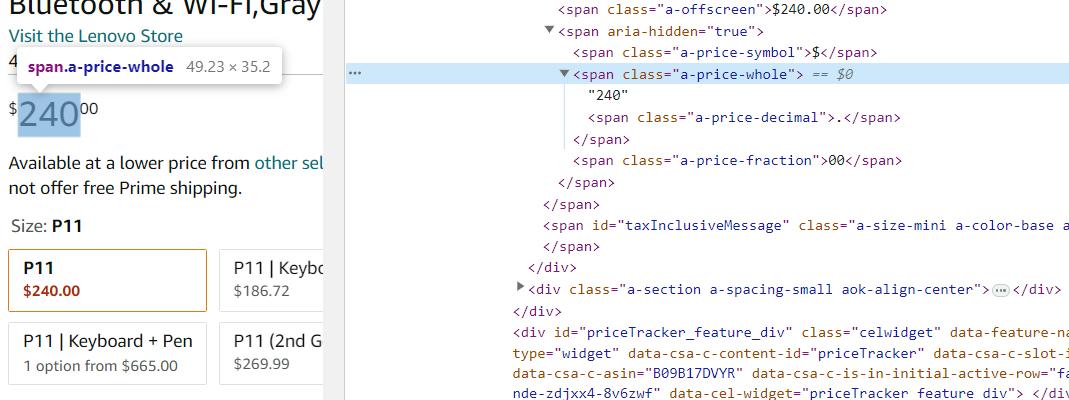

Product Price

Some items on Amazon may have multiple prices, and their tags and classes may vary depending on the category. However, the main price of the product is present on all pages by default, so that is the price we will scrape.

The product's price is in the span element with the class a-price-whole. Let's get this data using BeautifulSoup:

```python
price_element = soup.find('span', class_='a-price-whole')
price = price_element.get_text(strip=True) if price_element else 'Price not found'
```

To output it, use print:

```python
print(f'Price: {price}')
```

With this approach, you get the current price from the product page as a number without the currency. If you need the price together with its currency, use a different, more general selector.
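The price comes back as text, so you may want to convert it to a number before analysis. The sketch below makes two assumptions. First, the whole part looks like '1,299.' (digits, thousands separators, and a trailing decimal point). Second, the cents, if you scrape them, come from a separate element such as span.a-price-fraction; this is an assumption about Amazon's markup. The helper name `parse_price` is hypothetical. It uses `Decimal` to avoid floating-point rounding in currency values.

```python
import re
from decimal import Decimal


def parse_price(whole, fraction='00'):
    """Convert price text such as ('1,299.', '99') into a Decimal.

    Assumes `whole` holds the integer part with optional thousands separators
    and a trailing decimal point, and `fraction` holds the cents.
    Returns None when `whole` contains no digits (e.g. 'Price not found').
    """
    # Keep digits only: drops commas, dots, spaces and currency symbols
    whole_digits = re.sub(r'\D', '', whole or '')
    fraction_digits = re.sub(r'\D', '', fraction or '') or '00'
    if not whole_digits:
        return None
    return Decimal(f'{whole_digits}.{fraction_digits}')


print(parse_price('1,299.', '99'))  # 1299.99
```

Passing the `price` value from the snippet above, for example `parse_price(price)`, gives a number you can sort or compare. The placeholder text becomes None.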

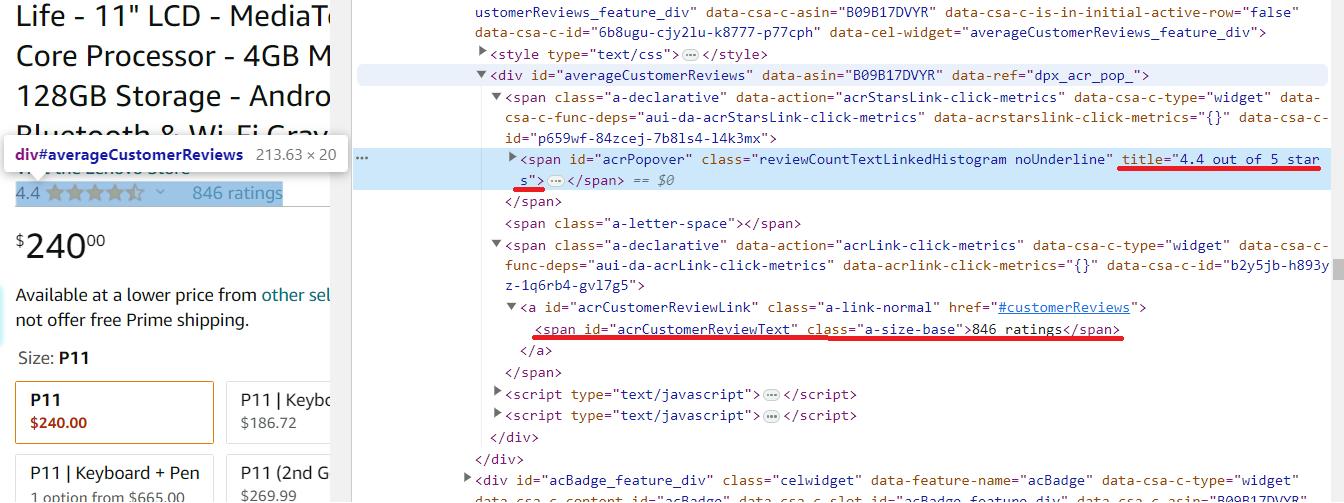

Reviews and Rating

We will consider ratings and reviews together, as they are related. However, they are two different elements, so we will extract them using different selectors.

The product rating is in the span element with the class reviewCountTextLinkedHistogram, and its value is stored in the title attribute. The number of reviews is in the span element with the unique identifier acrCustomerReviewText.

```python
# Extract the product rating from the title attribute
rating_element = soup.find('span', class_='reviewCountTextLinkedHistogram')
rating = rating_element.get('title', 'Rating not found') if rating_element is not None else 'Rating not found'

# Extract the number of reviews
reviews_element = soup.find('span', id='acrCustomerReviewText')
reviews = reviews_element.get_text(strip=True) if reviews_element else 'Number of reviews not found'
```

And then print this data:

```python
print(f'Rating: {rating}')
print(f'Number of reviews: {reviews}')
```

Now that we have collected all the necessary data, let's save it to a CSV file and look at the complete script.
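Both values are text. The sketch below assumes the US site's formats: a rating title such as '4.5 out of 5 stars' and a review count such as '1,234 ratings'. Other locales may use a comma as the decimal separator and need a different pattern. The helper names are hypothetical.

```python
import re


def parse_rating(text):
    """Return the first number in text, e.g. '4.5 out of 5 stars' -> 4.5."""
    match = re.search(r'\d+(?:\.\d+)?', text or '')
    return float(match.group()) if match else None


def parse_review_count(text):
    """Return the count as an int, e.g. '1,234 ratings' -> 1234."""
    match = re.search(r'\d[\d,]*', text or '')
    return int(match.group().replace(',', '')) if match else None


print(parse_rating('4.5 out of 5 stars'))   # 4.5
print(parse_review_count('1,234 ratings'))  # 1234
```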


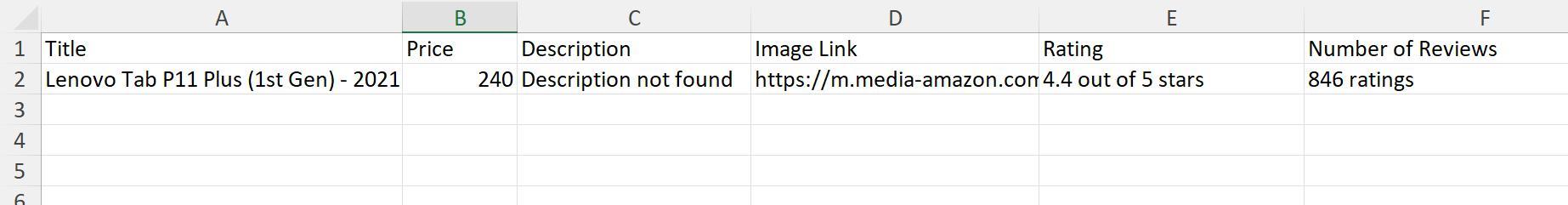

Complete Code with Data Storage

To work with CSV files, we need the csv module from Python's standard library. Import it into the script:

```python
import csv
```

Now use it to save all the collected data once the extraction code has run:

```python
# utf-8 keeps non-ASCII characters in titles and descriptions intact
with open('product_data.csv', mode='w', newline='', encoding='utf-8') as csv_file:
    writer = csv.writer(csv_file)
    writer.writerow(['Title', 'Price', 'Description', 'Image Link', 'Rating', 'Number of Reviews'])
    writer.writerow([title, price, description, image_url, rating, reviews])

print('Data has been saved to product_data.csv')
```

After running the script, the information ends up in the product_data.csv file instead of the terminal. This is more convenient when, for example, you have many product pages and want all the summary data in one file.
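Because the file is opened with mode='w', each run overwrites it with a single product. When you scrape many pages, one option is to collect a dictionary per product and write them all at once with `csv.DictWriter`. The sketch below is our own; the function name `save_products` is hypothetical. The column names match the header row above.

```python
import csv

FIELDNAMES = ['Title', 'Price', 'Description', 'Image Link', 'Rating', 'Number of Reviews']


def save_products(products, path='product_data.csv'):
    """Write one CSV row per product dict and return the number of rows.

    Missing keys become empty cells; unknown keys raise ValueError.
    """
    with open(path, mode='w', newline='', encoding='utf-8') as csv_file:
        writer = csv.DictWriter(csv_file, fieldnames=FIELDNAMES, restval='')
        writer.writeheader()
        writer.writerows(products)
    return len(products)
```

For example, `save_products([{'Title': title, 'Price': price}])` writes a header and one row, leaving the other columns empty.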

Full code:

```python
import csv

import requests
from bs4 import BeautifulSoup

url = 'https://www.amazon.com/Lenovo-Tab-P11-Plus-1st/dp/B09B17DVYR/ref=sr_1_2?qid=1695021332&rnid=16225007011&s=computers-intl-ship&sr=1-2&th=1'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36'
}

response = requests.get(url, headers=headers)

if response.status_code == 200:
    soup = BeautifulSoup(response.text, 'html.parser')

    # Product title
    title_element = soup.find('span', id='productTitle')
    title = title_element.get_text(strip=True) if title_element else 'Title not found'

    # Price (whole part, without the currency)
    price_element = soup.find('span', class_='a-price-whole')
    price = price_element.get_text(strip=True) if price_element else 'Price not found'

    # General description, with text pieces joined by spaces
    description_element = soup.find('div', id='productDescription')
    description = description_element.get_text(' ', strip=True) if description_element else 'Description not found'

    # Image link from the src attribute of the nested img tag
    image_element = soup.find('div', id='imgTagWrapperId')
    image_tag = image_element.find('img') if image_element is not None else None
    if image_tag is not None and image_tag.get('src'):
        image_url = image_tag['src']
    else:
        image_url = 'Image link not found'

    # Rating from the title attribute
    rating_element = soup.find('span', class_='reviewCountTextLinkedHistogram')
    rating = rating_element.get('title', 'Rating not found') if rating_element is not None else 'Rating not found'

    # Number of reviews
    reviews_element = soup.find('span', id='acrCustomerReviewText')
    reviews = reviews_element.get_text(strip=True) if reviews_element else 'Number of reviews not found'

    with open('product_data.csv', mode='w', newline='', encoding='utf-8') as csv_file:
        writer = csv.writer(csv_file)
        writer.writerow(['Title', 'Price', 'Description', 'Image Link', 'Rating', 'Number of Reviews'])
        writer.writerow([title, price, description, image_url, rating, reviews])

    print('Data has been saved to product_data.csv')
else:
    print(str(response.status_code) + ' - Error loading the page')
```

You can extend it to collect more data or use it as is.

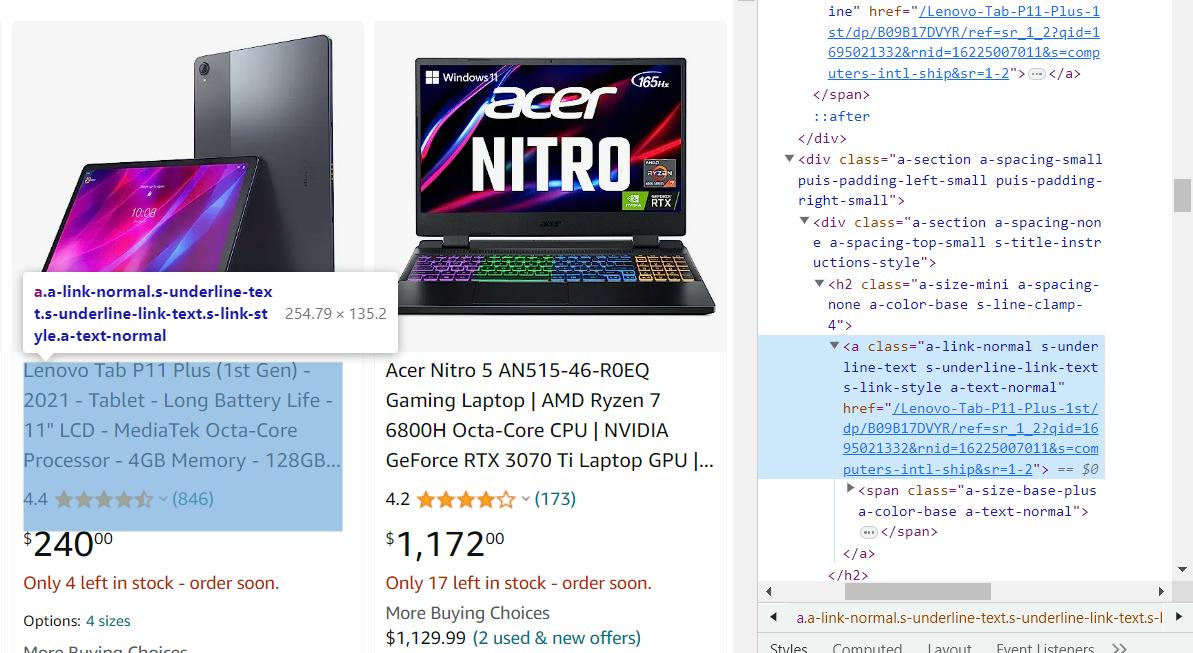

Creating a Crawler for Product Links

In the previous example, the script extracted information from a single product page whose link we specified manually. Instead, we can collect such links automatically. To do this, we'll go to a category page and find where the product links are stored. Then we'll write a small crawler that collects them.

Scraping links works the same way as scraping data from a product page, so here is the finished code right away:

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

url = 'https://www.amazon.com/s?bbn=16225007011&rh=n%3A16225007011%2Cn%3A13896617011&dc&qid=1695021317&rnid=16225007011&ref=lp_16225007011_nr_n_2'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36'
}

response = requests.get(url, headers=headers)

if response.status_code == 200:
    soup = BeautifulSoup(response.text, 'html.parser')
    # A CSS selector matches links that have all of these classes, in any order
    product_links = soup.select('a.a-link-normal.s-underline-text.s-underline-link-text.s-link-style.a-text-normal')

    for link in product_links:
        href = link.get('href')
        if href:
            # urljoin handles both relative ("/...") and absolute links
            product_url = urljoin('https://www.amazon.com', href)
            print(product_url)
else:
    print(str(response.status_code) + ' - Error loading the page')
```

If you want the crawler to also collect product information instead of just printing the links, combine it with the product page script we looked at earlier.
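Search results can include sponsored links and URLs with tracking parameters, and the same product may appear more than once. One way to clean the list is to extract the ASIN (Amazon Standard Identification Number). The ASIN is the 10-character product ID that follows /dp/ in URLs like the one used earlier, B09B17DVYR. From it, you can build a short canonical link. The sketch below assumes product URLs contain /dp/ASIN. It decodes percent-escapes first, in case the product path is embedded in a redirect URL (an assumption about sponsored links). Links without a match are skipped. The function name is hypothetical.

```python
import re
from urllib.parse import unquote

ASIN_PATTERN = re.compile(r'/dp/([A-Z0-9]{10})')


def canonical_product_urls(hrefs, base='https://www.amazon.com'):
    """Return unique <base>/dp/<ASIN> links in first-seen order."""
    seen = set()
    urls = []
    for href in hrefs:
        # Decode %2F etc. in case the product path sits inside a redirect URL
        match = ASIN_PATTERN.search(unquote(href or ''))
        if not match:
            continue
        asin = match.group(1)
        if asin not in seen:
            seen.add(asin)
            urls.append(f'{base}/dp/{asin}')
    return urls
```

In the crawler above, you could call it as `canonical_product_urls([link.get('href') for link in product_links])`.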

Challenges of Amazon Scraping: Problems and Solutions

Web scraping can be challenging for several reasons. Building the scraper itself takes effort, but it is even harder to avoid being blocked and to keep up with the changes the site's developers make. If you don't continuously maintain and improve your Amazon scraper, it will quickly lose its effectiveness. So, let's look at how to avoid the most common problems when scraping data.

First of all, Amazon's terms of service explicitly prohibit web scraping, that is, extracting data from Amazon's website with automated tools or robots. This means that any automated access to Amazon's website is prohibited, including scraping product information, prices, reviews, ratings, and other data from Amazon's product listings.

Technical Measures

Amazon has a number of measures in place to detect and prevent scraping, such as rate-limiting requests, banning IP addresses, and using browser fingerprinting to identify bots. Suspicious activity may lead to blocking. Before imposing any restrictions, however, Amazon typically presents a CAPTCHA challenge to distinguish humans from bots.
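When Amazon suspects a bot, the response may still have status 200 but contain a CAPTCHA page instead of the product. The sketch below shows two defensive measures. The first is a check for CAPTCHA markers. The second is a retry loop with exponential backoff and random jitter, which spaces requests out instead of repeating them immediately. The markers are assumptions about Amazon's CAPTCHA page. `fetch` stands for any function that takes a URL and returns HTML or None, such as a wrapper around `requests.get`. All names here are hypothetical.

```python
import random
import time

# Assumption: strings that appear on Amazon's CAPTCHA page; verify before use
CAPTCHA_MARKERS = ('validateCaptcha', 'Enter the characters you see below')


def looks_like_captcha(html):
    """Heuristic check for a CAPTCHA page instead of real content."""
    return any(marker in html for marker in CAPTCHA_MARKERS)


def fetch_with_backoff(fetch, url, attempts=4, base_delay=2.0, max_delay=60.0,
                       sleep=time.sleep):
    """Call fetch(url) until it returns non-CAPTCHA HTML or attempts run out.

    Between attempts, waits base_delay * 2**attempt seconds (capped at
    max_delay) plus up to one second of random jitter. Returns None on failure.
    """
    for attempt in range(attempts):
        html = fetch(url)
        if html and not looks_like_captcha(html):
            return html
        if attempt < attempts - 1:
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay + random.uniform(0, 1))
    return None
```

The `sleep` parameter exists so the waiting can be replaced in tests. In a real script, you would leave it as `time.sleep`.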

We've covered how to avoid blocking in a separate article. If you'd rather not read another article, here's a quick solution: use a web scraping API to gather product details. It's the best way to bypass CAPTCHAs and avoid getting blocked.


Frequent Website Structure Changes

Amazon constantly changes its website, adding new features and adjusting its layout. As a result, scrapers built for the old layout can stop working. To avoid this problem, you need to monitor the site for changes. You can do this manually by browsing the site, or with change-monitoring tools.
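Besides browsing the site, you can watch for layout changes in the scraped data itself. If a field suddenly comes back with its "not found" placeholder on most pages, its selector has probably stopped matching. The sketch below assumes each scraped product is a dict whose missing values use placeholder strings ending in "not found", like the ones in the script above. The 50% threshold and the function name are arbitrary choices.

```python
def broken_fields(products, threshold=0.5):
    """Return {field: missing_share} for fields missing more often than threshold.

    A value counts as missing if it is empty or ends with 'not found',
    matching the placeholder strings used in the scraper above.
    """
    if not products:
        return {}
    missing_counts = {}
    for product in products:
        for field, value in product.items():
            missing = not value or str(value).endswith('not found')
            missing_counts[field] = missing_counts.get(field, 0) + int(missing)
    total = len(products)
    return {field: count / total for field, count in missing_counts.items()
            if count / total > threshold}
```

Running it after each batch and printing a warning when the result is non-empty gives you an early signal to re-inspect the page in DevTools.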

Also, if you expect that an element may look different or is likely to change soon, you can wrap its extraction in try...except to prevent the entire script from breaking. This way, even if the structure changes, you will still get the rest of the data, just with some fields missing.
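Here is one way to apply that idea. Each field gets its own small extractor function. A failure in one of them, such as an AttributeError when `soup.find()` returned None, is recorded instead of stopping the script. The helper name `run_extractors` and the lambdas in the usage lines are hypothetical stand-ins. In practice, each extractor would wrap one of the `soup.find()` calls from earlier.

```python
def run_extractors(extractors, default='Not found'):
    """Run each zero-argument extractor and return (results, errors).

    A failing extractor yields `default` for its field, and its exception
    is stored in `errors`, so one broken selector does not stop the rest.
    """
    results, errors = {}, {}
    for field, extract in extractors.items():
        try:
            results[field] = extract()
        except (AttributeError, KeyError, TypeError, IndexError) as exc:
            results[field] = default
            errors[field] = f'{type(exc).__name__}: {exc}'
    return results, errors


missing_element = None  # what soup.find() returns when nothing matches
results, errors = run_extractors({
    'title': lambda: 'Example tablet',
    'price': lambda: missing_element.get_text(),
})
print(results)  # {'title': 'Example tablet', 'price': 'Not found'}
```

The `errors` dictionary tells you which selectors need attention, which pairs well with monitoring the site for changes.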

Another option is to use existing tools to scrape Amazon product data. For example, you can use our no-code scraper. In this case, you don't need to worry about the page structure or anything else - you will just get the data you need.

Amazon Data Collection Using Web Scraping API

As mentioned above, we can use a web scraping API to solve these problems. It sends the requests on your behalf, so you don't have to worry about your IP address being blocked, and there is no need to use proxy servers to bypass CAPTCHAs. In addition, our web scraping API lets you extract data quickly with CSS selectors through extraction rules.

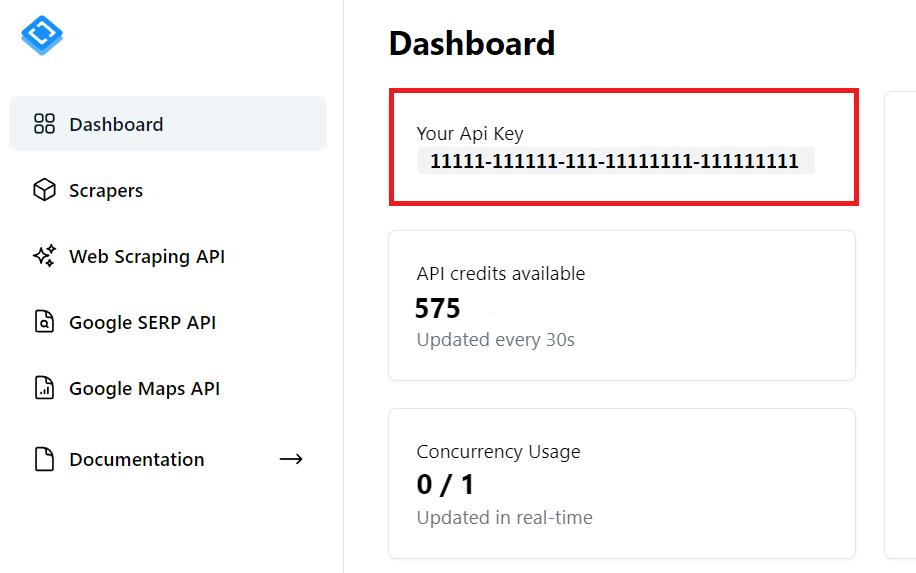

Getting API key

To get started, you need an API key. You can find it in your account after registering on HasData. You will also receive 1,000 free credits on registration to test our features.

You will need this API key in the code below.

Extracting Data

Now let's get the same data as in the previous examples, but use the web scraping API. Also, we will save all the data we get to an Excel file. Import all the libraries we're going to use:

```python
import requests
import json
import pandas as pd
```

We'll need the Requests library to send requests to the API and the json module to serialize the request body. The API's response, which also comes in JSON format, is parsed with response.json(). Finally, we'll use the Pandas library to create a DataFrame and save the data to an Excel file. Writing .xlsx files with Pandas also requires an Excel engine such as openpyxl.

Now let's set the variables and parameters that we will use throughout the script:

```python
url = 'https://www.amazon.com/s?bbn=16225007011&rh=n%3A16225007011%2Cn%3A13896617011&dc&qid=1695021317&rnid=16225007011&ref=lp_16225007011_nr_n_2'
api_url = 'https://api.scrape-it.cloud/scrape'
api_key = 'PUT-YOUR-API-KEY'

headers = {
    'x-api-key': api_key,
    'Content-Type': 'application/json'
}
```

Here you should provide your link to the products page and your personal API key. Then form the body of the request to get all the links on the page:

```python
payload = json.dumps({
    "url": url,
    "js_rendering": True,
    "block_ads": True,
    "extract_rules": {
        'links': 'a.a-link-normal.s-underline-text.s-underline-link-text.s-link-style.a-text-normal @href'
    },
    "proxy_type": "datacenter",
    "proxy_country": "US"
})
```

Here we have specified a CSS selector in the extraction rules to get the data we need immediately. Now we will execute the request and parse the response in JSON format to get a list of links:

```python
response = requests.post(api_url, headers=headers, data=payload)

if response.status_code == 200:
    data = response.json()
    product_links = data['scrapingResult']['extractedData']['links']
else:
    print(f'Error: {response.status_code} - Error loading the category page')
    product_links = []
```

Next, set the extraction rules for the data we need from each product page. The image link and the rating are stored in tag attributes. As with the links above, we append @src and @title to their selectors:

```python
extract_rules = {
    "title": "span#productTitle",
    "price": "span.a-price-whole",
    "description": "div#productDescription",
    "image": "div#imgTagWrapperId img @src",
    "rating": "span.reviewCountTextLinkedHistogram @title",
    "reviews": "span#acrCustomerReviewText"
}
```

Add a for loop that goes through all the links, requests each product page, and collects the extracted data in a list:

```python
rows = []

for product_link in product_links:
    # The extracted links are relative paths that start with "/"
    url = 'https://www.amazon.com' + product_link
    print(url)

    payload = json.dumps({
        "extract_rules": extract_rules,
        "wait": 0,
        "block_resources": False,
        "url": url
    })

    response = requests.post(api_url, headers=headers, data=payload)

    if response.status_code == 200:
        data = response.json()
        rows.append(data['scrapingResult']['extractedData'])
    else:
        print(f'Error: {response.status_code} - Error loading the page for {product_link}')
```

The only thing left is to save the data to a file. For this, we use the Pandas library, which creates the column headers from the dictionary keys. We save once, after the loop finishes, because saving inside the loop would overwrite the file on every iteration:

```python
df = pd.DataFrame(rows)
df.to_excel("scraped_data.xlsx", index=False)
```

We now have a file with the same data we could have obtained by combining the previous examples. With the API, however, we don't have to worry about issues like blocking or loading dynamic content.
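Depending on the page, an extraction rule may match several elements or none, so a field's value may not be a single string. We have not confirmed exactly how the API represents these cases. The hypothetical helper below takes the first item of a list and substitutes a placeholder for missing or empty values before the row goes into the DataFrame.

```python
def to_row(extracted, fields, missing='Not found'):
    """Normalize one product's extracted data into a flat dict."""
    row = {}
    for field in fields:
        value = extracted.get(field) if isinstance(extracted, dict) else None
        if isinstance(value, list):
            # Keep the first match when a rule returned several elements
            value = value[0] if value else None
        if value is None or (isinstance(value, str) and not value.strip()):
            value = missing
        row[field] = value.strip() if isinstance(value, str) else value
    return row
```

Inside the loop, you could pass each product's extracted data through `to_row(data, list(extract_rules))` before storing it. Every row then has the same columns in the same order.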

Conclusion

In this article, we have discussed various ways of collecting data from Amazon pages. We have also covered the problems you will face when running your own scraper and methods of overcoming them.

You can use the web scraping API to avoid problems such as IP blocking and handling dynamic content. If you don't want to deal with any of these difficulties, you can use our ready-made no-code Amazon scraper, which delivers Amazon product details in a convenient format.